Video object tracking method based on feature optical flow and online ensemble learning

An ensemble learning and target tracking technology, applied in image data processing, instrumentation, computing, etc., that can solve problems such as poor tracking results.

Detailed Description of the Embodiments

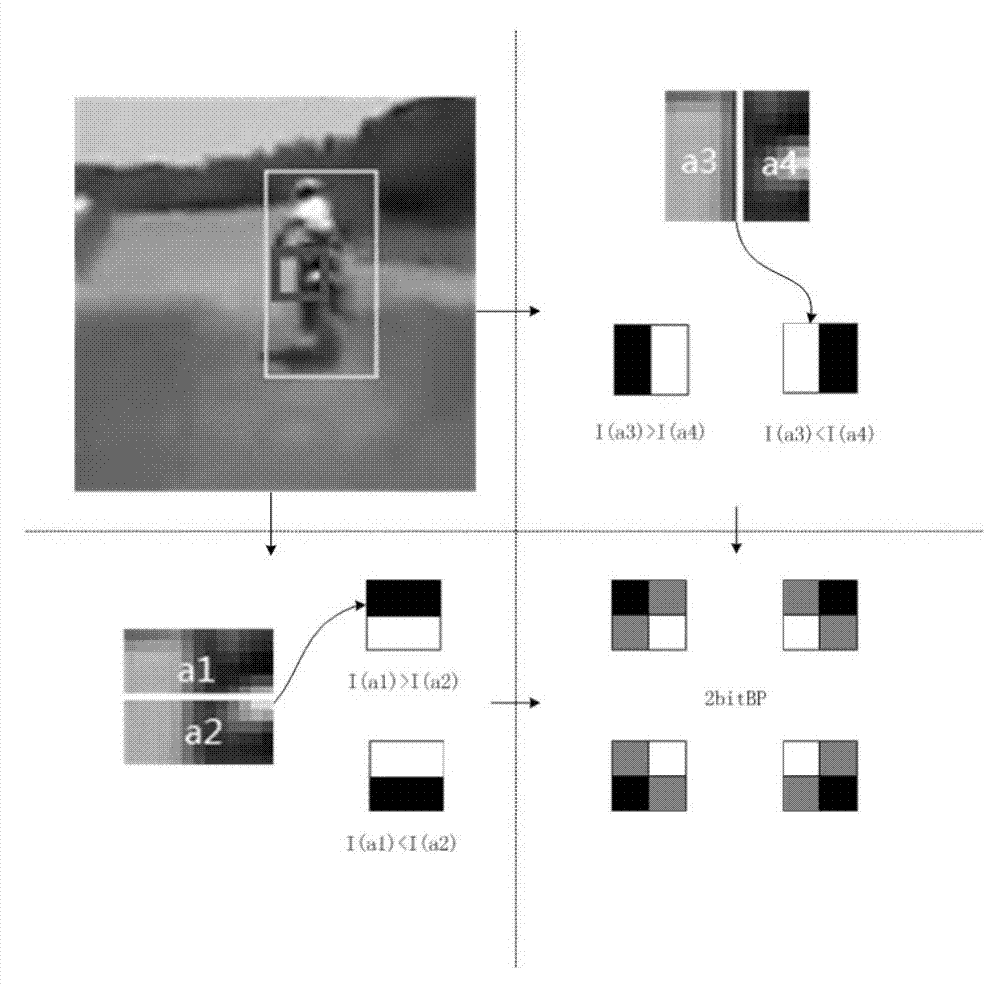

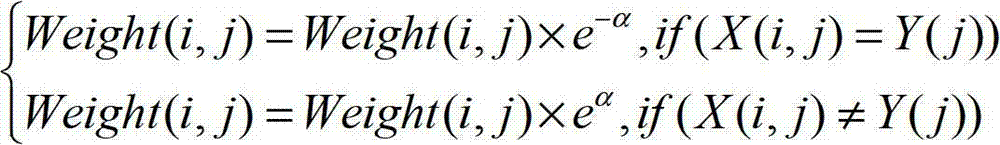

[0041] The specific steps of the method of the invention are as follows:

[0042] 1. Tracking part.

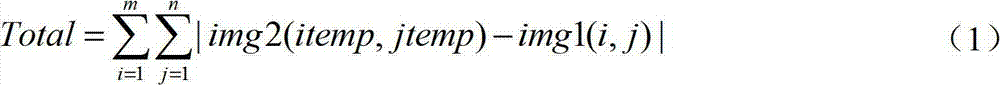

[0043] The preprocessing part takes the video sequence as input, tracks the feature points of each frame with the iterative pyramidal optical flow method using the function built into OpenCV, and obtains the positions of these feature points in the next frame.
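OpenCV exposes an iterative pyramidal optical flow tracker as `cv2.calcOpticalFlowPyrLK` (an iterative Lucas-Kanade method over an image pyramid); the source only says "the function that comes with OpenCV", so naming that function is our assumption. To show roughly what such a function computes without depending on OpenCV, here is a minimal plain-Python sketch that tracks a single feature point between two synthetic frames. The window half-size `win`, the iteration count, the pyramid depth, and all helper names (`make_image`, `sample`, `downsample`, `lk_level`, `track_point`) are hypothetical illustration choices, not values from the patent.

```python
import math

def make_image(w, h, cx, cy, sigma=6.0):
    """Synthetic grayscale frame: a smooth Gaussian blob centred at (cx, cy)."""
    return [[math.exp(-((x - cx) ** 2 + (y - cy) ** 2) / (2 * sigma ** 2))
             for x in range(w)] for y in range(h)]

def sample(img, x, y):
    """Bilinear interpolation, with coordinates clamped to the image border."""
    h, w = len(img), len(img[0])
    x = min(max(x, 0.0), w - 1.001)
    y = min(max(y, 0.0), h - 1.001)
    x0, y0 = int(x), int(y)
    ax, ay = x - x0, y - y0
    top = img[y0][x0] * (1 - ax) + img[y0][x0 + 1] * ax
    bot = img[y0 + 1][x0] * (1 - ax) + img[y0 + 1][x0 + 1] * ax
    return top * (1 - ay) + bot * ay

def downsample(img):
    """Build the next (coarser) pyramid level by averaging 2x2 pixel blocks."""
    return [[(img[2 * y][2 * x] + img[2 * y][2 * x + 1]
              + img[2 * y + 1][2 * x] + img[2 * y + 1][2 * x + 1]) / 4
             for x in range(len(img[0]) // 2)] for y in range(len(img) // 2)]

def lk_level(prev, nxt, px, py, guess, win=4, iters=20):
    """Iteratively refine the flow of point (px, py) at a single pyramid level."""
    pts = [(px + i, py + j) for j in range(-win, win + 1) for i in range(-win, win + 1)]
    # Spatial gradients of the previous frame (central differences).
    grads = [((sample(prev, x + 1, y) - sample(prev, x - 1, y)) / 2,
              (sample(prev, x, y + 1) - sample(prev, x, y - 1)) / 2) for x, y in pts]
    gxx = sum(ix * ix for ix, _ in grads)
    gxy = sum(ix * iy for ix, iy in grads)
    gyy = sum(iy * iy for _, iy in grads)
    det = gxx * gyy - gxy * gxy
    if det < 1e-12:
        return guess  # textureless window: the flow cannot be recovered
    vx, vy = guess
    for _ in range(iters):
        # Mismatch between the shifted next frame and the previous frame.
        bx = by = 0.0
        for (x, y), (ix, iy) in zip(pts, grads):
            it = sample(nxt, x + vx, y + vy) - sample(prev, x, y)
            bx += ix * it
            by += iy * it
        # Solve the 2x2 normal equations G * delta = -b in closed form.
        dx = -(gyy * bx - gxy * by) / det
        dy = -(gxx * by - gxy * bx) / det
        vx, vy = vx + dx, vy + dy
        if dx * dx + dy * dy < 1e-6:
            break
    return vx, vy

def track_point(prev, nxt, x, y, levels=3):
    """Coarse-to-fine (pyramidal) estimate of where (x, y) moves in the next frame."""
    pyr_prev, pyr_next = [prev], [nxt]
    for _ in range(levels - 1):
        pyr_prev.append(downsample(pyr_prev[-1]))
        pyr_next.append(downsample(pyr_next[-1]))
    gx = gy = 0.0
    for lvl in range(levels - 1, -1, -1):
        s = 2 ** lvl
        gx, gy = lk_level(pyr_prev[lvl], pyr_next[lvl], x / s, y / s, (gx, gy))
        if lvl > 0:
            gx, gy = 2 * gx, 2 * gy  # carry the estimate to the finer level
    return x + gx, y + gy

prev = make_image(64, 64, 30, 30)
nxt = make_image(64, 64, 34, 32)  # the same blob shifted by (+4, +2)
print(track_point(prev, nxt, 27, 29))  # should land near (31, 31)
```

The pyramid lets a motion of several pixels be found as a small motion at the coarsest level and then refined level by level; a production tracker would also smooth before downsampling and report a per-point status, as OpenCV's function does.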

[0044] For tracking a target without scale change, proceed as follows:

[0045] (i) When there are fewer than 4 feature points, take the median displacement of these feature points in the x and y directions as the displacement of the entire target in the x and y directions.

[0046] (ii) When there are 4 or more feature points, use the RANSAC algorithm to compute the transformation matrix that maps the bounding box in the previous frame to the bounding box in the next frame.
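The two branches (i) and (ii) can be sketched as follows. This is a minimal illustration under stated assumptions, not the patent's implementation: the bounding box is assumed to be an `(x, y, w, h)` tuple, and because this step covers the no-scaling case, the RANSAC branch fits a translation-only model (one correspondence per hypothesis, inliers within `thresh` pixels, then a least-squares refit on the consensus set). The source does not state which transformation model its RANSAC step estimates; a library route would be OpenCV's `cv2.findHomography` with the `cv2.RANSAC` method, which requires at least four point pairs. All function names and parameter values below are hypothetical.

```python
import random
from statistics import median

def median_shift(prev_pts, next_pts):
    """Branch (i): median x and y displacement of the tracked points."""
    dx = median([n[0] - p[0] for p, n in zip(prev_pts, next_pts)])
    dy = median([n[1] - p[1] for p, n in zip(prev_pts, next_pts)])
    return dx, dy

def ransac_translation(prev_pts, next_pts, thresh=1.0, iters=100, seed=0):
    """Branch (ii), assuming a translation-only model fitted with RANSAC.

    Returns a 3x3 homogeneous transformation matrix.
    """
    rng = random.Random(seed)
    disp = [(n[0] - p[0], n[1] - p[1]) for p, n in zip(prev_pts, next_pts)]
    best = []
    for _ in range(iters):
        tx, ty = rng.choice(disp)  # hypothesis from a minimal sample of one pair
        inliers = [d for d in disp
                   if (d[0] - tx) ** 2 + (d[1] - ty) ** 2 <= thresh ** 2]
        if len(inliers) > len(best):
            best = inliers
    # Least-squares refit on the consensus set: the mean inlier displacement.
    tx = sum(d[0] for d in best) / len(best)
    ty = sum(d[1] for d in best) / len(best)
    return [[1.0, 0.0, tx], [0.0, 1.0, ty], [0.0, 0.0, 1.0]]

def apply_matrix(m, x, y):
    """Map point (x, y) through a 3x3 homogeneous matrix."""
    u = m[0][0] * x + m[0][1] * y + m[0][2]
    v = m[1][0] * x + m[1][1] * y + m[1][2]
    s = m[2][0] * x + m[2][1] * y + m[2][2]
    return u / s, v / s

def update_box(box, prev_pts, next_pts):
    """Move box (x, y, w, h) to the next frame without changing its size."""
    x, y, w, h = box
    if not prev_pts:
        return box  # no tracked points: keep the old box (our assumption)
    if len(prev_pts) < 4:
        dx, dy = median_shift(prev_pts, next_pts)
        return (x + dx, y + dy, w, h)
    m = ransac_translation(prev_pts, next_pts)
    nx, ny = apply_matrix(m, x, y)  # a pure translation keeps w and h
    return (nx, ny, w, h)

box = (0.0, 0.0, 10.0, 10.0)
# Three points (branch i); the third one is an outlier.
few_prev, few_next = [(1, 1), (5, 2), (8, 8)], [(3, 2), (7, 3), (30, 8)]
print(update_box(box, few_prev, few_next))    # (2.0, 1.0, 10.0, 10.0)
# Five points (branch ii); the last pair is an outlier.
many_prev = [(0, 0), (10, 0), (0, 10), (10, 10), (5, 5)]
many_next = [(3, -1), (13, -1), (3, 9), (13, 9), (20, 20)]
print(update_box(box, many_prev, many_next))  # (3.0, -1.0, 10.0, 10.0)
```

In this example the median in branch (i) ignores the single wild point among three, while branch (ii) rejects the outlier explicitly by leaving it out of the consensus set.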

[0047] Since a moving object may undergo slight scaling between two consecutive frames, it is not sufficient to consider only the unscale...
