Method and system for processing split online video on the basis of depth sensor

A depth-sensor-based video segmentation technology, applied in image data processing, instruments, image analysis and related fields. It addresses the limited accuracy and real-time performance of online video segmentation, which is prone to errors, and aims to avoid video flicker and keep the segmentation result consistent over time.



Embodiment Construction

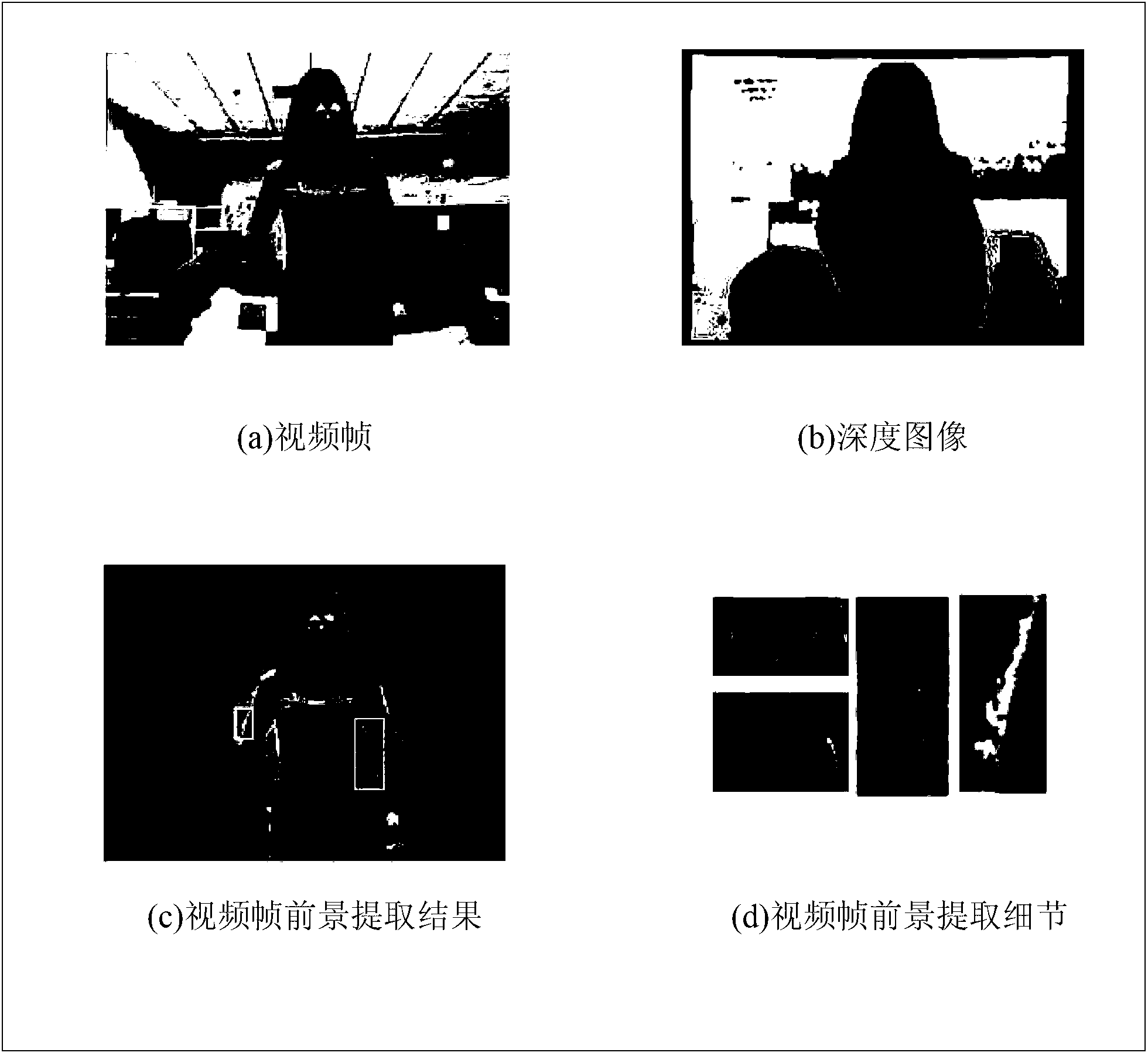

[0063] In recent years, as depth sensors have become smaller and cheaper, it has become practical to use the depth information they capture directly to assist video segmentation. Because depth information is robust to illumination changes and dynamic shadows, it improves the quality of image segmentation. Figure 3 shows an example online video segmentation result for one video frame, obtained with the scene segmentation API in OpenNI using a Kinect depth sensor: Figure 3(a) is the video frame, Figure 3(b) is the depth image captured by the depth sensor for that frame, Figure 3(c) is the foreground segmented from the depth image, and Figure 3(d) is an enlarged view of the segmentation result in the region marked in Figure 3(c). As Figure 3(c) shows, online video segmentation based on the depth sensor can obtain b...
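To make the idea of depth-assisted foreground extraction concrete, the following is a minimal sketch that keeps only pixels whose depth falls inside a fixed range. It is not the OpenNI scene segmentation API and not the method of this patent; it is a plain range threshold used for illustration. The function names `segment_foreground` and `apply_mask`, the range limits `near_mm` and `far_mm`, and the convention that a depth value of 0 means "no reading" are all assumptions introduced here.

```python
from typing import List

DepthMap = List[List[int]]   # depth per pixel in millimetres (assumed unit)
Mask = List[List[bool]]      # True where the pixel is treated as foreground


def segment_foreground(depth: DepthMap, near_mm: int = 500, far_mm: int = 1500) -> Mask:
    """Mark a pixel as foreground when its depth lies in [near_mm, far_mm].

    A depth of 0 is assumed to mean "no reading"; it is rejected
    automatically as long as near_mm is greater than 0.
    """
    return [[near_mm <= d <= far_mm for d in row] for row in depth]


def apply_mask(frame: List[List[int]], mask: Mask, background: int = 0) -> List[List[int]]:
    """Keep foreground pixels of the video frame; replace the rest with `background`."""
    return [
        [px if keep else background for px, keep in zip(frame_row, mask_row)]
        for frame_row, mask_row in zip(frame, mask)
    ]


# Hypothetical 2x3 depth image and matching grayscale video frame.
depth_image = [[0, 800, 3000],
               [1200, 1600, 900]]
video_frame = [[10, 20, 30],
               [40, 50, 60]]

foreground_mask = segment_foreground(depth_image)
foreground = apply_mask(video_frame, foreground_mask)
```

Because the mask depends only on depth, it does not change when the lighting changes or a shadow moves across the scene, which is the robustness property the paragraph describes. A per-frame threshold like this makes no attempt at consistency between frames.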
