Revenue-driven large-scale processing task scheduling method in a cloud environment

A task scheduling method for large-scale processing tasks, applied in the field of distributed computing. It addresses problems such as resource leasing overhead, extended task scheduling length, and the lack of a mapping scheme, with the effect of reducing resource leasing costs while meeting performance requirements.

Embodiment Construction

[0042] The present invention is described in further detail below with reference to the accompanying drawings:

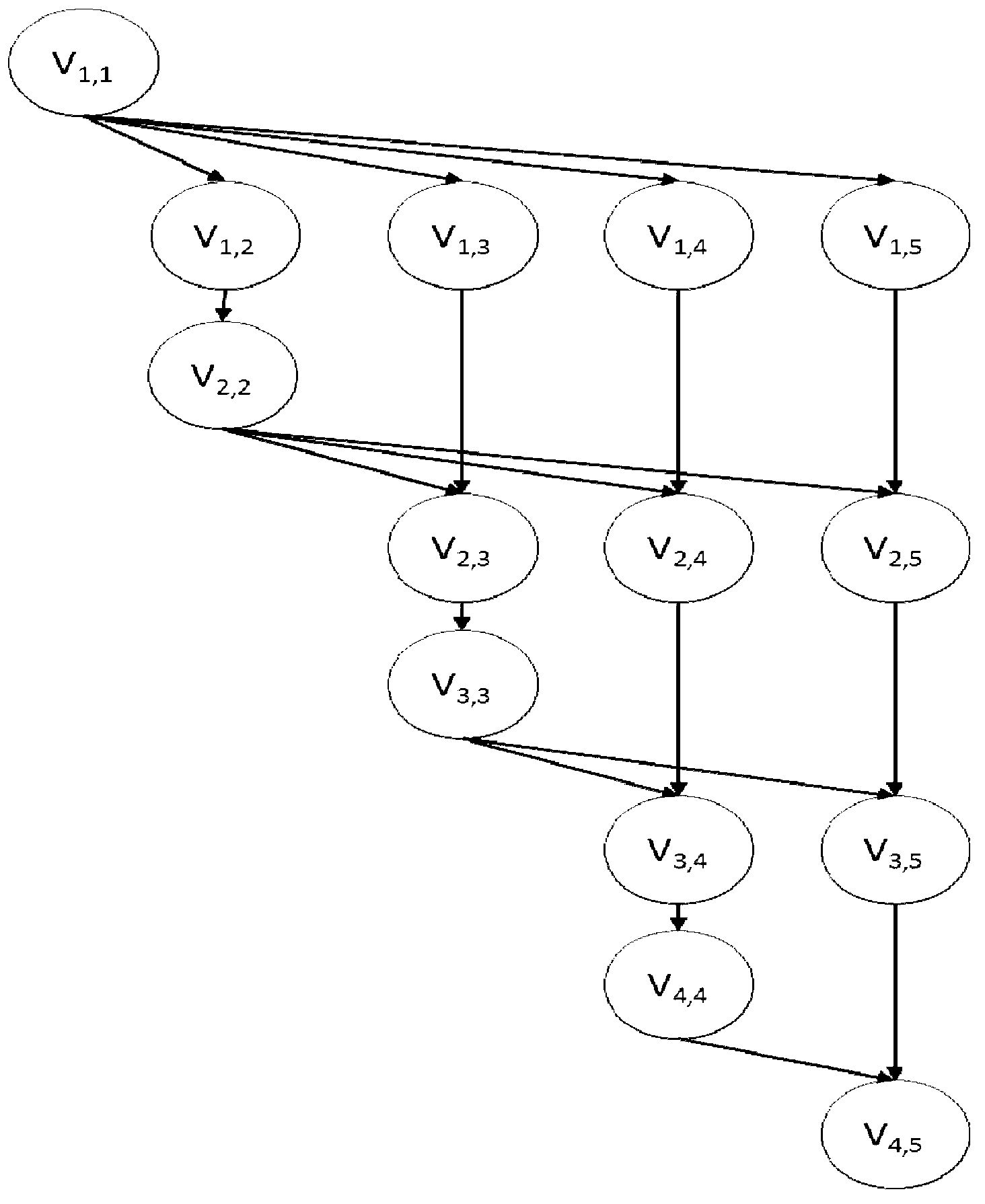

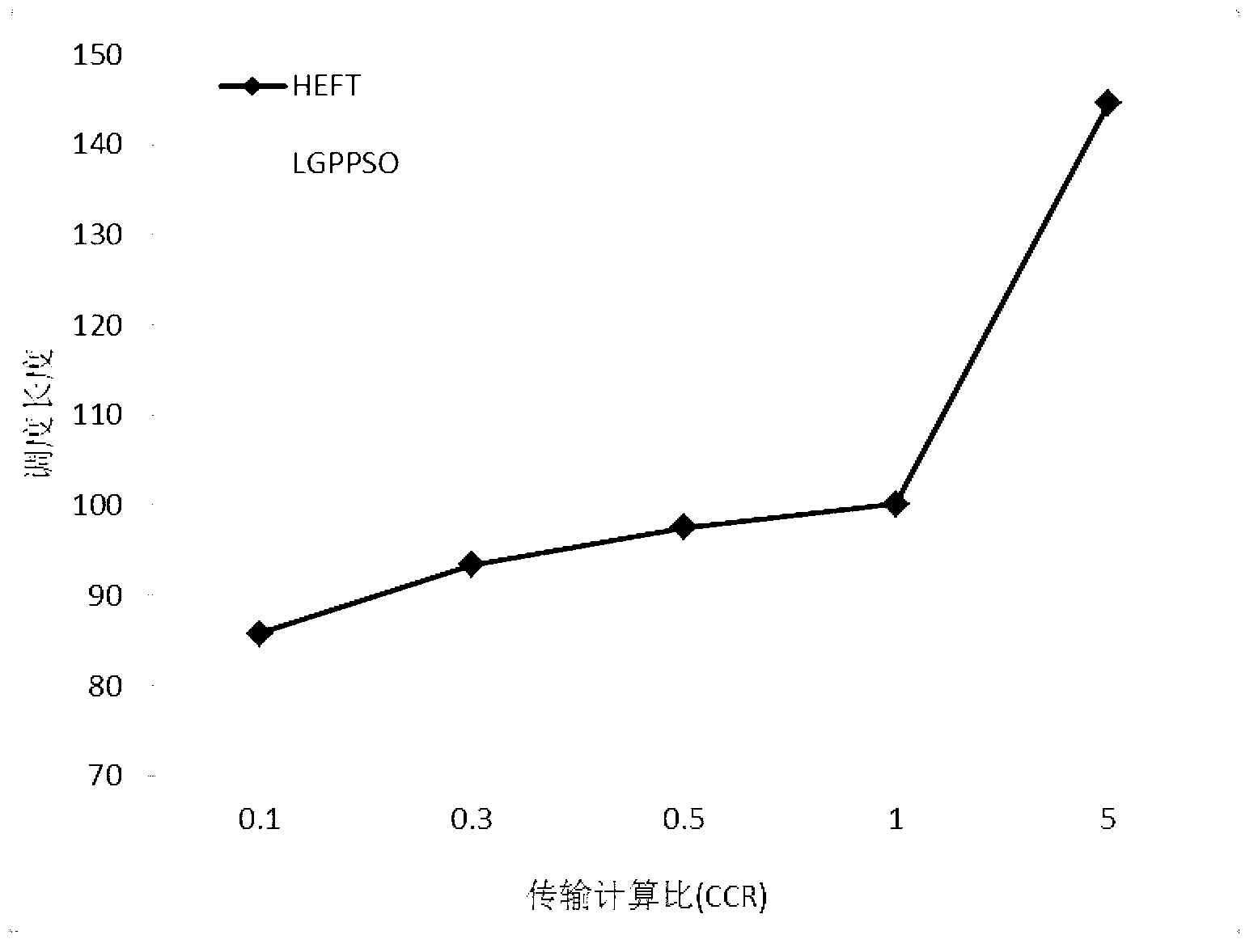

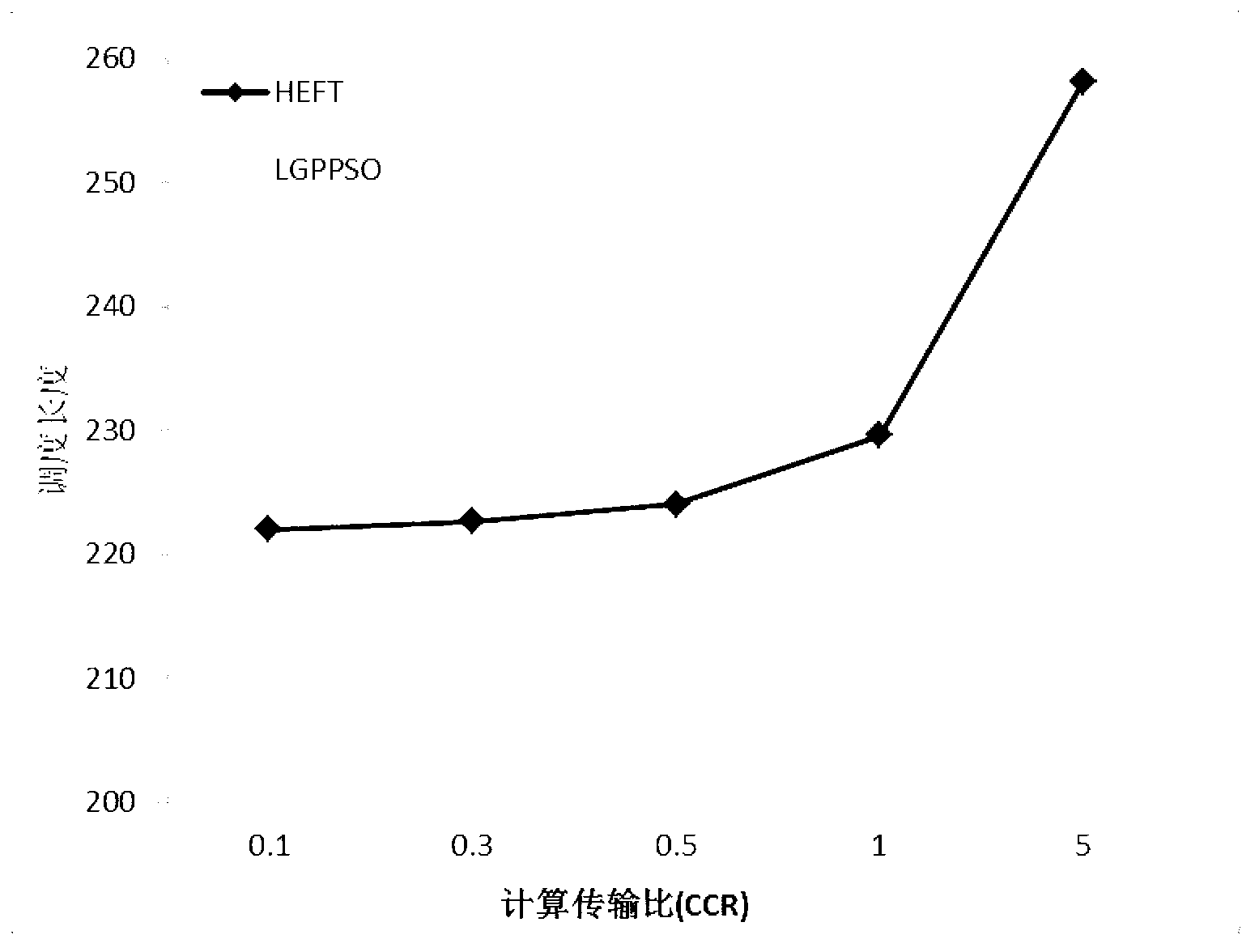

[0043] To exploit the powerful computing capacity and flexible pricing models of cloud computing, the present invention establishes a task scheduling model for large-scale graph data processing and designs a multi-objective optimization function over execution time and resource leasing cost according to Pareto optimality theory. On this basis it proposes a revenue-driven large-scale processing task scheduling method in a cloud environment: a new large-scale graph processing task scheduling method based on particle swarm optimization (Large Graph Processing Based on Particle Swarm Optimization in the Cloud, abbreviated LGPPSO).
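The Pareto criterion over the two objectives named above (execution time and resource leasing cost) can be illustrated with a minimal sketch. This is not the patented LGPPSO procedure. The tuple representation, the function names `dominates` and `pareto_front`, and the sample values are all hypothetical, chosen only to show what it means for one candidate schedule to dominate another when both objectives are minimized.

```python
from typing import List, Tuple

# Hypothetical representation (an assumption, not from the patent text):
# each candidate schedule is scored as (execution_time, leasing_cost),
# and both objectives are to be minimized.
Score = Tuple[float, float]


def dominates(a: Score, b: Score) -> bool:
    """True if a is no worse than b in both objectives and strictly better in one."""
    no_worse = a[0] <= b[0] and a[1] <= b[1]
    strictly_better = a[0] < b[0] or a[1] < b[1]
    return no_worse and strictly_better


def pareto_front(scores: List[Score]) -> List[Score]:
    """Return the scores that no other score dominates, in input order."""
    return [s for s in scores if not any(dominates(o, s) for o in scores)]


# Illustrative values only; they are not measurements from the patent.
candidates = [(10.0, 5.0), (8.0, 7.0), (12.0, 4.0), (9.0, 9.0), (11.0, 6.0)]
front = pareto_front(candidates)
print(front)
```

In this sample, `(9.0, 9.0)` is dominated by `(8.0, 7.0)` and `(11.0, 6.0)` by `(10.0, 5.0)`, so three candidates remain on the front. A swarm-based scheduler would typically keep such a non-dominated set as its pool of trade-off solutions, though how LGPPSO does so is not stated in the text available here.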

[0044] The task scheduling problem for large-scale graph data processing is formally described as follows:

[0045] (1) Cloud computing virtual resource billing model: The underlying provider of c...
