System for reducing the central processing unit (CPU) overhead of network input/output (I/O) operations under X86 virtualization

An X86 and network technology in the field of I/O virtualization systems that reduces the CPU overhead of network I/O operations. It addresses the problem of high CPU overhead, reducing the additional overhead of virtualized network I/O and avoiding data exchange.

Embodiment Construction

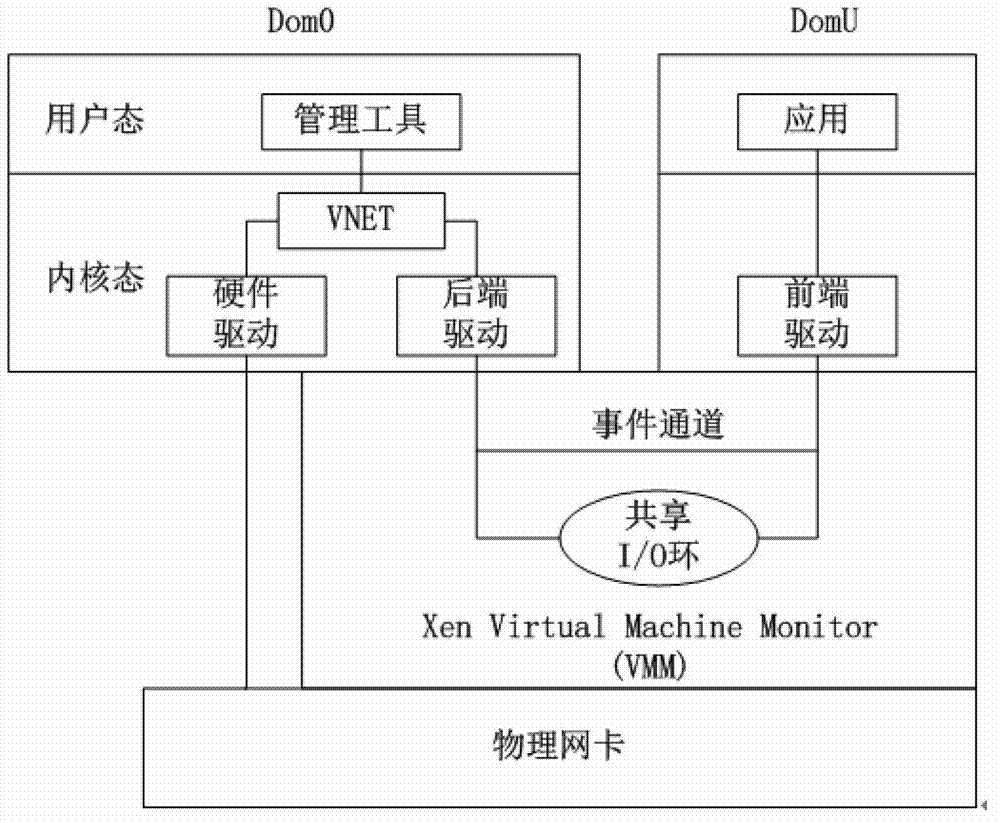

[0048] Figure 4 shows the traditional virtual machine network architecture. A request packet from the network first arrives at the VMM or Dom0 and is then forwarded through vNet to the corresponding virtual machine, where the virtual machine and its application process the packet. After processing, the response packet is sent through vNet to the VMM or Dom0, which in turn sends it to the network.
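To make the path in [0048] concrete, here is a minimal Python sketch that models one request/response pair as a list of hops. The names `PacketTrace` and `traditional_round_trip` are hypothetical, and treating each vNet hop as one cross-domain transfer is an illustrative assumption about where the overhead arises, not something the paragraph states.

```python
# Hypothetical model of the traditional path in Figure 4: every request and
# response is relayed by the VMM/Dom0 over vNet. Assumption (not from the
# source): each vNet hop counts as one host/guest cross-domain transfer.
from dataclasses import dataclass, field
from typing import List


@dataclass
class PacketTrace:
    """Records the hops a single request/response pair takes."""
    hops: List[str] = field(default_factory=list)

    def cross_domain_transfers(self) -> int:
        # Count hops that move a packet between VMM/Dom0 and the guest via vNet
        return sum(1 for hop in self.hops if "vNet" in hop)


def traditional_round_trip() -> PacketTrace:
    trace = PacketTrace()
    trace.hops.append("network -> VMM/Dom0")            # request arrives at host
    trace.hops.append("VMM/Dom0 -> guest VM (vNet)")     # forwarded to the VM
    trace.hops.append("guest VM: application processes request")
    trace.hops.append("guest VM -> VMM/Dom0 (vNet)")     # response sent back
    trace.hops.append("VMM/Dom0 -> network")             # response leaves host
    return trace


if __name__ == "__main__":
    t = traditional_round_trip()
    for hop in t.hops:
        print(hop)
    print("cross-domain transfers:", t.cross_domain_transfers())
```

Under this model every request, even one whose response never changes, pays two vNet crossings plus processing inside the guest.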

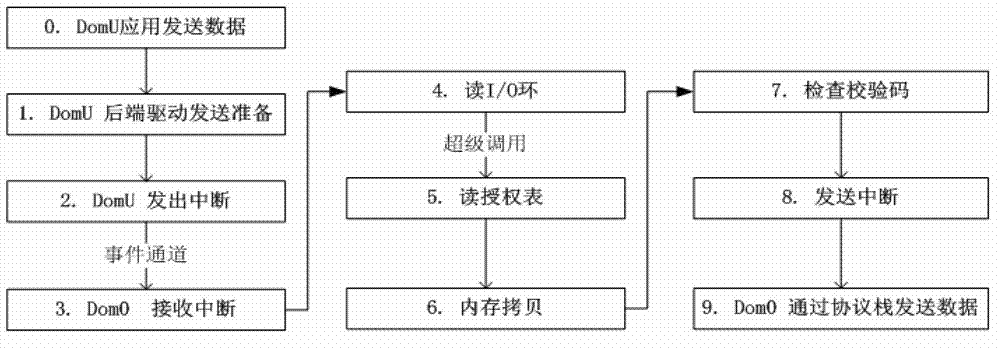

[0049] As shown in Figures 5 and 6, the present invention includes a data cache module, a network data request interception module, and a cache data exchange communication module. The application calls the interface of the cache data exchange communication module to transmit the data to be cached, together with its serial number and characteristic value, to the data cache module. When the network data request interception module receives a data packet from the network, it first extracts the characteristic value of the packet and uses that value as an index to fi...
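The three modules in [0049] can be sketched in Python roughly as follows. This is an illustrative sketch under stated assumptions, not the patent's implementation: all class and function names are hypothetical, the characteristic value is assumed to be a SHA-256 digest of the request payload, and because the source text is cut off right after the index lookup, the sketch only returns the cached entry on a hit and `None` on a miss.

```python
# Hypothetical sketch of the modules in [0049].
# Assumptions (not from the source): characteristic value = SHA-256 of the
# request payload; a hit returns the cached entry, a miss returns None.
import hashlib
from dataclasses import dataclass
from typing import Dict, Optional


def characteristic_value(payload: bytes) -> str:
    # Assumed feature extraction: hex digest of the request payload
    return hashlib.sha256(payload).hexdigest()


@dataclass
class CacheEntry:
    serial_number: int
    data: bytes


class DataCacheModule:
    """Stores application-supplied data indexed by characteristic value."""

    def __init__(self) -> None:
        self._entries: Dict[str, CacheEntry] = {}

    def store(self, feature: str, serial_number: int, data: bytes) -> None:
        self._entries[feature] = CacheEntry(serial_number, data)

    def lookup(self, feature: str) -> Optional[CacheEntry]:
        return self._entries.get(feature)


class CacheDataExchangeModule:
    """Interface the application calls to hand data to the cache."""

    def __init__(self, cache: DataCacheModule) -> None:
        self._cache = cache

    def submit(self, data: bytes, serial_number: int, feature: str) -> None:
        self._cache.store(feature, serial_number, data)


class RequestInterceptionModule:
    """Extracts an incoming packet's characteristic value and looks it up."""

    def __init__(self, cache: DataCacheModule) -> None:
        self._cache = cache

    def on_packet(self, payload: bytes) -> Optional[CacheEntry]:
        feature = characteristic_value(payload)
        # Hit: cached entry; miss: None (assumed to fall back to the VM path)
        return self._cache.lookup(feature)


if __name__ == "__main__":
    cache = DataCacheModule()
    exchange = CacheDataExchangeModule(cache)
    interceptor = RequestInterceptionModule(cache)
    request = b"GET /index.html"
    exchange.submit(b"<html>cached page</html>", 1, characteristic_value(request))
    print(interceptor.on_packet(request))
    print(interceptor.on_packet(b"GET /other.html"))
```

Keying the cache by the characteristic value lets the interception module answer a lookup without touching the guest. The choice of hash here is only a stand-in for whatever feature extraction the full description specifies.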