Video fingertip positioning method based on Kinect

A fingertip positioning method, applied in user/computer interaction input/output, computer parts, graphic reading, and related fields, that addresses the problem of being unable to handle a fingertip that faces the camera.

Embodiment

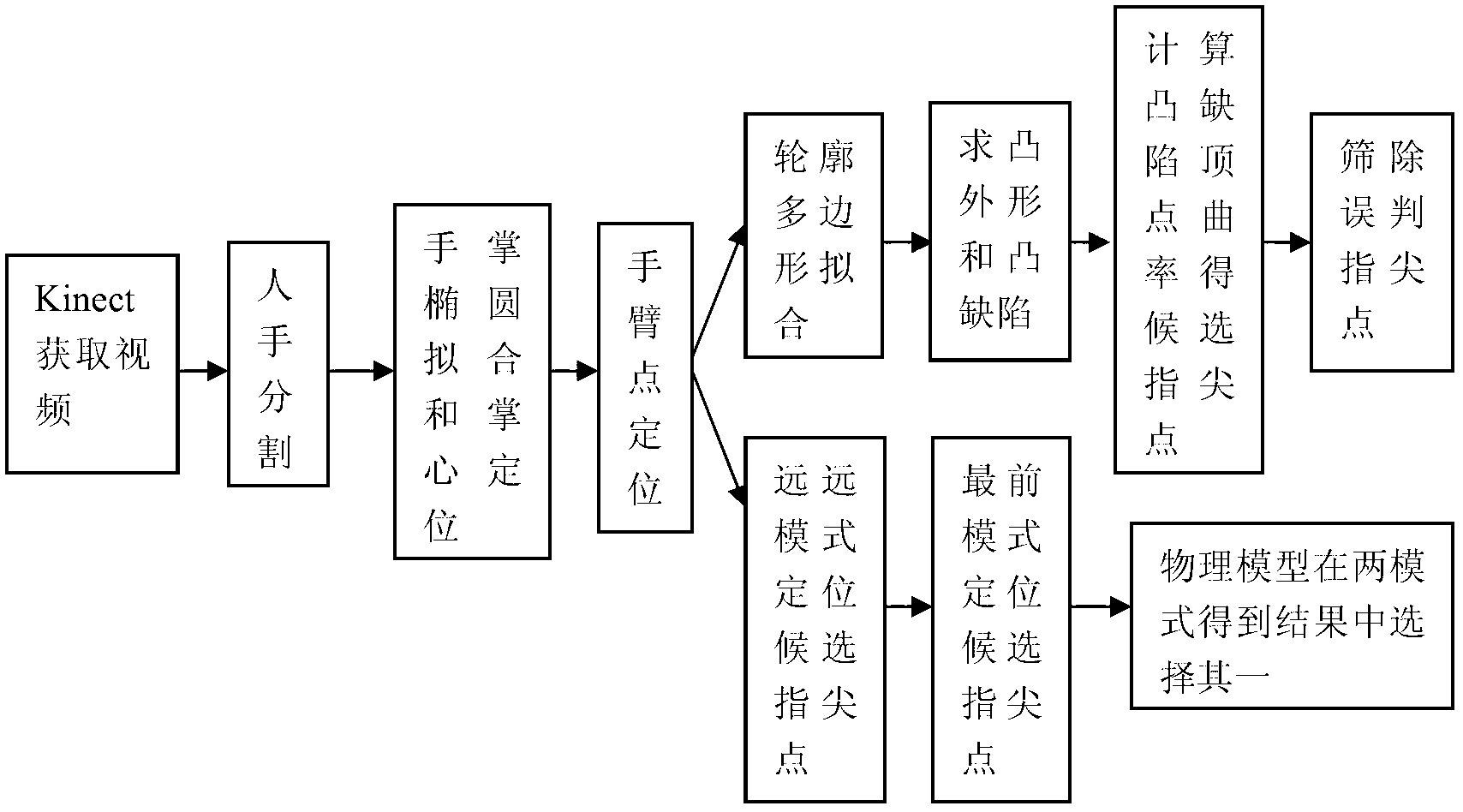

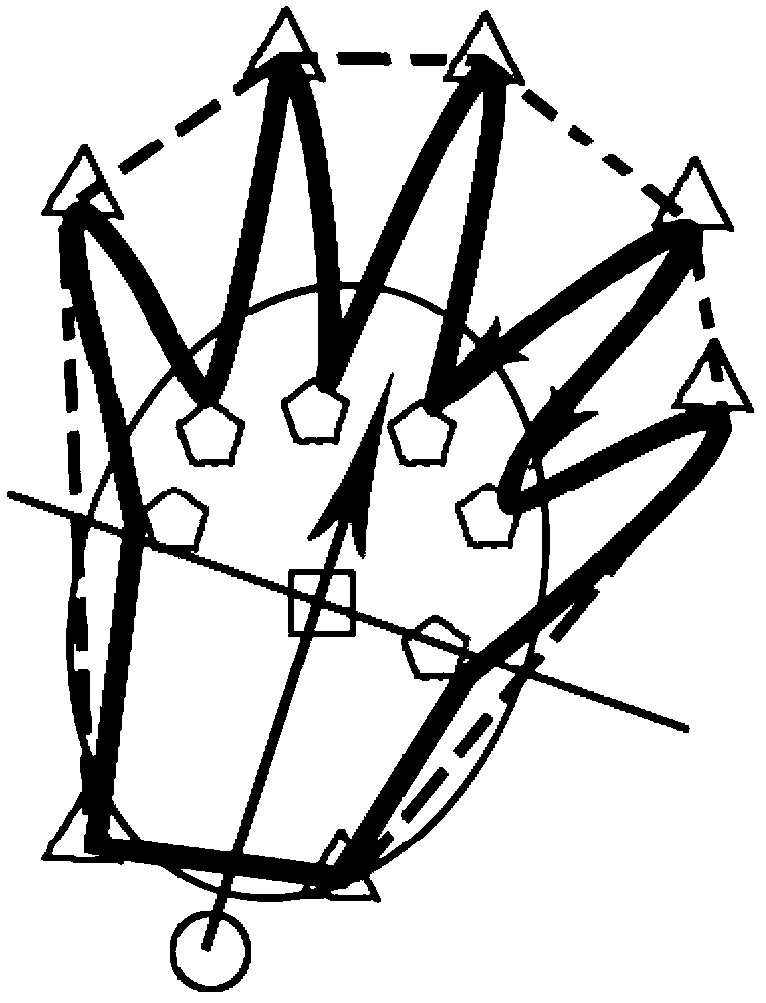

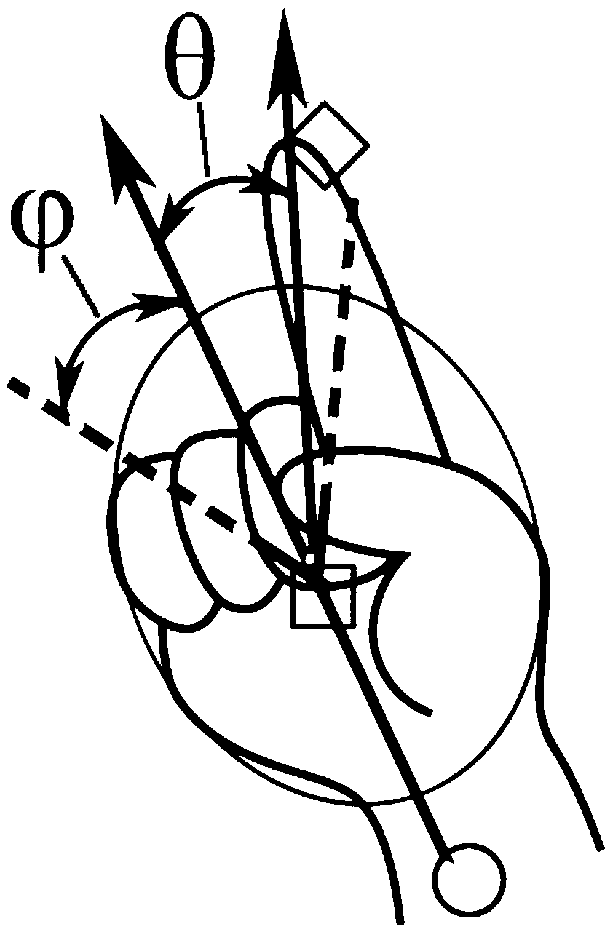

[0064] Figure 1 shows a block diagram of the system structure of the present invention. After Kinect acquires the user's handwriting video, the hand is segmented to separate the hand region of interest from the background. After segmentation, ellipse fitting is performed on the palm to obtain the palm points. The arm points are then obtained by a second-pass depth threshold segmentation. After that, the system splits into two modules: a "single-finger positioning" module and a "multi-finger positioning" module. In the "multi-finger positioning" module, polygon fitting is performed on the hand contour to remove contour noise. The convex hull of the contour is then computed, and the fitted polygon and the convex hull together form the convexity defects. Candidate fingertip points are obtained by calculating the curvature at the convexity defect vertices. The hand region is then partitioned, and the misjudged fingertips from the previous step are screened out. In the "sing...
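The multi-finger steps described above (fitted polygon, convex hull, convexity defects, curvature test) can be illustrated with a minimal sketch. It is not the patented implementation. It assumes the hand contour has already been reduced to a short list of polygon vertices, uses the interior angle at a vertex as a simple stand-in for the curvature measure, and applies an assumed 60-degree threshold. The names `convex_hull`, `defect_points`, `candidate_fingertips`, and `max_angle_deg` are hypothetical. The sketch uses only the Python standard library; a practical system would more likely rely on an image-processing library such as OpenCV, which provides `approxPolyDP`, `convexHull`, and `convexityDefects`.

```python
import math


def convex_hull(points):
    """Andrew's monotone chain; returns hull vertices in counter-clockwise order."""
    pts = sorted(set(points))
    if len(pts) <= 2:
        return pts

    def cross(o, a, b):
        return (a[0] - o[0]) * (b[1] - o[1]) - (a[1] - o[1]) * (b[0] - o[0])

    lower, upper = [], []
    for p in pts:
        while len(lower) >= 2 and cross(lower[-2], lower[-1], p) <= 0:
            lower.pop()
        lower.append(p)
    for p in reversed(pts):
        while len(upper) >= 2 and cross(upper[-2], upper[-1], p) <= 0:
            upper.pop()
        upper.append(p)
    return lower[:-1] + upper[:-1]


def vertex_angle(prev_pt, pt, next_pt):
    """Interior angle in degrees at pt, between the rays to its two neighbours."""
    ax, ay = prev_pt[0] - pt[0], prev_pt[1] - pt[1]
    bx, by = next_pt[0] - pt[0], next_pt[1] - pt[1]
    norm = math.hypot(ax, ay) * math.hypot(bx, by)
    if norm == 0:
        return 180.0
    cos_t = max(-1.0, min(1.0, (ax * bx + ay * by) / norm))
    return math.degrees(math.acos(cos_t))


def defect_points(polygon):
    """Polygon vertices that lie inside the hull (valleys between fingers)."""
    hull = set(convex_hull(polygon))
    return [pt for pt in polygon if pt not in hull]


def candidate_fingertips(polygon, max_angle_deg=60.0):
    """Hull vertices of the polygon whose interior angle is sharp.

    max_angle_deg is an assumed threshold, not a value from the source.
    """
    hull = set(convex_hull(polygon))
    n = len(polygon)
    tips = []
    for i, pt in enumerate(polygon):
        if pt not in hull:
            continue  # valley (defect) vertices are not fingertip candidates
        angle = vertex_angle(polygon[i - 1], pt, polygon[(i + 1) % n])
        if angle < max_angle_deg:
            tips.append(pt)
    return tips


# Toy "hand": a rectangular palm with two spikes standing in for fingers.
hand = [(0, 0), (10, 0), (10, 5), (8, 15), (6, 5), (4, 5), (2, 15), (0, 5)]
tips = candidate_fingertips(hand)
valleys = defect_points(hand)
```

On the toy polygon, the two spike apexes are the only hull vertices with a sharp interior angle, so they are the only candidates; the palm corners fail the angle test and the valley vertices are excluded because they are off the hull. The later screening step in the text (partitioning the hand region to reject misjudged fingertips) is not covered by this sketch.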
