Human body movement identification method based on Kinect

Relates to human action recognition and human body technology, applied in user/computer interaction input/output, computer components, graphics reading, etc. Effects: better human-computer interaction, higher recognition speed and accuracy, and lower system complexity.


Detailed Description of the Embodiments

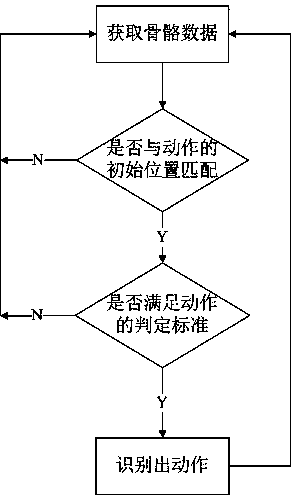

[0023] As shown in Figure 1, a Kinect-based human action recognition method comprises the following steps, performed in order:

[0024] (1) Use a Kinect sensor to collect the spatial positions of the target human body's skeletal joints at different moments;

[0025] (2) For the skeletal joint positions acquired at each moment, judge whether they match the preset initial position of any of the various human actions; if so, record this moment as the initial moment and execute step (3); if not, return to step (1);

[0026] (3) Starting from the initial moment, judge whether the skeletal joint positions acquired within a period of time meet the preset judgment criteria of the various human actions; if so, execute step (4); if not, return to step (1);

[0027] (4) Identify the action type of the target human body, and then return to step (1).
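The four-step loop above can be sketched as a small state machine over recorded frames. This is a minimal sketch under stated assumptions, not the patented implementation: frames are assumed to be already captured (for example from a Kinect skeleton stream) as timestamped dictionaries of joint positions; the names `ActionTemplate`, `recognize`, `pose`, the joint keys `hand_right`, `shoulder_right` and `head`, and the 1-second observation window are all hypothetical. The patent does not say how long the "period of time" in step (3) is, nor whether frames inside a recognized action's window are re-examined, so resuming after the window is an assumption.

```python
from dataclasses import dataclass
from itertools import takewhile
from typing import Callable, Dict, List, Optional, Tuple

# Hypothetical layout: joint name -> (x, y, z) in camera space, y pointing up.
Joints = Dict[str, Tuple[float, float, float]]
Frame = Tuple[float, Joints]  # (timestamp in seconds, joint positions)


@dataclass
class ActionTemplate:
    name: str
    matches_initial: Callable[[Joints], bool]      # step (2): preset initial position
    meets_criteria: Callable[[List[Frame]], bool]  # step (3): preset judgment criteria
    window: float                                  # assumed observation period (s)


def recognize(frames: List[Frame], templates: List[ActionTemplate]) -> List[Tuple[float, str]]:
    """Return (initial moment, action name) pairs for a time-ordered frame list."""
    results: List[Tuple[float, str]] = []
    i = 0
    while i < len(frames):
        t0, joints = frames[i]  # step (1): joint positions at this moment
        matched: Optional[ActionTemplate] = None
        for tpl in templates:
            # Step (2): does this frame match the action's initial position?
            if not tpl.matches_initial(joints):
                continue
            # Step (3): frames from the initial moment up to t0 + window.
            segment = list(takewhile(lambda f: f[0] - t0 <= tpl.window, frames[i:]))
            if tpl.meets_criteria(segment):
                matched = tpl
                break
        if matched is None:
            i += 1  # no match in step (2) or (3): back to step (1), next frame
            continue
        # Step (4): record the action type, then resume after its window (assumption).
        results.append((t0, matched.name))
        end = t0 + matched.window
        while i < len(frames) and frames[i][0] <= end:
            i += 1
    return results


def pose(hand_y: float) -> Joints:
    """Hypothetical synthetic skeleton with only the right hand moving vertically."""
    return {"head": (0.0, 0.6, 2.0), "shoulder_right": (0.2, 0.4, 2.0), "hand_right": (0.3, hand_y, 2.0)}


# Hypothetical "raise right hand": start with the hand below the shoulder,
# then lift it above the head within 1 second.
raise_hand = ActionTemplate(
    name="raise_right_hand",
    matches_initial=lambda j: j["hand_right"][1] < j["shoulder_right"][1],
    meets_criteria=lambda fs: any(j["hand_right"][1] > j["head"][1] for _, j in fs),
    window=1.0,
)

frames = [(0.1 * k, pose(y)) for k, y in enumerate([0.0, 0.2, 0.5, 0.8, 0.8, 0.3])]
print(recognize(frames, [raise_hand]))  # prints [(0.0, 'raise_right_hand')]
```

Keeping the single-frame initial-position check separate from the multi-frame criteria check means the window is only examined once a plausible starting pose has been seen; in this sketch each template's two predicates are plain functions, so further actions can be added without changing `recognize`.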

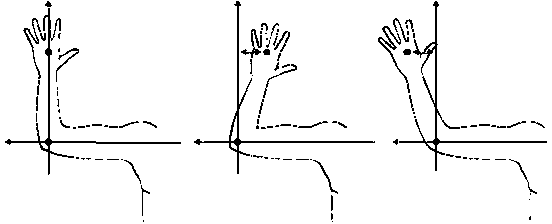

[0028] Next, the present invention will be fur...

