Gesture recognition method and apparatus with improved background suppression

A gesture determination technology that suppresses background interference, applied in image data processing, instruments, and character and pattern recognition, to avoid misjudgment.

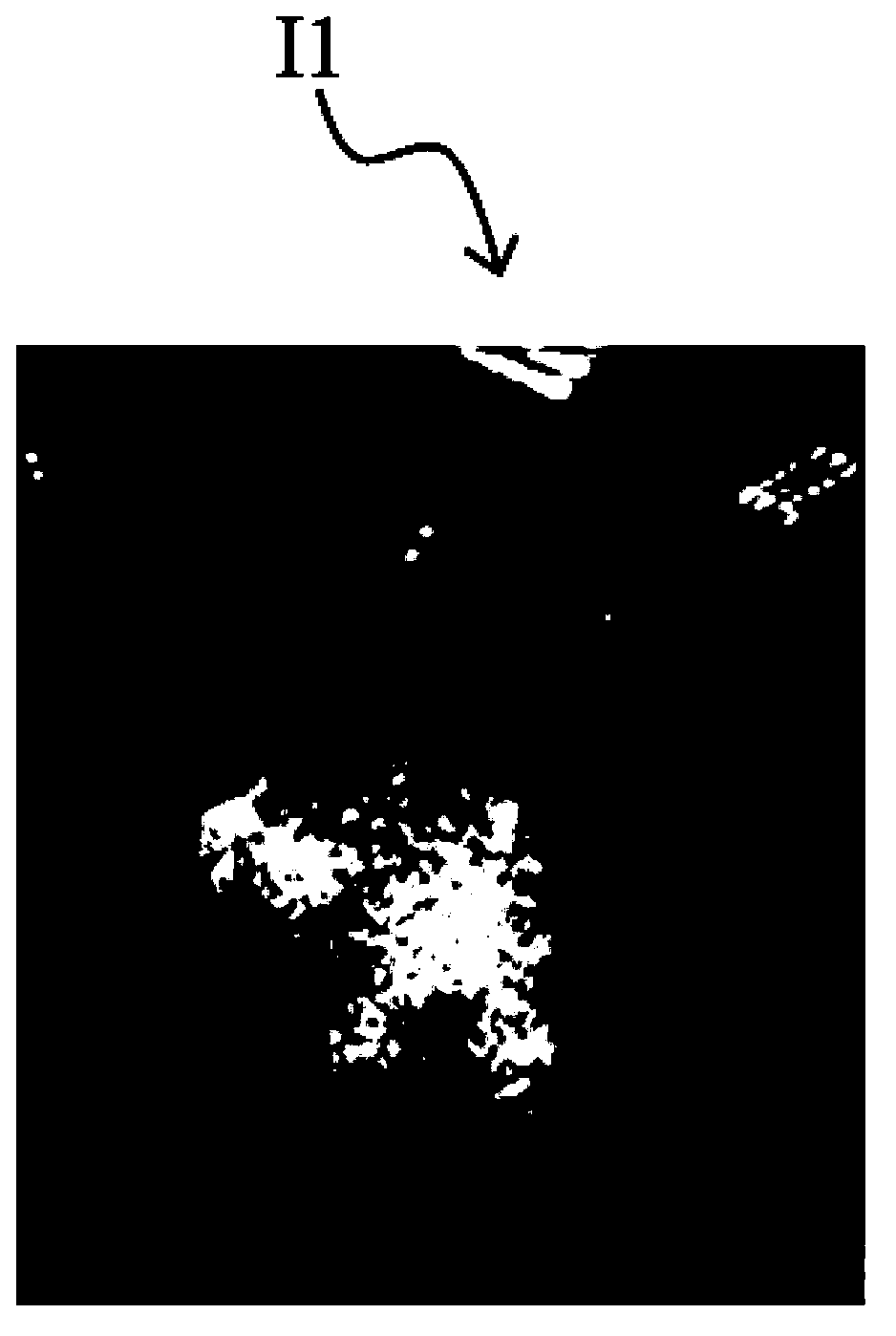

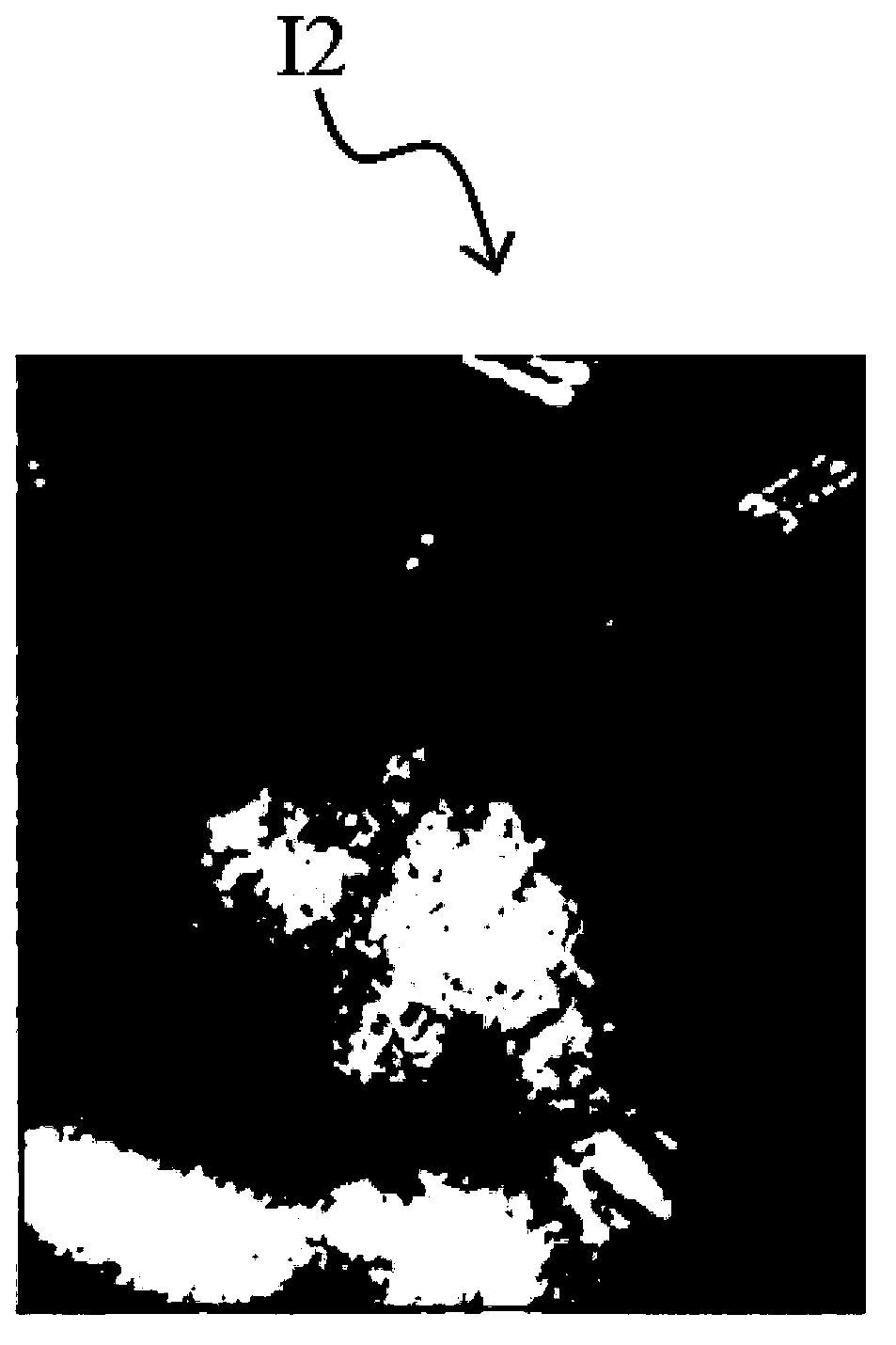

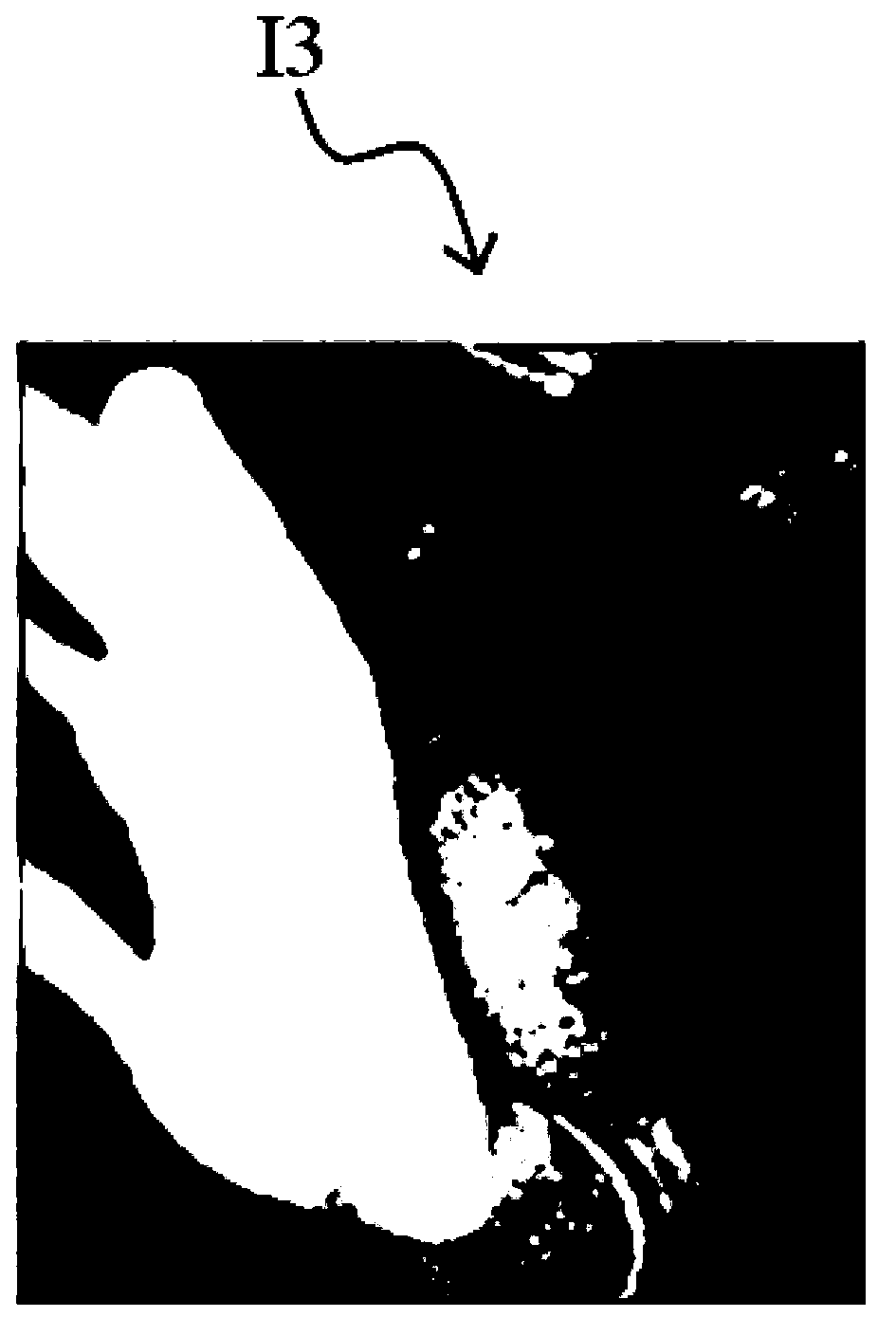

Embodiment Construction

[0041] The foregoing and other technical contents, features, and effects of the present invention will be made clear in the following detailed description of preferred embodiments with reference to the drawings. Directional terms used in the following embodiments, such as up, down, left, right, front, or back, refer only to directions in the attached drawings. Accordingly, the directional terms are used to illustrate, not to limit, the invention.

[0042] Figure 2 is a schematic diagram of a gesture determination device according to an embodiment of the present invention. Referring to Figure 2, the gesture determination device 200 of this embodiment includes an image acquisition unit 210 and a processing unit 220. The image acquisition unit 210 may be a complementary metal-oxide-semiconductor (CMOS) image sensor or a charge-coupled device (CCD) image sensor, wherein the imag...
