Method for implementing intelligent tour guiding, and intelligent tour guide device, based on a mobile network and a mobile client

A technology combining the mobile Internet with intelligent tour guiding, applied in the field of smart tourism. It addresses problems such as low positioning accuracy and reliability, susceptibility to damage, and high cost, and achieves an improved tour experience in both time and space, ease of use, and reduced cost.


Embodiment Construction

[0055] All features disclosed in this specification, and all steps of any method or process disclosed, may be combined in any manner, except for mutually exclusive features and/or steps.

[0056] Unless specifically stated otherwise, any feature disclosed in this specification may be replaced by alternative features that are equivalent or serve a similar purpose. That is, unless expressly stated otherwise, each feature is only one example of a series of equivalent or similar features.

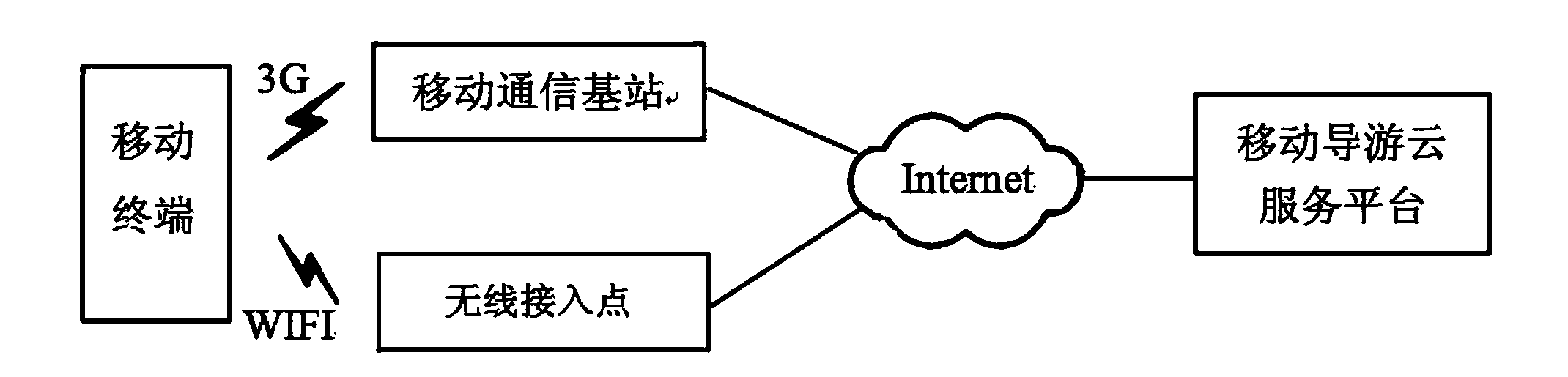

[0057] As shown in Figure 1, the mobile Internet-based intelligent tour guide device of the present invention includes a mobile client module and a tour guide service module.

[0058] The mobile client module is installed on a mobile terminal, such as a smartphone, notebook computer, or tablet computer. It controls the mobile terminal to collect location information at a scenic spot and then, over a mobile network such as a 3G or Wi-Fi wireless network, to view t...
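The cooperation between the mobile client module and the tour guide service module can be illustrated with a minimal Python sketch. It assumes, purely for illustration, that the service module stores scenic spots with coordinates, a trigger radius, and guide text, and that it matches the reported location to the nearest spot within range using great-circle (haversine) distance; the source does not state how matching is done. All names (`ScenicSpot`, `TourGuideService`, `MobileClient`, `radius_m`, `on_location`) are hypothetical, and the 3G/Wi-Fi link is replaced by a direct method call.

```python
import math
from dataclasses import dataclass
from typing import List, Optional

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius in metres


@dataclass
class ScenicSpot:
    # Hypothetical record held by the tour guide service module.
    name: str
    lat: float
    lon: float
    radius_m: float  # hypothetical trigger radius around the spot
    guide_text: str


def haversine_m(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance in metres between two latitude/longitude points."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


class TourGuideService:
    """Hypothetical stand-in for the tour guide service module."""

    def __init__(self, spots: List[ScenicSpot]):
        self.spots = spots

    def lookup(self, lat: float, lon: float) -> Optional[ScenicSpot]:
        # Return the nearest spot whose trigger radius contains the location.
        best, best_d = None, float("inf")
        for spot in self.spots:
            d = haversine_m(lat, lon, spot.lat, spot.lon)
            if d <= spot.radius_m and d < best_d:
                best, best_d = spot, d
        return best


class MobileClient:
    """Hypothetical stand-in for the mobile client module."""

    def __init__(self, service: TourGuideService):
        # In the described device this link is a mobile network (e.g. 3G or Wi-Fi);
        # here it is simplified to a direct object reference.
        self.service = service

    def on_location(self, lat: float, lon: float) -> str:
        # Send the collected location to the service and return the guide content.
        spot = self.service.lookup(lat, lon)
        return spot.guide_text if spot else "No scenic spot nearby."


if __name__ == "__main__":
    service = TourGuideService([
        ScenicSpot("Gate", 30.0, 120.0, 50.0, "Welcome to the main gate."),
        ScenicSpot("Pagoda", 30.001, 120.0, 50.0, "Pagoda description."),
    ])
    client = MobileClient(service)
    print(client.on_location(30.0001, 120.0))  # about 11 m from the gate
```

Keeping the spot data and matching logic on the service side means the client only reports a location and displays what comes back, which matches the split between the two modules in Figure 1; a real deployment would replace the direct call with a network request.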
