Key frame extraction method and system based on a visual attention model

A key frame extraction technique based on visual attention, applied in the field of video analysis, which addresses problems such as the heavy workload of key frame extraction and differences in how videos are understood.


Embodiment Construction

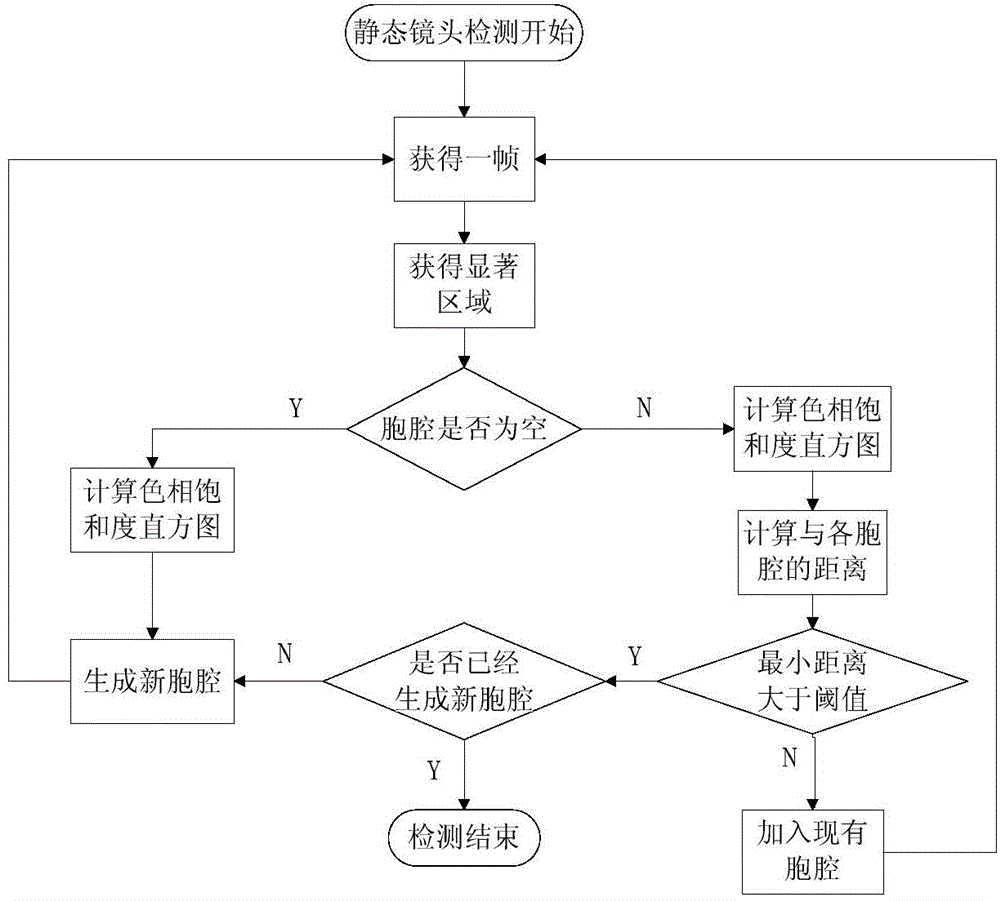

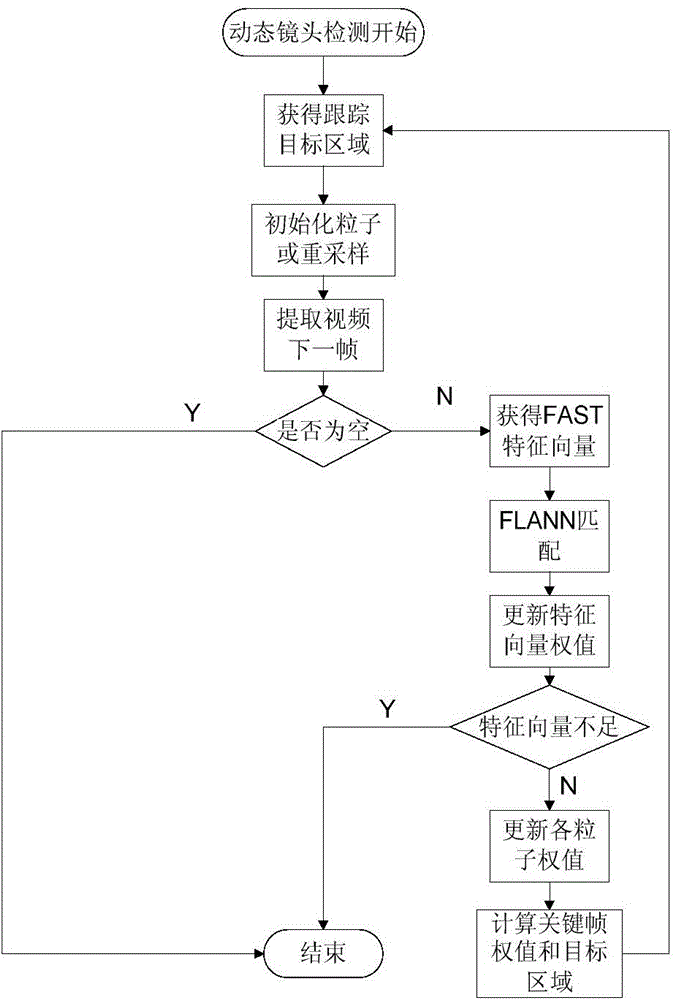

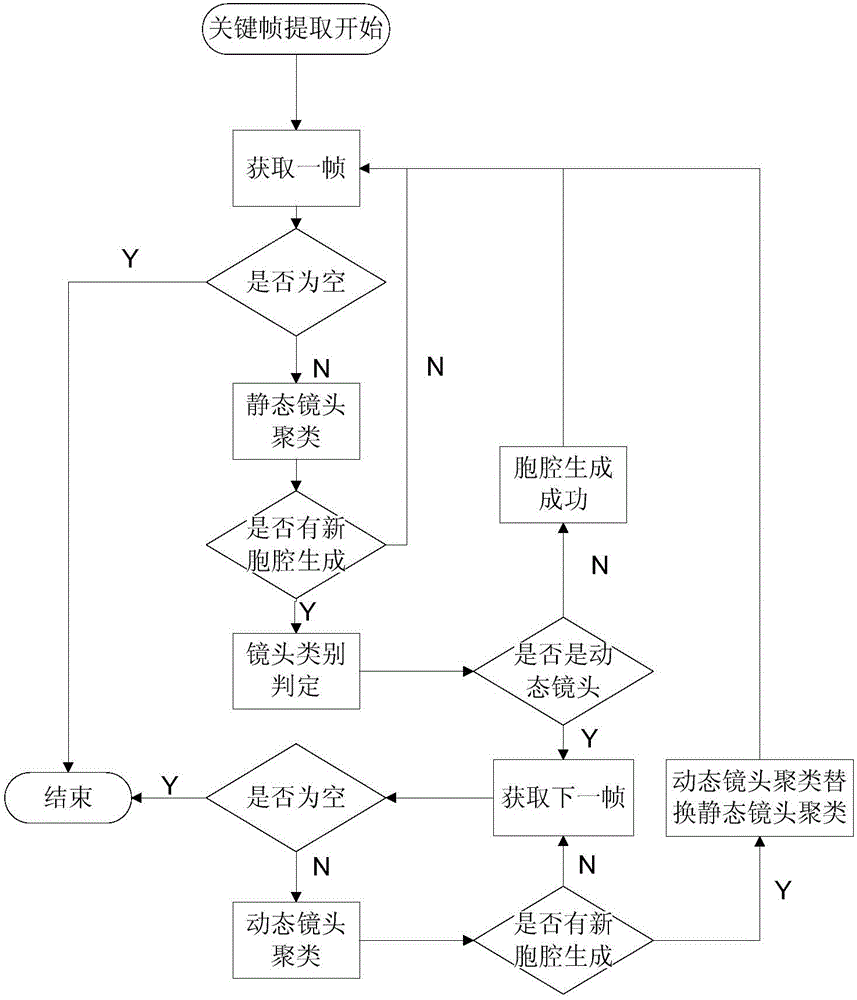

[0024] The present invention will be described in further detail below in conjunction with the accompanying drawings.

[0025] The video key frame extraction method based on a visual attention model disclosed by the present invention is implemented as follows:

[0026] First, in the spatial domain, a binomial-coefficient filter is applied to the global contrast for saliency detection, and an adaptive threshold is used to extract the target region. The specific method is as follows:

[0027] (11) The binomial coefficients are constructed from the Yang Hui triangle (Pascal's triangle), and the normalization factor of layer $N$ is $2^N$. The fourth layer is selected, so the filter coefficients are $B_4 = \frac{1}{16}[1\ 4\ 6\ 4\ 1]$;
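As an illustration of step (11), the following minimal sketch builds a layer of the Yang Hui triangle and normalizes it by $2^N$. The function name `binomial_filter` is hypothetical and does not come from the patent text; only the coefficients and the normalization factor do.

```python
def binomial_filter(n):
    """Return layer n of the Yang Hui (Pascal's) triangle, normalized by 2**n.

    Hypothetical helper for illustration; the name is not from the source.
    """
    row = [1]
    for _ in range(n):
        # Each new row is the sum of adjacent entries of the previous row.
        row = [a + b for a, b in zip([0] + row, row + [0])]
    norm = 2 ** n  # normalization factor of layer n
    return [c / norm for c in row]


# Layer 4 gives (1/16) * [1, 4, 6, 4, 1], as stated in step (11).
B4 = binomial_filter(4)
```

Because the coefficients of layer $N$ sum to $2^N$, the normalized filter sums to 1, so filtering preserves the mean intensity of a uniform region.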

[0028] (12) Let $I$ be the original stimulus intensity, and let the mean value of the surrounding stimulus intensity be the convolution of $I$ and $B_4$. The stimulus intensity at each pixel is measured in vector form in the CIELAB color space to measure the strength of the stimulu...
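The convolution of $I$ with $B_4$ mentioned in step (12) can be sketched as below for a single channel stored as a list of rows. Several details are assumptions that the truncated text does not confirm: the 1-D filter is applied separably along rows and then columns, image borders are handled by replicating edge pixels, and the same operation would be run on each CIELAB channel. The names `convolve_1d` and `surround_mean` are hypothetical.

```python
# Filter coefficients from step (11).
B4 = [1 / 16, 4 / 16, 6 / 16, 4 / 16, 1 / 16]


def convolve_1d(values, kernel):
    """Convolve a 1-D sequence with a symmetric kernel, same-size output."""
    half = len(kernel) // 2
    last = len(values) - 1
    out = []
    for i in range(len(values)):
        acc = 0.0
        for k, weight in enumerate(kernel):
            # Assumption: replicate edge pixels at the borders.
            j = min(max(i + k - half, 0), last)
            acc += weight * values[j]
        out.append(acc)
    return out


def surround_mean(channel, kernel=B4):
    """Smooth one image channel with the kernel along rows, then columns.

    Assumption: the 1-D binomial filter is applied separably in 2-D.
    """
    rows = [convolve_1d(row, kernel) for row in channel]
    cols = [convolve_1d(list(col), kernel) for col in zip(*rows)]
    return [list(row) for row in zip(*cols)]
```

For example, `convolve_1d([0, 0, 16, 0, 0], B4)` spreads the single impulse into the filter shape itself, and a uniform channel is left unchanged by `surround_mean`.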
