Depth image matching method based on ASIFT (Affine Scale-invariant Feature Transform)

A depth image matching technology, applied in image analysis, image data processing, instruments, etc., that addresses problems such as narrow adaptability, difficult identification, and poor matching accuracy.

Embodiment Construction

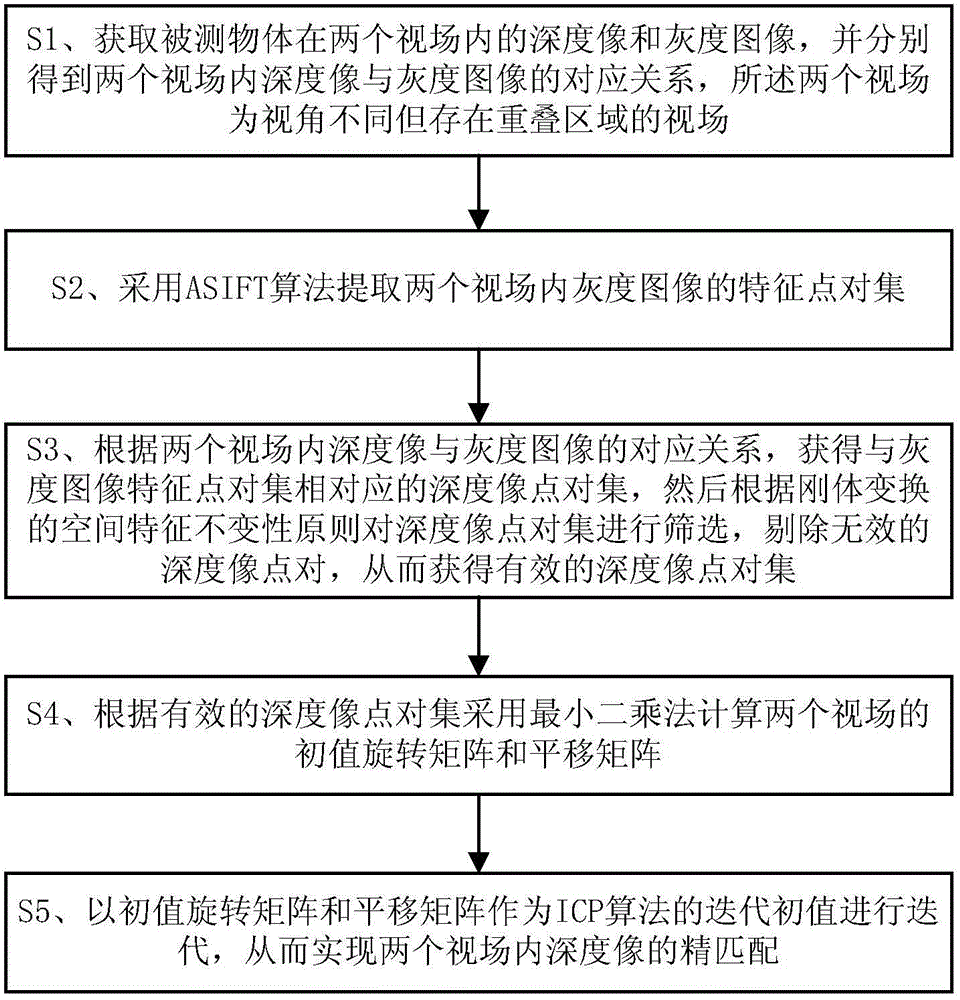

[0034] Referring to Figure 1, an ASIFT-based depth image matching method includes:

[0035] S1. Obtain a depth image and a grayscale image of the measured object in each of two fields of view, and obtain the correspondence between the depth image and the grayscale image in each field of view. The two fields of view have different viewing angles but share an overlapping region;

[0036] S2. Use the ASIFT algorithm to extract feature point pairs from the grayscale images of the two fields of view;

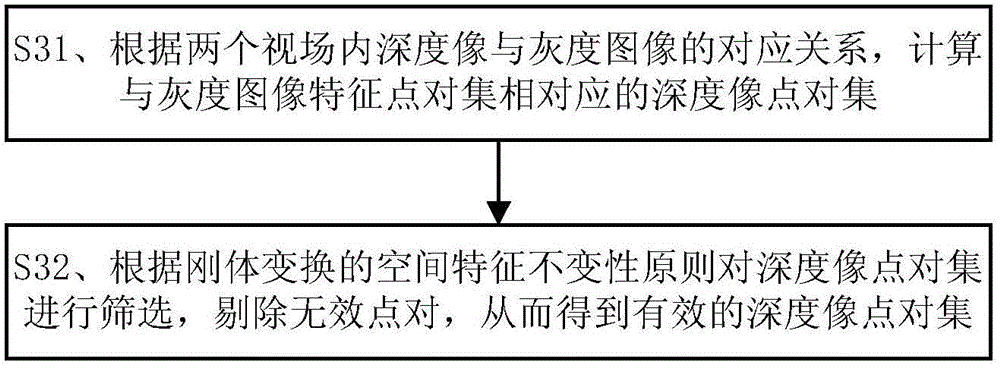

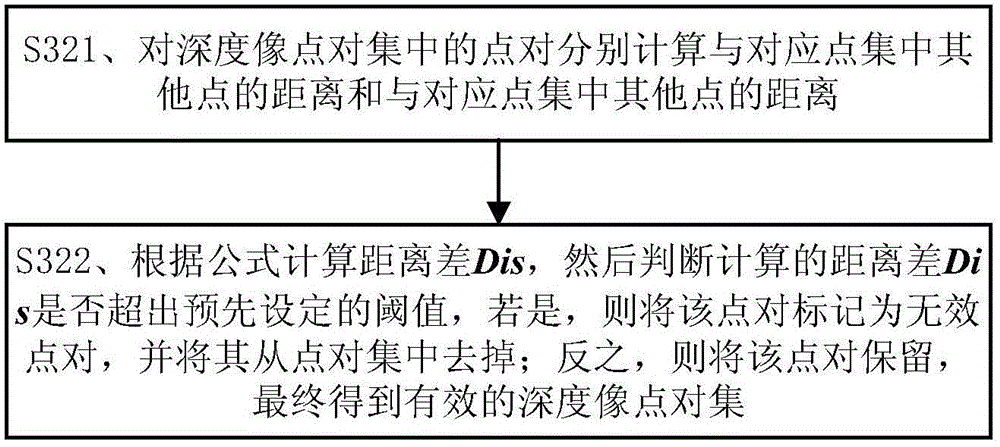

[0037] S3. Using the correspondence between the depth image and the grayscale image in each of the two fields of view, obtain the set of depth image point pairs corresponding to the set of grayscale image feature point pairs, then filter this set according to the principle that spatial features are invariant under rigid body transformation, removing invalid depth image point pairs so as to obtain an effective depth image poin...
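The filtering idea in step S3 can be illustrated with a minimal sketch. It assumes the depth image points have already been converted to 3D coordinates, and it uses one common form of the rigid-body invariance principle: the distance between two points is unchanged by rotation and translation. The function name `filter_rigid_consistent`, the tolerance `tol`, and the threshold `min_support` are hypothetical choices for illustration; the criterion actually used in the patent may differ.

```python
import math

def filter_rigid_consistent(pairs, tol=0.01, min_support=0.5):
    """Keep matched 3D point pairs that are consistent with a rigid motion.

    pairs       : list of (p, q), where p is a 3D point (x, y, z) from the
                  first field of view and q is its match in the second.
    tol         : assumed tolerance on the difference between distances,
                  in the same unit as the coordinates.
    min_support : assumed minimum fraction of the other pairs that must
                  agree with a pair for it to be kept.
    """
    n = len(pairs)
    kept = []
    for i in range(n):
        p_i, q_i = pairs[i]
        agree = 0
        for j in range(n):
            if i == j:
                continue
            p_j, q_j = pairs[j]
            # Under a rigid transformation, |p_i - p_j| equals |q_i - q_j|.
            d_p = math.dist(p_i, p_j)
            d_q = math.dist(q_i, q_j)
            if abs(d_p - d_q) <= tol:
                agree += 1
        # A pair is treated as valid when enough other pairs agree with it.
        if n > 1 and agree / (n - 1) >= min_support:
            kept.append(pairs[i])
    return kept
```

Calling `filter_rigid_consistent(pairs)` returns the subset of pairs whose pairwise distances are preserved across the two views. The check is quadratic in the number of pairs, which is usually acceptable for a few hundred feature matches; the voting threshold trades off tolerance to noise against the risk of keeping mismatches.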
