Wide-baseline visible light camera pose estimation method

A pose estimation method using visible-light cameras, applicable to computing, navigation computing tools, and special-purpose data processing. It addresses the difficulty of applying existing methods directly in complex outdoor environments, and achieves robust detection and matching as well as accurate calibration results.

Embodiment Construction

[0027] The present invention is further described below in conjunction with an embodiment:

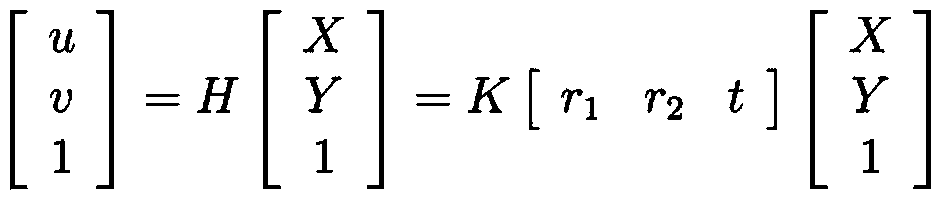

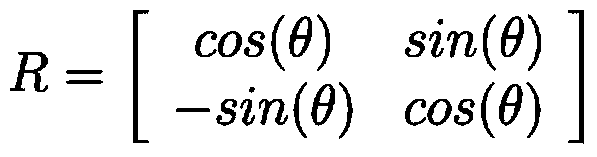

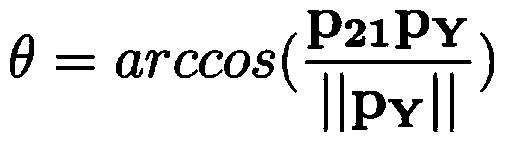

[0028] 1. Camera intrinsic parameter calibration

[0029] Zhang's calibration method is used to calibrate the intrinsic parameters of each camera in the landing navigation system, i.e., the focal length, the principal point coordinates, the skew angle, the distortion coefficients, and other parameters. This is a standard method for camera intrinsic calibration and is briefly described as follows: a black-and-white two-dimensional checkerboard serves as the intrinsic calibration target, and the target is photographed with the camera in 10-15 different poses, the resulting images being used for calibration; corner detection is performed on each calibration image, and the geometric layout of the checkerboard is used to establish the correspondence of each corner across the images of the different viewpoints; because all corners on the checkerb...
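In Zhang's method every checkerboard corner lies on the target plane Z = 0, so each calibration image is related to the target by a 3 × 3 homography H = λK[r1 r2 t]; the intrinsic matrix K is then solved in closed form from the constraints that the homographies of all views impose on it. The following is a minimal sketch of only the first step, estimating one view's homography from corner correspondences by least-squares DLT. It is not the patent's implementation: the function names, the 4 × 3 corner grid, the intrinsics and the pose are hypothetical, lens distortion is ignored, the observations are noise-free, and the centring and scaling of image points before solving is a common conditioning choice assumed here rather than taken from the source.

```python
import math

# Sketch of the per-view homography step of Zhang's method (hypothetical setup):
# a 4 x 3 corner grid with unit spacing, noise-free points, no distortion, h33 = 1.


def solve_linear(a, b):
    """Solve the square system a x = b by Gaussian elimination with partial pivoting."""
    n = len(a)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]  # augmented matrix
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[piv] = m[piv], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x


def apply_homography(h, x, y):
    """Map a target-plane point (x, y) through the 3 x 3 homography h."""
    w = h[2][0] * x + h[2][1] * y + h[2][2]
    return ((h[0][0] * x + h[0][1] * y + h[0][2]) / w,
            (h[1][0] * x + h[1][1] * y + h[1][2]) / w)


def plane_homography(k, yaw, t):
    """H = K [r1 r2 t] for a target rotated by `yaw` about the camera y axis, scaled so h33 = 1."""
    r1, r2 = [math.cos(yaw), 0.0, -math.sin(yaw)], [0.0, 1.0, 0.0]
    m = [[r1[i], r2[i], t[i]] for i in range(3)]  # columns r1, r2, t
    h = [[sum(k[i][p] * m[p][j] for p in range(3)) for j in range(3)] for i in range(3)]
    return [[v / h[2][2] for v in row] for row in h]


def estimate_homography(plane_pts, image_pts):
    """Least-squares DLT with h33 = 1 on centred, scaled image points; needs >= 4 points."""
    n = len(image_pts)
    mu = sum(u for u, _ in image_pts) / n
    mv = sum(v for _, v in image_pts) / n
    s = math.sqrt(2) * n / sum(math.hypot(u - mu, v - mv) for u, v in image_pts)
    rows, rhs = [], []
    for (x, y), (u, v) in zip(plane_pts, image_pts):
        un, vn = s * (u - mu), s * (v - mv)
        # un * (h31 x + h32 y + 1) = h11 x + h12 y + h13, and likewise for vn.
        rows += [[x, y, 1.0, 0.0, 0.0, 0.0, -un * x, -un * y],
                 [0.0, 0.0, 0.0, x, y, 1.0, -vn * x, -vn * y]]
        rhs += [un, vn]
    # Normal equations (A^T A) g = A^T b for the 8 unknown entries.
    ata = [[sum(r[i] * r[j] for r in rows) for j in range(8)] for i in range(8)]
    atb = [sum(r[i] * b for r, b in zip(rows, rhs)) for i in range(8)]
    g = solve_linear(ata, atb)
    hn = [g[0:3], g[3:6], [g[6], g[7], 1.0]]
    # Undo the normalization: H = T^-1 Hn, with T^-1 = [[1/s, 0, mu], [0, 1/s, mv], [0, 0, 1]].
    return [[hn[0][c] / s + mu * hn[2][c] for c in range(3)],
            [hn[1][c] / s + mv * hn[2][c] for c in range(3)],
            hn[2]]


# Hypothetical intrinsics: fx, skew, cx in the first row; fy, cy in the second (pixels).
K = [[800.0, 0.5, 320.0], [0.0, 780.0, 240.0], [0.0, 0.0, 1.0]]
H_TRUE = plane_homography(K, yaw=0.3, t=[-1.5, -1.0, 8.0])
CORNERS = [(float(i), float(j)) for j in range(3) for i in range(4)]
OBSERVED = [apply_homography(H_TRUE, x, y) for x, y in CORNERS]
H_EST = estimate_homography(CORNERS, OBSERVED)
print(max(abs(a - b) for ra, rb in zip(H_EST, H_TRUE) for a, b in zip(ra, rb)))
```

With noise-free corners the estimated homography should match the synthetic one to within floating-point error. In a real calibration the detected corners are noisy, so the closed-form result from all 10-15 views is normally refined by nonlinear minimization of the reprojection error, which also estimates the distortion coefficients.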
