Indoor passive navigation and positioning system and indoor passive navigation and positioning method

A navigation and positioning technology based on positioning points, applied in the fields of image processing and robotics, which can address problems such as changes in the environment.

Examples

Embodiment 1

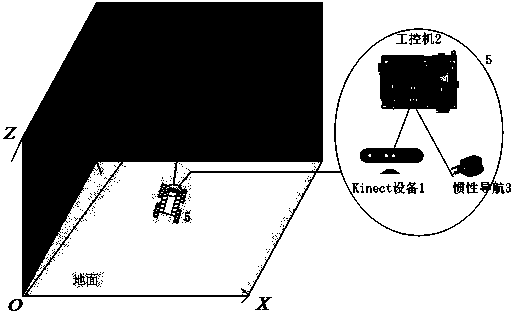

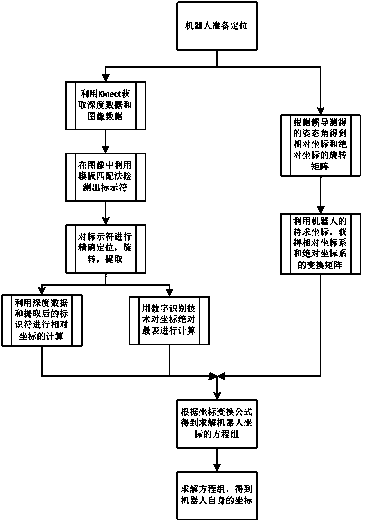

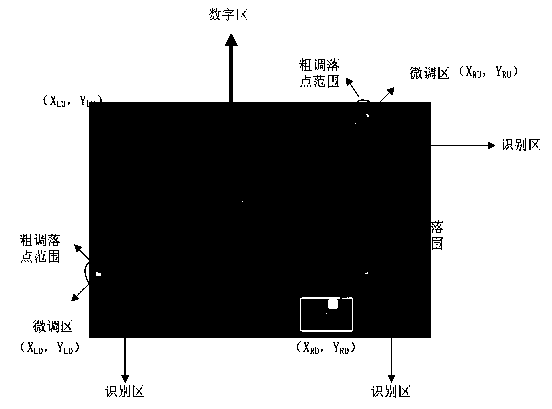

[0046] Referring to Figure 1 and Figure 2, the indoor passive navigation and positioning system of this embodiment includes a depth camera Kinect (1) and an xsens inertial navigation device (2), and is characterized in that: the robot (5) operates in an indoor environment; the depth camera Kinect (1) and the xsens inertial navigation device (2) are integrated inside the robot (5) and are connected to the industrial computer SBC84823 (2) inside the robot (5) through two USB serial ports. The industrial computer (2) processes the image data and depth data returned by the depth camera Kinect (1) and the attitude angle data of the robot (5) returned by the xsens inertial navigation device (2). As shown in Figure 3, the identifier (4) is a special digital code that contains the location information of the identifier and is captured by the Kinect (1) as image data. The code consists of two parts, a matching area and a digital area: the matching area contains three matching templates composed of salient feature...
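The identifier described above can be modelled as a small data structure with a matching area and a digital area. The sketch below is hypothetical: the text is truncated before it specifies how the digital area encodes location, so it assumes the digital area is a string of decimal digits holding a fixed-width X coordinate followed by a fixed-width Y coordinate, both in centimetres. The names `Identifier`, `decode_location`, and `FIELD_WIDTH` (4 digits) are illustrative only.

```python
from dataclasses import dataclass
from typing import Tuple

# Hypothetical encoding: each coordinate is stored as 4 decimal digits, in centimetres.
FIELD_WIDTH = 4
DIGITS = "0123456789"


@dataclass(frozen=True)
class Identifier:
    """Hypothetical model of the identifier (4): a matching area and a digital area."""
    matching_templates: Tuple[str, str, str]  # labels of the three templates in the matching area
    digital_area: str                         # digit string read from the digital area


def decode_location(marker: Identifier) -> Tuple[float, float]:
    """Decode the identifier's (x, y) location in metres from its digital area.

    Assumes the digital area is exactly two fixed-width fields: x first, then y.
    """
    digits = marker.digital_area
    if len(digits) != 2 * FIELD_WIDTH or any(c not in DIGITS for c in digits):
        raise ValueError(f"unexpected digital area: {digits!r}")
    x_cm = int(digits[:FIELD_WIDTH])
    y_cm = int(digits[FIELD_WIDTH:])
    return x_cm / 100.0, y_cm / 100.0
```

For example, under this assumed encoding a digital area reading `"01250340"` decodes to the location (1.25, 3.4) metres. Keeping the location inside the code itself is what makes the identifier "passive": the robot needs no external map lookup once the digits are read.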

Embodiment 2

[0048] Referring to Figures 1 to 4, the indoor passive navigation and positioning method uses the above system for positioning. The specific steps are as follows:

[0049] Step 1: The robot moves through the indoor environment, uses the Kinect (1) to collect image data and depth data inside the building, and transmits the data to the industrial computer (2);
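Step 1 only gathers data; the positioning computation that follows is not reproduced in this excerpt. To illustrate how the three data sources could be combined, the sketch below assumes a planar (2-D) model: the identifier's world location is known (for example, decoded from its digital area), the Kinect depth data gives the horizontal range to the identifier, the identifier's bearing relative to the robot's heading is known from its position in the image, and the yaw angle from the xsens attitude data gives the robot's heading in the world frame. The function `estimate_robot_position` and this geometric model are hypothetical and are not presented as the patent's method.

```python
import math
from typing import Tuple


def estimate_robot_position(
    marker_xy: Tuple[float, float],
    range_m: float,
    bearing_rad: float,
    yaw_rad: float,
) -> Tuple[float, float]:
    """Hypothetical 2-D robot position estimate from one observed identifier.

    marker_xy   -- world (x, y) of the identifier, e.g. decoded from its digital area
    range_m     -- horizontal distance from robot to identifier, e.g. from Kinect depth data
    bearing_rad -- angle of the identifier relative to the robot heading (counter-clockwise positive)
    yaw_rad     -- robot heading in the world frame, e.g. from the xsens attitude angles
    """
    if range_m < 0:
        raise ValueError("range must be non-negative")
    # World-frame direction pointing from the robot toward the identifier.
    theta = yaw_rad + bearing_rad
    mx, my = marker_xy
    # The robot lies range_m away from the identifier, opposite to that direction.
    return mx - range_m * math.cos(theta), my - range_m * math.sin(theta)
```

For example, an identifier at (5, 2) seen straight ahead (bearing 0) at 3 m with a yaw of 0 places the robot at (2, 2). A single observation fixes position only if yaw is trusted; fusing several identifiers or filtering over time would be a natural extension under the same assumptions.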
