Server-side multi-threaded parallel data processing method and load balancing method

A data processing and server-side technology in the field of computer networks. It addresses problems such as inconsistent data processing times and idle threads in the data processing thread pool, which degrade system performance. Its stated benefits are simple calculation, no significant consumption of computer resources, and strong application value.


Embodiment Construction

[0025] The technical solution of the present invention will be described in detail below in conjunction with the drawings:

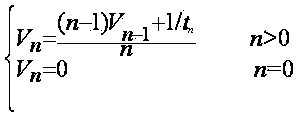

[0026] The idea of the present invention is that, when data is distributed for multi-threaded parallel processing, each data processing thread is allocated an appropriate amount of data according to its relative idle rate. This improves the system's capability for parallel processing and intensive computation on massive data.
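The excerpt does not give a formula for the relative idle rate, so the following Python sketch assumes one purely for illustration: a thread's idleness is taken as 1 / (1 + pending items), normalized across the pool, and a batch is split in proportion to these rates with the largest-remainder method so the shares always add up to the batch size. The functions `relative_idle_rates` and `allocate` are hypothetical names, not taken from the patent.

```python
from typing import List


def relative_idle_rates(pending: List[int]) -> List[float]:
    """Hypothetical relative idle rate: fewer pending items means a higher rate.

    Assumption: idleness = 1 / (1 + pending items), normalized so the
    rates sum to 1. The patent excerpt does not state its actual formula.
    """
    weights = [1.0 / (1 + p) for p in pending]
    total = sum(weights)
    return [w / total for w in weights]


def allocate(batch_size: int, pending: List[int]) -> List[int]:
    """Split batch_size items across threads in proportion to their idle rates.

    The largest-remainder method guarantees the shares sum to batch_size.
    """
    if not pending:
        return []
    rates = relative_idle_rates(pending)
    exact = [batch_size * r for r in rates]
    shares = [int(x) for x in exact]  # floor, since all values are non-negative
    leftover = batch_size - sum(shares)
    # Hand the remaining items to the threads with the largest fractional parts.
    order = sorted(range(len(exact)), key=lambda i: exact[i] - shares[i], reverse=True)
    for i in order[:leftover]:
        shares[i] += 1
    return shares


if __name__ == "__main__":
    # Thread 0 is idle, thread 1 has 1 queued item, thread 2 has 3 queued items.
    print(allocate(100, [0, 1, 3]))  # [57, 29, 14]
```

The idlest thread receives the largest share, and a thread with a long backlog receives proportionally less, which is the balancing effect the paragraph describes.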

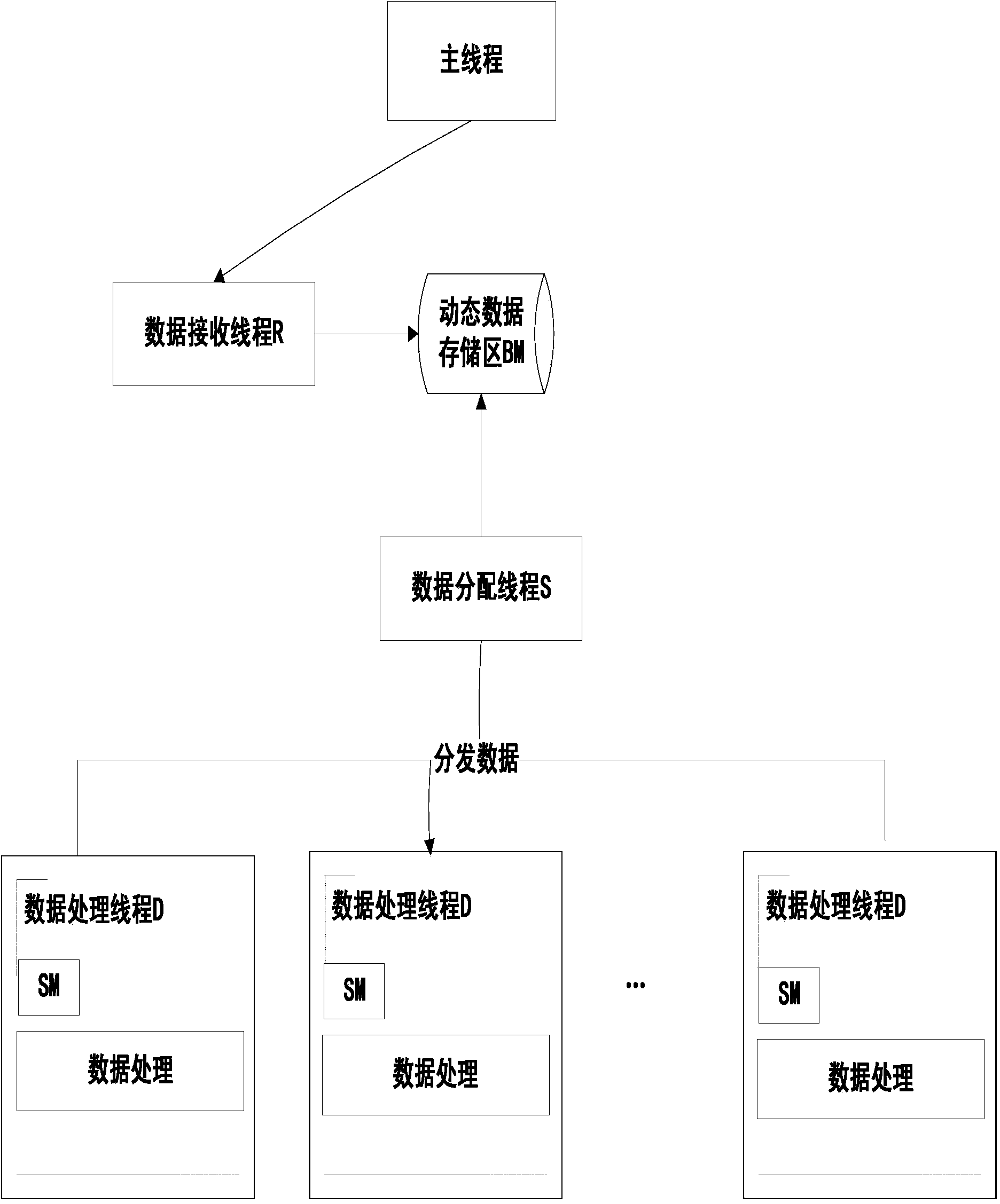

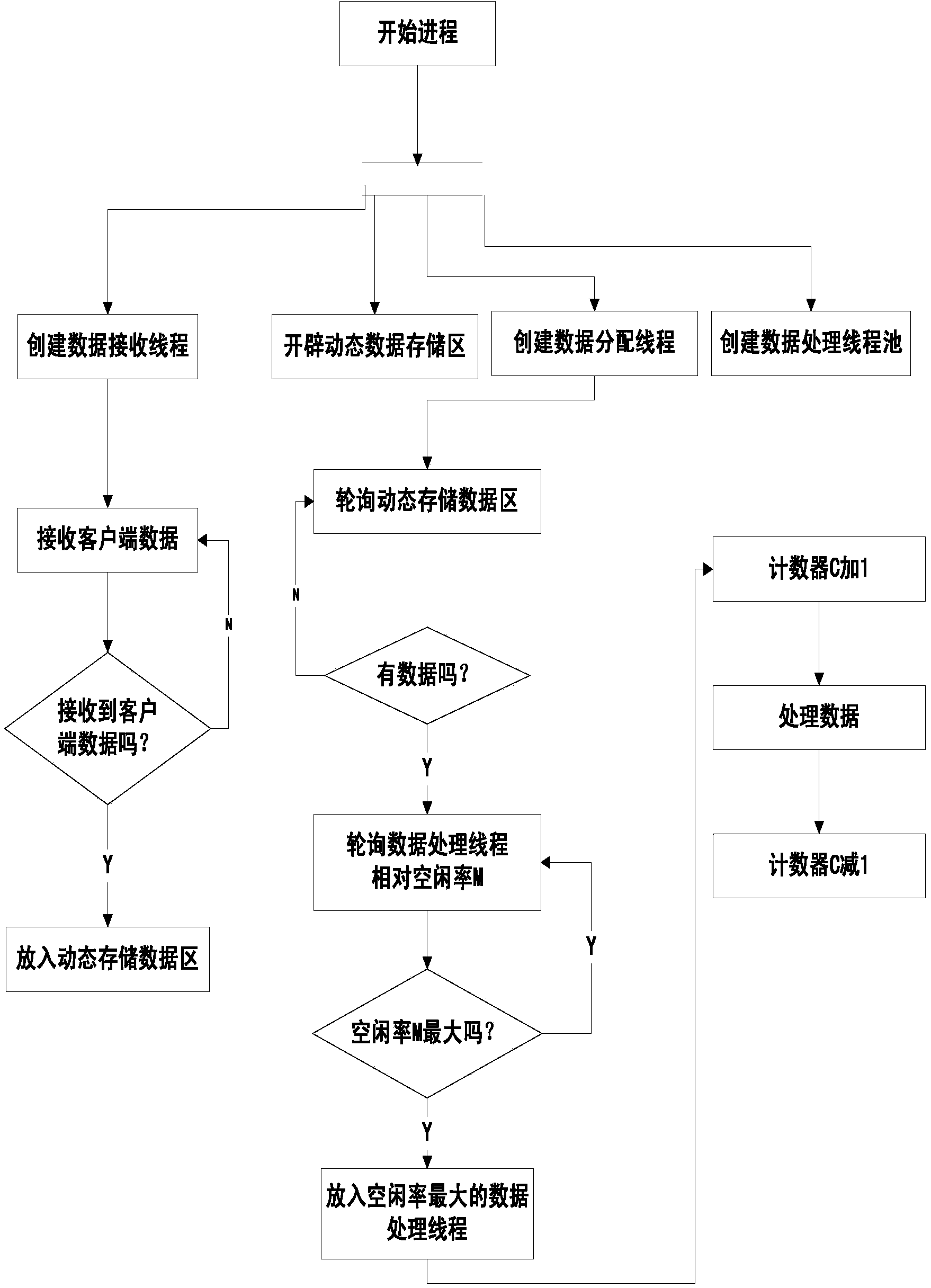

[0027] Figure 1 shows an example of server-side multi-threaded parallel data processing. In this example, when the process starts, the server starts a data receiving thread R, creates a thread pool containing multiple data processing threads D, and starts a data distribution thread S. At the same time, it allocates a dynamic storage area BM and, for each data processing thread D in the thread pool, a dynamic storage area SM and a counter C with an initial value of 0. The data receiving thread R is only responsible for receivi...
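The thread layout of Figure 1 can be sketched in Python as follows. The names R, S, D, BM, SM and C come from the text; everything else is an assumption, because the paragraph is cut off before it describes how S selects a thread or how C is updated. Here C is assumed to count items handed to a thread but not yet processed, and S sends each item to the thread with the smallest C, a simplified stand-in for the relative idle rate. The class and function names are hypothetical.

```python
import queue
import threading
from typing import Callable, Iterable, List

_STOP = object()  # sentinel marking the end of the data stream


class ProcessingThread:
    """Data processing thread D with its own storage area SM and counter C."""

    def __init__(self, process: Callable[[int], int], results: "queue.Queue[int]") -> None:
        self.sm: "queue.Queue[object]" = queue.Queue()  # dynamic storage area SM
        self.c = 0  # counter C, initial value 0; assumed to count unfinished items
        self.lock = threading.Lock()
        self.process = process
        self.results = results
        self.thread = threading.Thread(target=self.run)

    def run(self) -> None:
        while True:
            item = self.sm.get()
            if item is _STOP:
                return
            self.results.put(self.process(item))
            with self.lock:
                self.c -= 1  # one fewer pending item for this thread


def receiving_thread(source: Iterable[int], bm: "queue.Queue[object]") -> None:
    """R: only receives data and stores it in the shared area BM."""
    for item in source:
        bm.put(item)
    bm.put(_STOP)


def distribution_thread(bm: "queue.Queue[object]", pool: List[ProcessingThread]) -> None:
    """S: moves each item from BM to the SM of the least-loaded thread (assumed policy)."""
    while True:
        item = bm.get()
        if item is _STOP:
            for d in pool:
                d.sm.put(_STOP)  # tell every D to finish
            return
        target = min(pool, key=lambda d: d.c)  # lowest counter = most idle (assumption)
        with target.lock:
            target.c += 1
        target.sm.put(item)


def run_pipeline(source: Iterable[int], n_threads: int,
                 process: Callable[[int], int]) -> List[int]:
    bm: "queue.Queue[object]" = queue.Queue()  # dynamic storage area BM
    results: "queue.Queue[int]" = queue.Queue()
    pool = [ProcessingThread(process, results) for _ in range(n_threads)]
    for d in pool:
        d.thread.start()
    r = threading.Thread(target=receiving_thread, args=(source, bm))
    s = threading.Thread(target=distribution_thread, args=(bm, pool))
    r.start()
    s.start()
    r.join()
    s.join()
    for d in pool:
        d.thread.join()
    out = []
    while not results.empty():
        out.append(results.get())
    return sorted(out)  # threads finish in no fixed order
```

For example, `run_pipeline(range(10), 3, lambda x: x * x)` returns the squares 0 through 81 in sorted order. Keeping R limited to receiving and S limited to distribution means the load-balancing decision lives in one place and can be swapped for a richer idle-rate rule without touching the workers.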
