Data caching method, cache and computer system

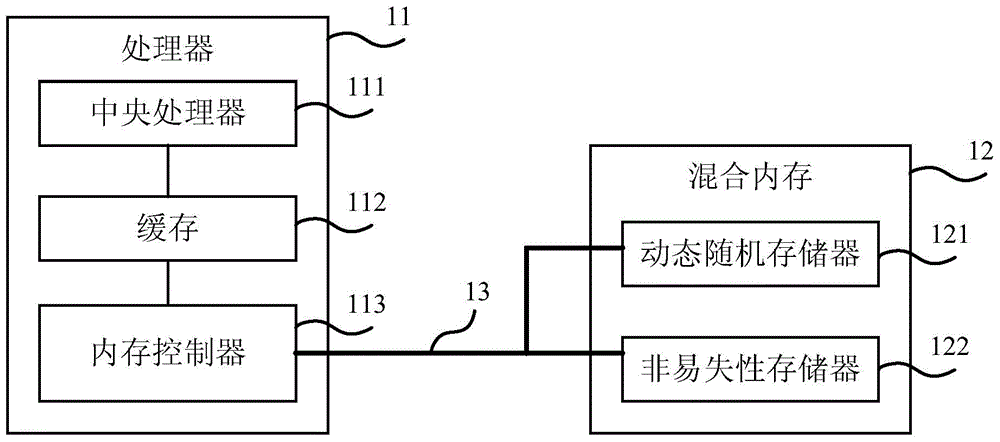

A data caching technique, applied in the storage field, that addresses the long read and write latency of NVM, reducing access latency and improving access efficiency.


AI Technical Summary

Problems solved by technology

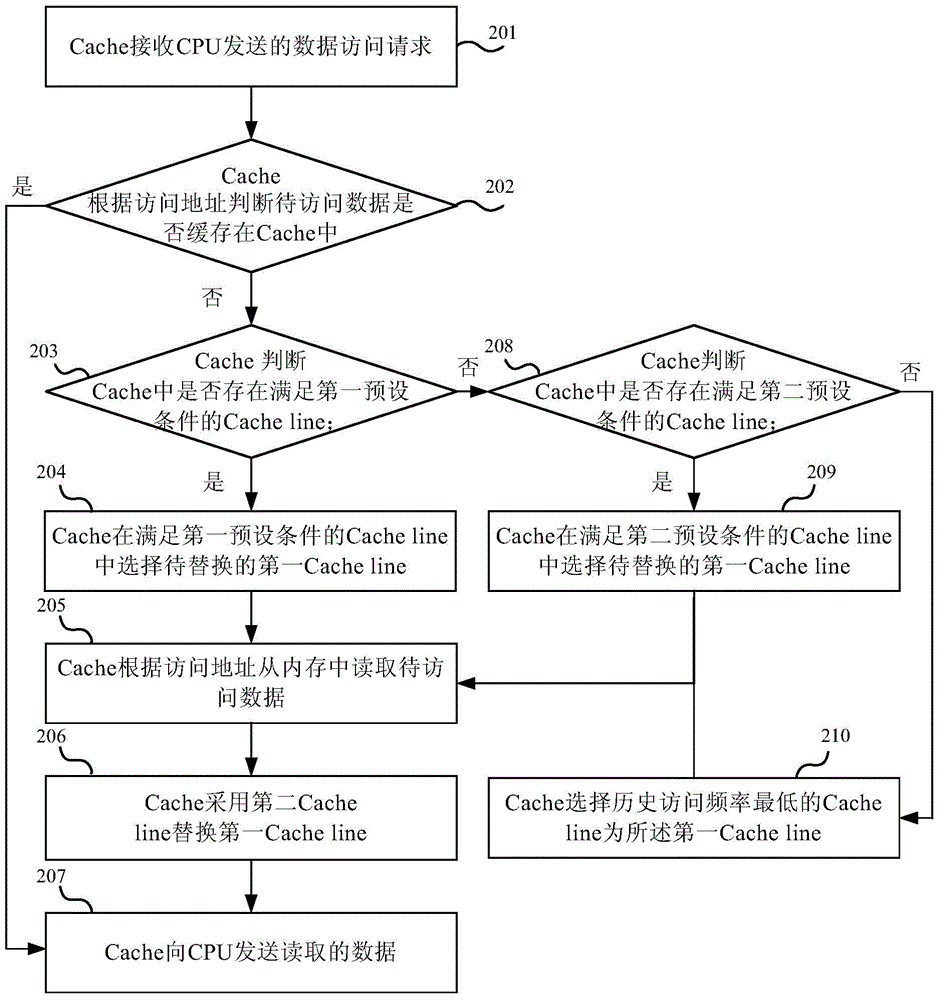

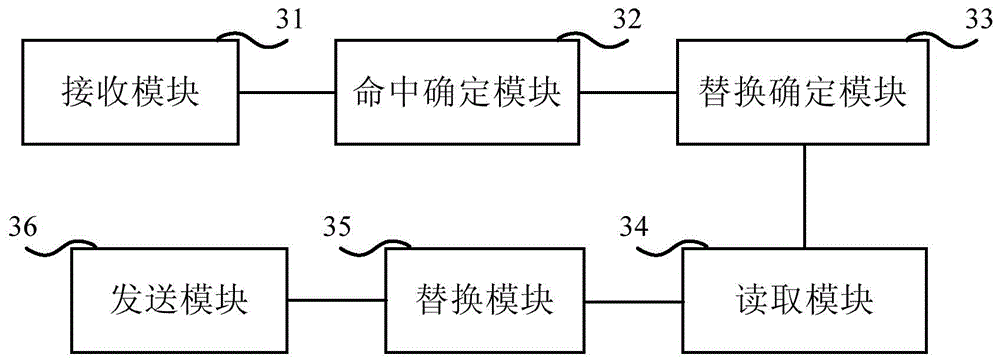

Method used


Examples

Example 1

[0085] Example 1: The Cache searches locally for the requested data and does not find it, which is a miss. The Cache then examines the first 5 Cachelines of the LRU linked list and determines from their location bits that none of them has DRAM as its memory type. The Cache next checks the Modify bits of the first 3 Cachelines in the LRU linked list and finds that the Modify bit of the second of these Cachelines is 0, meaning it is clean; this Cacheline is selected as the first Cacheline to be replaced. The Cache may read the Cacheline containing the data whose access address is 0x08000000 into the Cache to replace the first Cacheline to be replaced, and return the read data to the CPU. Because the Cache can determine from the access address (0x08000000) that the data is stored in DRAM, the newly read Cacheline, referred to as the second Cacheline, is added to the end of the LRU linked list with its location bit set to 0 (indicating that the second Cacheline comes from DRAM), a...
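The victim selection walked through in Example 1 can be sketched roughly as follows. This is a minimal illustration under stated assumptions, not the patented implementation: the names `Cacheline`, `pick_victim`, `handle_miss`, and `is_dram` are hypothetical, the value 1 for an NVM location bit is assumed, the choice to evict a DRAM-backed line when one is found among the first 5 entries is inferred from the example rather than stated in it, and the fallback when no clean line is found is a guess because the excerpt is truncated.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional

DRAM = 0  # location bit value for DRAM, as in Example 1
NVM = 1   # assumed value for NVM (not stated in the excerpt)


@dataclass
class Cacheline:
    tag: int       # address of the cached block (hypothetical field)
    location: int  # location bit: 0 means the line came from DRAM
    modify: int    # Modify bit: 0 means clean, 1 means modified


def pick_victim(lru: List[Cacheline], m: int = 5, n: int = 3) -> int:
    """Return the index (head of the LRU list first) of the line to replace."""
    # Step 1: look for a DRAM-backed line among the first m entries
    # (assumed to be preferred, since Example 1 only moves on when none is found).
    for i, line in enumerate(lru[:m]):
        if line.location == DRAM:
            return i
    # Step 2: otherwise look for a clean line among the first n entries.
    for i, line in enumerate(lru[:n]):
        if line.modify == 0:
            return i
    # Assumed fallback, not covered by the excerpt: evict the LRU head.
    return 0


def handle_miss(
    lru: List[Cacheline],
    addr: int,
    is_dram: Callable[[int], bool],
    m: int = 5,
    n: int = 3,
) -> Optional[Cacheline]:
    """Replace one line on a miss and append the new line at the LRU tail."""
    victim = lru.pop(pick_victim(lru, m, n)) if lru else None
    location = DRAM if is_dram(addr) else NVM
    lru.append(Cacheline(tag=addr, location=location, modify=0))
    return victim


# Mirror Example 1: five NVM lines, only the second one is clean.
lru_list = [Cacheline(tag=t, location=NVM, modify=mod)
            for t, mod in [(0xA0, 1), (0xB0, 0), (0xC0, 1), (0xD0, 1), (0xE0, 1)]]
# Hypothetical address map: anything below 0x10000000 is treated as DRAM.
evicted = handle_miss(lru_list, 0x08000000, is_dram=lambda a: a < 0x10000000)
```

In this sketch the second line in the list is evicted, and the newly read line ends up at the tail of the list with its location bit set to 0. The window sizes 5 and 3 are taken from the example and exposed as the parameters `m` and `n`.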
