Patents

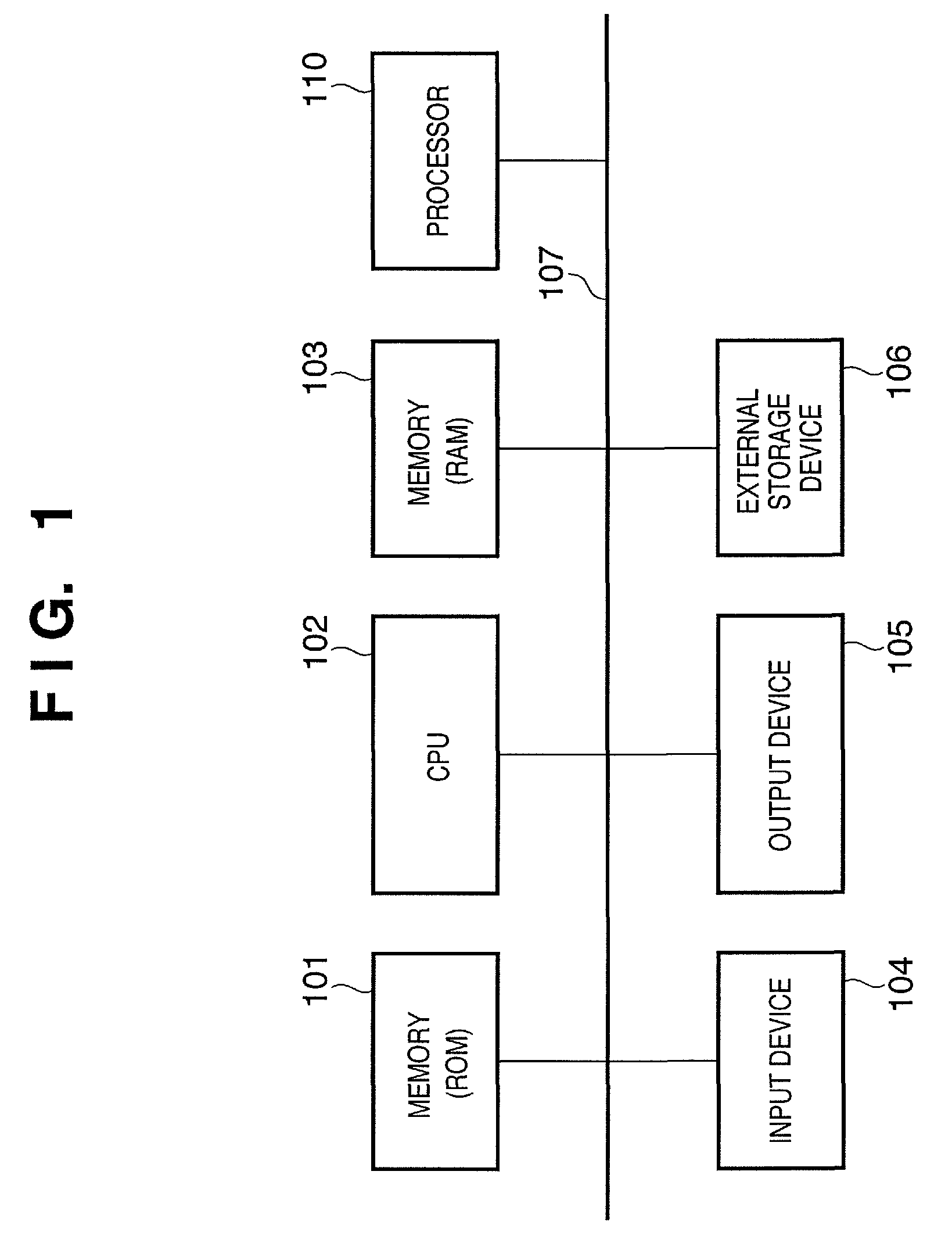

Literature

56 results about how to "Reduce cache size" patented technology

Efficacy Topic

Property

Owner

Technical Advancement

Application Domain

Technology Topic

Technology Field Word

Patent Country/Region

Patent Type

Patent Status

Application Year

Inventor

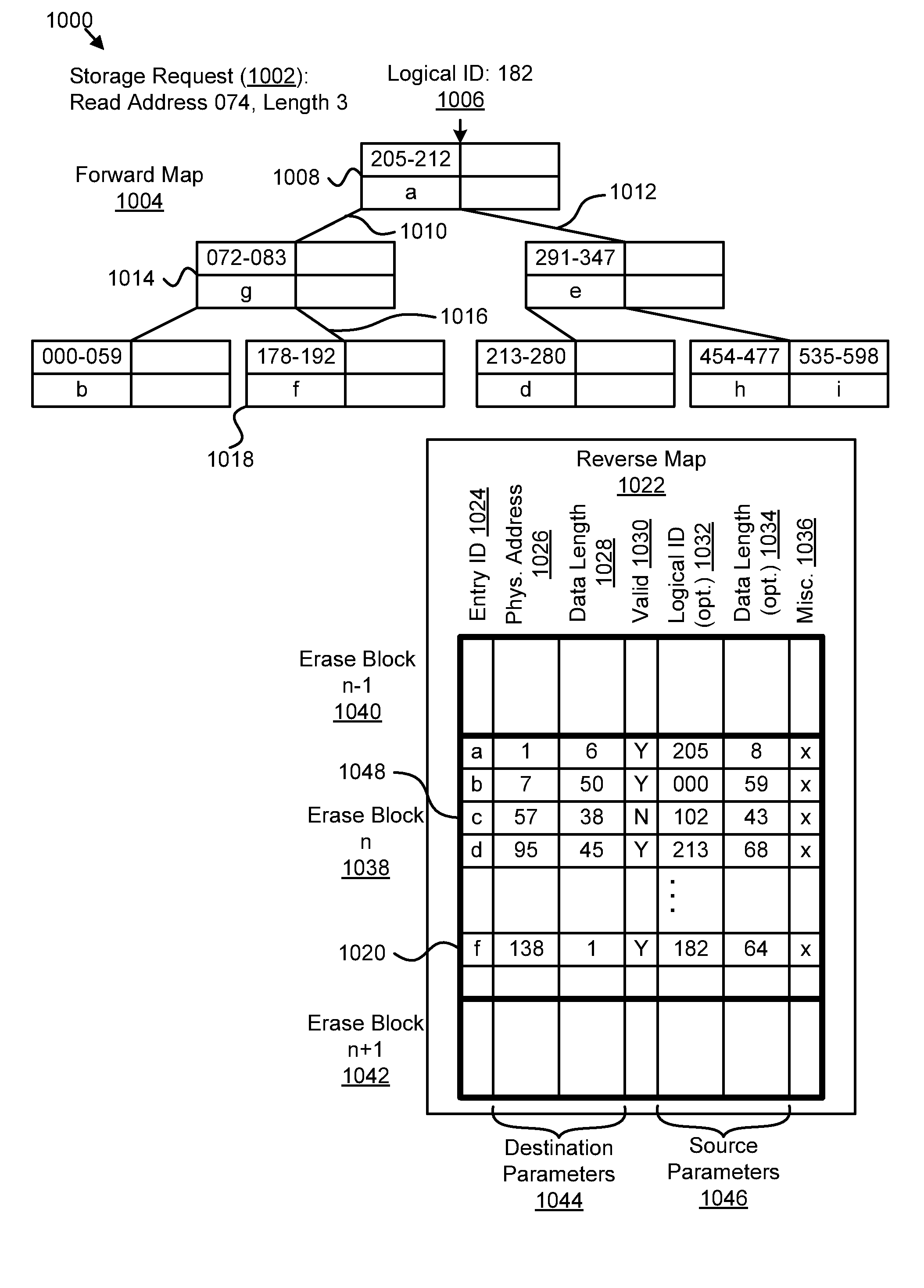

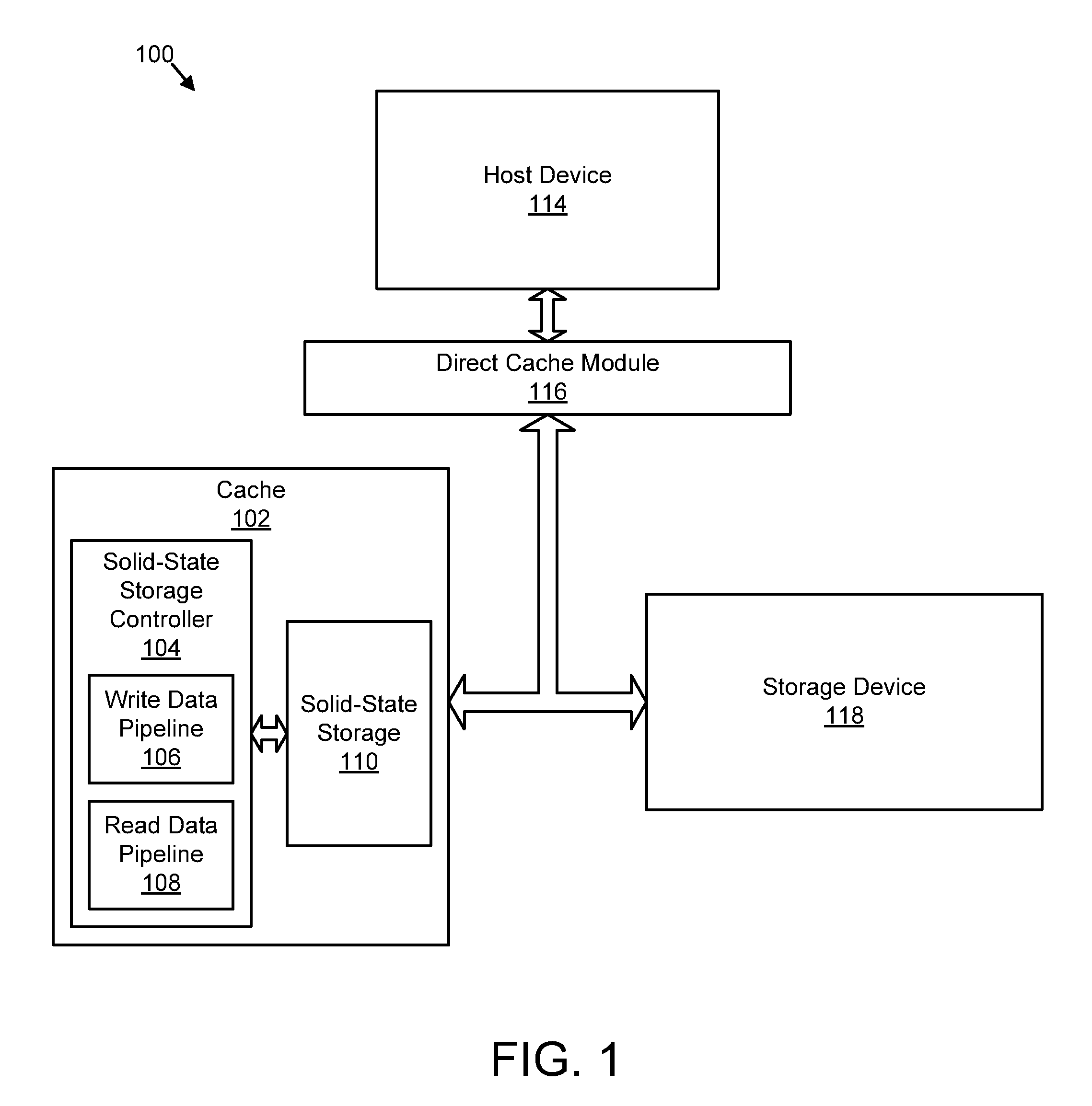

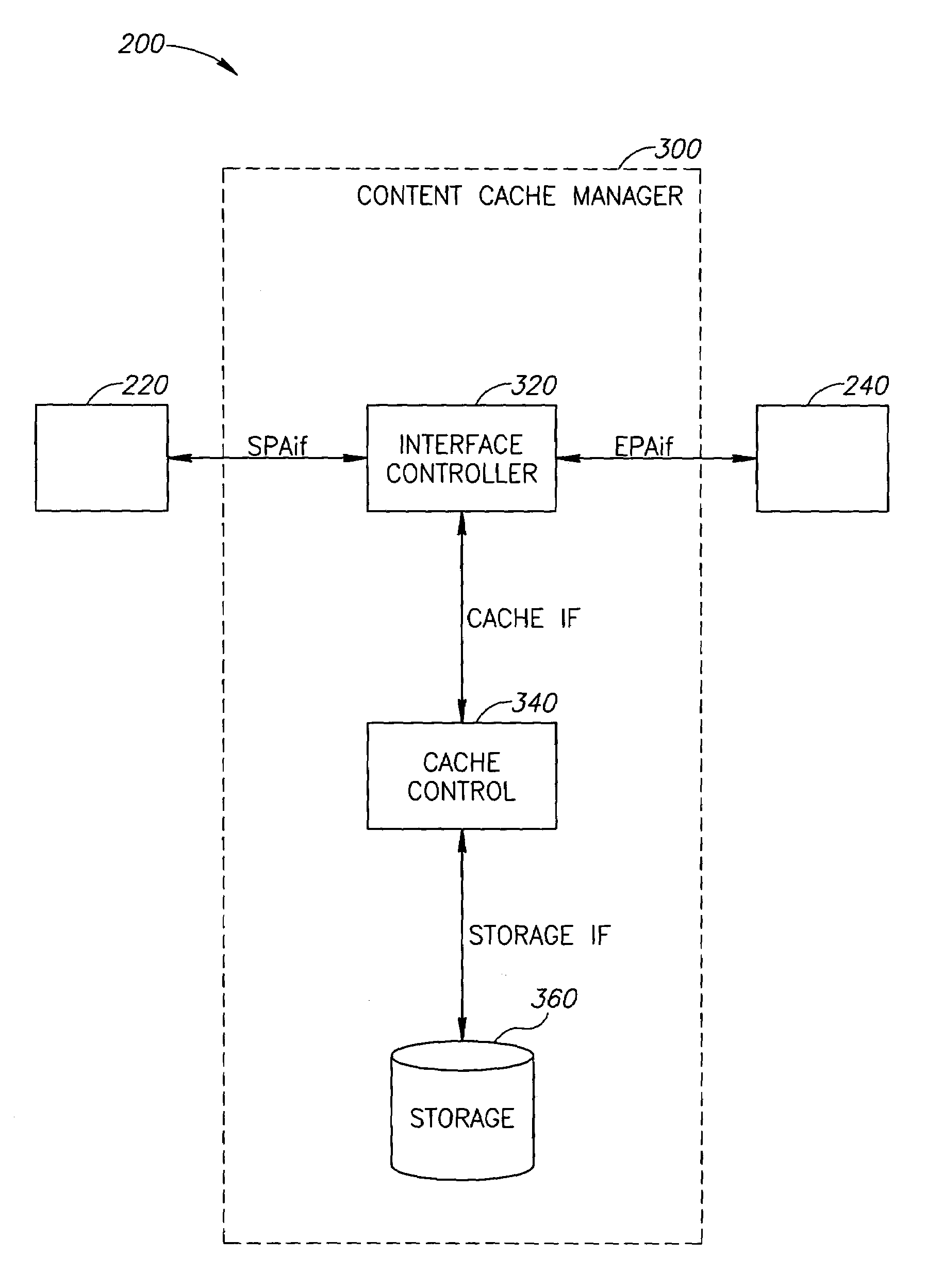

Apparatus, system, and method for caching data

Active | US20120210041A1 | Effective caching | Minimize cache collision | Memory architecture accessing/allocation | Servers | Solid-state storage | Logical block addressing

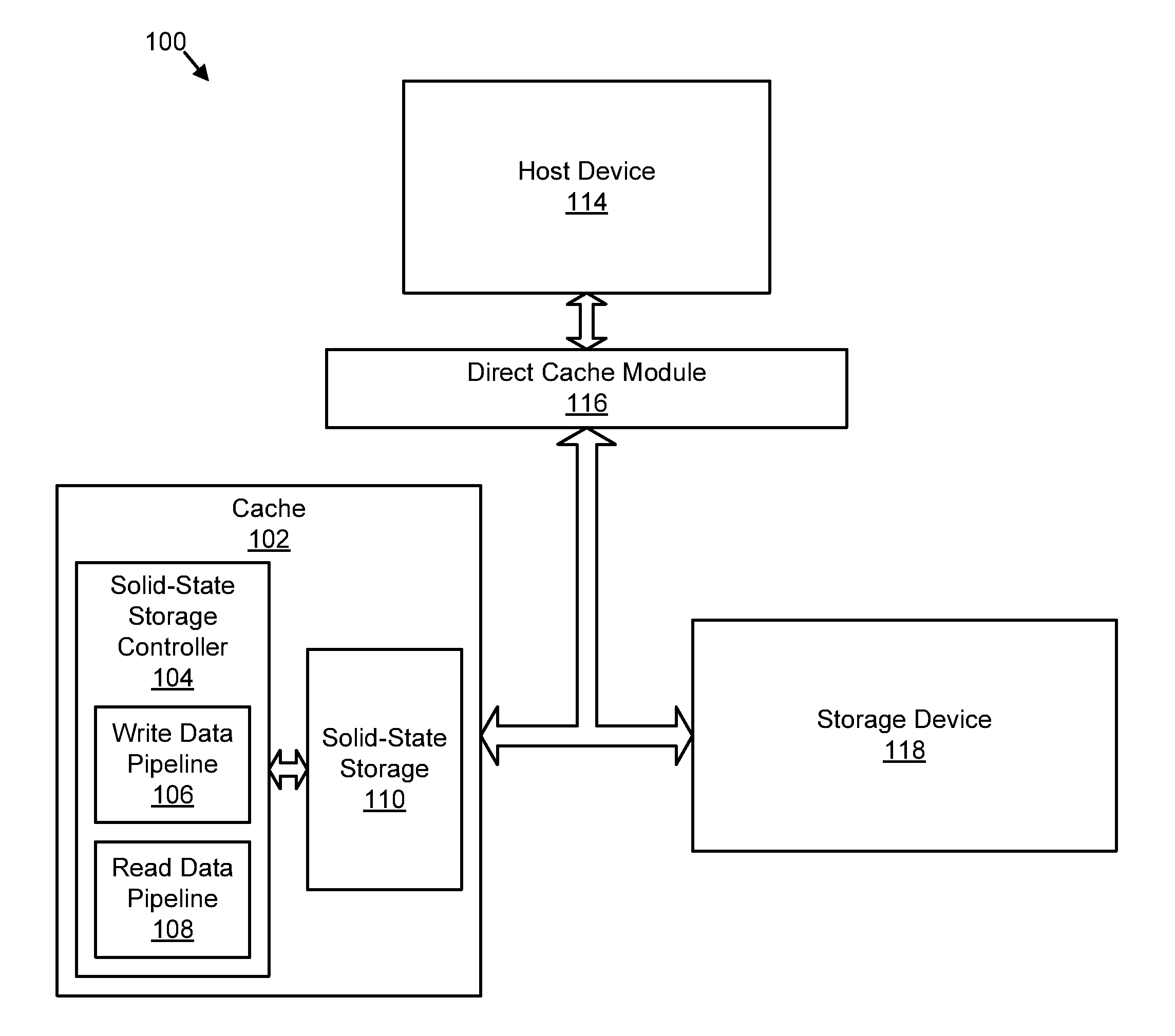

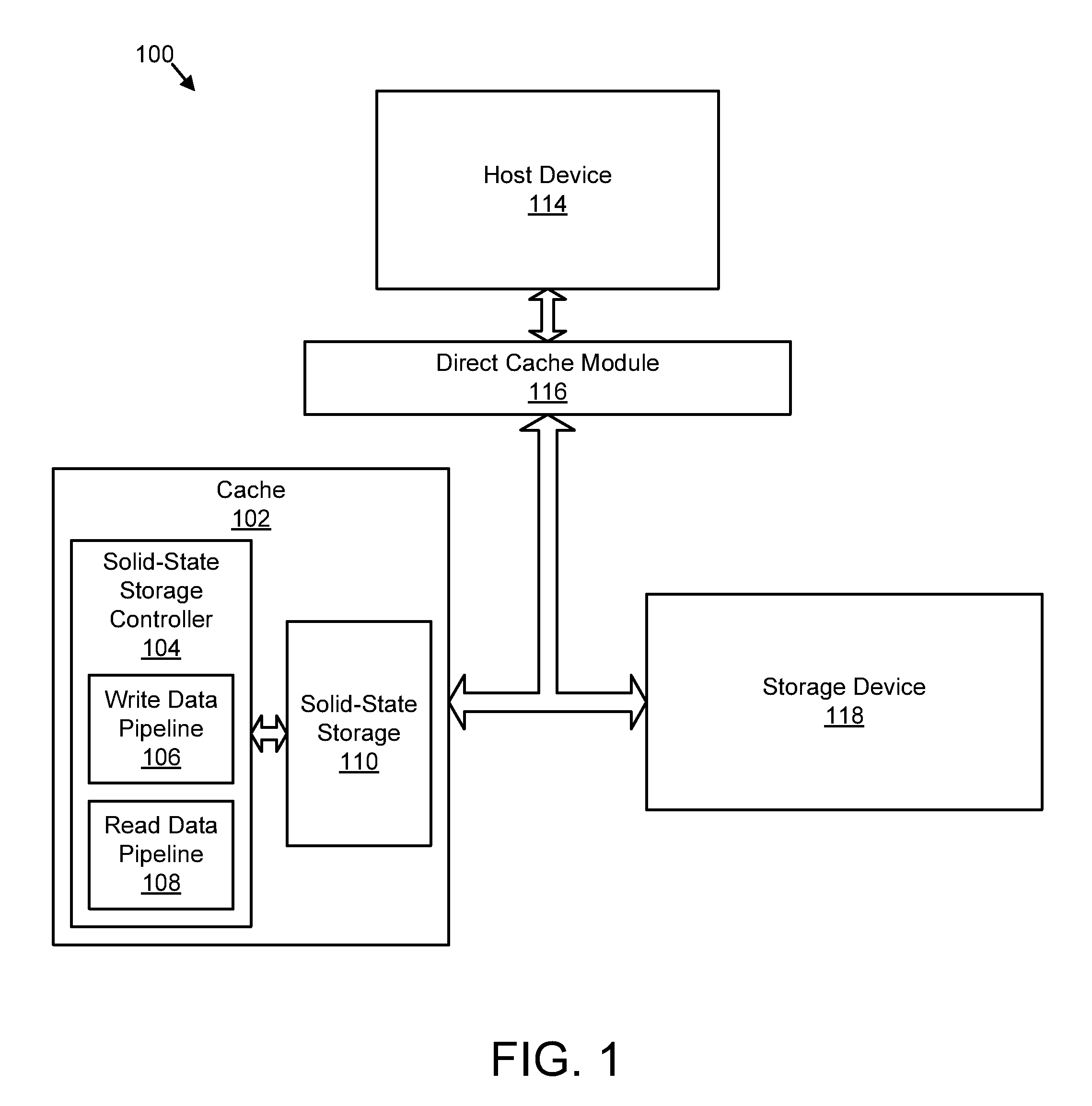

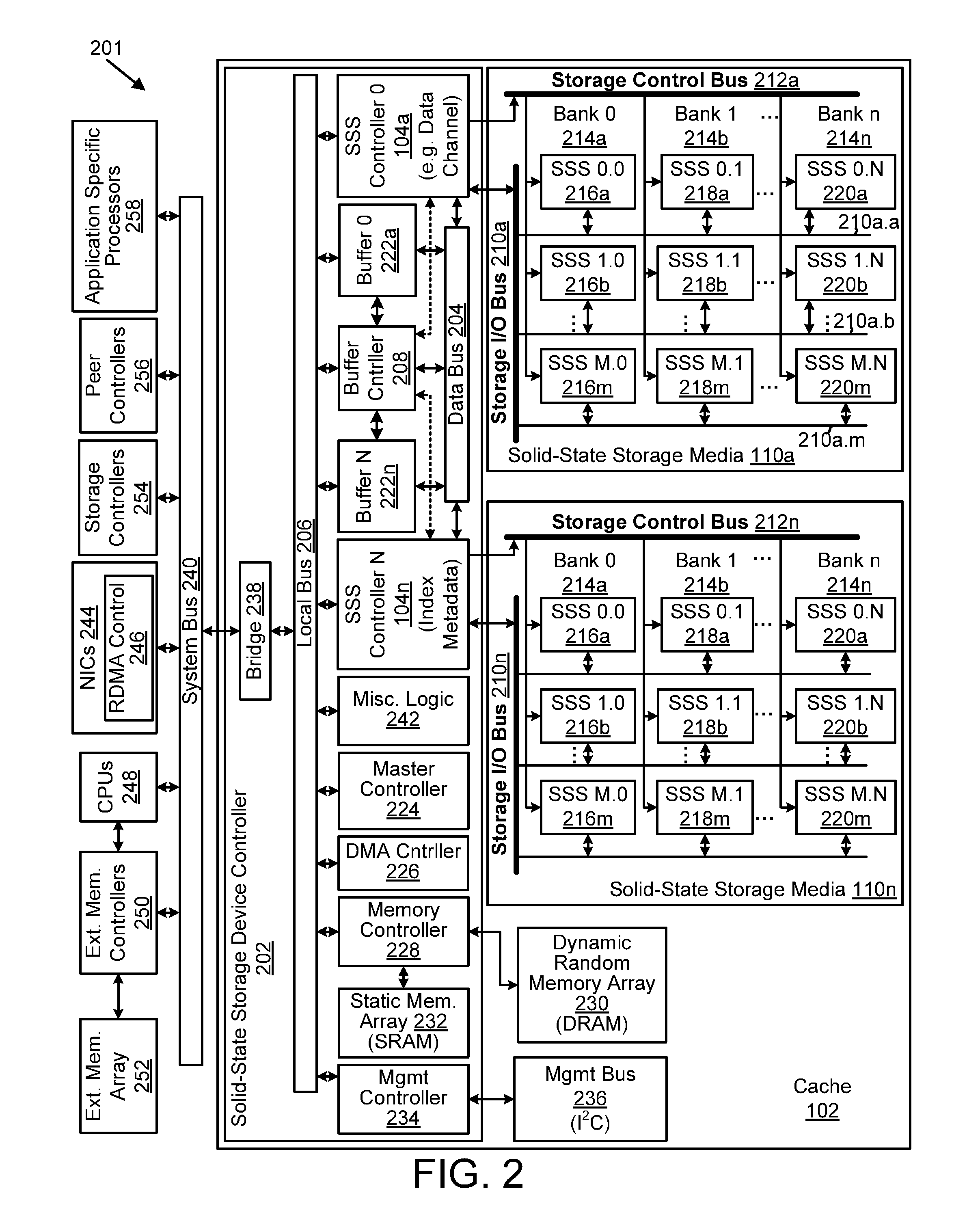

An apparatus, system, and method are disclosed for caching data. A storage request module detects an input/output (“I/O”) request for a storage device cached by solid-state storage media of a cache. A direct mapping module references a single mapping structure to determine that the cache comprises data of the I/O request. The single mapping structure maps each logical block address of the storage device directly to a logical block address of the cache. The single mapping structure maintains a fully associative relationship between logical block addresses of the storage device and physical storage addresses on the solid-state storage media. A cache fulfillment module satisfies the I/O request using the cache in response to the direct mapping module determining that the cache comprises at least one data block of the I/O request.

Owner:SANDISK TECH LLC
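
The direct-mapping lookup described above can be illustrated with a minimal Python sketch. It assumes a toy model in which one dictionary plays the role of the single mapping structure (storage-device LBA to cache LBA) and another dictionary stands in for the solid-state media; the class and method names are hypothetical, and eviction and the physical-address layer are not modeled.

```python
from __future__ import annotations


class DirectMapCache:
    """Toy cache whose only index is one map from storage LBA to cache LBA."""

    def __init__(self, capacity: int) -> None:
        self.capacity = capacity
        self.lba_map: dict[int, int] = {}  # single mapping structure: storage LBA -> cache LBA
        self.media: dict[int, bytes] = {}  # stand-in for the solid-state media: cache LBA -> block

    def insert(self, storage_lba: int, data: bytes) -> None:
        cache_lba = self.lba_map.get(storage_lba)
        if cache_lba is None:
            if len(self.lba_map) >= self.capacity:
                raise RuntimeError("cache full; eviction is not modeled in this sketch")
            # Fully associative: any free cache location may hold any storage LBA.
            cache_lba = len(self.lba_map)
            self.lba_map[storage_lba] = cache_lba
        self.media[cache_lba] = data

    def cached_blocks(self, storage_lbas: list[int]) -> list[int]:
        """Direct-mapping check: which blocks of the request are in the cache."""
        return [lba for lba in storage_lbas if lba in self.lba_map]

    def read(self, storage_lbas: list[int], backing: dict[int, bytes]) -> list[bytes]:
        """Serve cached blocks from the cache and the remainder from the backing device."""
        return [
            self.media[self.lba_map[lba]] if lba in self.lba_map else backing[lba]
            for lba in storage_lbas
        ]
```

Because any storage LBA can be placed in any free cache location, the single dictionary lookup is the whole associativity check; there is no set index to compute.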

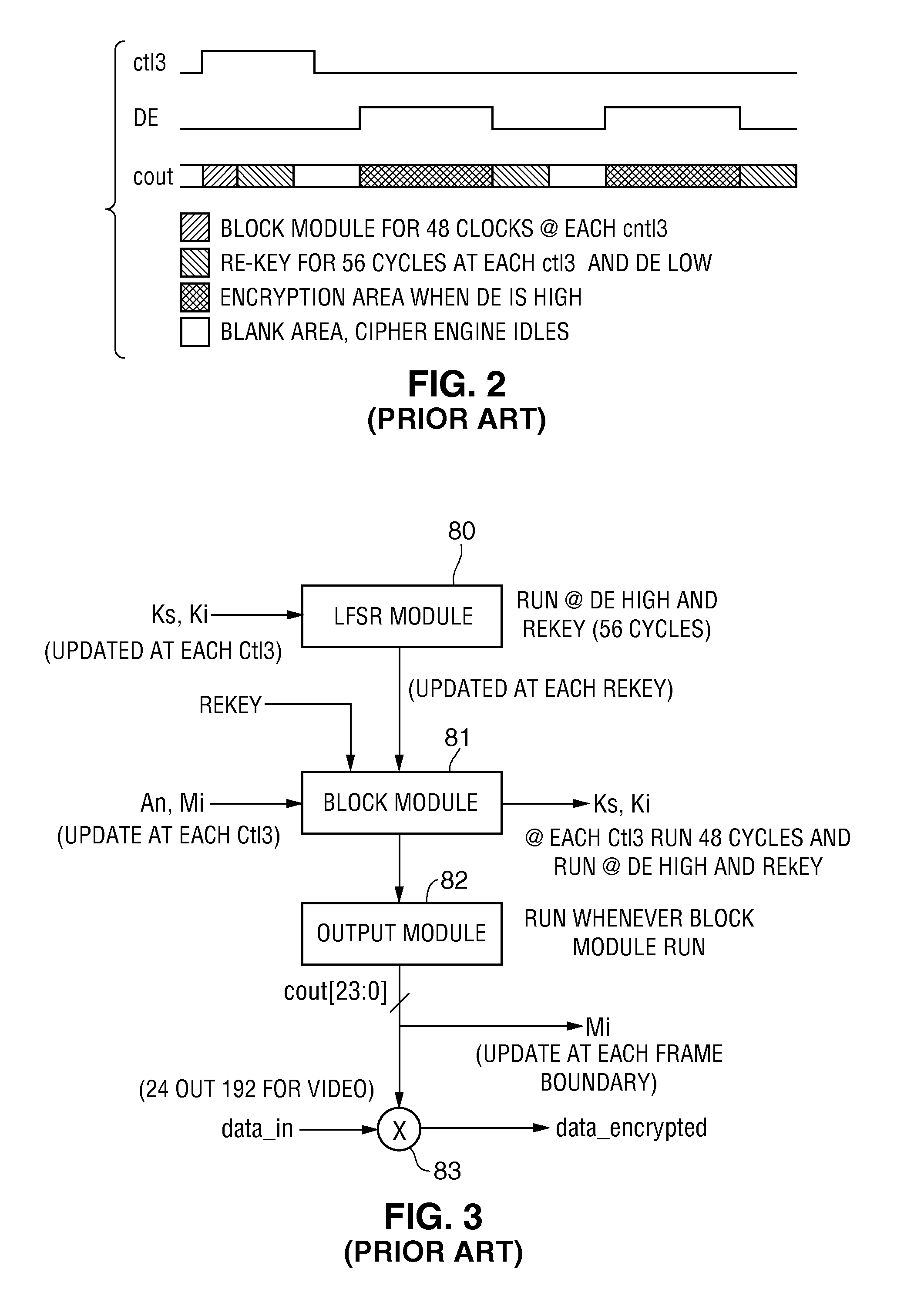

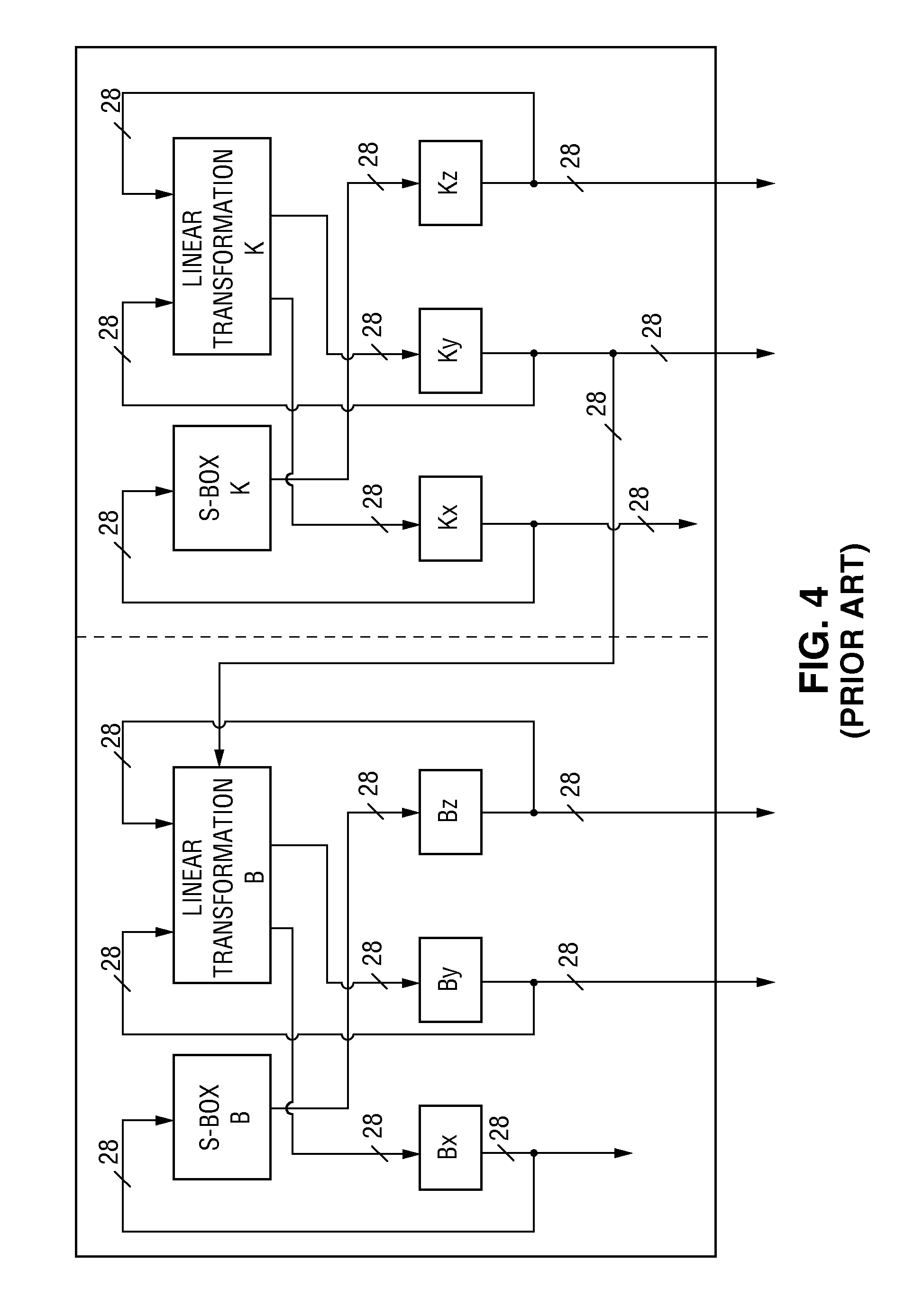

Cryptographic device with stored key data and method for using stored key data to perform an authentication exchange or self test

Active | US7412053B1 | Cheap to store | Low cost | Electronic circuit testing | User identity/authority verification | Engineering | Authentication

In preferred embodiments, the invention is a cryptographic device in which two key sets are stored: a normal key set (typically unique to the device) and a test key set (typically shared by a relatively large number of devices). The device uses the normal key set in a normal operating mode and the test key set in at least one test mode, which can be a built-in self-test mode. Alternatively, the device stores test data (e.g., an intermediate result of an authentication exchange) in addition to or instead of the test key set. In other embodiments, the invention is a cryptographic device including a cache memory that caches a portion of a key set for performing an authentication exchange and/or at least one authentication value generated during an authentication exchange. Other embodiments of the invention are systems including devices that embody the invention, and methods that can be performed by such systems or devices.

Owner:LATTICE SEMICON CORP
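
The split between a per-device normal key and a shared test key can be sketched as follows. HMAC-SHA256 challenge-response is an assumption chosen for illustration (the abstract does not name an algorithm), and the class name and the stored known-answer value are hypothetical.

```python
import hashlib
import hmac


class CryptoDevice:
    def __init__(self, normal_key: bytes, test_key: bytes,
                 test_challenge: bytes, expected_test_response: bytes) -> None:
        self._normal_key = normal_key          # typically unique to this device
        self._test_key = test_key              # typically shared by many devices
        self._test_challenge = test_challenge  # stored test data for the self test
        self._expected = expected_test_response

    def respond(self, challenge: bytes, test_mode: bool = False) -> bytes:
        """Authentication response: the key set used depends on the operating mode."""
        key = self._test_key if test_mode else self._normal_key
        return hmac.new(key, challenge, hashlib.sha256).digest()

    def self_test(self) -> bool:
        """Built-in self test: known challenge + test key must give the stored answer."""
        got = self.respond(self._test_challenge, test_mode=True)
        return hmac.compare_digest(got, self._expected)
```

Because every device shares the test key, one known-answer value can be checked on all of them without revealing any device's normal key.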

Apparatus, system, and method for caching data

Active | US8489817B2 | Effective caching | Minimize collisions | Memory architecture accessing/allocation | Servers | Solid-state storage | Logical block addressing

An apparatus, system, and method are disclosed for caching data. A storage request module detects an input/output (“I/O”) request for a storage device cached by solid-state storage media of a cache. A direct mapping module references a single mapping structure to determine that the cache comprises data of the I/O request. The single mapping structure maps each logical block address of the storage device directly to a logical block address of the cache. The single mapping structure maintains a fully associative relationship between logical block addresses of the storage device and physical storage addresses on the solid-state storage media. A cache fulfillment module satisfies the I/O request using the cache in response to the direct mapping module determining that the cache comprises at least one data block of the I/O request.

Owner:SANDISK TECH LLC

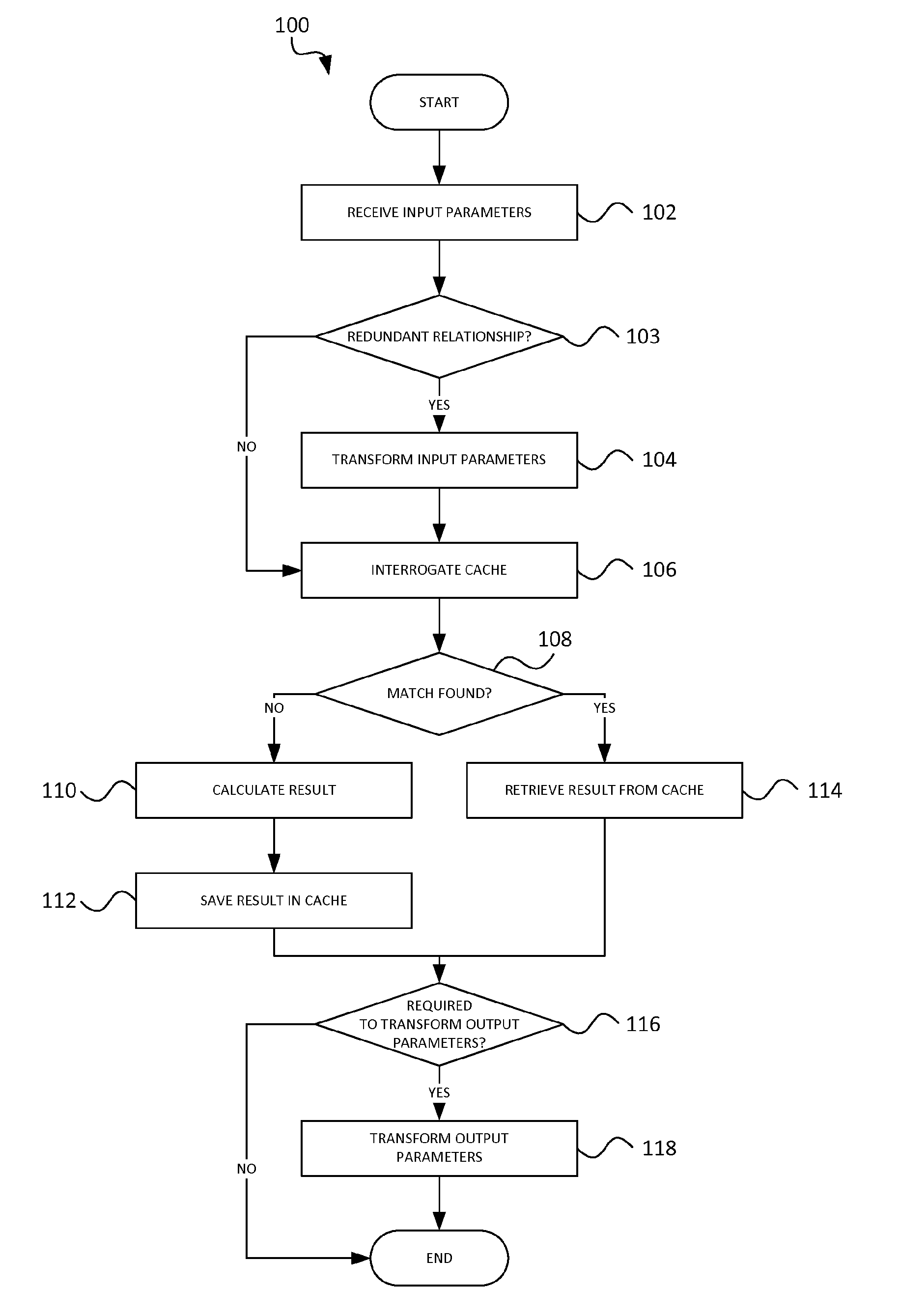

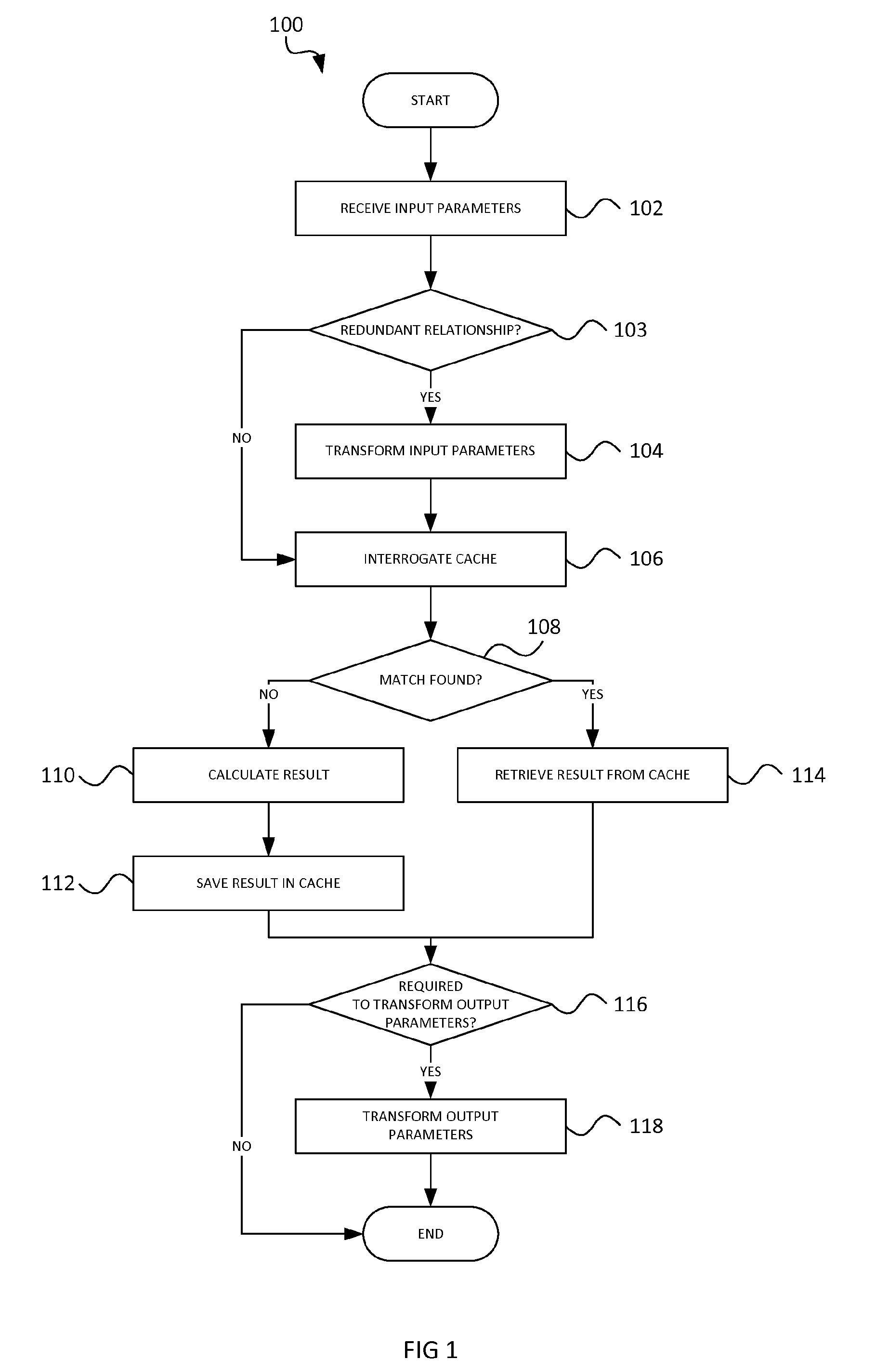

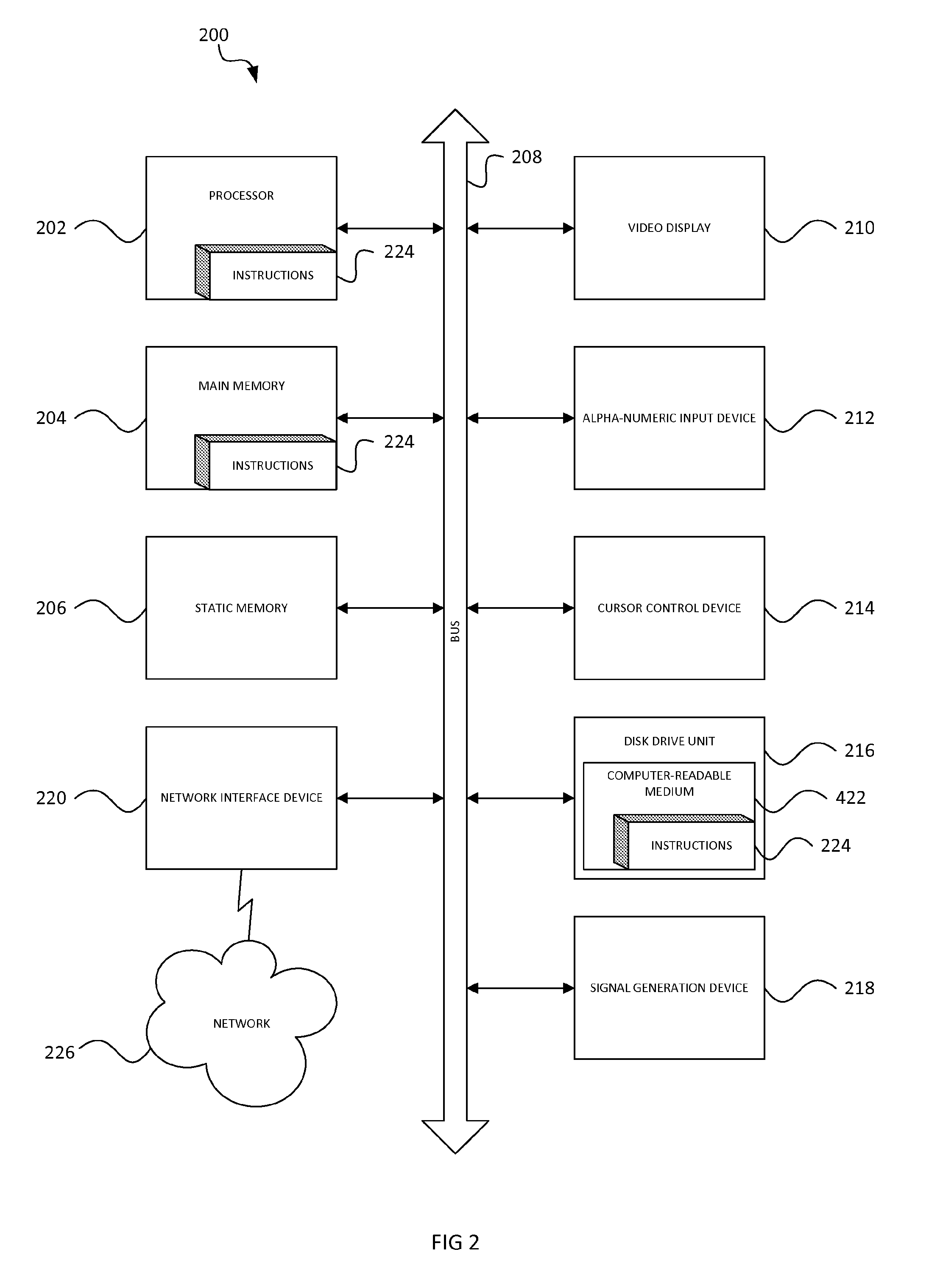

Method of operating a computing device to perform memoization

Inactive | US20110302371A1 | Improve caching efficiency | Reduce in quantity | Digital data processing details | Memory addressing/allocation/relocation | Parallel computing | Memoization

Owner:CSIR

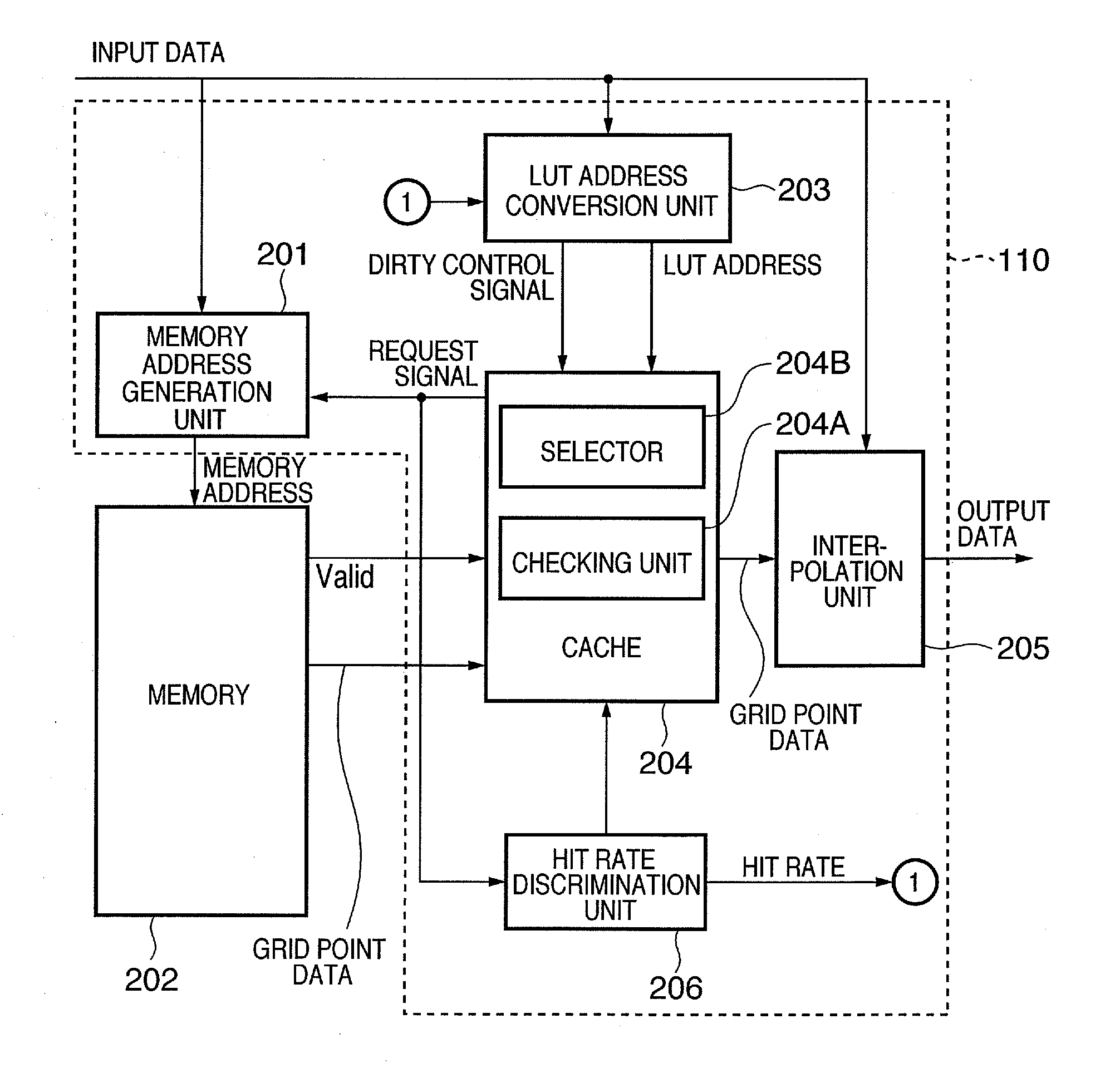

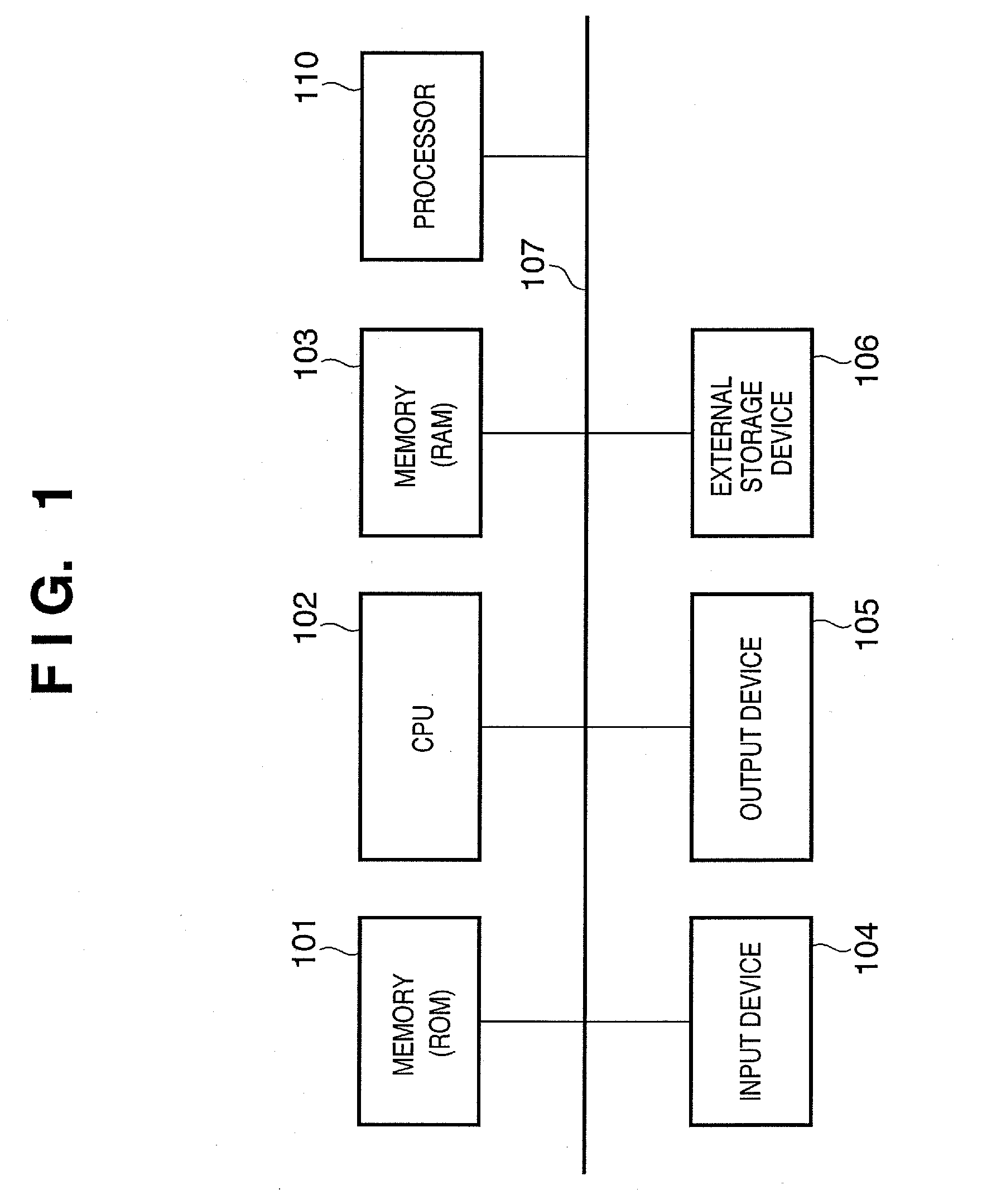

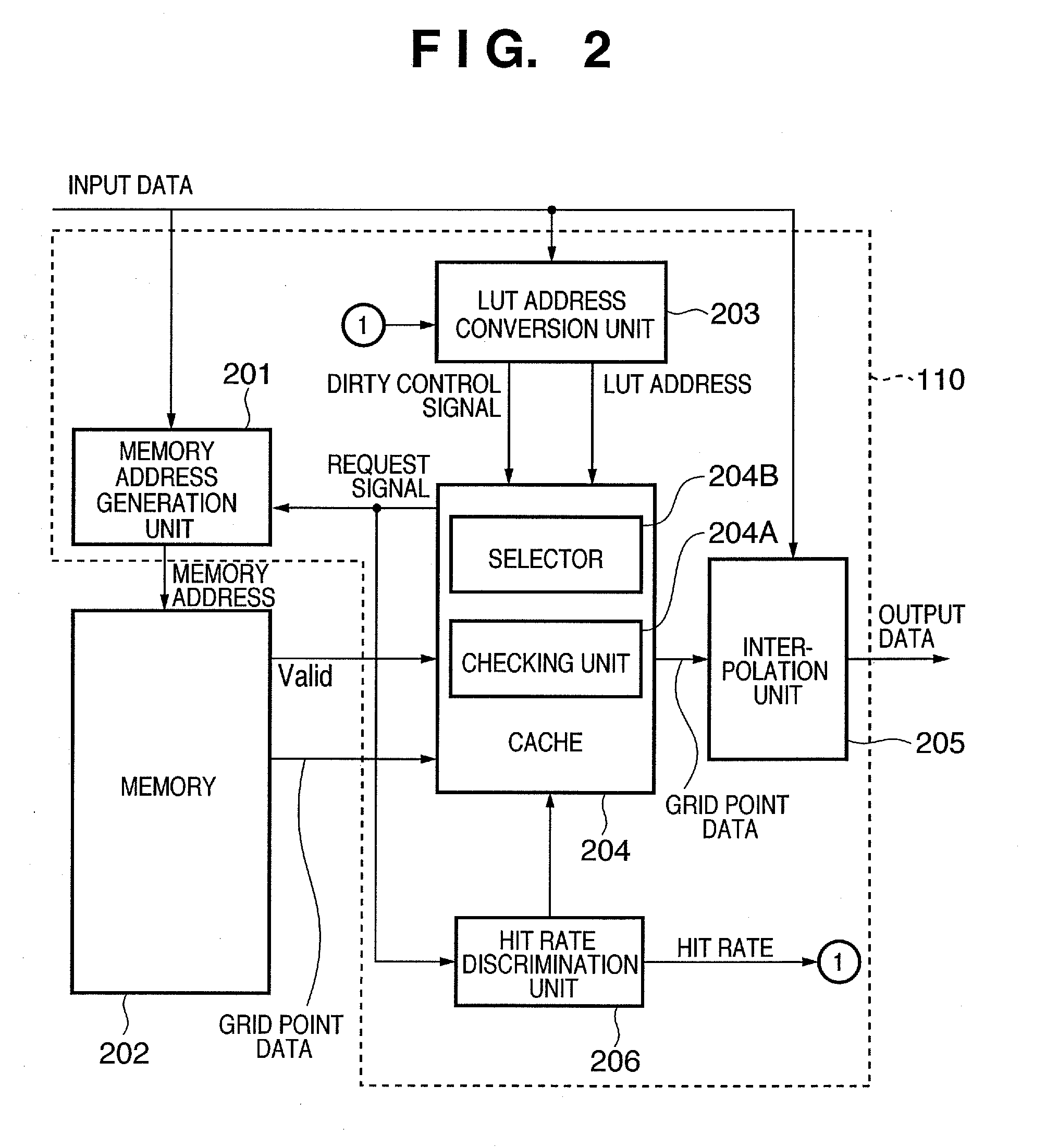

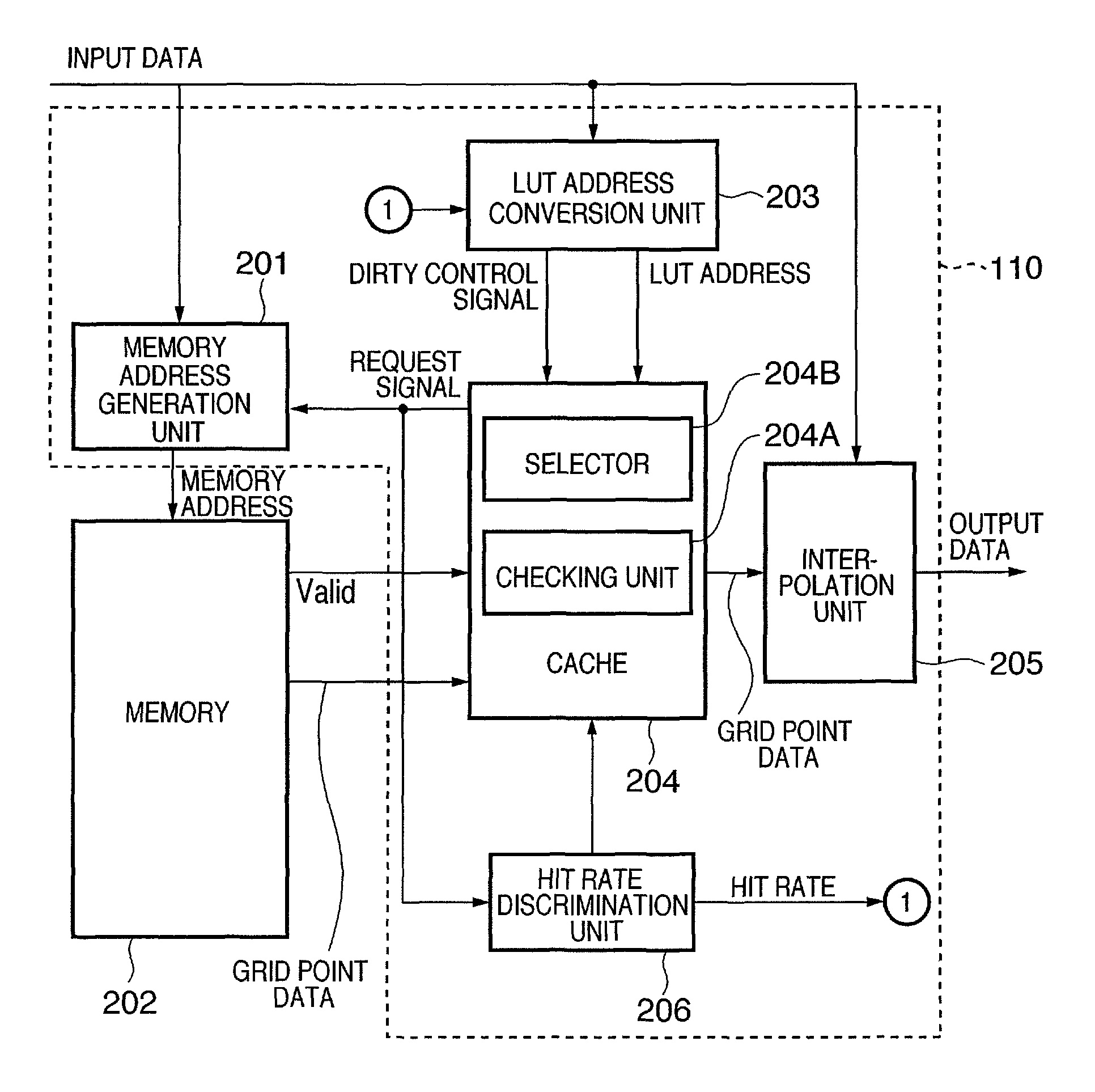

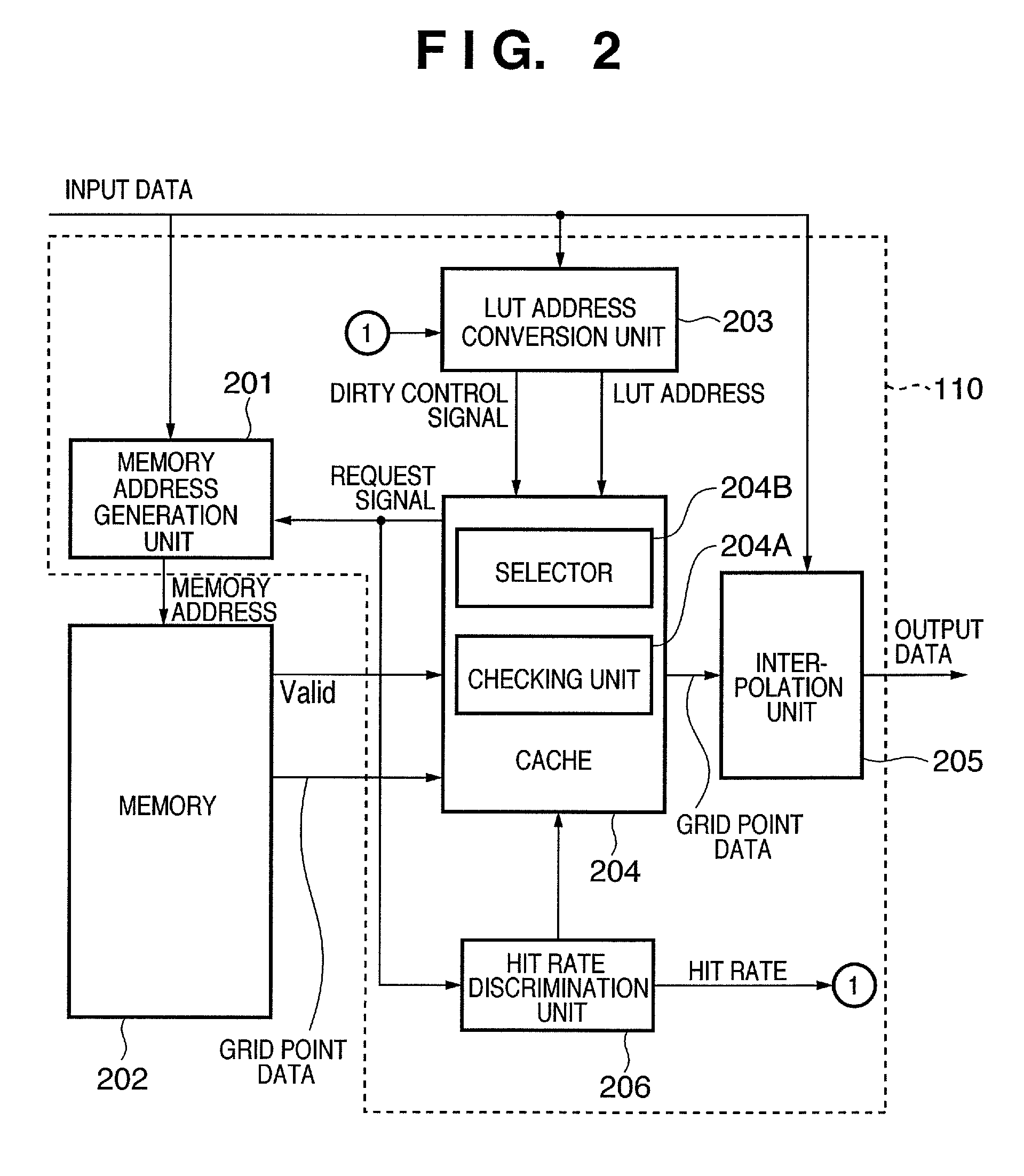

Data processing apparatus and method thereof

Inactive | US20070126744A1 | Good value for money | Reduce cache size | Memory addressing/allocation/relocation | Cathode-ray tube indicators | Memory address | Computer hardware

A data processing apparatus generates a memory address corresponding to a first memory and interpolates data read out from the first memory. The apparatus selects a part of the memory address, checks whether the first memory stores data corresponding to the selected part, and, when it does not, transfers the corresponding data from a second memory to the first memory. Based on checking results indicating that the first memory does not store the data corresponding to the selected part of the memory address, the apparatus decides to change which part of the memory address is selected, and changes that part according to the characteristics of the memory address.

Owner:CANON KK

Cache memory storage

Active | US20080010410A1 | Reduce access | Reduce cache size | Transmission | Memory systems | Data stream | Data transmission

Owner:AVAYA COMM ISRAEL

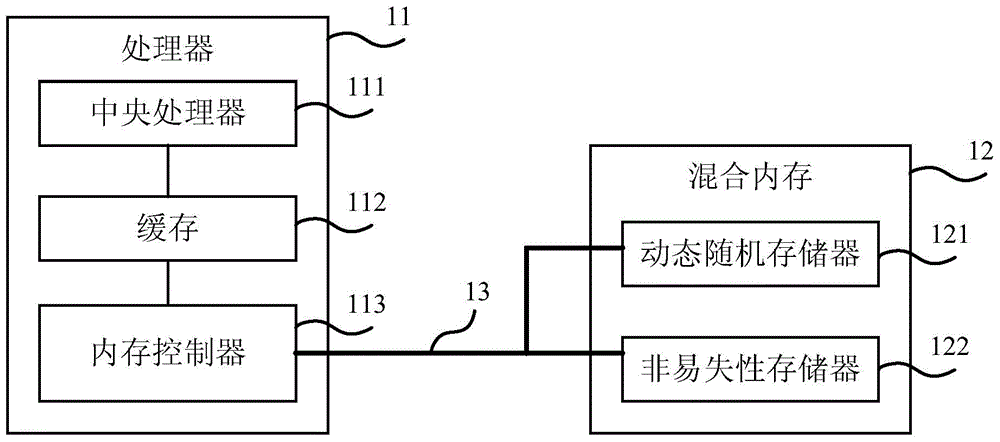

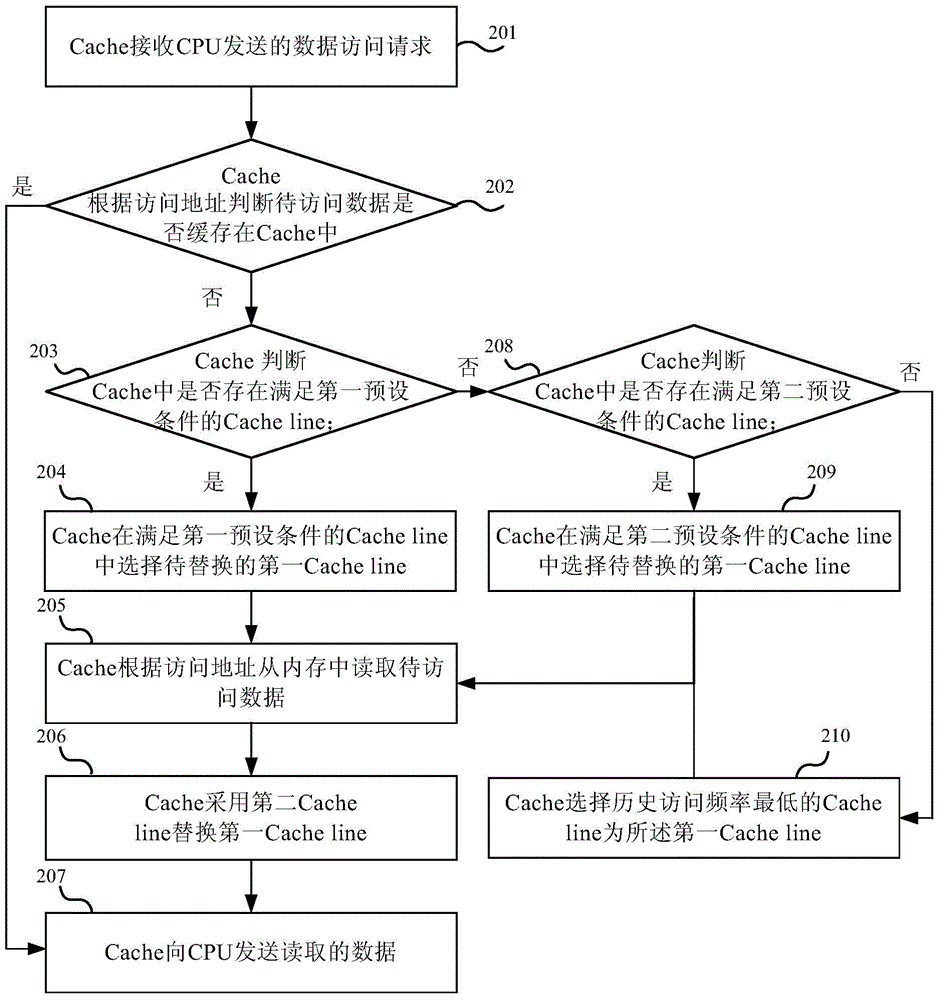

Data caching method, cache and computer system

Active | CN105094686A | Reduce cache size | Increase the amount of cache | Memory architecture accessing/allocation | Input/output to record carriers | Memory type | DRAM memory

A data caching method, a cache, and a computer system are provided. In the method, when an access request misses and a cache line to be replaced must be chosen, the cache takes into account not only the historical access frequency of each cache line but also the memory type corresponding to it, so that cache lines whose data resides in DRAM are replaced preferentially. This reduces the amount of DRAM-resident data held in the cache and lets the cache hold more data stored in non-volatile memory (NVM). As a result, access requests for NVM data are more likely to find the data in the cache, fewer reads go to the NVM, the latency of reading data from the NVM is reduced, and access efficiency is improved.

Owner:HUAWEI TECH CO LTD +1
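
A minimal sketch of a victim-selection rule that weighs both access history and memory type. The cost function (access count multiplied by a larger weight for NVM-backed lines) and the `nvm_weight` parameter are assumptions for illustration, not the patented formula.

```python
from dataclasses import dataclass


@dataclass
class CacheLine:
    tag: int
    access_count: int  # historical access frequency of the line
    memory_type: str   # "DRAM" or "NVM": where the line's backing data lives


def choose_victim(lines: list[CacheLine], nvm_weight: float = 4.0) -> CacheLine:
    """Return the line to replace, favouring DRAM-backed, rarely used lines."""

    def keep_value(line: CacheLine) -> float:
        # A miss on NVM data costs more than a miss on DRAM data, so NVM
        # lines are weighted up and DRAM lines become the preferred victims.
        weight = nvm_weight if line.memory_type == "NVM" else 1.0
        return line.access_count * weight

    return min(lines, key=keep_value)
```

With the default weight, an NVM line accessed 3 times (value 12) is kept in preference to a DRAM line accessed 5 times (value 5), while a rarely used NVM line can still be evicted.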

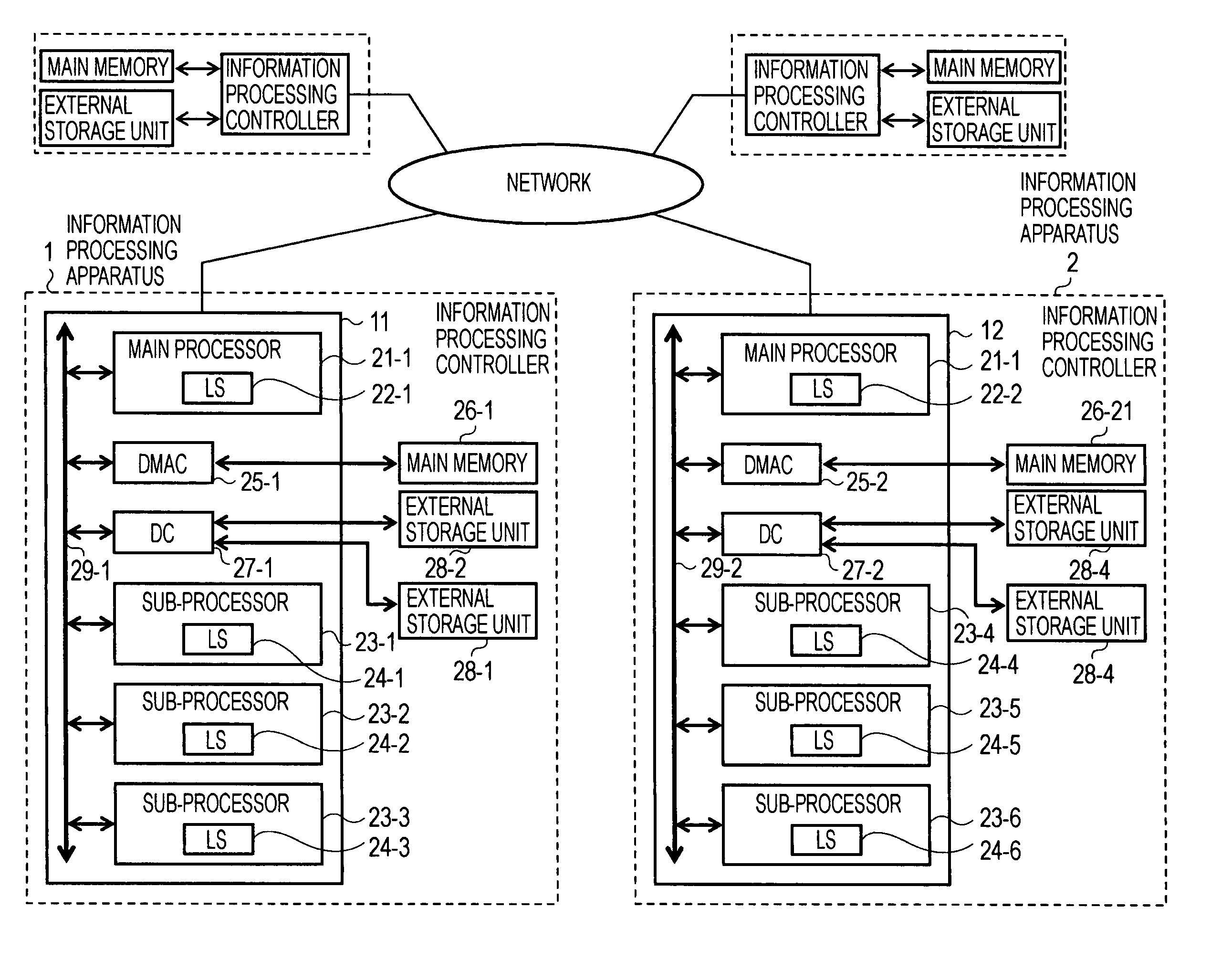

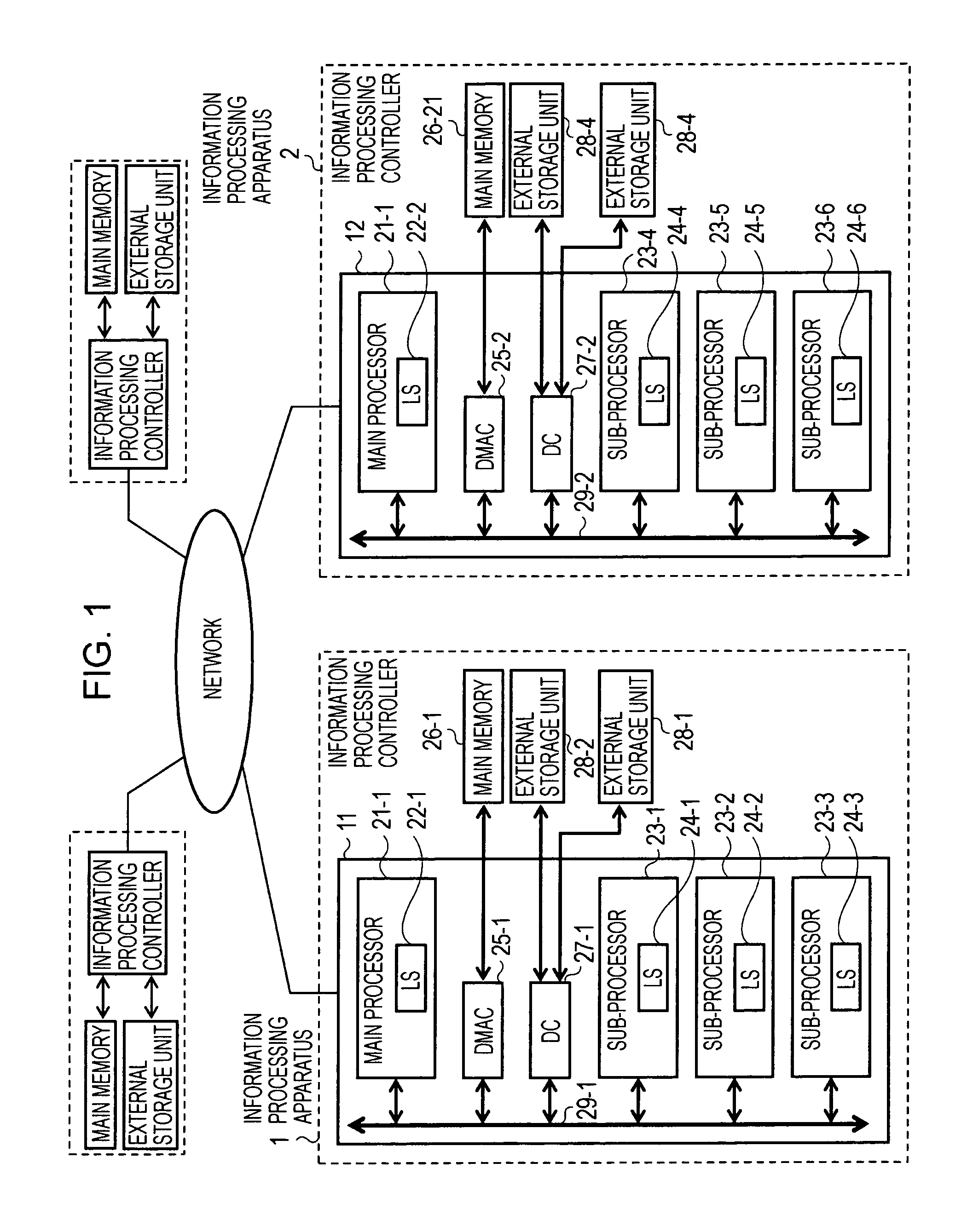

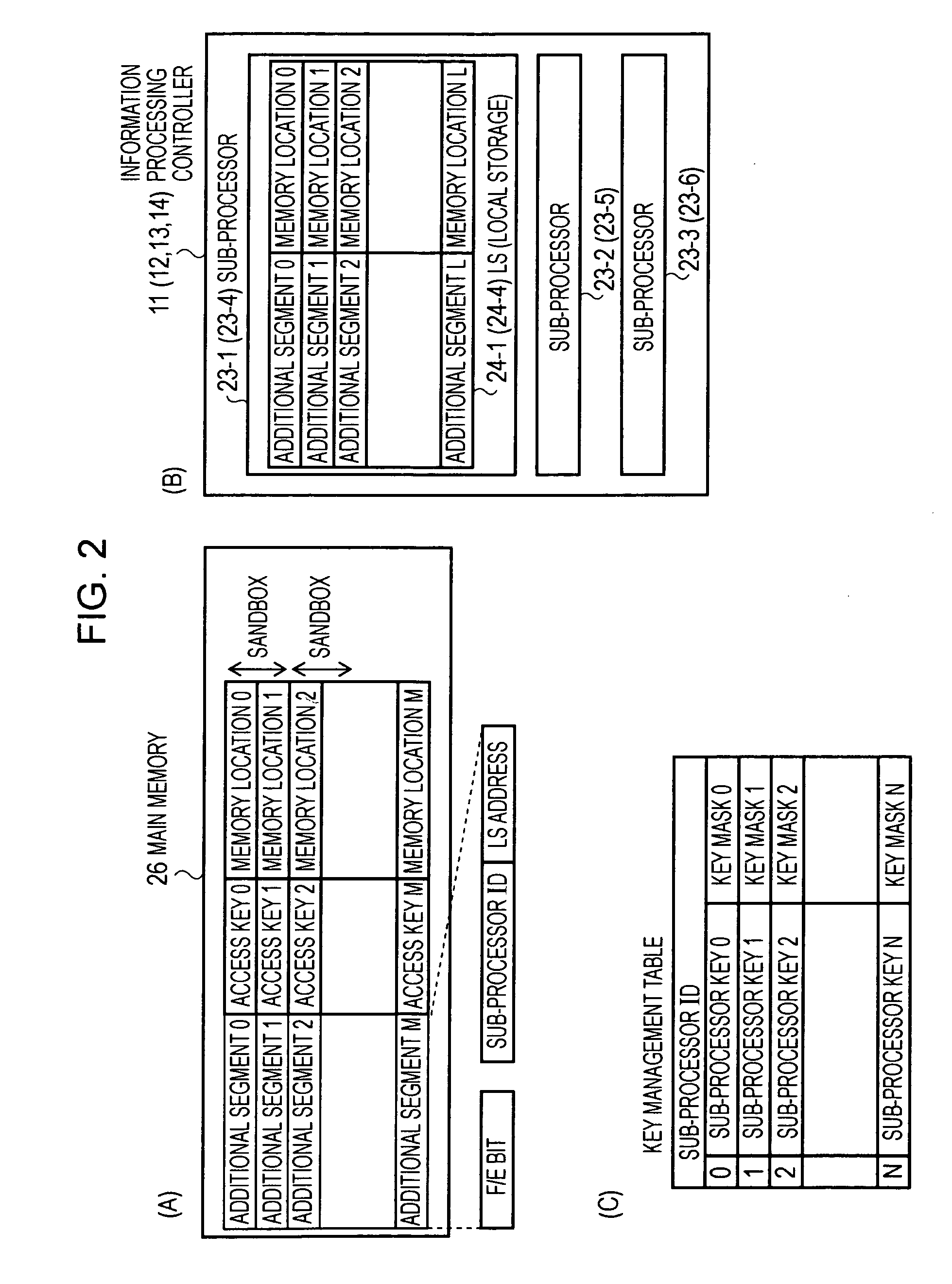

Information Processing System, Information Processing Method, and Computer Program

Inactive | US20080253673A1 | Without reducing performance | Reduce probability | Resource allocation | Memory addressing/allocation/relocation | Information processing | Parallel computing

Each sub-processor executes processing assigned by a main processor without reducing the performance of the entire system. During transfer of data from the main memory to a cache, a sub-processor that decodes a compressed image calculates a cache area based on a parameter indicating a feature of the image and adaptively changes the memory region to be cached, thereby increasing the cache hit probability during subsequent processing. This improves the performance of the image signal processing performed by the sub-processor, reduces memory-transfer traffic on the system bus, and prevents a decrease in the performance of the entire system.

Owner:SONY CORP

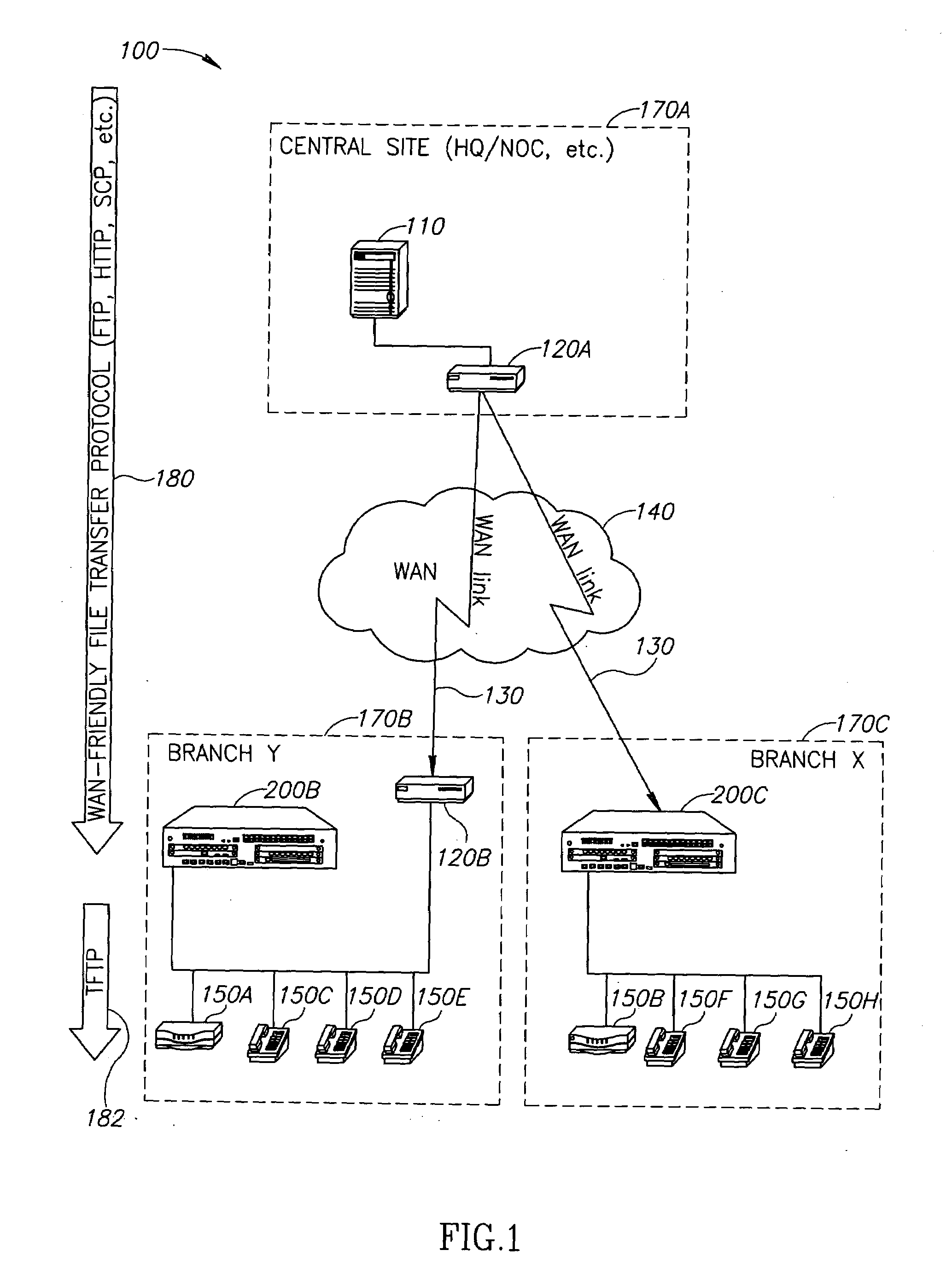

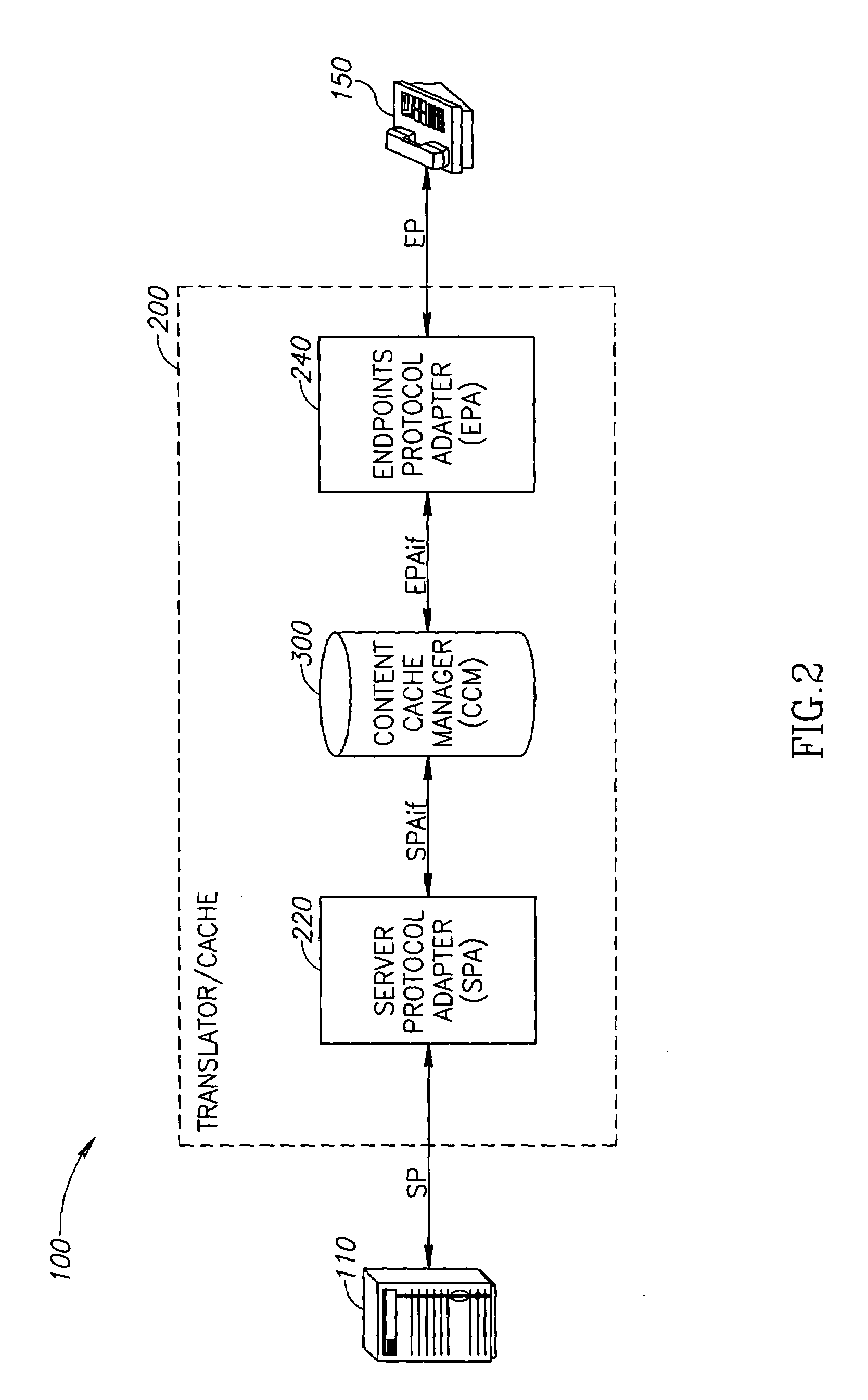

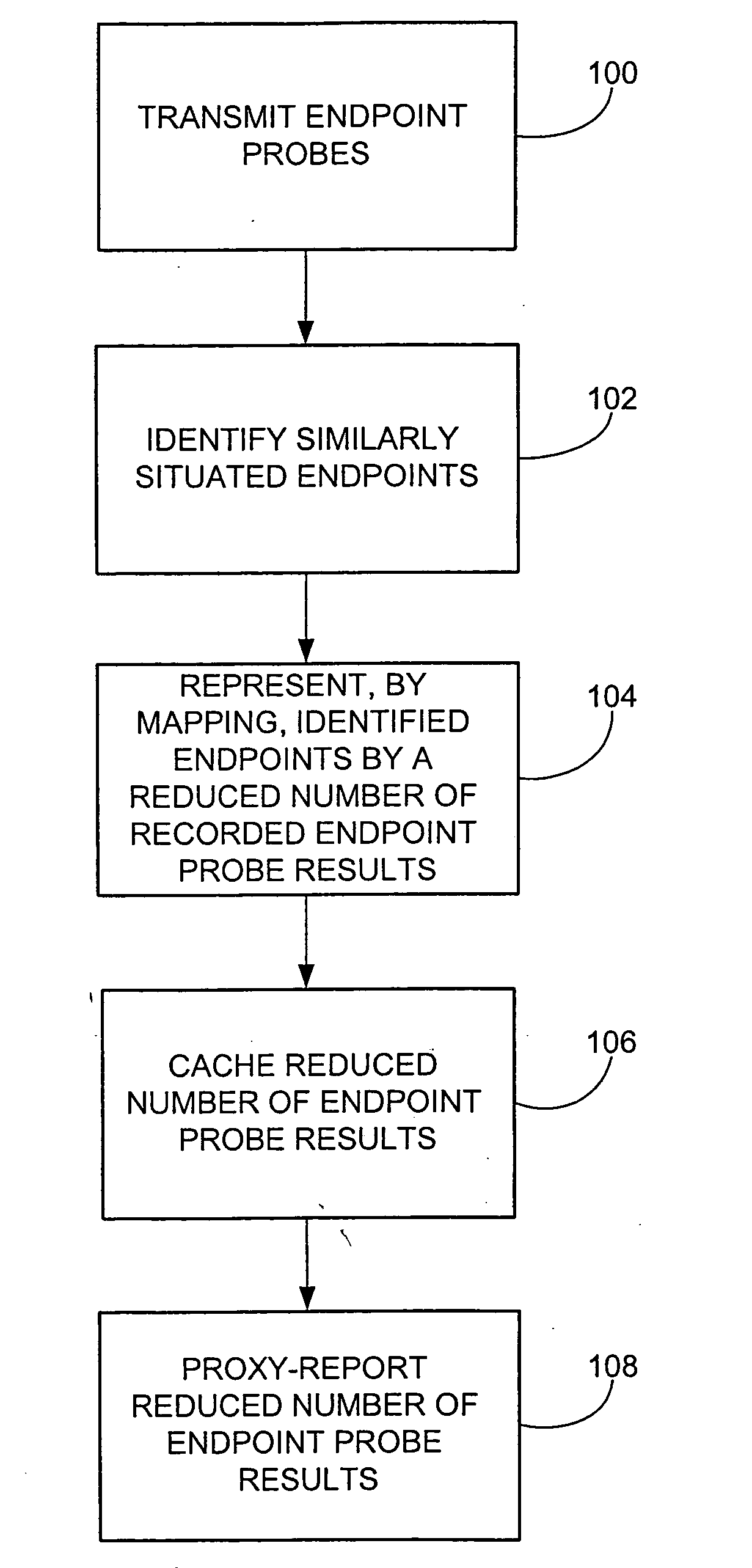

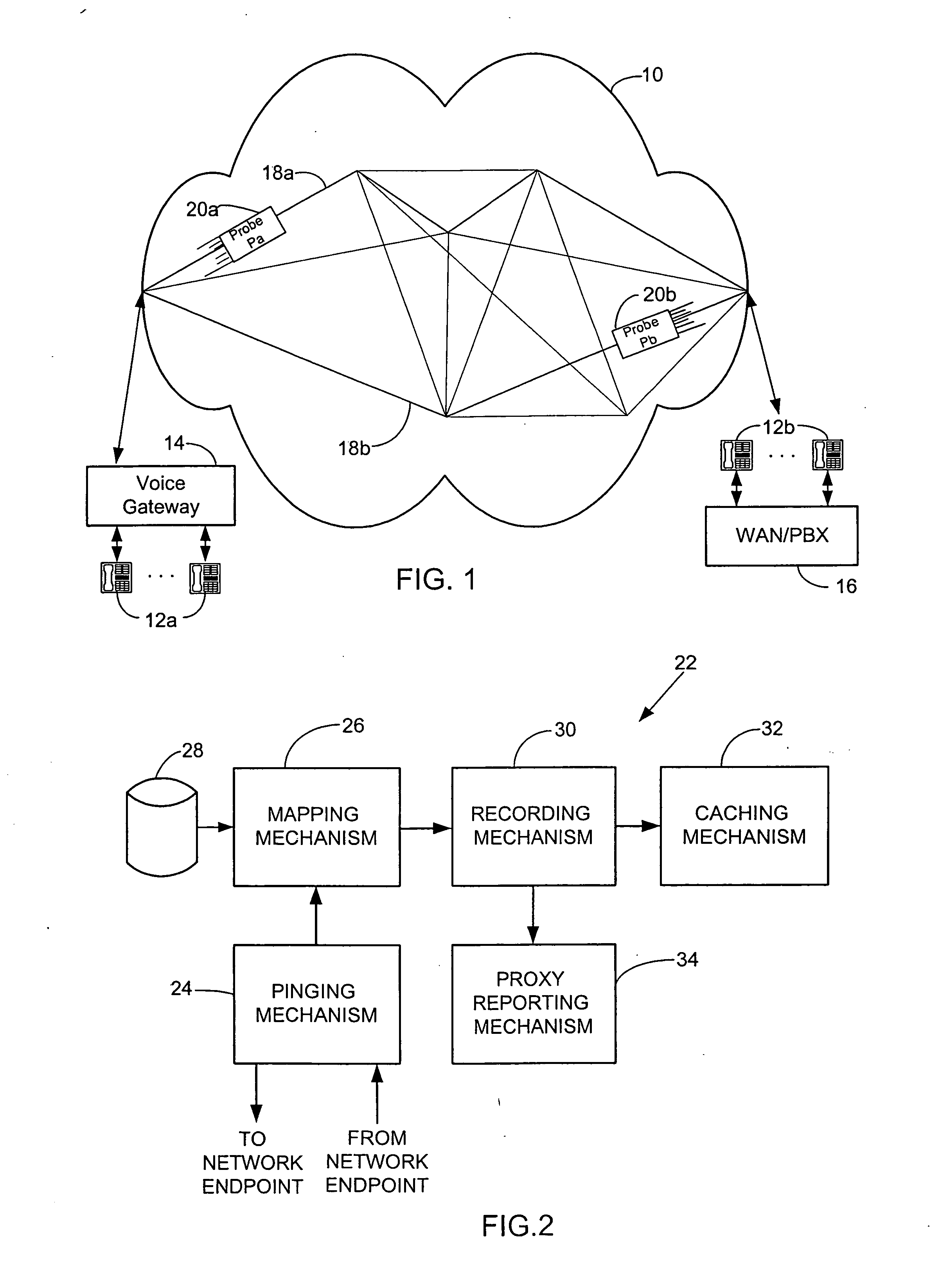

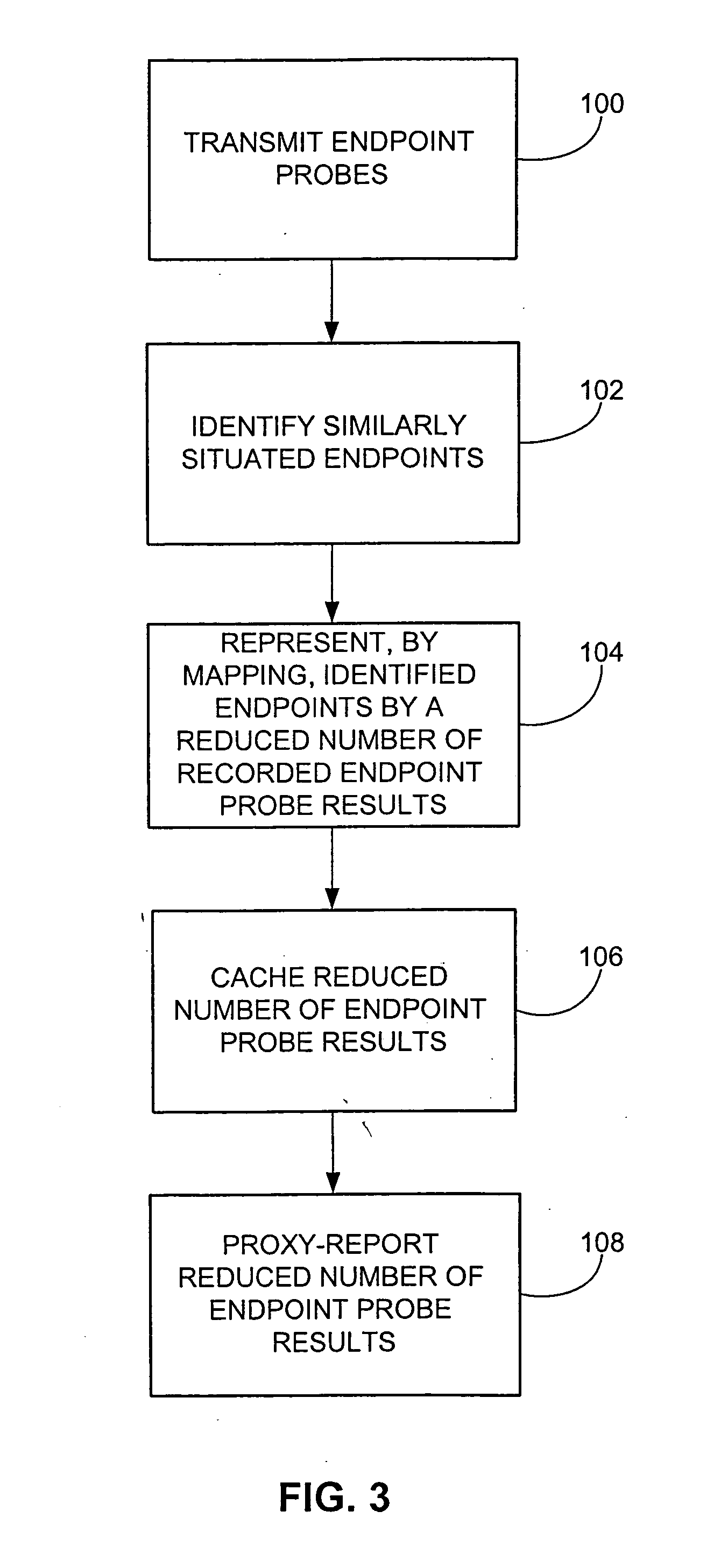

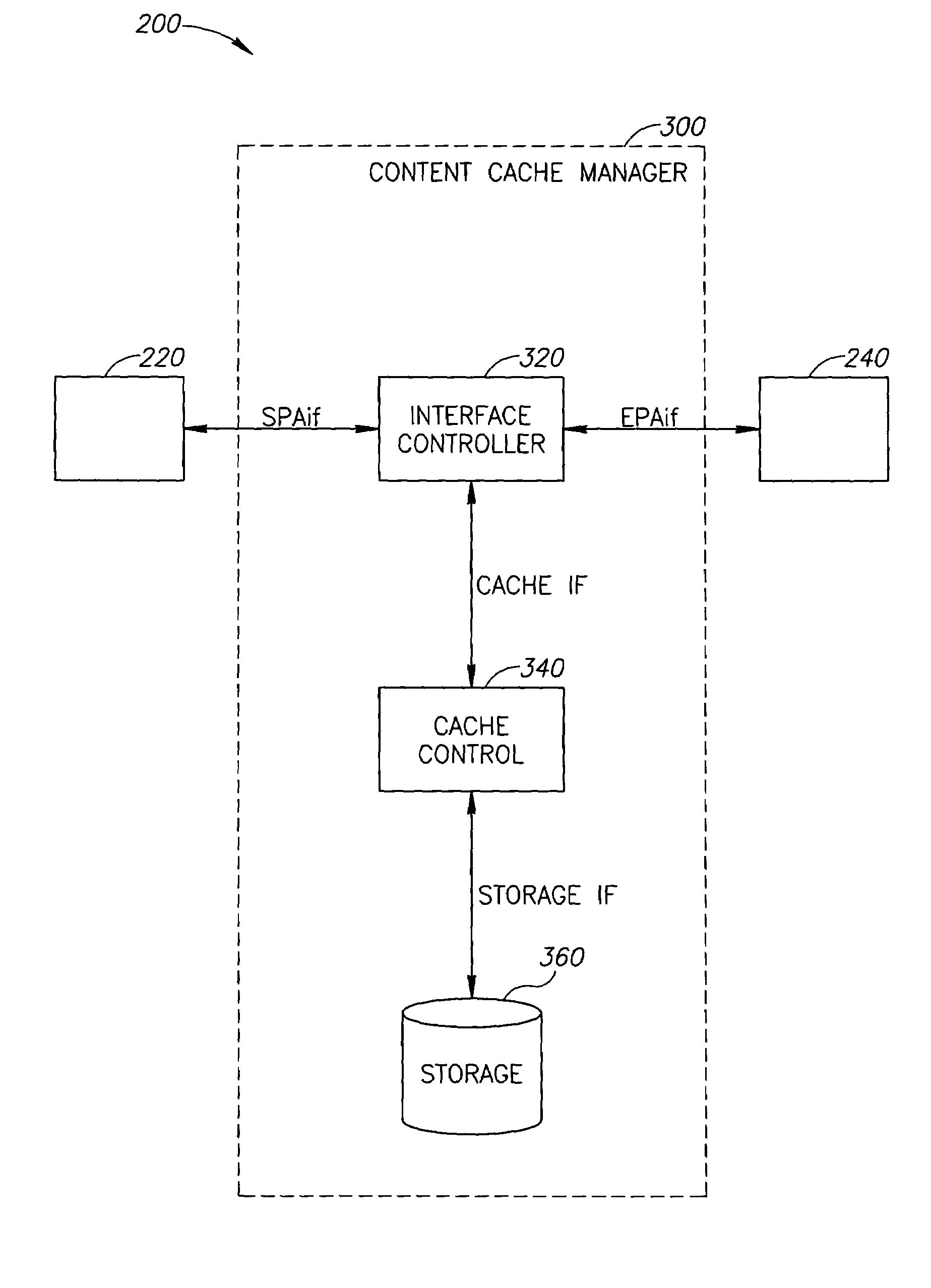

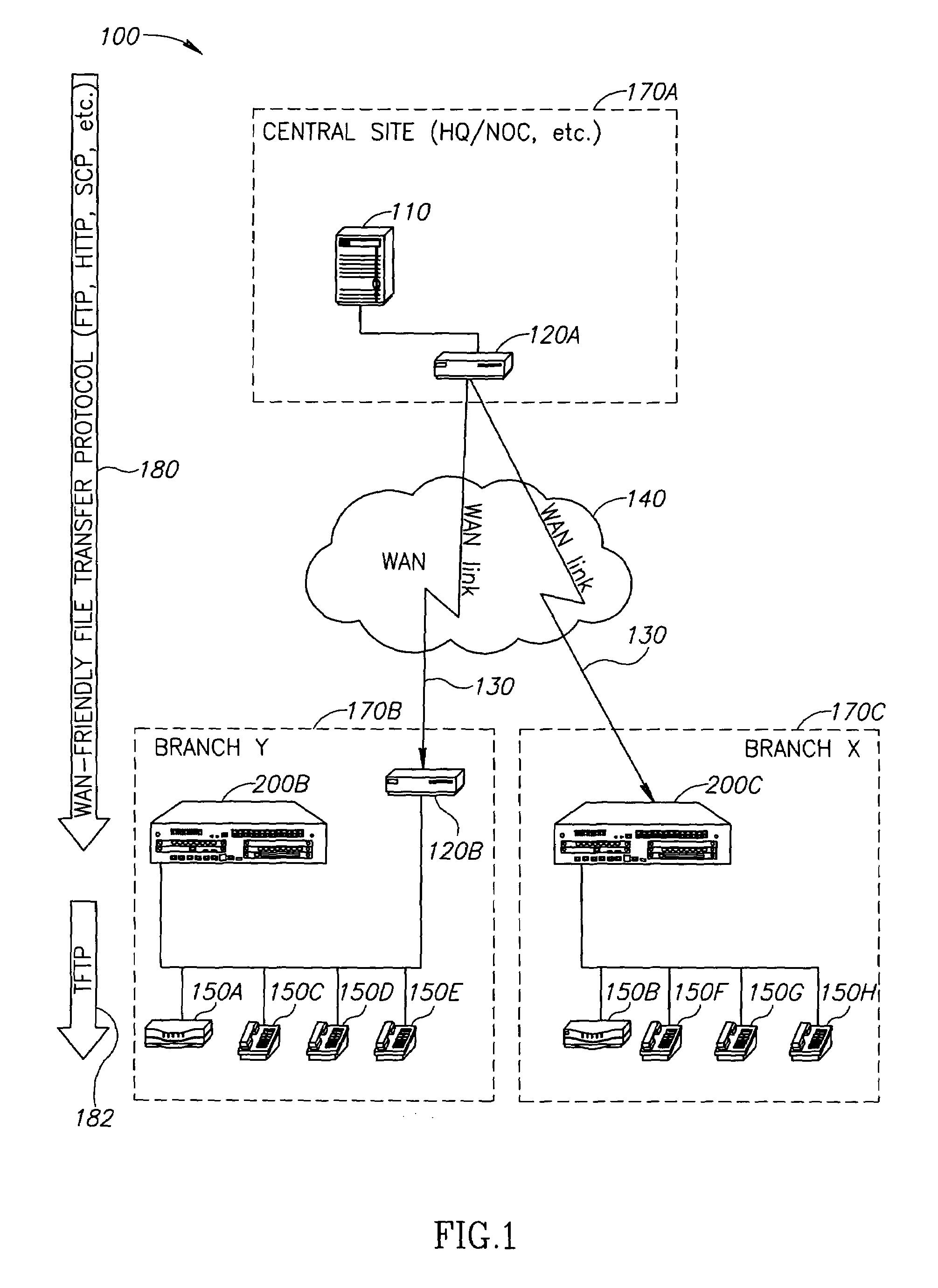

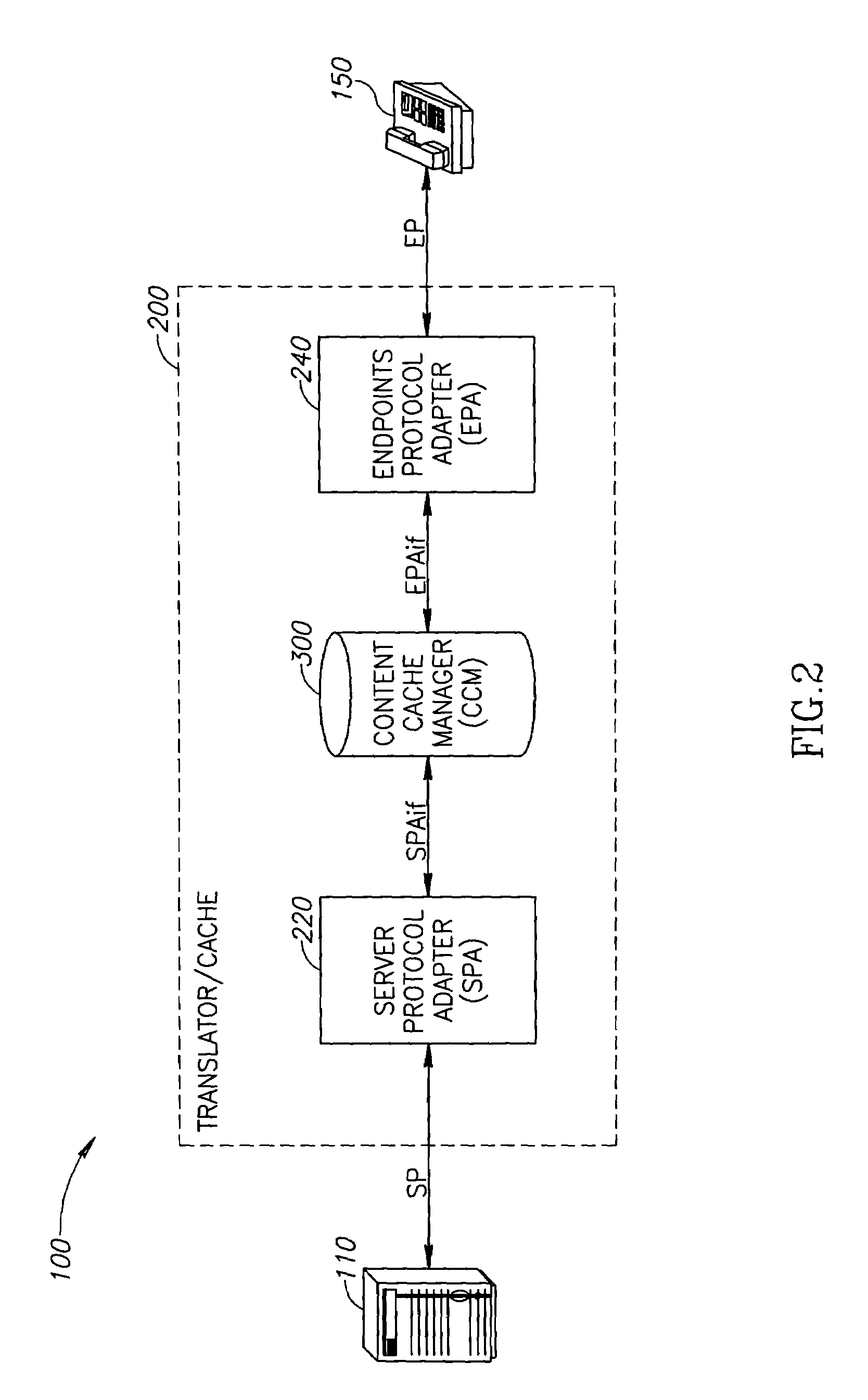

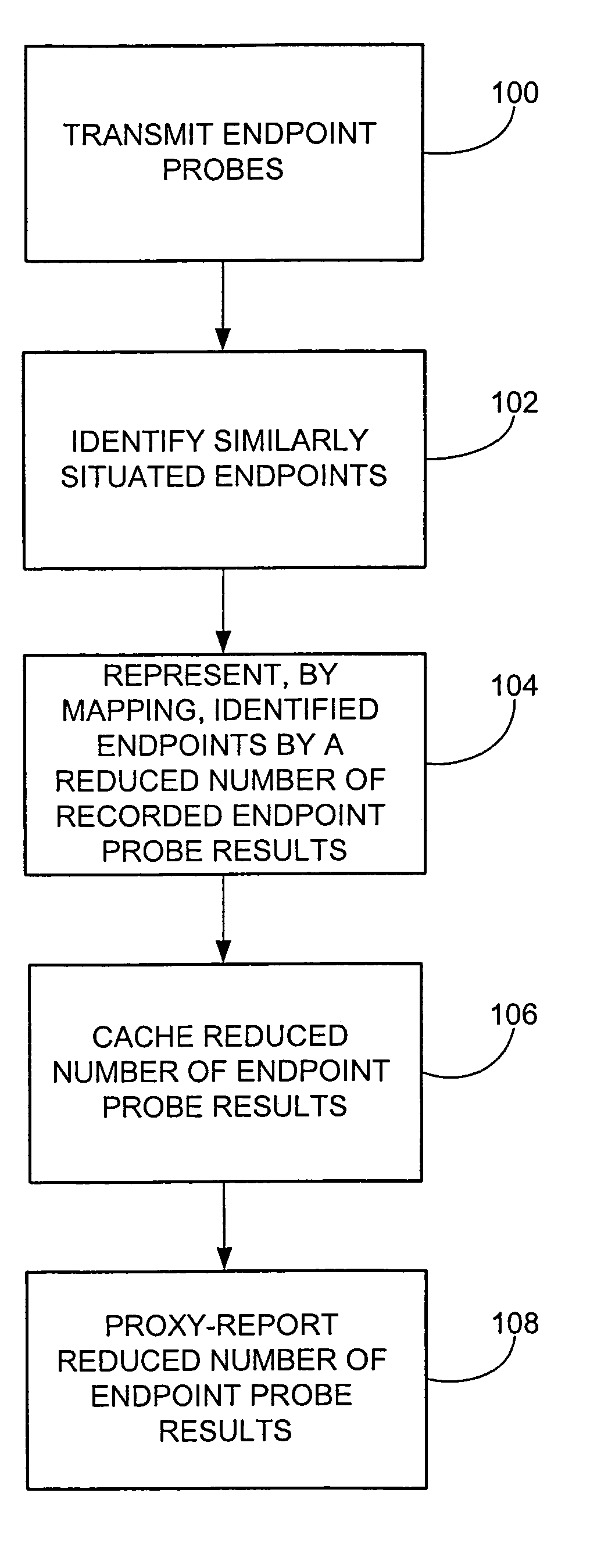

Method and apparatus for call setup within a voice frame network

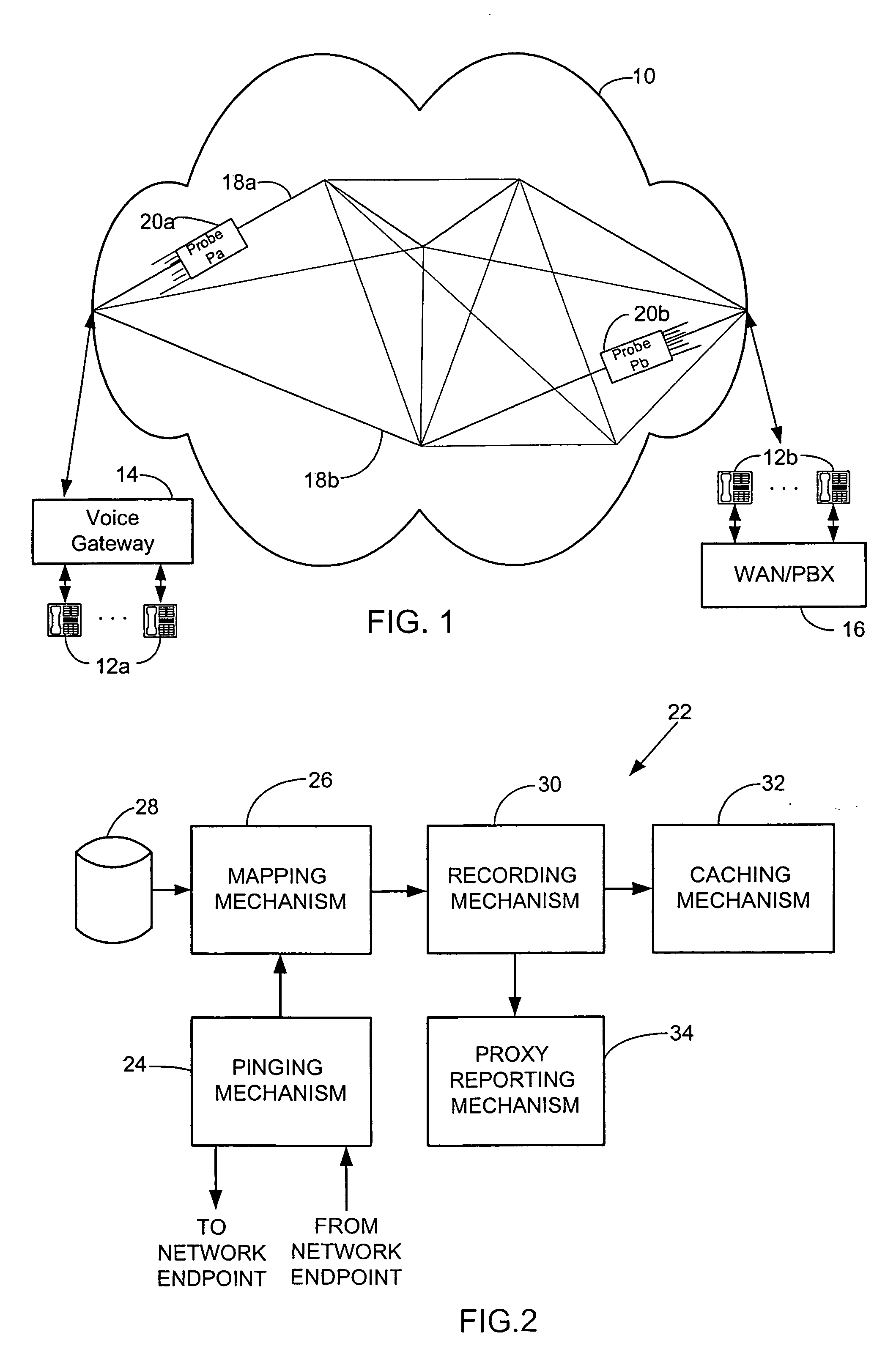

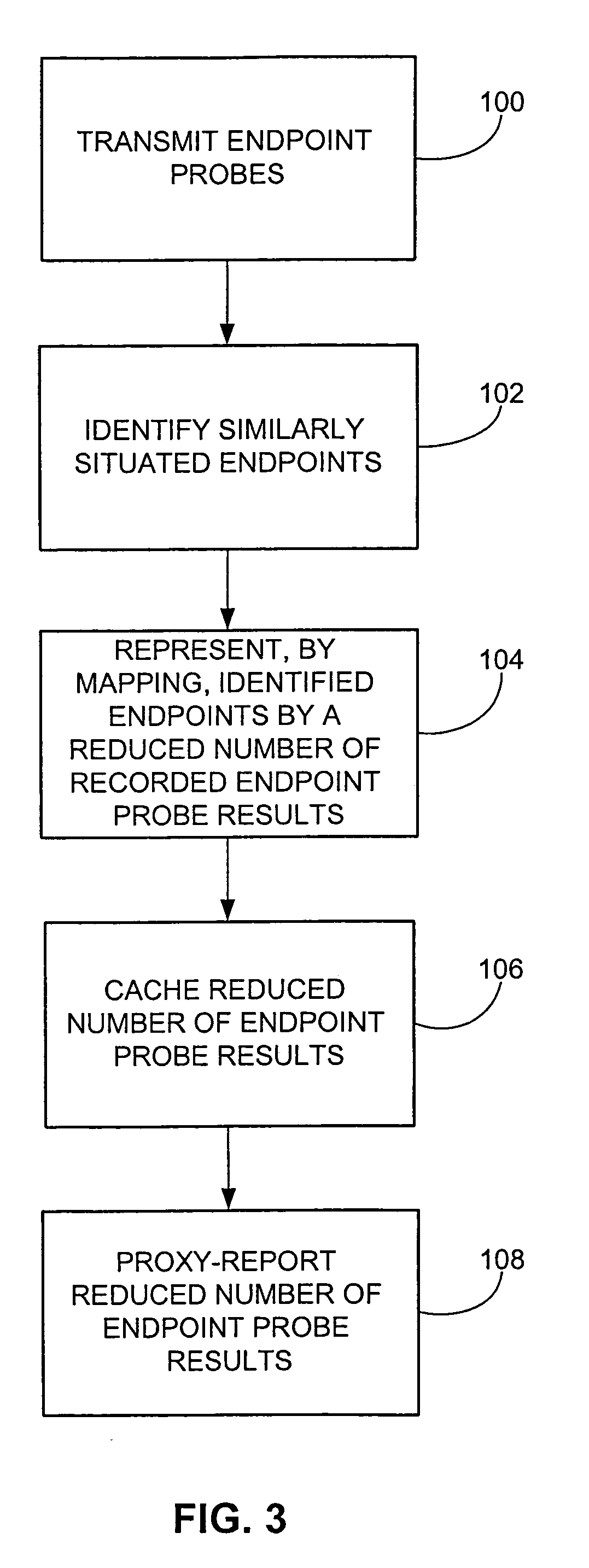

Inactive | US20050027861A1 | Reduce cache size | Improve cache hit ratio | Special service provision for substation | Digital computer details | Operational system | Internet operating system

The method involves transmitting plural endpoint probes to produce plural endpoint probe results indicating the preparedness of the endpoints for calls routed to them; identifying similarly situated endpoints; and representing the similarly situated endpoints by a reduced number of recorded endpoint probe results that substantially represent the inter-connective preparedness of each of them. The apparatus includes a mapping mechanism for mapping the probe results for the similarly situated endpoints into a reduced number of endpoint probe results that substantially represent the inter-connective preparedness of each of those endpoints, and a recording mechanism for recording the reduced number of endpoint probe results. Such mapping permits an Internet operating system (IOS) router/gateway to act as a real-time responder/service assurance agent (RTR/SAA) proxy on behalf of voice over Internet protocol (VoIP) endpoints that are not RTR/SAA capable.

Owner:CISCO TECH INC
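
The grouping idea can be sketched as follows: endpoints that share a hypothetical group key (for example, the gateway they sit behind) are probed once, and a single result is recorded per group. The grouping key and the function names are assumptions.

```python
from collections import defaultdict
from typing import Callable, Dict, List


def build_probe_table(endpoints: Dict[str, str],
                      probe: Callable[[str], bool]) -> Dict[str, bool]:
    """Probe one representative per group and record one result per group.

    `endpoints` maps endpoint -> group key (hypothetical similarity criterion).
    """
    groups: Dict[str, List[str]] = defaultdict(list)
    for endpoint, group_key in endpoints.items():
        groups[group_key].append(endpoint)
    return {key: probe(members[0]) for key, members in groups.items()}


def is_ready(endpoint: str, endpoints: Dict[str, str], table: Dict[str, bool]) -> bool:
    """Answer for any endpoint using its group's single recorded result."""
    return table[endpoints[endpoint]]
```

Three endpoints behind two gateways need only two probes and two recorded results.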

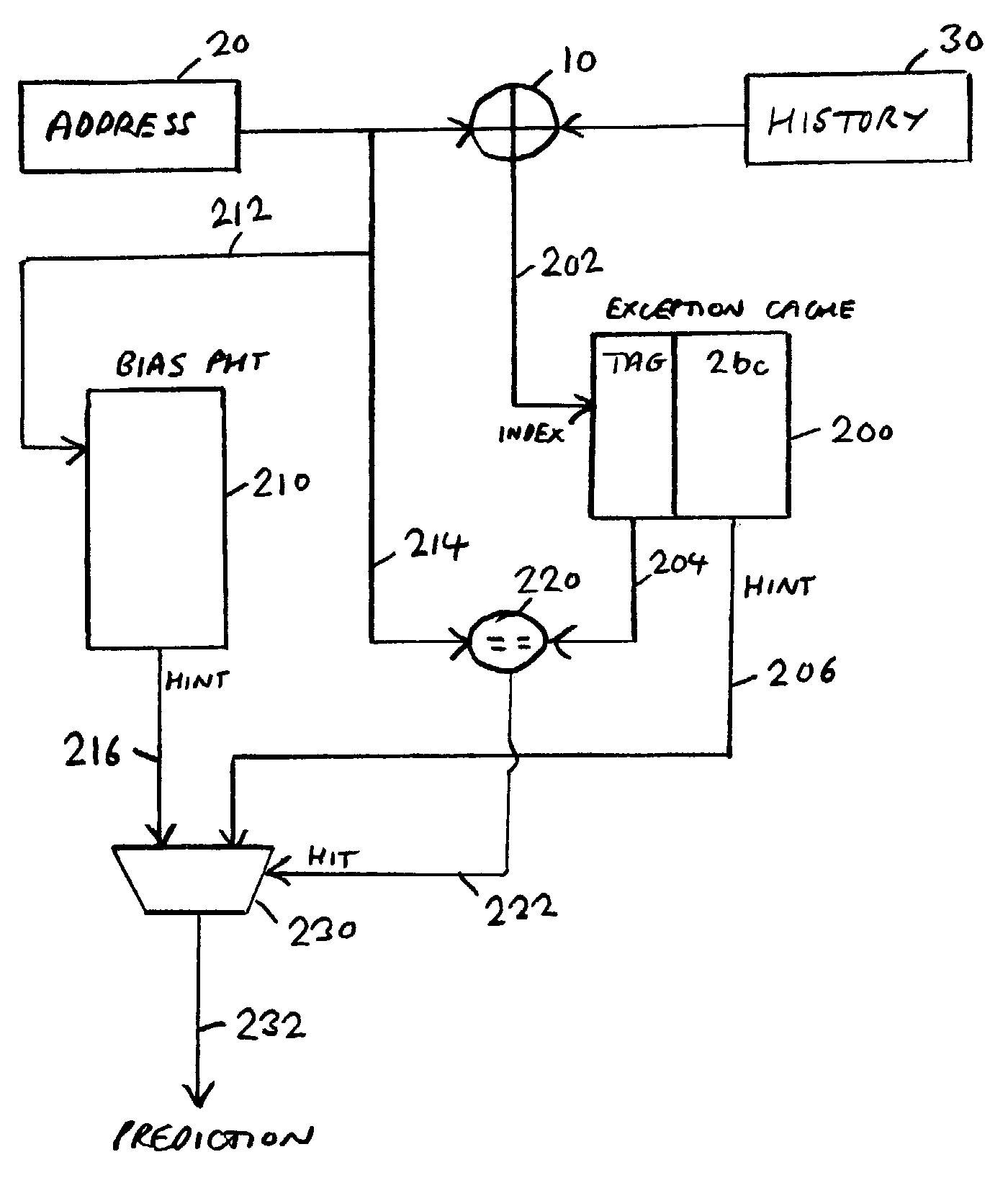

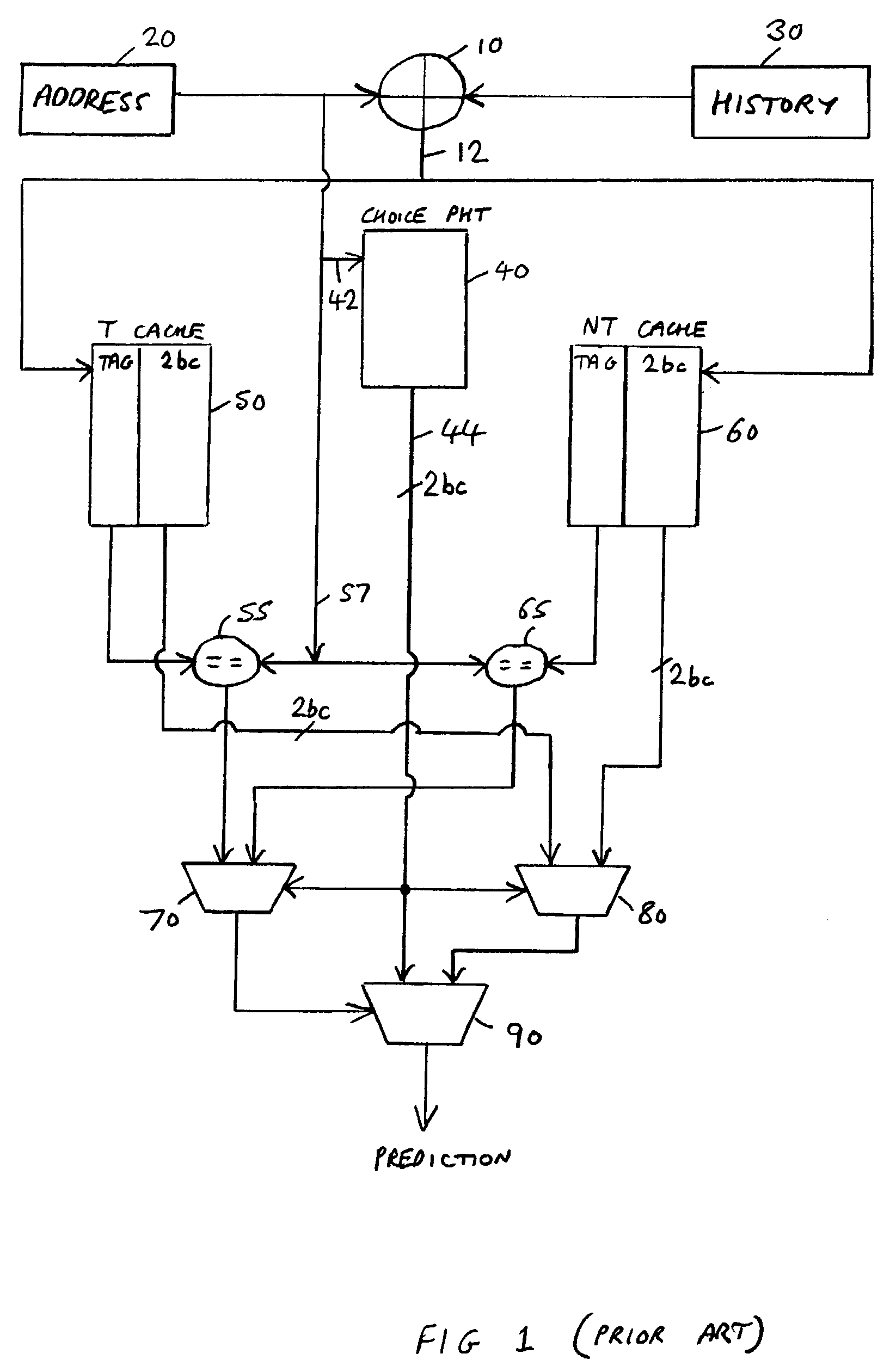

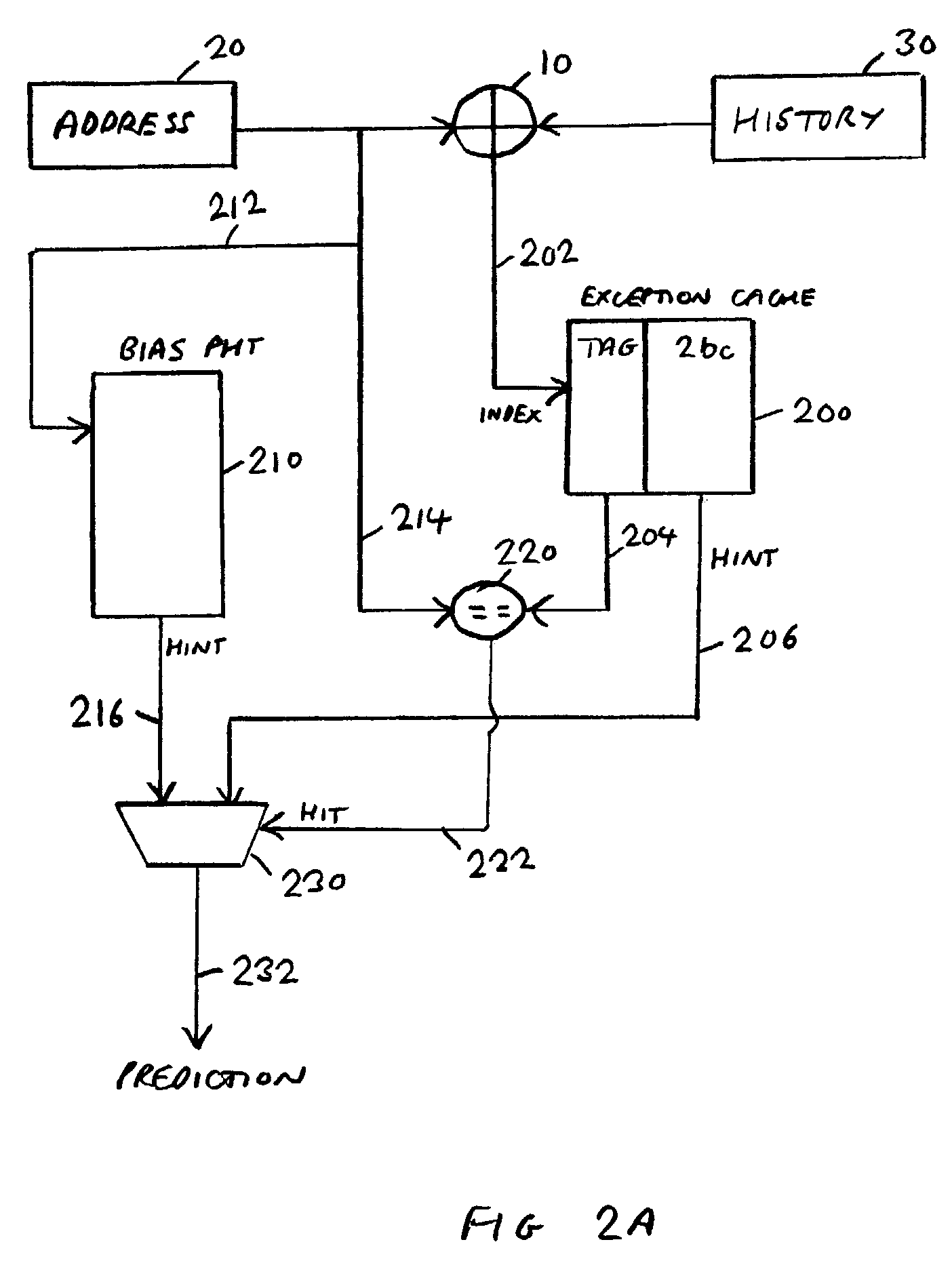

Two-level branch prediction apparatus

Active | US7831817B2 | Reduced resource | Reduce cache size | Digital computer details | Concurrent instruction execution | Parallel computing | Receipt

A two-level branch prediction apparatus includes branch bias logic for predicting whether a branch instruction will result in a branch being taken. Upon receipt of a first address portion of a branch instruction's address, a first store outputs a corresponding first branch bias value. A history store stores history data identifying an actual branch outcome for preceding branch instructions. A second store stores multiple entries, each entry including a replacement branch bias value and a TAG value. An index derived from the history data causes the second store to output a corresponding entry. The first branch bias value is selected unless the TAG value corresponds to a comparison TAG value derived from a second address portion of the branch instruction's address, in which event, the replacement branch bias value is selected.

Owner:ARM LTD
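
The selection logic above can be sketched in Python. Table sizes, the use of 2-bit saturating counters, the bit slices used for the first index and the comparison TAG, and the rule for allocating second-store entries when the first-store bias mispredicts are all assumptions made for illustration.

```python
def _bump(counter: int, taken: bool) -> int:
    """Update a 2-bit saturating counter (0..3; >= 2 means 'taken')."""
    return min(counter + 1, 3) if taken else max(counter - 1, 0)


class TwoLevelPredictor:
    def __init__(self, index_bits: int = 4, history_bits: int = 4, tag_bits: int = 4) -> None:
        self.index_bits = index_bits
        self.index_mask = (1 << index_bits) - 1
        self.history_mask = (1 << history_bits) - 1
        self.tag_mask = (1 << tag_bits) - 1
        self.first = [1] * (1 << index_bits)        # first store: branch bias counters
        self.second = [None] * (1 << history_bits)  # second store: (replacement bias, TAG)
        self.history = 0                            # recent outcomes, newest in bit 0

    def _parts(self, pc: int):
        first_idx = pc & self.index_mask                   # first address portion
        cmp_tag = (pc >> self.index_bits) & self.tag_mask  # second address portion
        second_idx = self.history & self.history_mask      # index derived from history
        return first_idx, cmp_tag, second_idx

    def predict(self, pc: int) -> bool:
        first_idx, cmp_tag, second_idx = self._parts(pc)
        entry = self.second[second_idx]
        if entry is not None and entry[1] == cmp_tag:
            return entry[0] >= 2              # TAG match: replacement bias is selected
        return self.first[first_idx] >= 2     # otherwise: first branch bias is selected

    def update(self, pc: int, taken: bool) -> None:
        first_idx, cmp_tag, second_idx = self._parts(pc)
        entry = self.second[second_idx]
        if entry is not None and entry[1] == cmp_tag:
            self.second[second_idx] = (_bump(entry[0], taken), cmp_tag)
        elif (self.first[first_idx] >= 2) != taken:
            # The first-store bias was wrong here: record an exception entry.
            self.second[second_idx] = (2 if taken else 1, cmp_tag)
        self.first[first_idx] = _bump(self.first[first_idx], taken)
        self.history = ((self.history << 1) | int(taken)) & self.history_mask
```

The second store only needs entries for branches that disagree with their first-store bias, which is why it can be smaller than a full history-indexed table.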

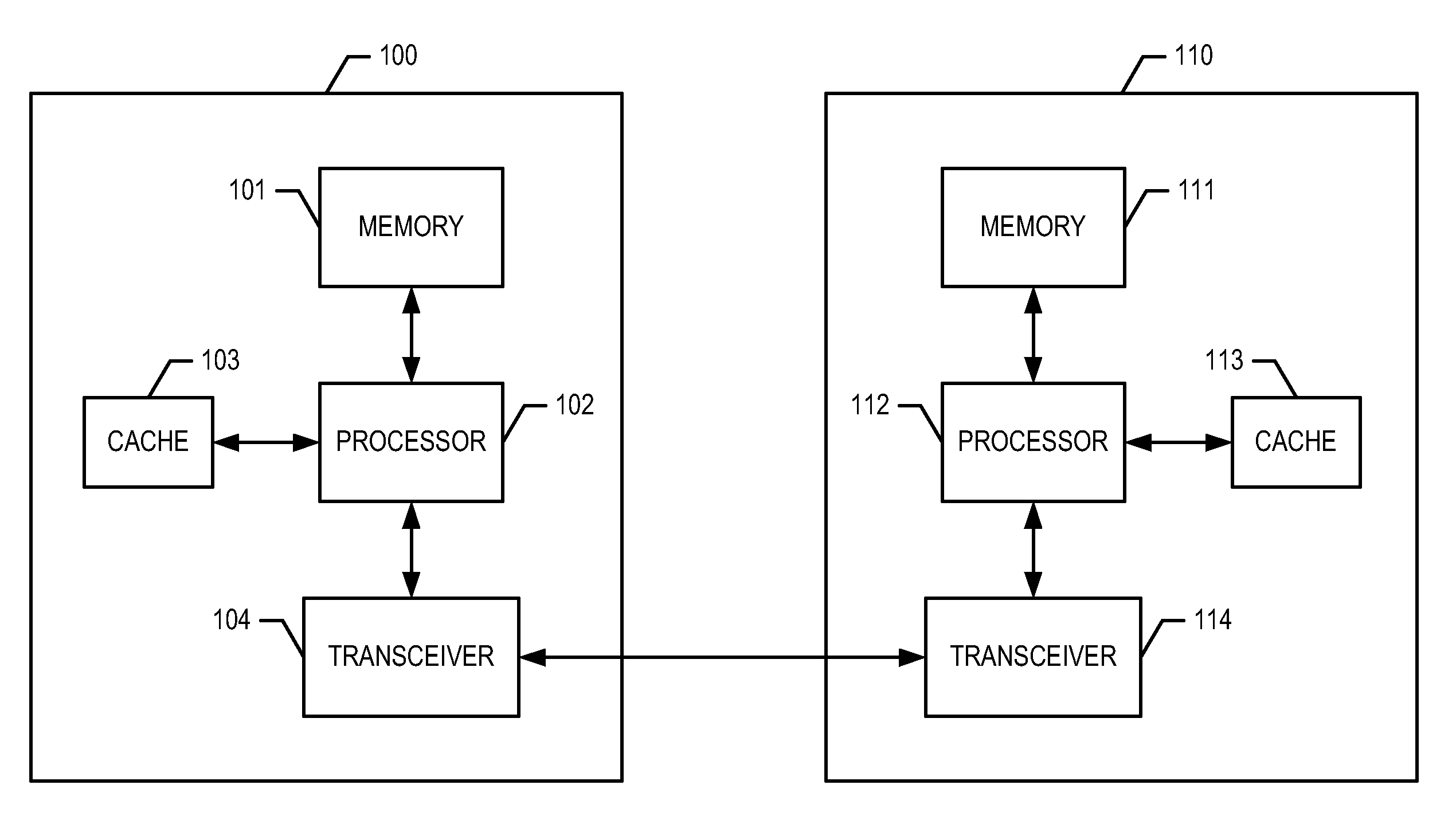

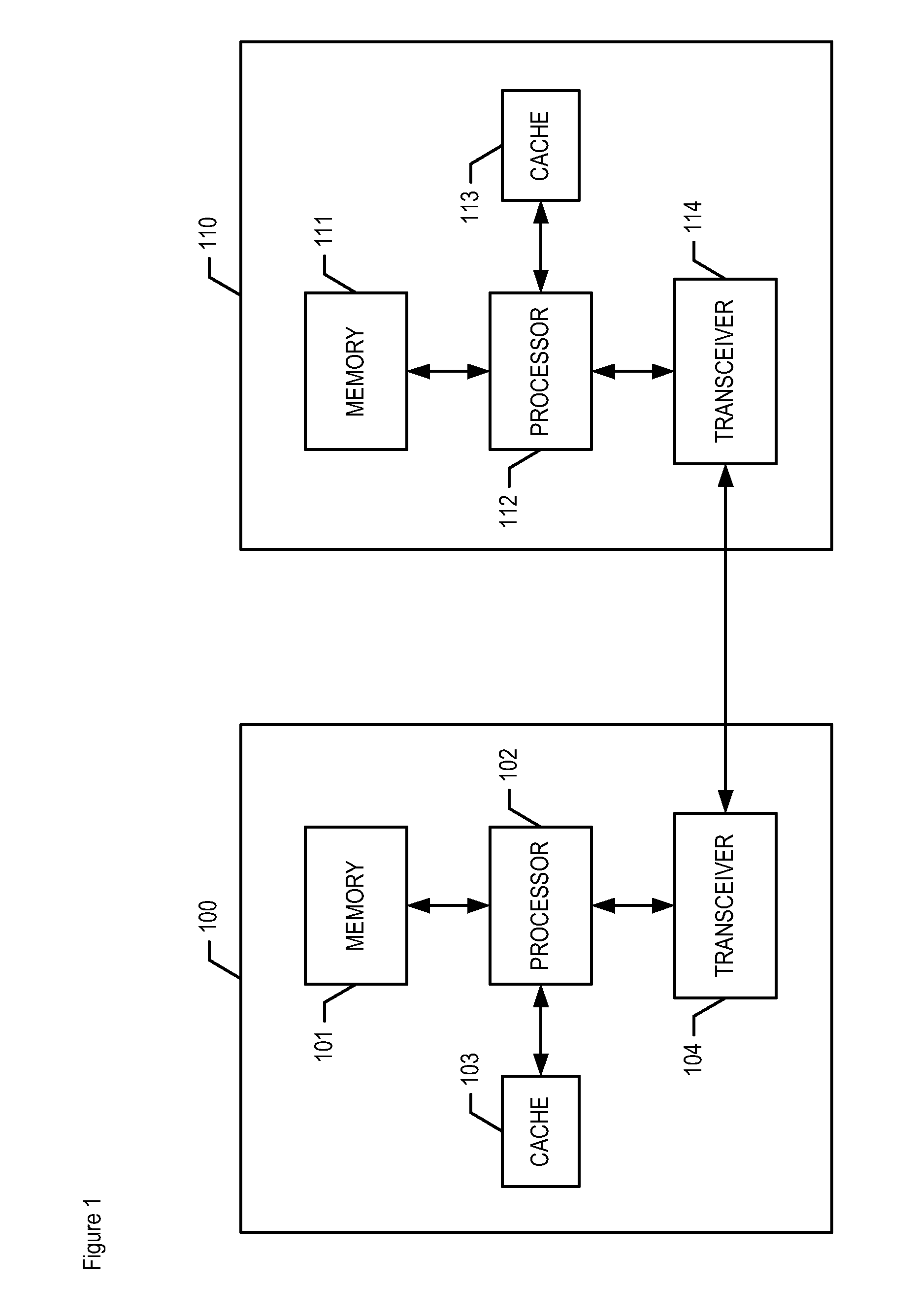

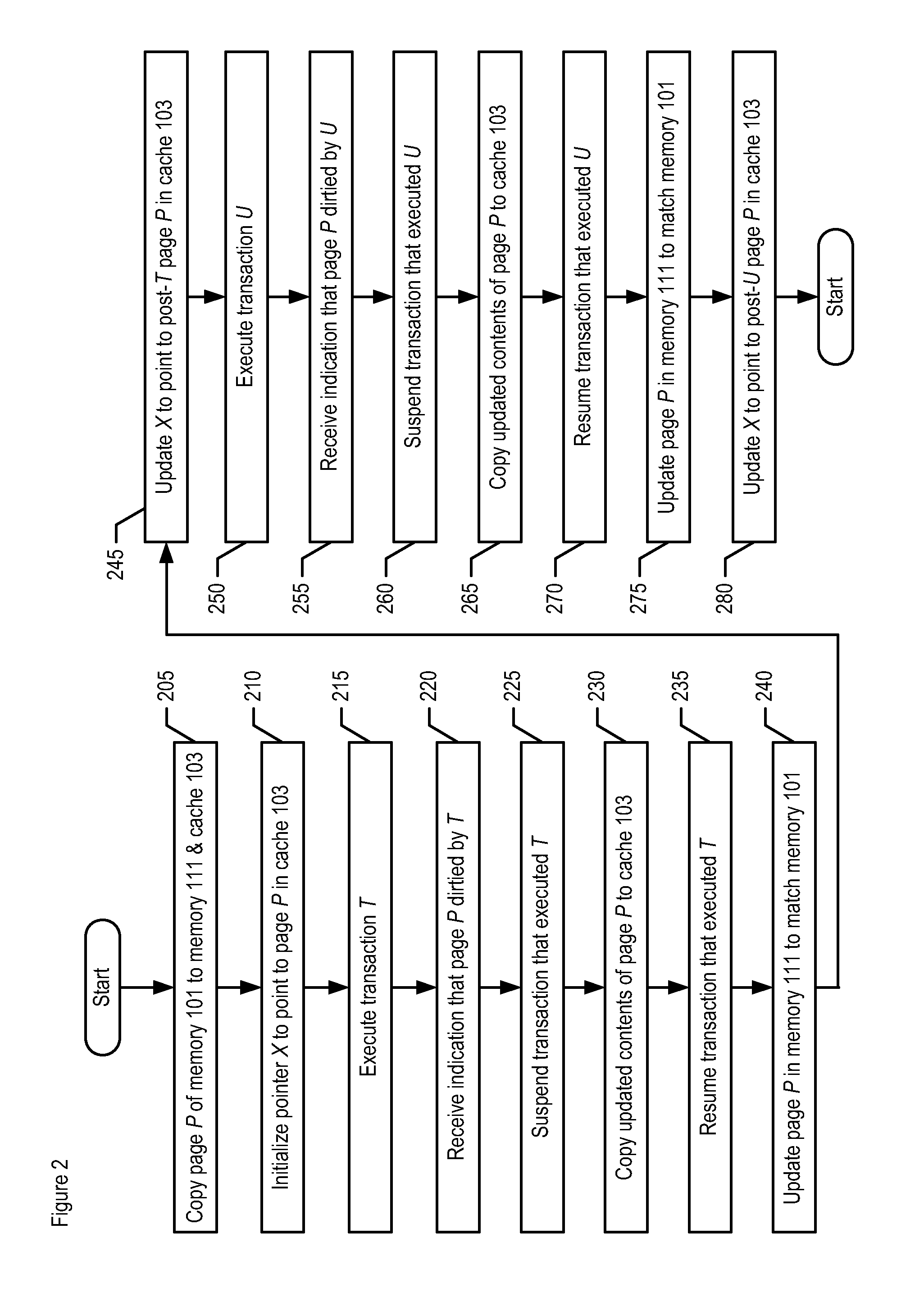

Cache management for increasing performance of high-availability multi-core systems

Active | US8312239B2 | Reduce overhead | Reduce cache size | Error detection/correction | Memory systems | Parallel computing | High availability

An apparatus and method for improving performance in high-availability systems are disclosed. In accordance with the illustrative embodiment, pages of memory of a primary system that are to be shadowed are initially copied to a backup system's memory, as well as to a cache in the primary system. A duplication manager process maintains the cache in an intelligent manner that significantly reduces the overhead required to keep the backup system in sync with the primary system, as well as the cache size needed to achieve a given level of performance. Advantageously, the duplication manager is executed on a different processor core than the application process executing transactions, further improving performance.

Owner:AVAYA INC

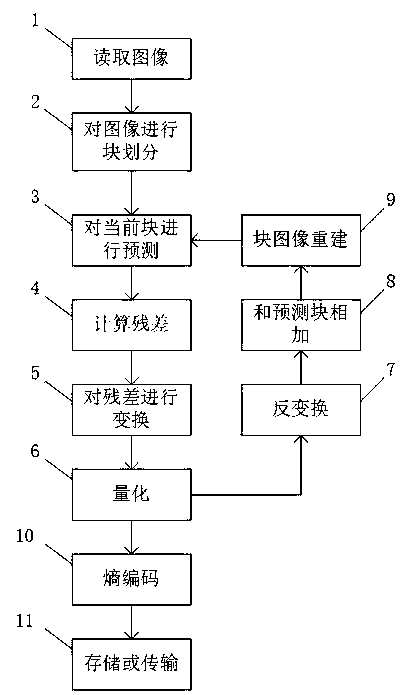

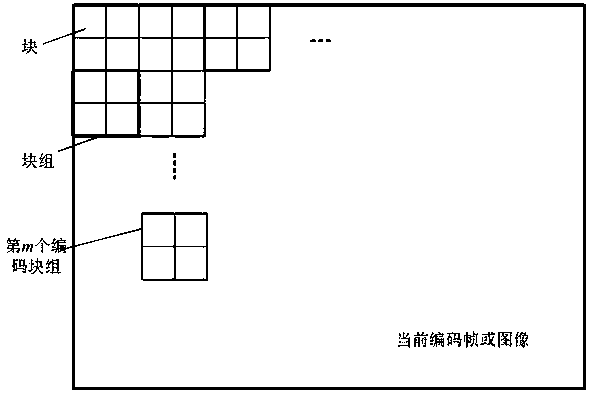

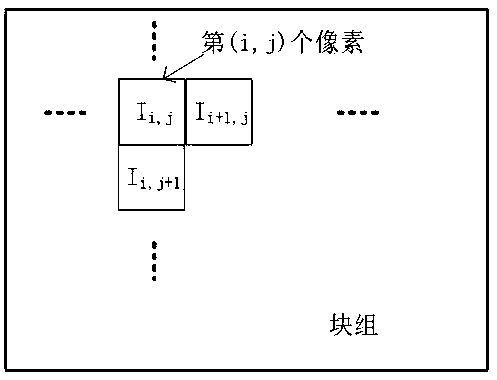

Rate control method for intra-frame coding

Inactive | CN104038769A | Reduce cache size | Precise rate control | Digital video signal modification | Digital image | Intra-frame

The invention discloses a precise rate control method for intra-frame coding in the technical field of digital image and video coding. The method comprises the following steps: dividing a frame image into block groups; describing the complexity of each block group by the sum of absolute gradients between adjacent pixels in the group; and determining the quantization step of each block group using an index model of the ratio of coded data amount to complexity. Rate control is thus performed at the block-group level, which achieves precise control of the bit rate.

Owner:TONGJI UNIV
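
The complexity measure can be computed as below. The sketch assumes block groups are horizontal strips of rows and counts both horizontal and vertical neighbours; the quantization-step function uses an assumed power-law form `R / C = a * Q**(-b)`, with hypothetical parameters `a` and `b`, as a stand-in for the index model, whose exact form the abstract does not give.

```python
from typing import List


def group_complexity(frame: List[List[int]], rows_per_group: int) -> List[int]:
    """Sum of |differences| between adjacent pixels inside each block group."""
    complexities = []
    for top in range(0, len(frame), rows_per_group):
        rows = frame[top:top + rows_per_group]  # one block group (assumed: a strip of rows)
        total = 0
        for r, row in enumerate(rows):
            for c, pixel in enumerate(row):
                if c + 1 < len(row):
                    total += abs(row[c + 1] - pixel)      # horizontal neighbour
                if r + 1 < len(rows):
                    total += abs(rows[r + 1][c] - pixel)  # vertical neighbour
        complexities.append(total)
    return complexities


def qstep_for_target(complexity: float, target_bits: float,
                     a: float = 1.0, b: float = 1.0) -> float:
    """Invert the assumed model R / C = a * Q**(-b) to get Q for a bit budget R."""
    return (a * complexity / target_bits) ** (1.0 / b)
```

A flat block group has complexity 0 and can take a coarse quantization step; textured groups get finer steps for the same bit budget.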

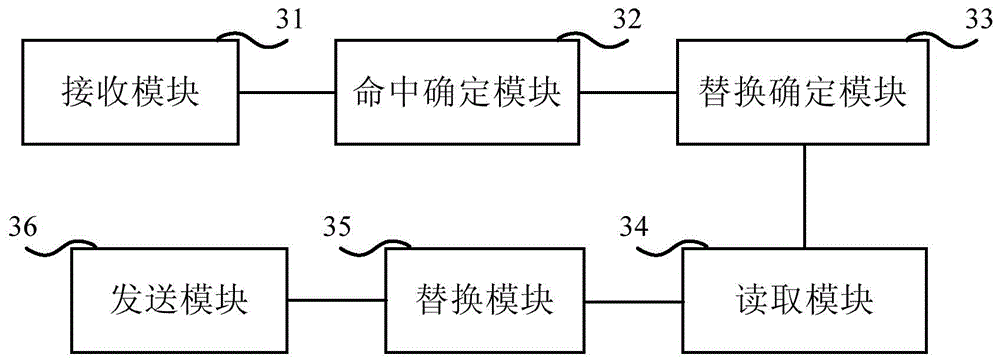

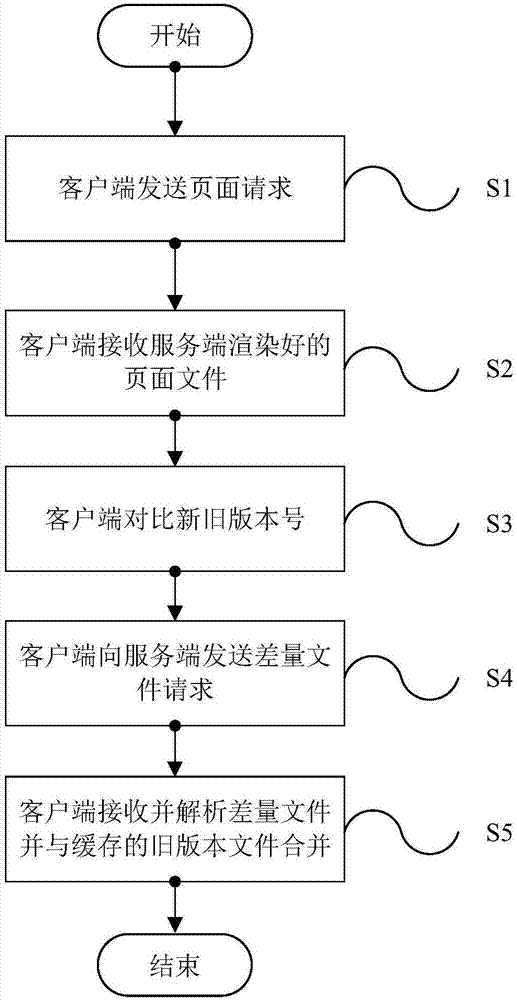

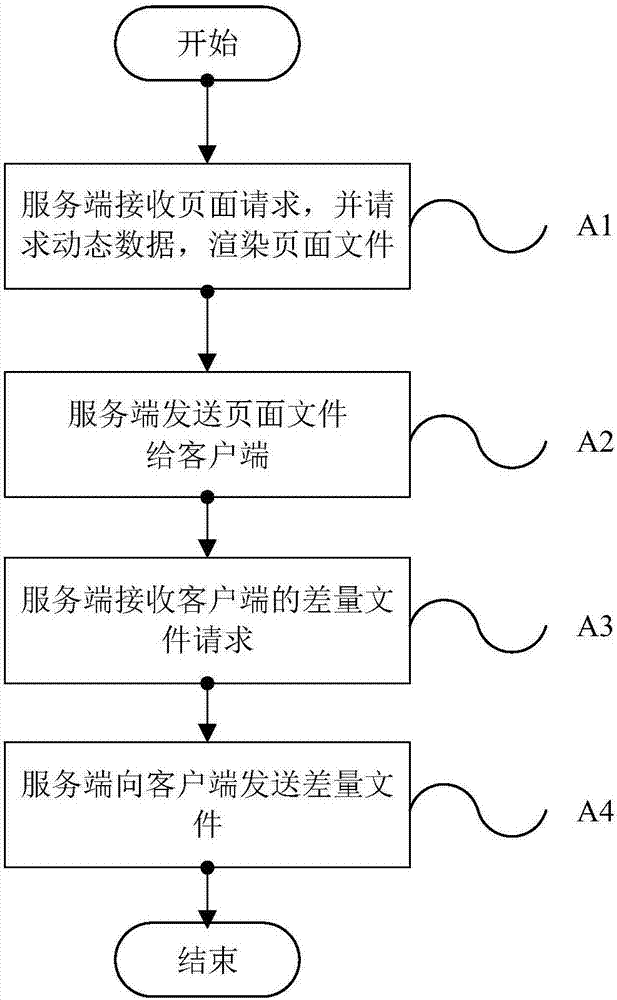

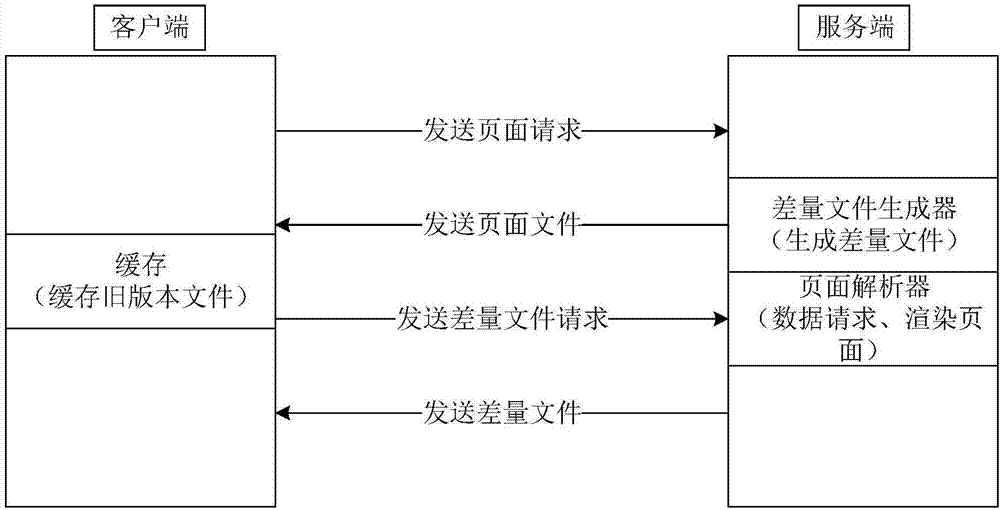

Mobile Web request processing method and system based on data de-duplication, and equipment

Active | CN107196998A | Reduce transfer volume | Reduce cache size | Transmission | Special data processing applications | Duplicate content | Mobile web applications

The invention discloses a mobile Web request processing method, system, and equipment based on data de-duplication, in the technical field of mobile Web applications and data processing. In mobile Web, when a client updates a small number of cached files, most of the content transmitted over the network is unnecessary duplicate data, which degrades Web performance. To address this, the method applies a data de-duplication mechanism to mobile Web requests: for an updated file, the server removes the data that duplicates the client's cached file from the new version, generates a difference file, and returns only the difference file to the client. The client then restores the new version from the difference file and its cached file, reducing the volume of data transmitted over the network. The method also processes difference-file analysis and server-side rendering asynchronously, which relieves load on the client, reduces the number of network requests for dynamic data, and improves overall Web performance.

Owner:HUAZHONG UNIV OF SCI & TECH
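
The difference-file idea can be sketched with Python's standard `difflib`: the server describes the new version as line ranges copied from the client's cached file plus literal new lines, and the client rebuilds the file from that delta. Line granularity and the delta format are assumptions; the patent does not specify them.

```python
from difflib import SequenceMatcher
from typing import List, Tuple


def make_delta(cached: List[str], new: List[str]) -> List[Tuple]:
    """Server side: express `new` as copies from the cached file plus inserts."""
    delta: List[Tuple] = []
    matcher = SequenceMatcher(a=cached, b=new, autojunk=False)
    for op, i1, i2, j1, j2 in matcher.get_opcodes():
        if op == "equal":
            delta.append(("copy", i1, i2))        # client already holds these lines
        elif j2 > j1:                             # 'replace' or 'insert'
            delta.append(("insert", new[j1:j2]))  # only new content is transmitted
        # 'delete' needs nothing: the client simply does not copy those lines
    return delta


def apply_delta(cached: List[str], delta: List[Tuple]) -> List[str]:
    """Client side: restore the new version from the cached file and the delta."""
    out: List[str] = []
    for item in delta:
        if item[0] == "copy":
            out.extend(cached[item[1]:item[2]])
        else:
            out.extend(item[1])
    return out
```

Only the changed lines travel over the network; unchanged lines are referenced by position in the client's cached copy.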

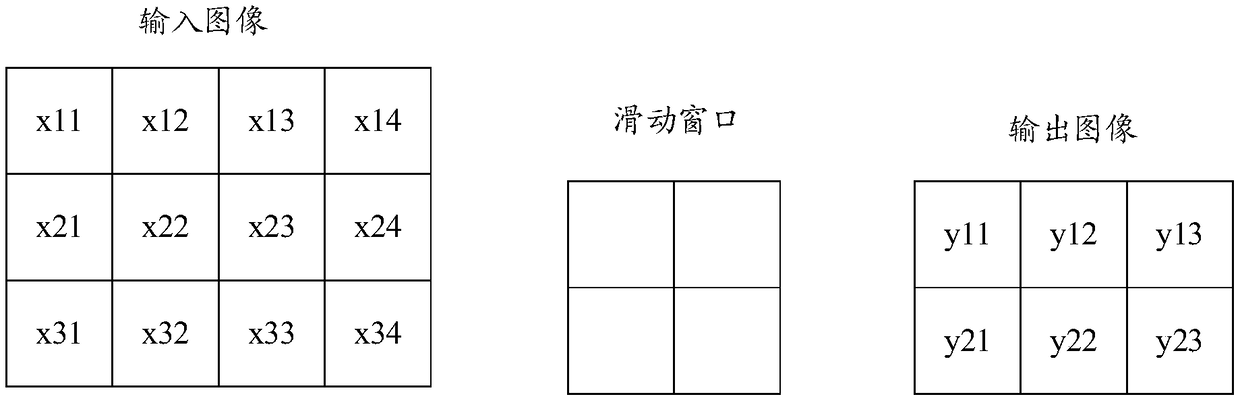

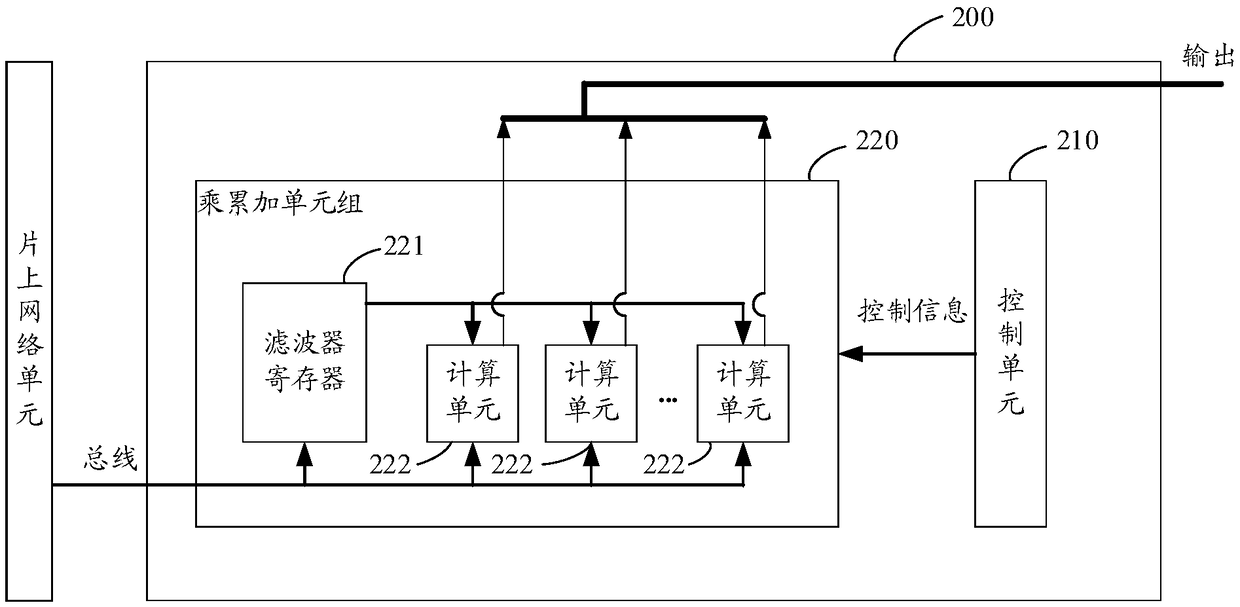

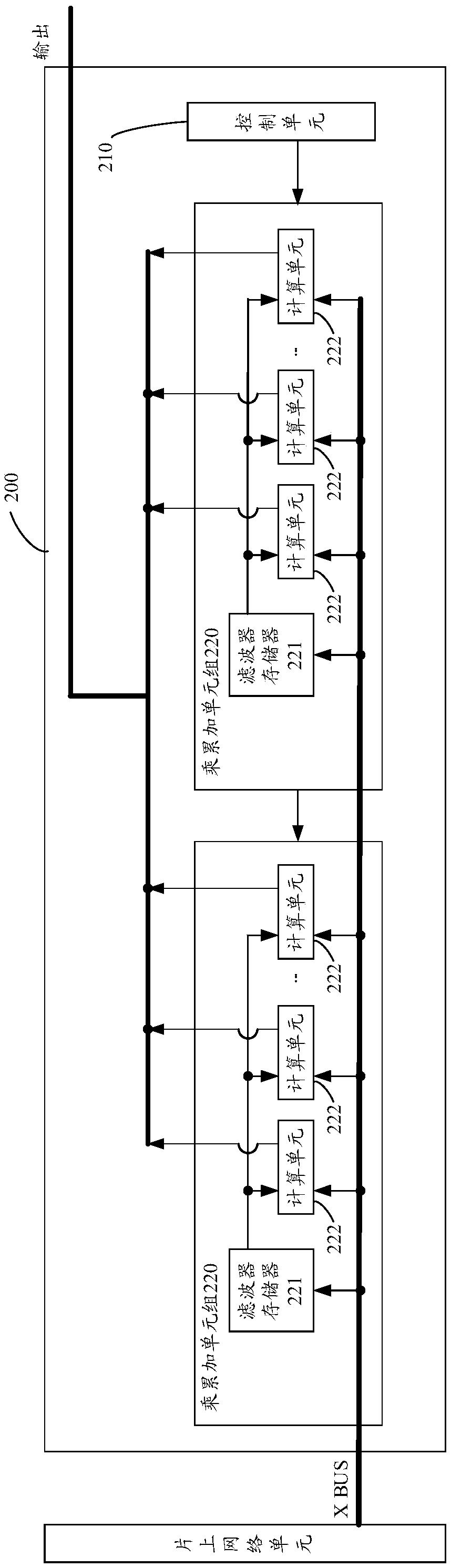

Arithmetic device for neural network, chip, equipment and related method

Inactive | CN108701015A | Reduce volume | Improve energy efficiency ratio | Computation using non-contact making devices | Neural architectures | Processor register | Control unit

Provided are an arithmetic device for a neural network, a chip, and equipment. The arithmetic device includes a control unit and a multiply-accumulate unit; the multiply-accumulate unit includes a filter register and a plurality of calculation units, and the filter register is connected to the calculation units. The control unit generates control information and sends it to the calculation units; the filter register caches the filter weight values to be used in multiply-accumulate operations; and each calculation unit caches the input feature values to be used in multiply-accumulate operations and performs multiply-accumulate operations on the filter weight values and the input feature values according to the received control information. Because all the calculation units are controlled by one control unit, the design complexity of the control unit is lowered; and because one filter register is shared by the calculation units, the required cache size is reduced.

Owner:SZ DJI TECH CO LTD
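
A minimal sketch of one shared filter register feeding several calculation units. The class name, the integer arithmetic, and the storage-count helper are illustrative assumptions.

```python
from typing import List


class MacUnitArray:
    def __init__(self, filter_weights: List[int], num_units: int) -> None:
        self.filter_register = list(filter_weights)  # one copy, shared by all units
        self.unit_inputs: List[List[int]] = [[] for _ in range(num_units)]

    def load_inputs(self, unit: int, features: List[int]) -> None:
        """Each calculation unit caches its own input feature values."""
        if len(features) != len(self.filter_register):
            raise ValueError("feature count must match filter length")
        self.unit_inputs[unit] = list(features)

    def step(self) -> List[int]:
        """One control signal: every unit multiply-accumulates against the shared filter."""
        return [sum(w * x for w, x in zip(self.filter_register, feats))
                for feats in self.unit_inputs]

    def weight_storage(self, shared: bool = True) -> int:
        """Weight words needed with one shared register vs. one copy per unit."""
        n = len(self.filter_register)
        return n if shared else n * len(self.unit_inputs)
```

With a 3-tap filter and two units, sharing the register stores 3 weight words instead of 6.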

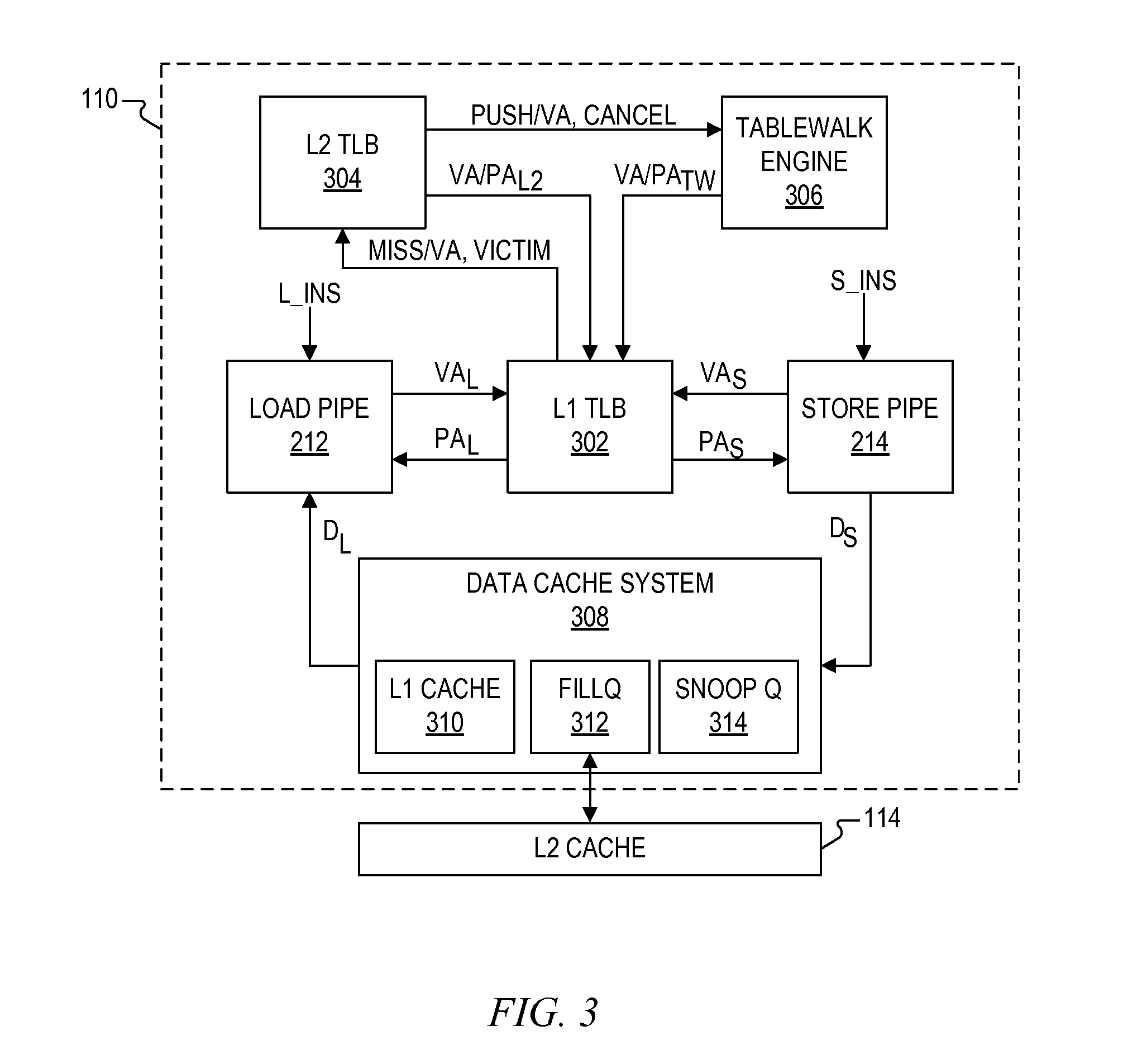

Cache system with a primary cache and an overflow cache that use different indexing schemes

Pending | US20160170884A1 | Improve utility | Improve cache utilization | Memory architecture accessing/allocation | Memory addressing/allocation/relocation | Hash table | Memory systems

A cache memory system including a primary cache and an overflow cache that are searched together using a search address. The overflow cache operates as an eviction array for the primary cache. The primary cache is addressed using bits of the search address, and the overflow cache is addressed by a hash index generated by a hash function applied to bits of the search address. The hash function operates to distribute victims evicted from the primary cache to different sets of the overflow cache to improve overall cache utilization. A hash generator may be included to perform the hash function. A hash table may be included to store hash indexes of valid entries in the primary cache. The cache memory system may be used to implement a translation lookaside buffer for a microprocessor.

Owner:VIA ALLIANCE SEMICON CO LTD
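
The primary/overflow arrangement can be sketched as below. Set counts, LRU replacement within each set, and the XOR-fold hash are assumptions; the abstract only says the overflow cache is indexed by a hash of search-address bits.

```python
from collections import OrderedDict


class PrimaryOverflowCache:
    def __init__(self, sets: int = 4, ways: int = 2,
                 overflow_sets: int = 4, overflow_ways: int = 2) -> None:
        self.sets, self.ways = sets, ways
        self.overflow_sets, self.overflow_ways = overflow_sets, overflow_ways
        self.primary = [OrderedDict() for _ in range(sets)]
        self.overflow = [OrderedDict() for _ in range(overflow_sets)]

    def _primary_index(self, addr: int) -> int:
        return addr % self.sets  # plain low-order address bits

    def _overflow_index(self, addr: int) -> int:
        # Hypothetical hash: fold higher address bits into the index.
        return (addr ^ (addr >> 2) ^ (addr >> 5)) % self.overflow_sets

    def lookup(self, addr: int):
        """Search both caches with the same address."""
        p = self.primary[self._primary_index(addr)]
        if addr in p:
            p.move_to_end(addr)  # refresh LRU position
            return p[addr]
        return self.overflow[self._overflow_index(addr)].get(addr)

    def insert(self, addr: int, value) -> None:
        self.overflow[self._overflow_index(addr)].pop(addr, None)  # avoid duplicates
        p = self.primary[self._primary_index(addr)]
        p[addr] = value
        p.move_to_end(addr)
        if len(p) > self.ways:
            victim, vval = p.popitem(last=False)  # LRU victim of the primary cache
            o = self.overflow[self._overflow_index(victim)]
            o[victim] = vval                      # overflow acts as an eviction array
            if len(o) > self.overflow_ways:
                o.popitem(last=False)
```

Addresses 0, 4, and 8 all collide in primary set 0, but the hash spreads their victims across different overflow sets.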

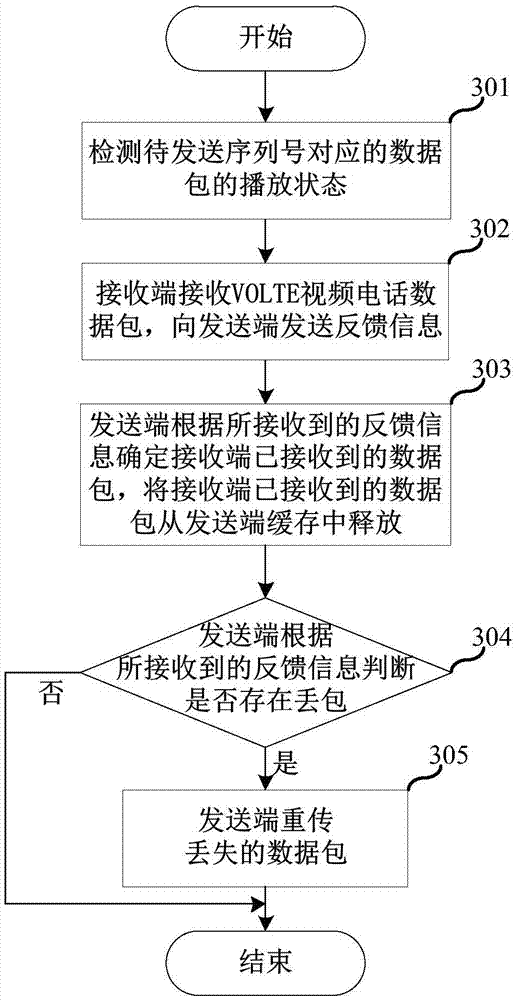

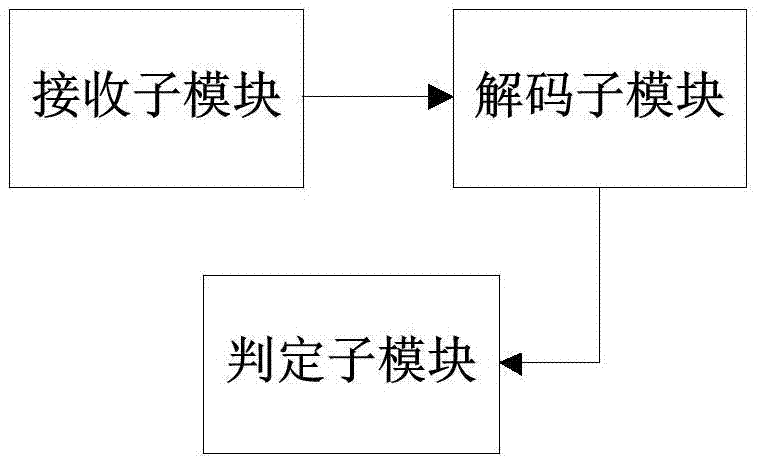

Transmission method and system for VOLTE video call

Active | CN106899380A | Reliable Fast Retransmit | Avoid burden | Error prevention/detection by using return channel | Transmitted data organisation to avoid errors | Packet loss | Real-time computing

The invention relates to the field of information technology and discloses a transmission method and system for a VoLTE video call. The method comprises the following steps: a receiving end receives data packets of the VoLTE video call and sends feedback information, namely the reception state of the data packets, to the sending end; the sending end determines from the feedback information whether packet loss has occurred; and, if so, it retransmits the lost data packets. With this method the sending end can detect packet loss and, when loss occurs, retransmit data reliably and quickly, reducing overall delay and system overhead.

Owner:LEADCORE TECH +1
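
The feedback and retransmission loop can be sketched as follows. Representing the reception state as a per-sequence-number map is an assumption; sequence-number wraparound and retransmission timers are not modeled.

```python
from typing import Dict, List, Set, Tuple


def build_feedback(received: Set[int], first_seq: int, last_seq: int) -> Dict[int, bool]:
    """Receiver side: reception state of every packet in the window."""
    return {seq: seq in received for seq in range(first_seq, last_seq + 1)}


class Sender:
    def __init__(self) -> None:
        self.sent: Dict[int, bytes] = {}  # retained copies for retransmission

    def send(self, seq: int, payload: bytes) -> None:
        self.sent[seq] = payload

    def on_feedback(self, feedback: Dict[int, bool]) -> List[Tuple[int, bytes]]:
        """Judge packet loss from the feedback and return the packets to resend."""
        lost = sorted(seq for seq, ok in feedback.items() if not ok)
        return [(seq, self.sent[seq]) for seq in lost]
```

Only the packets the receiver reports as missing are resent, rather than the whole window.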

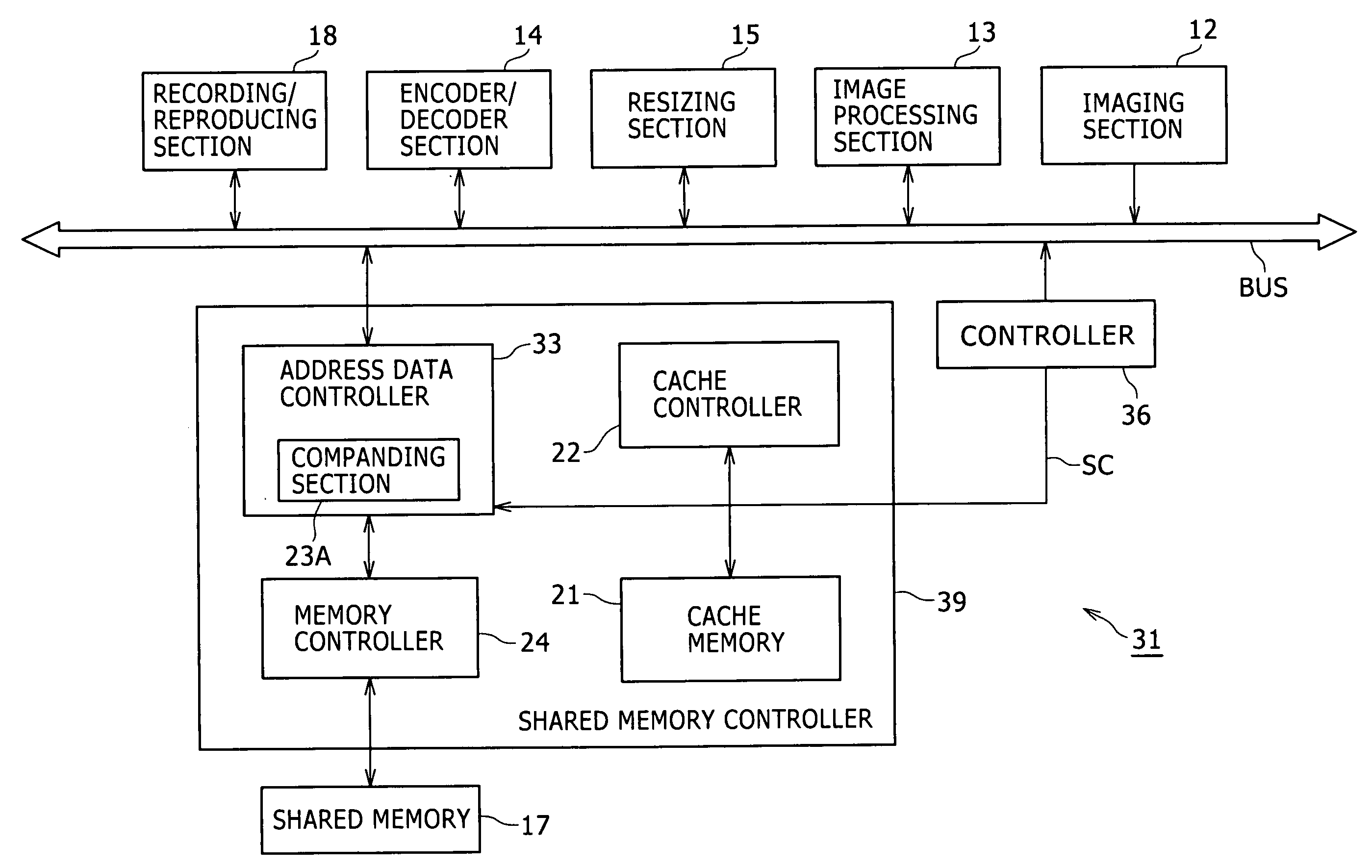

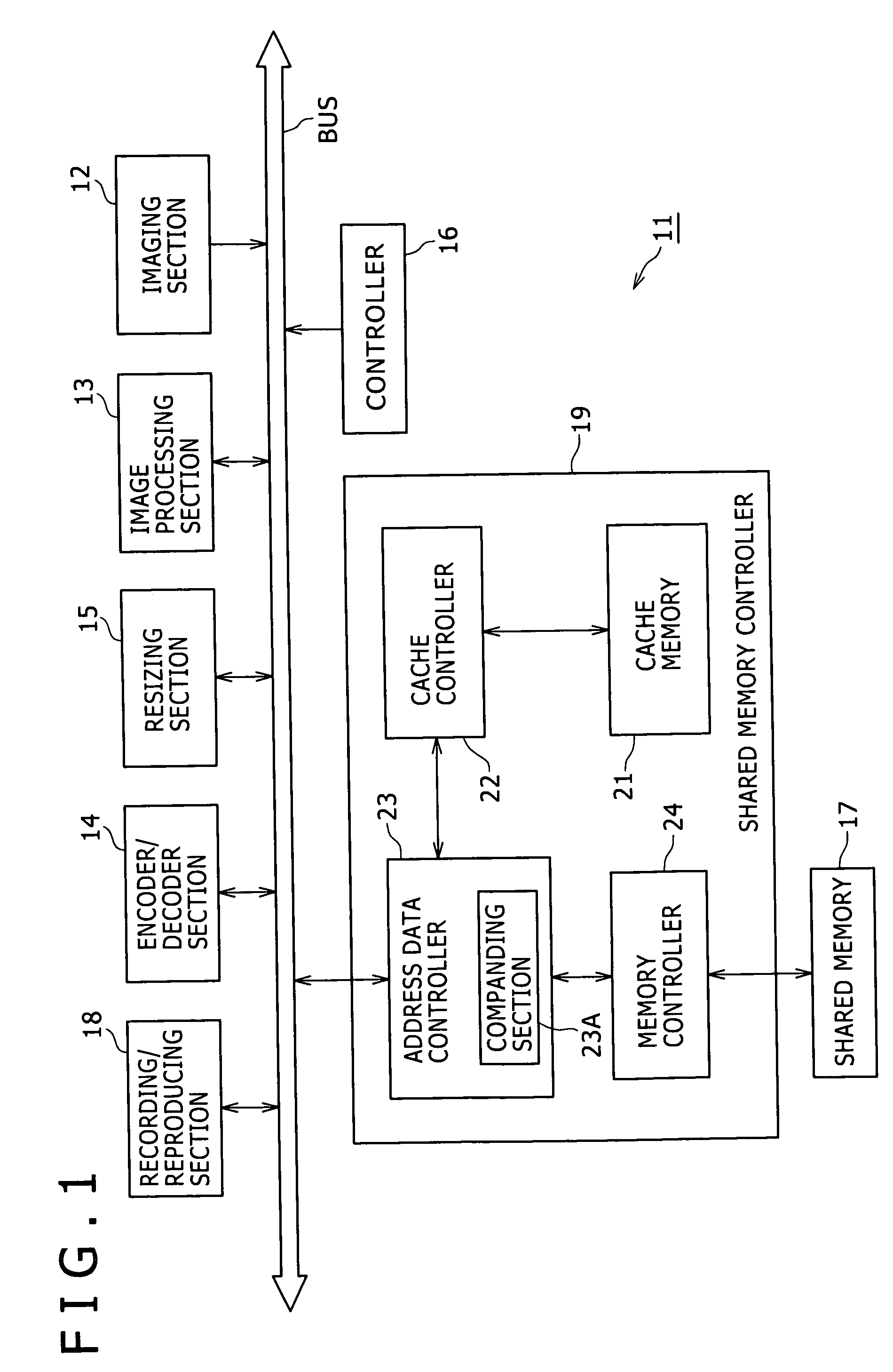

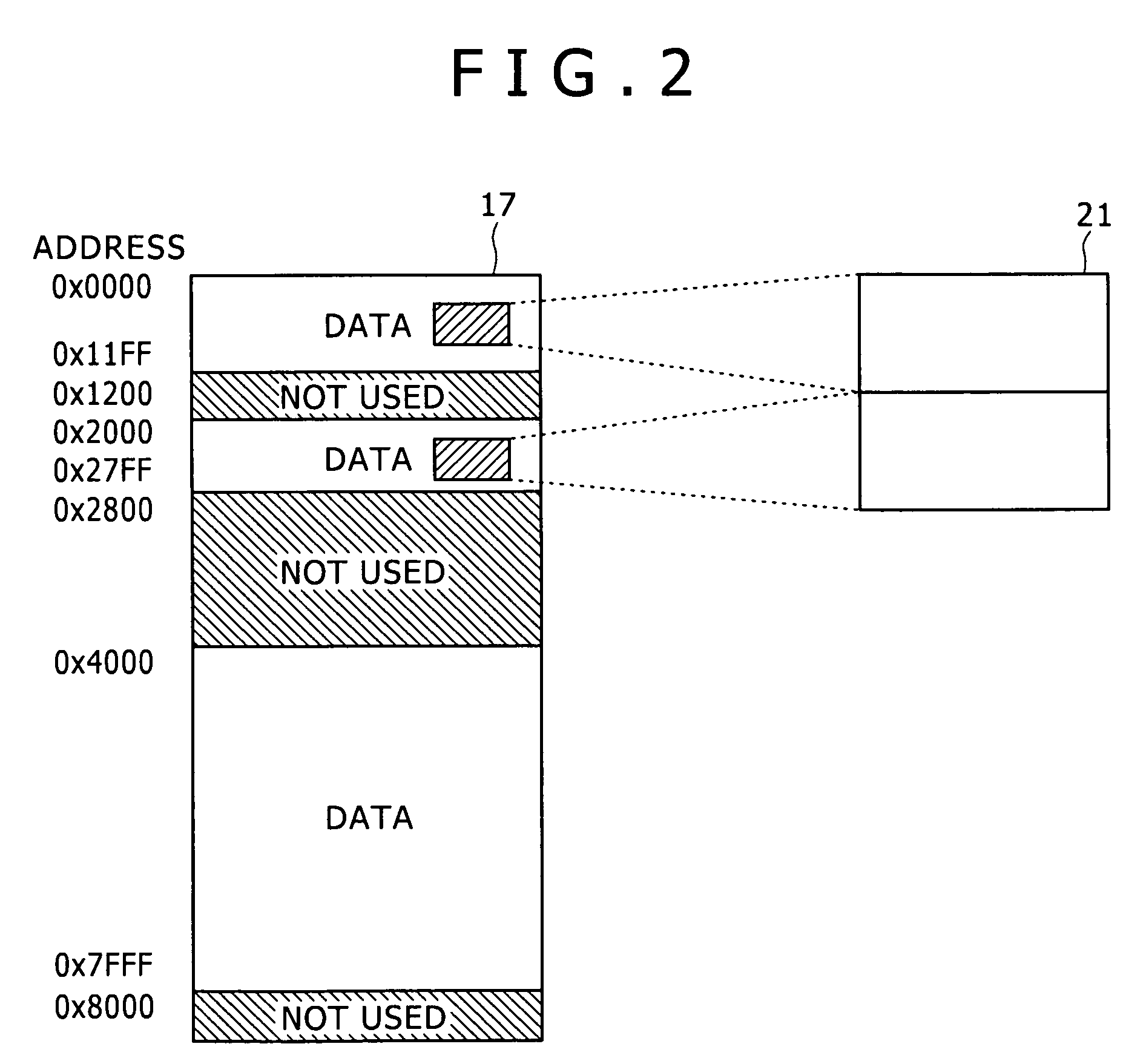

Data processing apparatus and shared memory accessing method

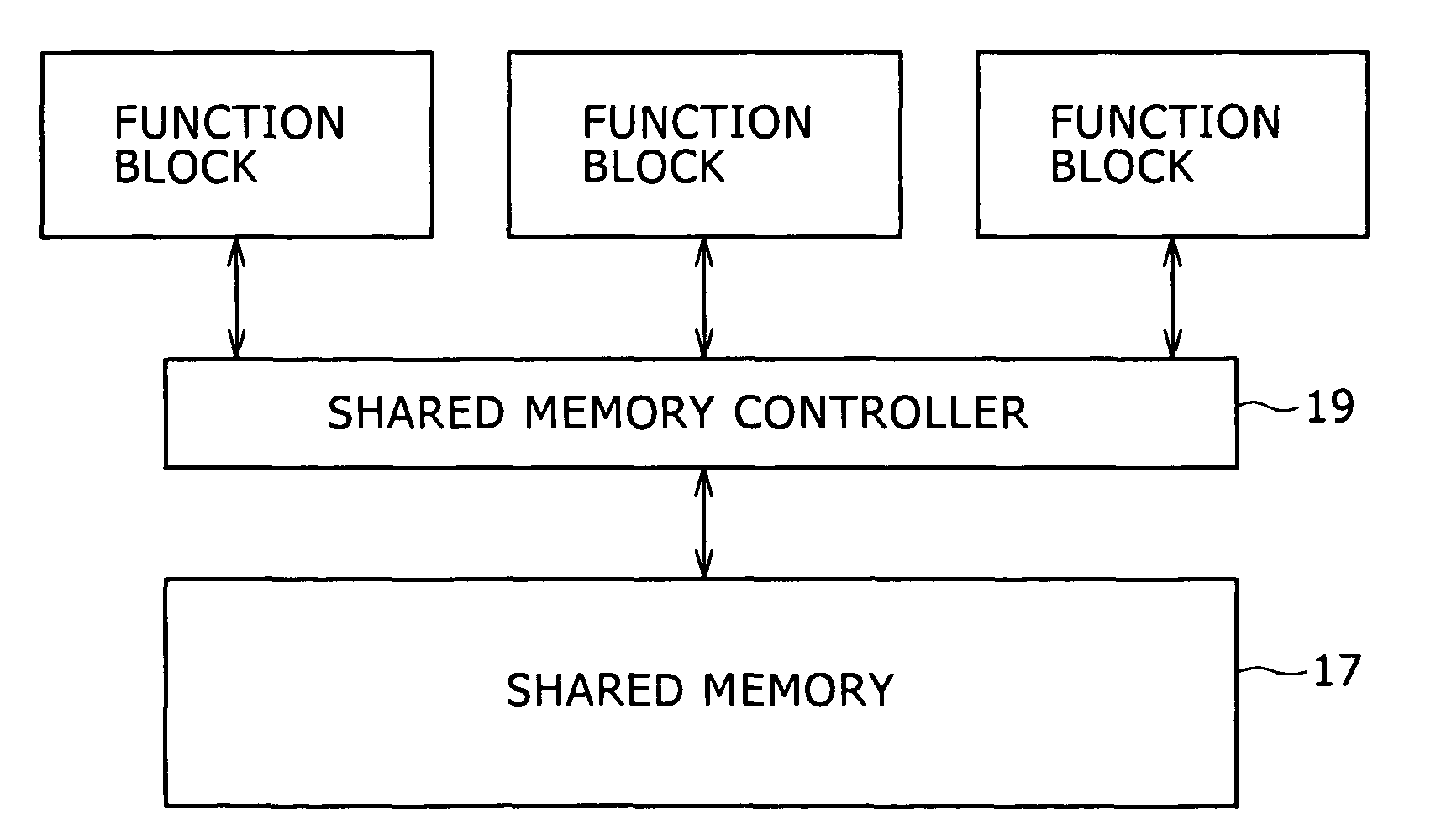

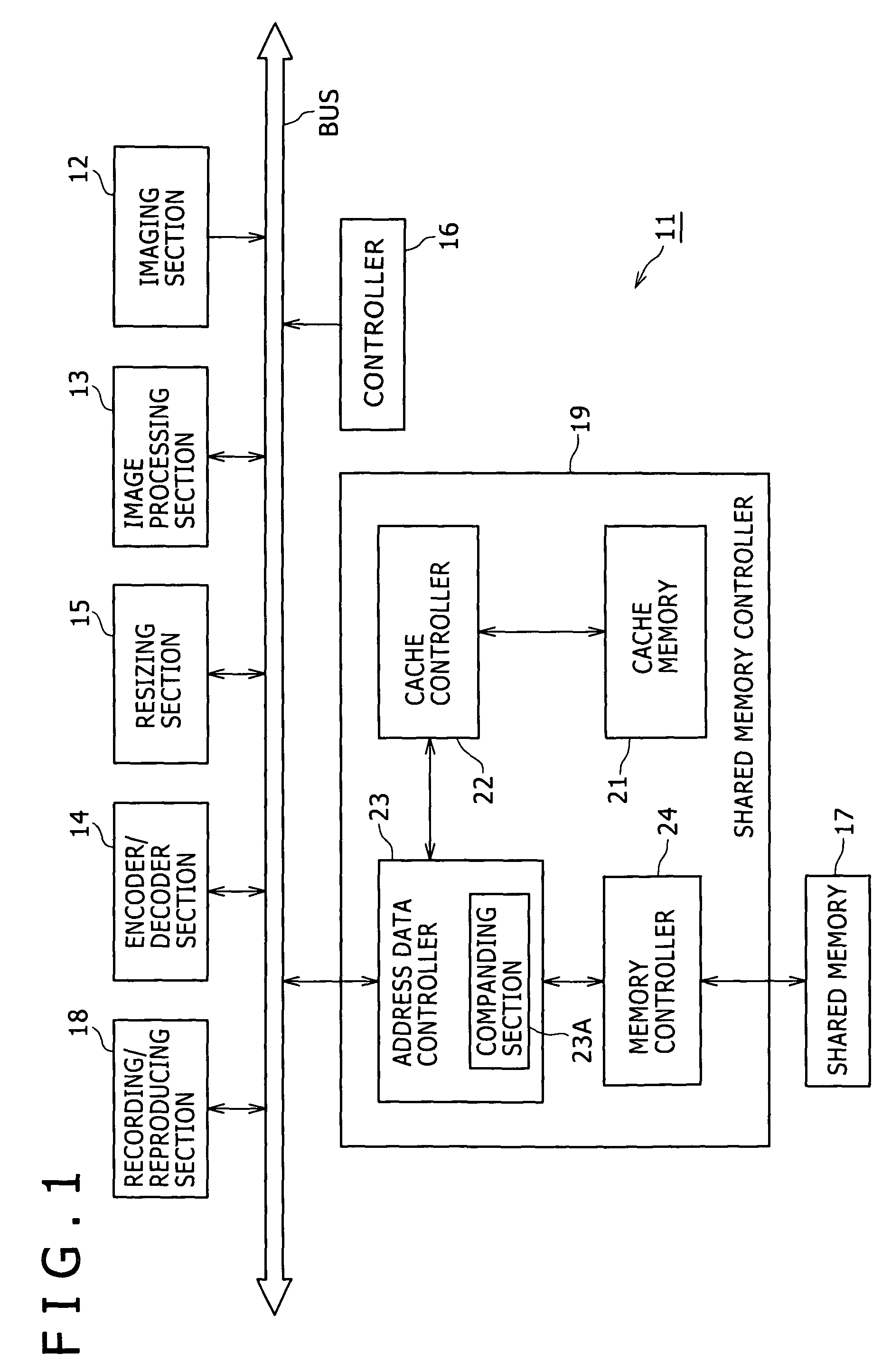

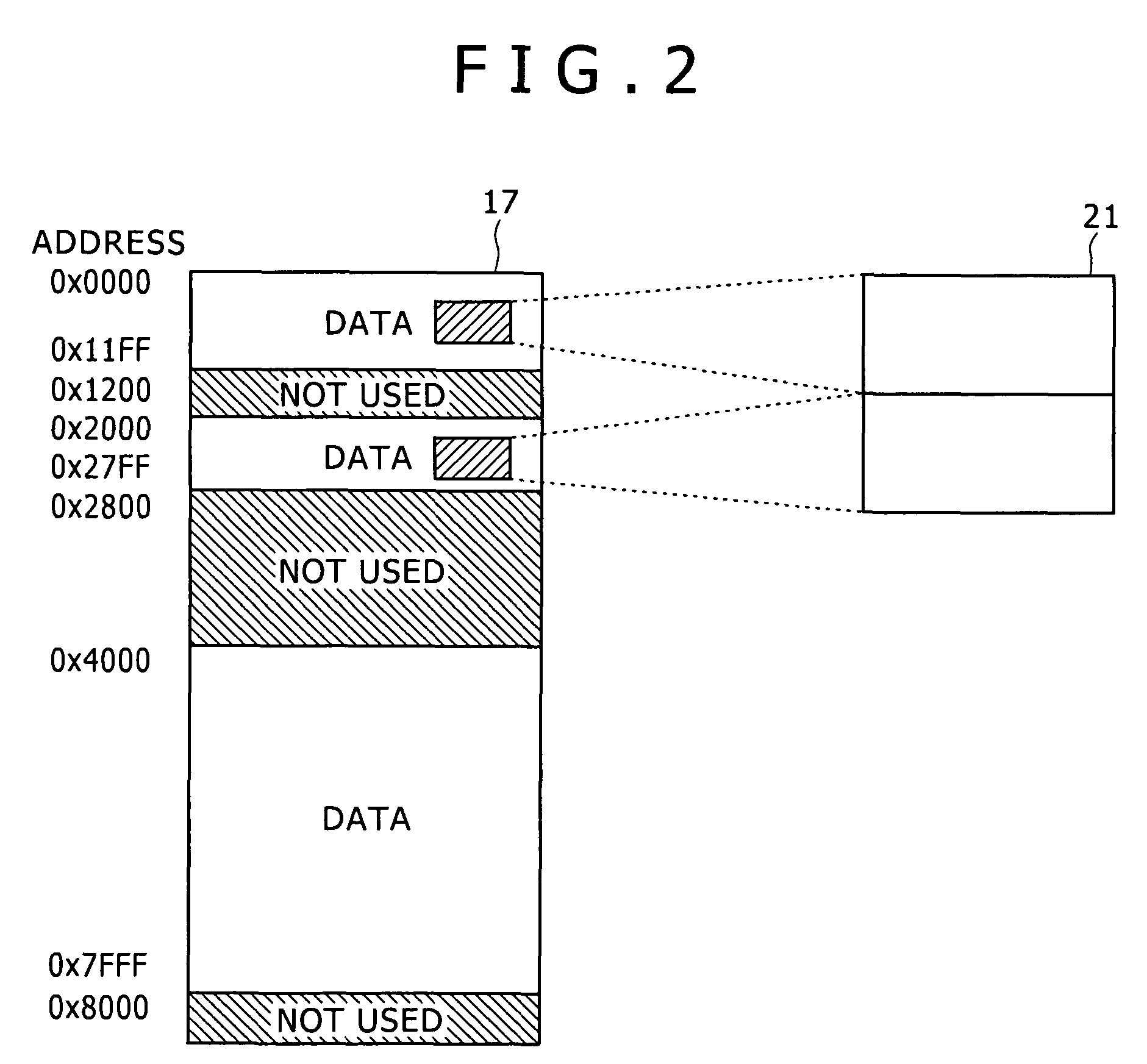

Inactive | US20090100225A1 | Efficient cache memory utilization | Reduce cache memory size | Memory architecture accessing/allocation | Memory addressing/allocation/relocation | Access method | Memory controller

The present invention provides a data processing apparatus for processing data by causing a plurality of function blocks to share a single shared memory, the data processing apparatus including: a memory controller configured to cause the plurality of function blocks to write and read data to and from the shared memory in response to requests from any one of the function blocks; a cache memory; and a companding section configured to compress data to be written to the cache memory and to expand data read from it.

Owner:SONY CORP
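
A sketch of a cache that stores compressed lines and expands them on read. `zlib` is a stand-in for the unspecified companding scheme, and the write-through policy and class name are assumptions.

```python
import zlib
from typing import Dict


class CompandingCache:
    def __init__(self, shared_memory: Dict[int, bytes]) -> None:
        self.shared_memory = shared_memory
        self.cache: Dict[int, bytes] = {}  # holds compressed lines only

    def write(self, addr: int, data: bytes) -> None:
        self.shared_memory[addr] = data          # write-through (assumption)
        self.cache[addr] = zlib.compress(data)   # compress on the way into the cache

    def read(self, addr: int) -> bytes:
        if addr in self.cache:
            return zlib.decompress(self.cache[addr])  # expand on the way out
        data = self.shared_memory[addr]               # miss: fetch from shared memory
        self.cache[addr] = zlib.compress(data)
        return data

    def cached_bytes(self) -> int:
        """Cache capacity actually consumed by the compressed lines."""
        return sum(len(v) for v in self.cache.values())
```

For compressible data such as flat image regions, the cache holds far fewer bytes than the data it represents.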

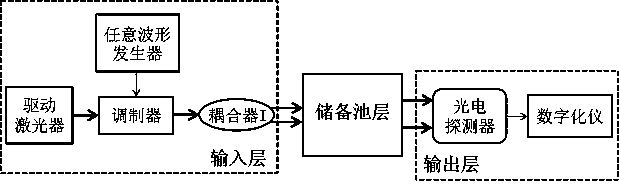

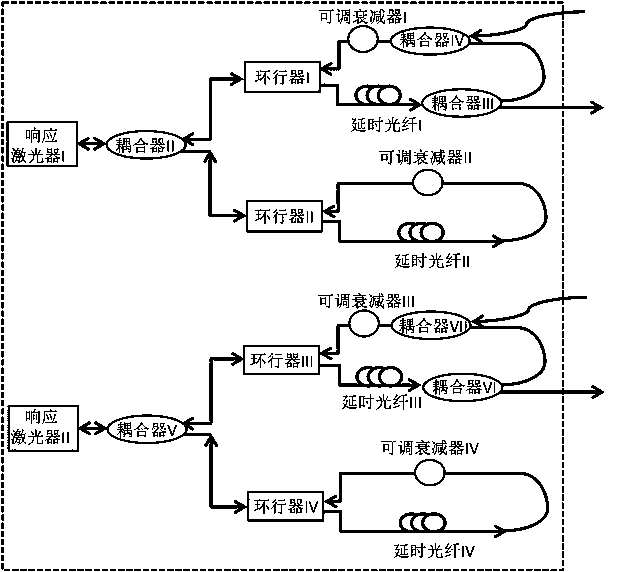

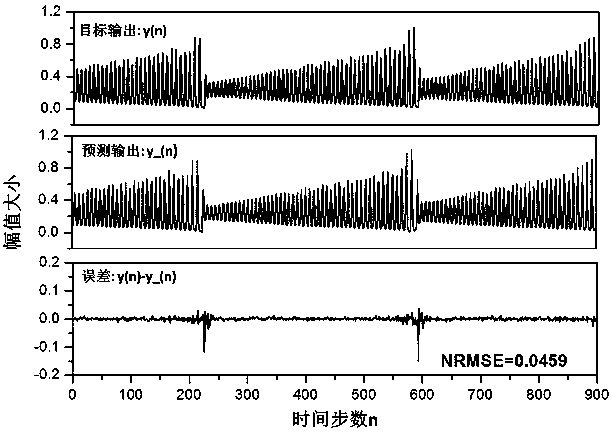

Parallel dual optical feedback semiconductor laser reservoir computing system

Active | CN110247299A | Reduce cache size | Low cost | Laser details | Laser output parameters control | Fiber | Photovoltaic detectors

The invention discloses a parallel dual optical feedback semiconductor laser reservoir computing system which comprises an input layer, a reservoir layer and an output layer. The input layer includes a driving laser, an arbitrary waveform generator, a modulator, and a coupler I and is used for dividing an input signal into two parts to be input into the reservoir layer. The reservoir layer consists of two response lasers with dual optical feedback loops and is used for generating a rich nonlinear dynamic response. Each optical feedback loop is composed of a circulator, a delay fiber, and an adjustable attenuator. Each response laser has a feedback loop and two couplers and is used for receiving the input signal and outputting a portion of light to the output layer. The output layer includes a pair of photoelectric detectors and a dual-channel digitizer to obtain the response status of the reservoir. The parallel dual optical feedback semiconductor laser reservoir computing system can reduce the number of virtual nodes of the feedback loop receiving the input signal and the period of a mask signal without degrading the performance, thereby reducing the requirement of the reservoir for the cache size of the arbitrary waveform generator.

Owner:SHANGHAI UNIV

Cache memory storage

Active | US8990396B2 | Reduce access | Reduce cache size | Multiplex system selection arrangements | Special service provision for substation | Data stream | Data transmission

Owner:AVAYA COMM ISRAEL

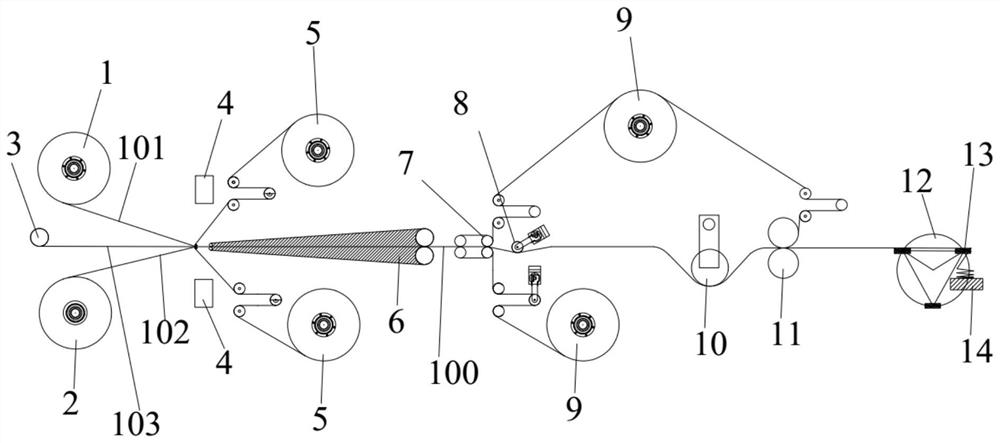

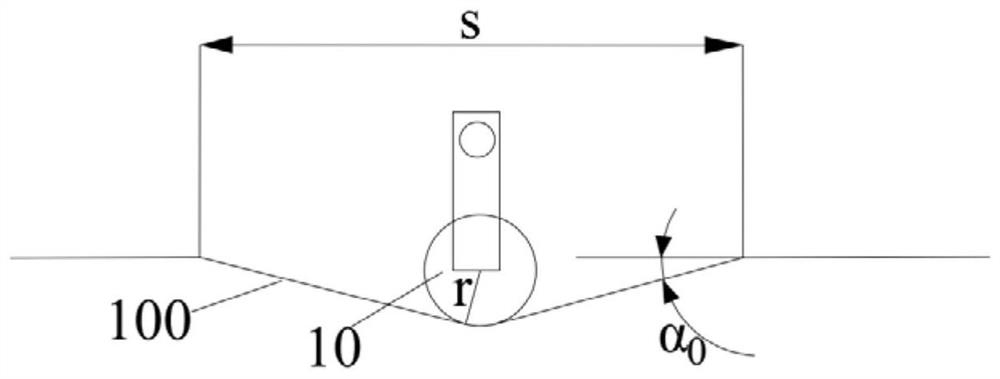

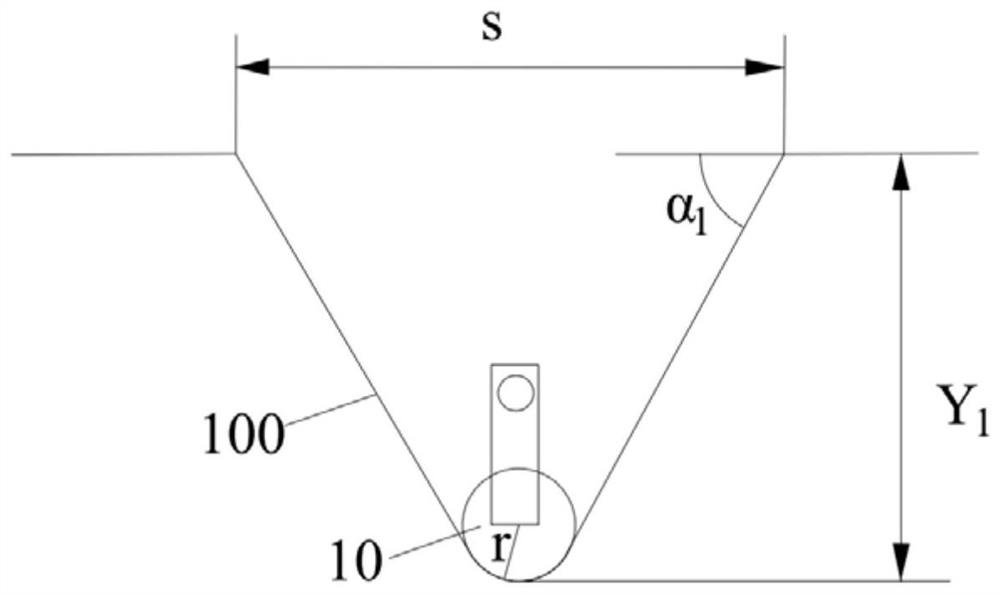

Battery pole piece laminating equipment and battery cell laminating method

Active | CN112635809A | Guaranteed insulation | Improve quality and pass rate | Assembling battery machines | Final product manufacture | Engineering | Power battery

The invention relates to the technical field of power battery manufacturing and discloses battery pole piece laminating equipment and a battery cell laminating method. The battery pole piece comprises a positive pole piece, a negative pole piece, and a diaphragm strip, with the positive and negative pole pieces arranged on opposite sides of the diaphragm strip. The laminating equipment comprises a heat sealing unit, a buffering unit, a laminating unit, and a driving unit. The heat sealing unit is arranged downstream of the feeding unit and heats and presses the battery pole piece. The buffering unit is arranged downstream of the heat sealing unit and comprises a buffering roller and a lifting drive, which raises or lowers the buffering roller to reduce or increase the buffer length of the battery pole piece. The laminating unit is arranged downstream of the buffering unit and folds the battery pole piece; it comprises a turret mechanism and a lamination platform, the turret mechanism comprising a turntable and a clamping jaw that clamps the battery pole piece, and the turntable rotates the clamping jaw to the lamination platform. The equipment can adapt to changes in lamination speed and improves the quality and pass rate of battery cell products.

Owner:SVOLT ENERGY TECHNOLOGY CO LTD

Data processing apparatus and shared memory accessing method

Inactive | US8010746B2 | Reduce cache size | Time required | Memory architecture accessing/allocation | Multiple digital computer combinations | Access method | Flash memory controller

The present invention provides a data processing apparatus for processing data by causing a plurality of function blocks to share a single shared memory, the data processing apparatus including: a memory controller configured to cause the plurality of function blocks to write and read data to and from the shared memory in response to requests from any one of the function blocks; a cache memory; and a companding section configured to compress data to be written to the cache memory and to expand data read from it.

Owner:SONY CORP

Method and apparatus for call setup within a voice frame network

Inactive | US7181522B2 | Improve fidelity | Improve efficiency | Special service provision for substation | Digital computer details | Operational system | Internet operating system

The method involves transmitting plural endpoint probes to produce plural endpoint probe results indicating the preparedness of the endpoints for calls routed to them; identifying similarly situated endpoints; and representing the similarly situated endpoints by a reduced number of recorded endpoint probe results that substantially represent the inter-connective preparedness of each of them. The apparatus includes a mapping mechanism for mapping the probe results for the similarly situated endpoints into a reduced number of endpoint probe results that substantially represent the inter-connective preparedness of each of those endpoints, and a recording mechanism for recording the reduced number of endpoint probe results. Such mapping permits an Internet operating system (IOS) router/gateway to act as a real-time responder/service assurance agent (RTR/SAA) proxy on behalf of voice over Internet protocol (VoIP) endpoints that are not RTR/SAA capable.

Owner:CISCO TECH INC

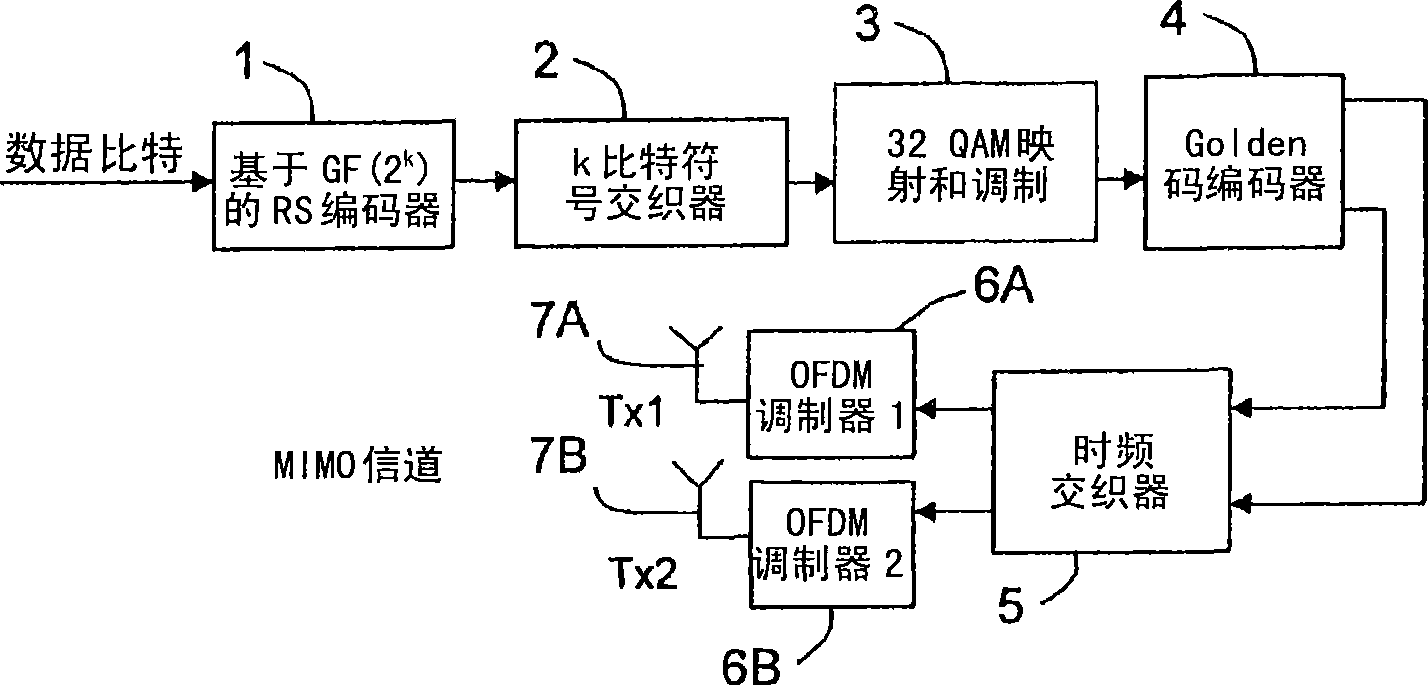

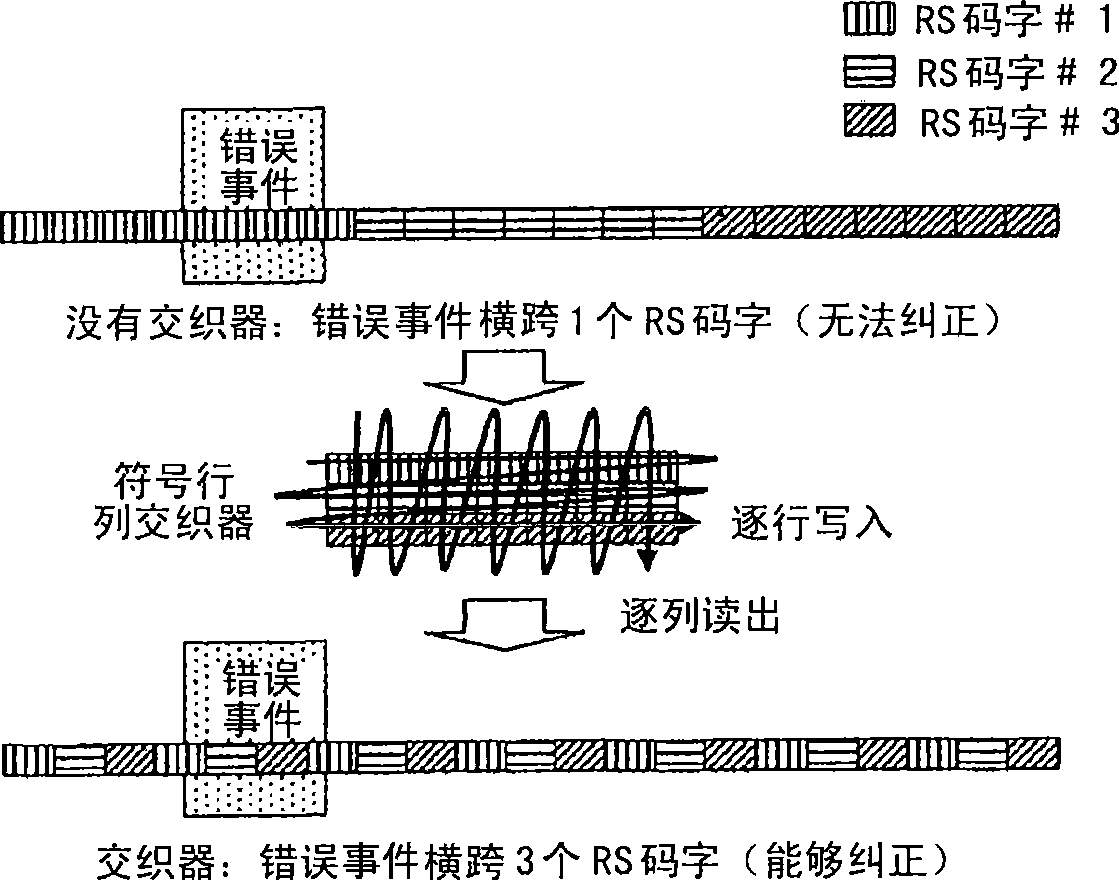

Method for transmitting a stream of data in a wireless system with at least two antennas and transmitter implementing said method

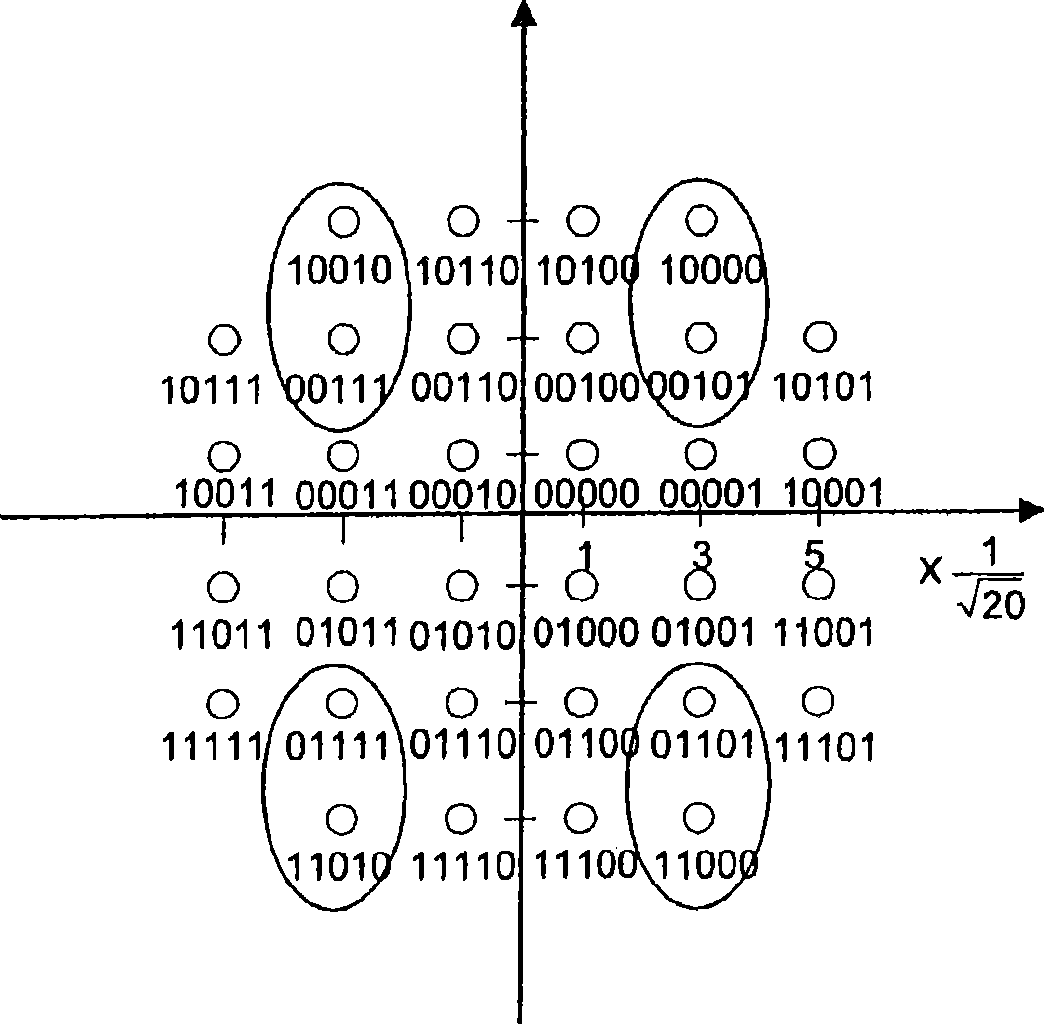

Inactive | CN101523789A | Low SNR | Expand the transmission range | Modulated-carrier systems | Code conversion | Constellation | Wireless systems

The present invention relates to a method for transmitting a stream of data in a communication system with at least two transmission antennas, and to a transmitter implementing the method. The method comprises the steps of: dividing the stream of data elements into first words of k bits; encoding (1) the first words with a non-binary code, the first words being the symbols of the non-binary code and the size k (in bits) of the code symbols dividing the number of bits per space-time codeword; interleaving (2) the encoded symbols with a symbol-wise interleaver; splitting the resulting interleaved bit stream into groups of log2(M) bits; mapping (3) the groups of log2(M) bits onto complex symbols using a constellation of M points; and then coding (4) the complex symbols with a space-time code to obtain space-time codewords.

Owner:INTERDIGITAL CE PATENT HLDG
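
The interleaving, bit-grouping, mapping, and space-time coding steps can be sketched as follows. The non-binary encoding step is omitted; a row/column block interleaver, Gray-mapped QPSK (M = 4), and the Alamouti code are swapped in as concrete examples and are not stated in the abstract.

```python
import math
from typing import List, Sequence, Tuple

# Gray-mapped QPSK constellation (unnormalised), used here as the M = 4 example.
QPSK = {(0, 0): 1 + 1j, (0, 1): -1 + 1j, (1, 1): -1 - 1j, (1, 0): 1 - 1j}


def block_interleave(symbols: Sequence, rows: int) -> list:
    """Symbol-wise block interleaver: write row by row, read column by column."""
    cols, rem = divmod(len(symbols), rows)
    if rem:
        raise ValueError("symbol count must be a multiple of rows")
    return [symbols[r * cols + c] for c in range(cols) for r in range(rows)]


def group_bits(bits: Sequence[int], m: int) -> List[List[int]]:
    """Split the bit stream into groups of log2(M) bits."""
    k = int(math.log2(m))
    if m < 2 or 2 ** k != m or len(bits) % k:
        raise ValueError("M must be a power of two and divide the stream evenly")
    return [list(bits[i:i + k]) for i in range(0, len(bits), k)]


def map_qpsk(groups: List[List[int]]) -> List[complex]:
    """Map each 2-bit group onto a constellation point."""
    return [QPSK[tuple(g)] for g in groups]


def alamouti(symbols: List[complex]) -> List[Tuple[complex, complex]]:
    """Alamouti code: each symbol pair becomes two time slots on two antennas."""
    if len(symbols) % 2:
        raise ValueError("need an even number of symbols")
    slots = []
    for s1, s2 in zip(symbols[0::2], symbols[1::2]):
        slots.append((s1, s2))                           # slot 1: (antenna 1, antenna 2)
        slots.append((-s2.conjugate(), s1.conjugate()))  # slot 2
    return slots
```

Each tuple in the output lists what the two antennas transmit in one time slot.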

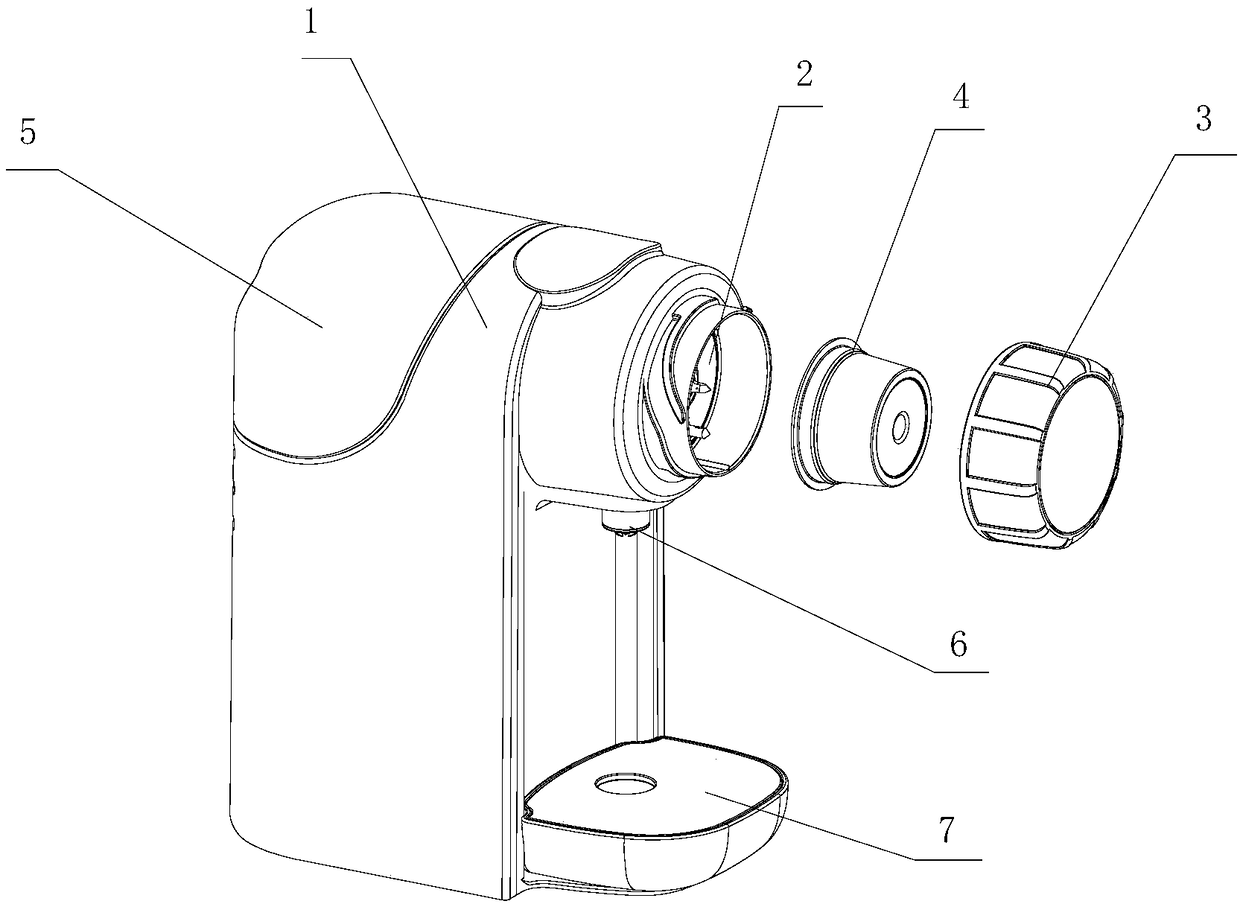

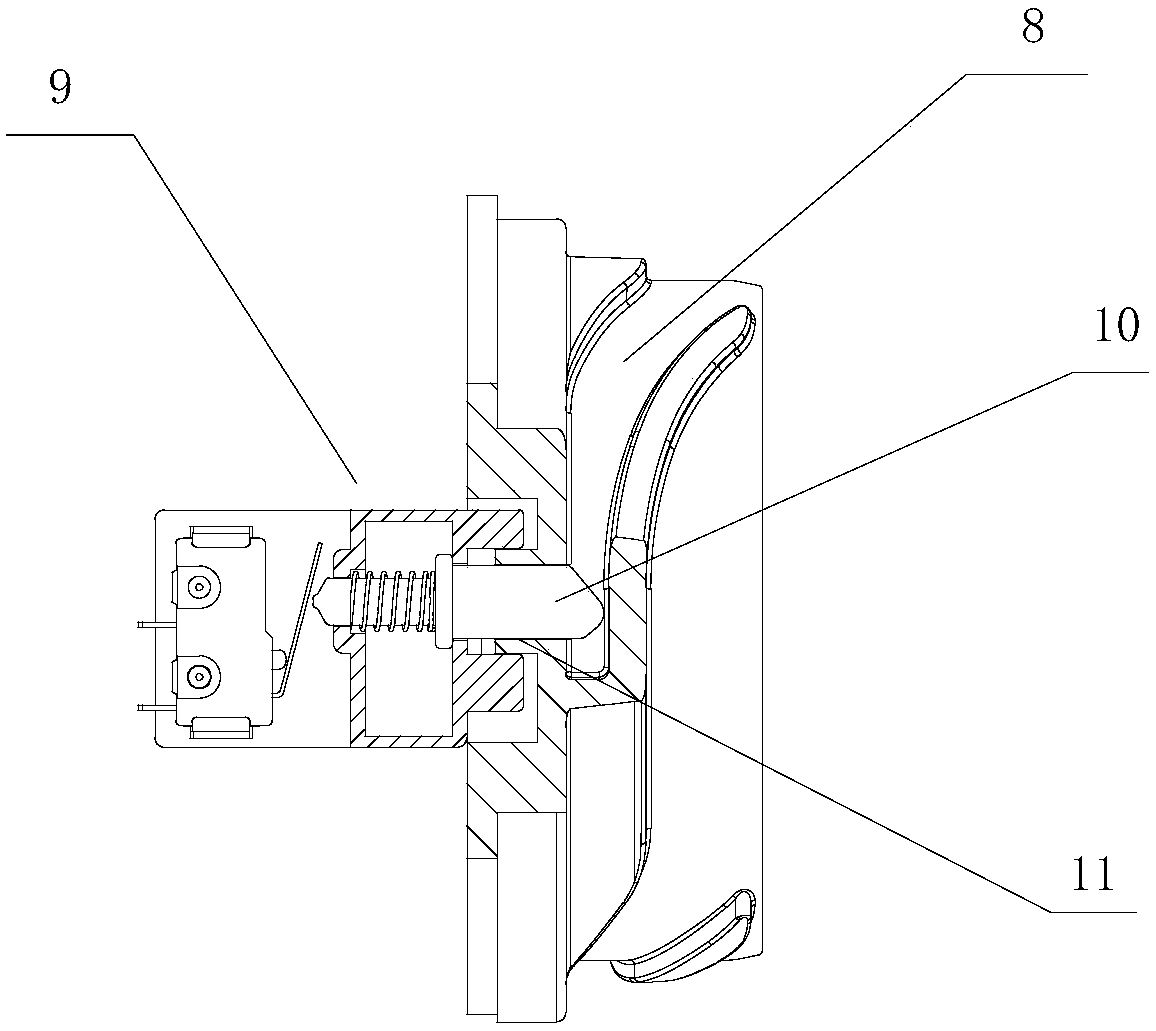

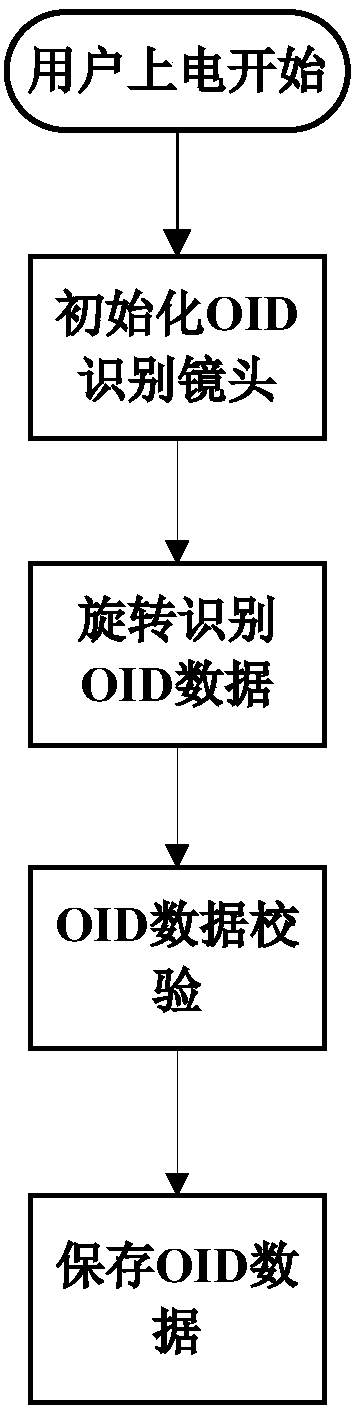

Capsule identification control method for beverage machine

Active | CN108125563A | Short manufacturing time | Easy to use | Beverage vessels | Identification device | Mechanical engineering

The invention provides a capsule identification control method for a beverage machine. The beverage machine comprises a base, a brewing cup, a brewing head, and an identification device; the brewing head and the identification device are both arranged on the base, a capsule is held in the brewing cup, the brewing cup rotatably engages the brewing head, and the capsule comprises a diaphragm bearing an identification code. The control method comprises a rotation identification step in which the brewing cup rotates the capsule relative to the identification device, and the identification device detects the identification code on the diaphragm in real time and obtains identification data. Because identification takes place while the brewing cup is being rotated into engagement with the brewing head and the diaphragm is rotating, capsule identification is completed during installation of the brewing cup, so identification is efficient and beverage preparation time is saved. In addition, the identification device is integrated with the brewing device, so the structure is simple, identification and brewing proceed continuously, and the machine is more convenient to use.

Owner:杭州九创家电有限公司

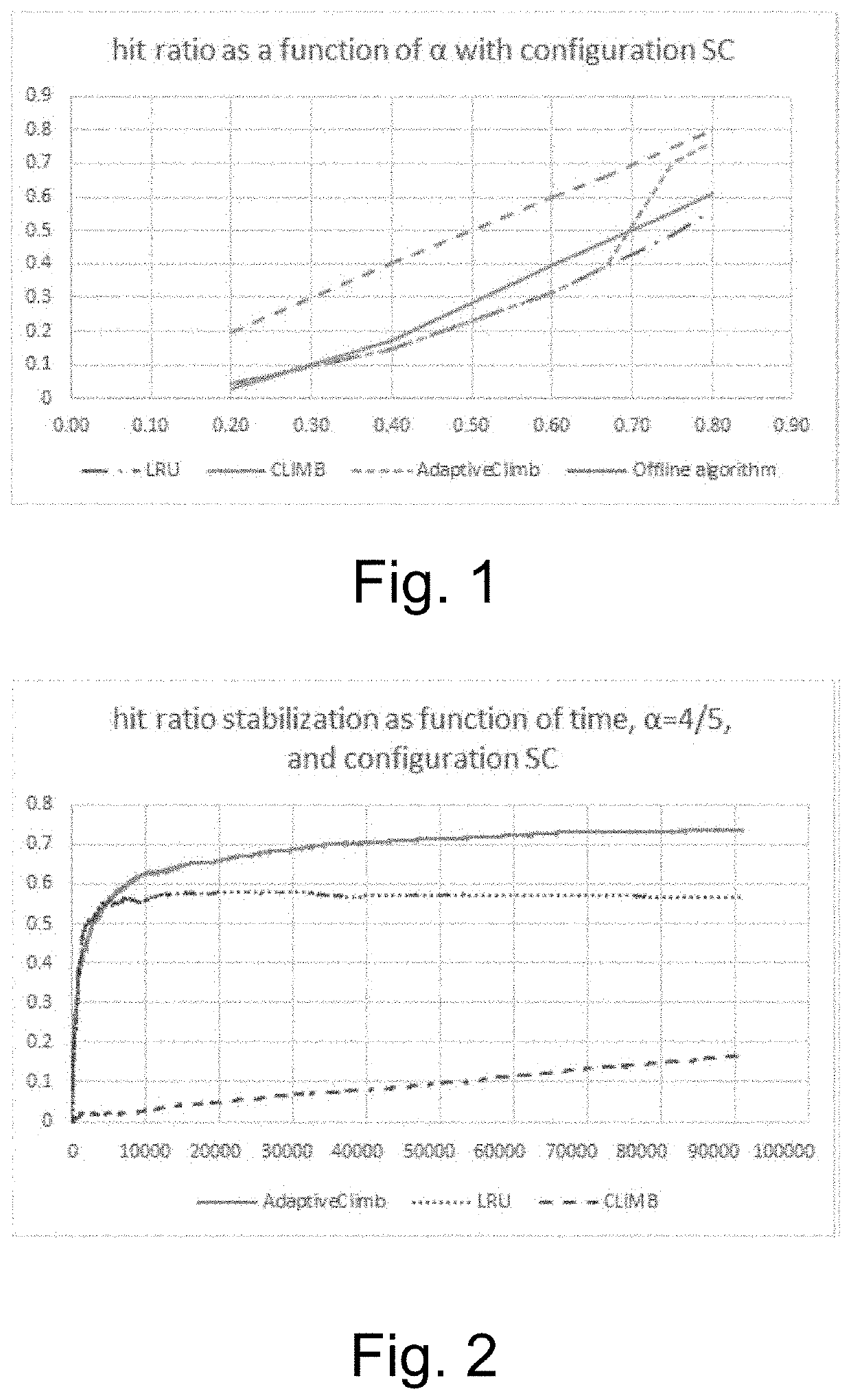

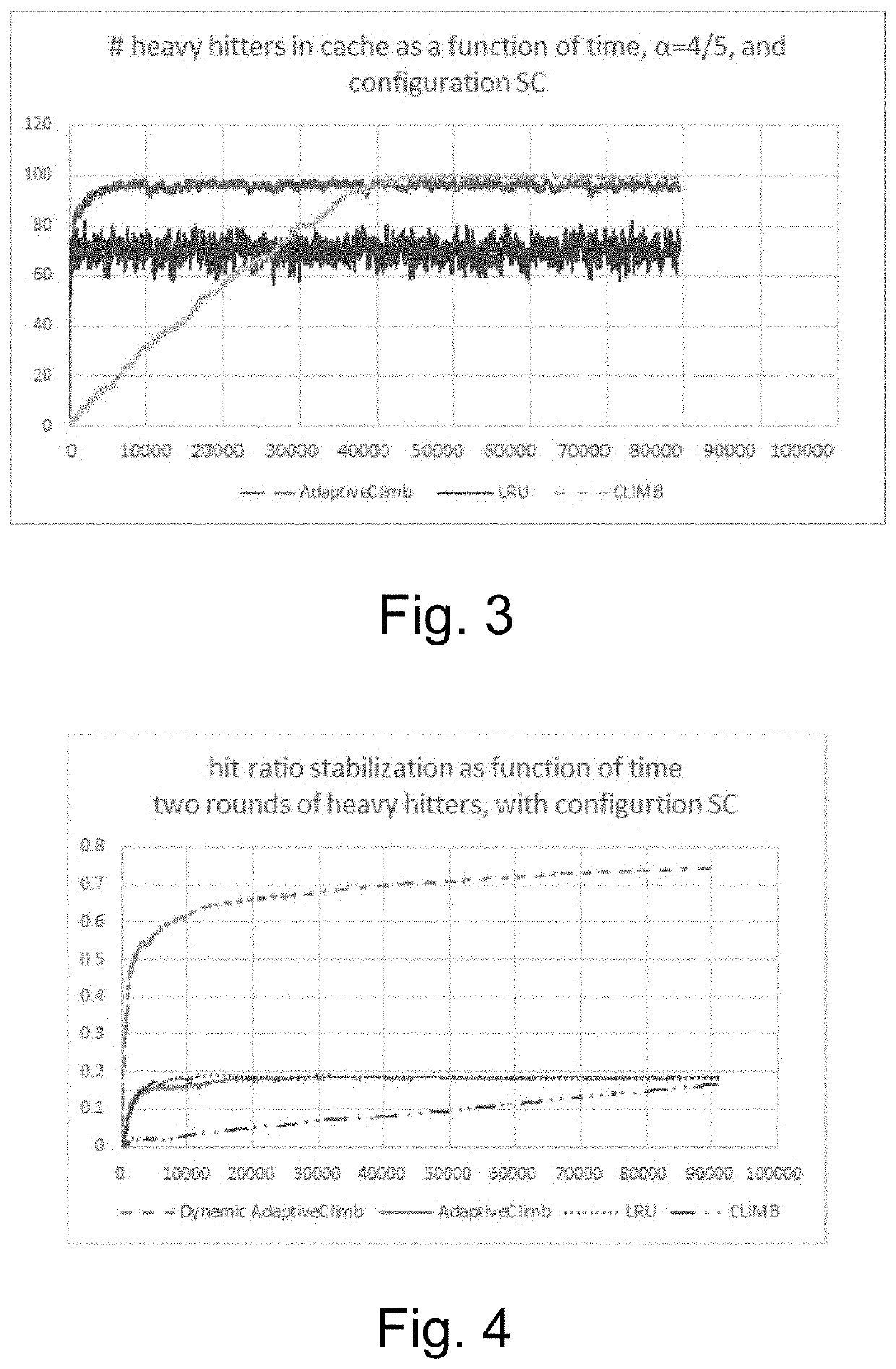

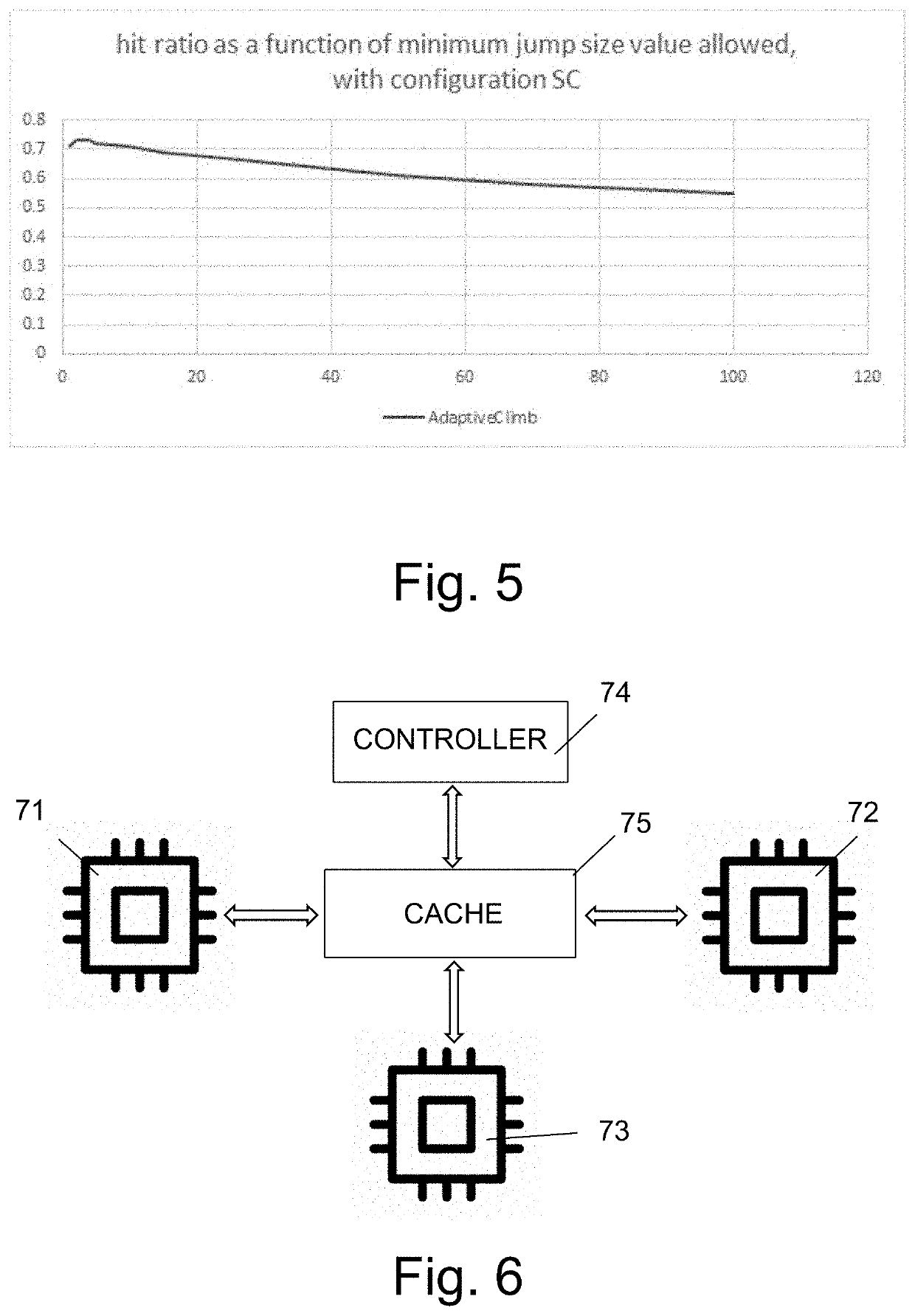

Method and system for performing adaptive cache management

Active | US20200210349A1 | Increasing cache size | Reduce cache size | Memory architecture accessing/allocation | Memory systems | Parallel computing | Cache management

A method for efficiently performing adaptive management of a cache with a predetermined size and number of cells at different positions relative to the top or bottom of the cache, where the cells store data items to be retrieved upon request from a processor. A stream of requests for items, each with a temporal probability of being requested, is received. The jump size is adapted automatically: it is incremented on cache misses and decremented on cache hits, so that a smaller jump size is used while request probabilities are stable and a larger one when they change. The jump size is the number of cells by which a requested item is promoted toward the top cell of the cache: from its current cell in case of a cache hit, or from outside the cache in case of a cache miss.

Owner:B G NEGEV TECH & APPL LTD
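
The jump-size rule can be sketched as a list whose index 0 is the top cell. Clamping the jump size to the range 1..capacity, evicting the bottom cell on a miss when the cache is full, and updating the jump size after the item is placed are assumptions.

```python
from typing import Hashable, List


class JumpCache:
    def __init__(self, capacity: int, jump: int = 1) -> None:
        self.capacity = capacity
        self.jump = jump
        self.cells: List[Hashable] = []  # index 0 is the top cell

    def request(self, item: Hashable) -> bool:
        """Serve one request; return True on a hit, False on a miss."""
        if item in self.cells:
            pos = self.cells.index(item)
            self.cells.pop(pos)
            self.cells.insert(max(0, pos - self.jump), item)  # promote by `jump` cells
            self.jump = max(1, self.jump - 1)                 # hit: smaller jumps
            return True
        if len(self.cells) >= self.capacity:
            self.cells.pop()                                  # evict the bottom cell
        # Enter from just below the bottom cell and climb `jump` cells.
        self.cells.insert(max(0, len(self.cells) + 1 - self.jump), item)
        self.jump = min(self.capacity, self.jump + 1)         # miss: larger jumps
        return False
```

A run of misses, as happens when popularity shifts, quickly raises the jump size so that new items climb near the top; a run of hits lowers it so that established items move up gradually.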

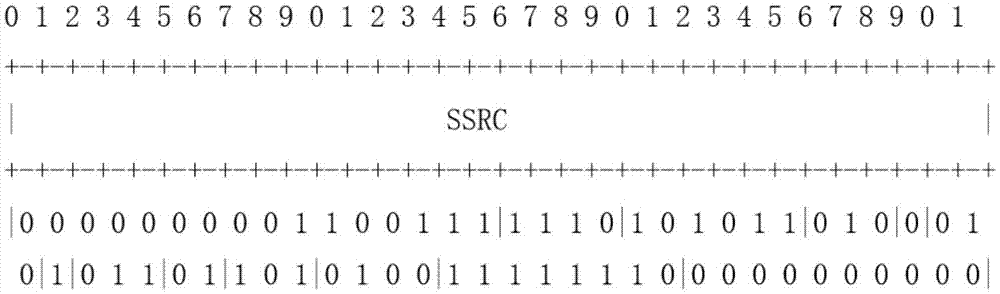

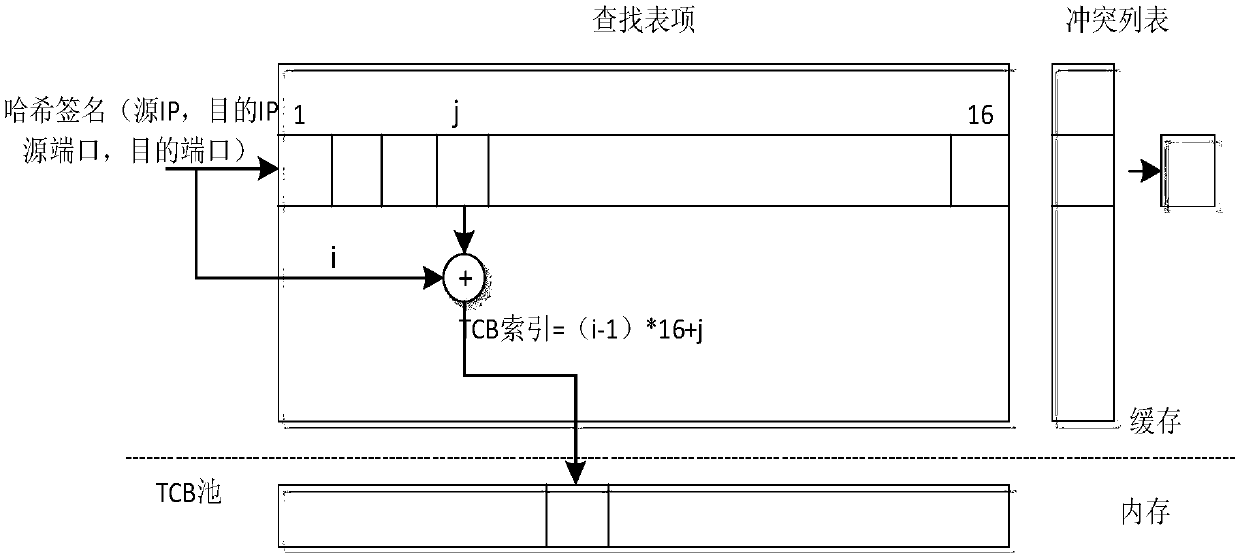

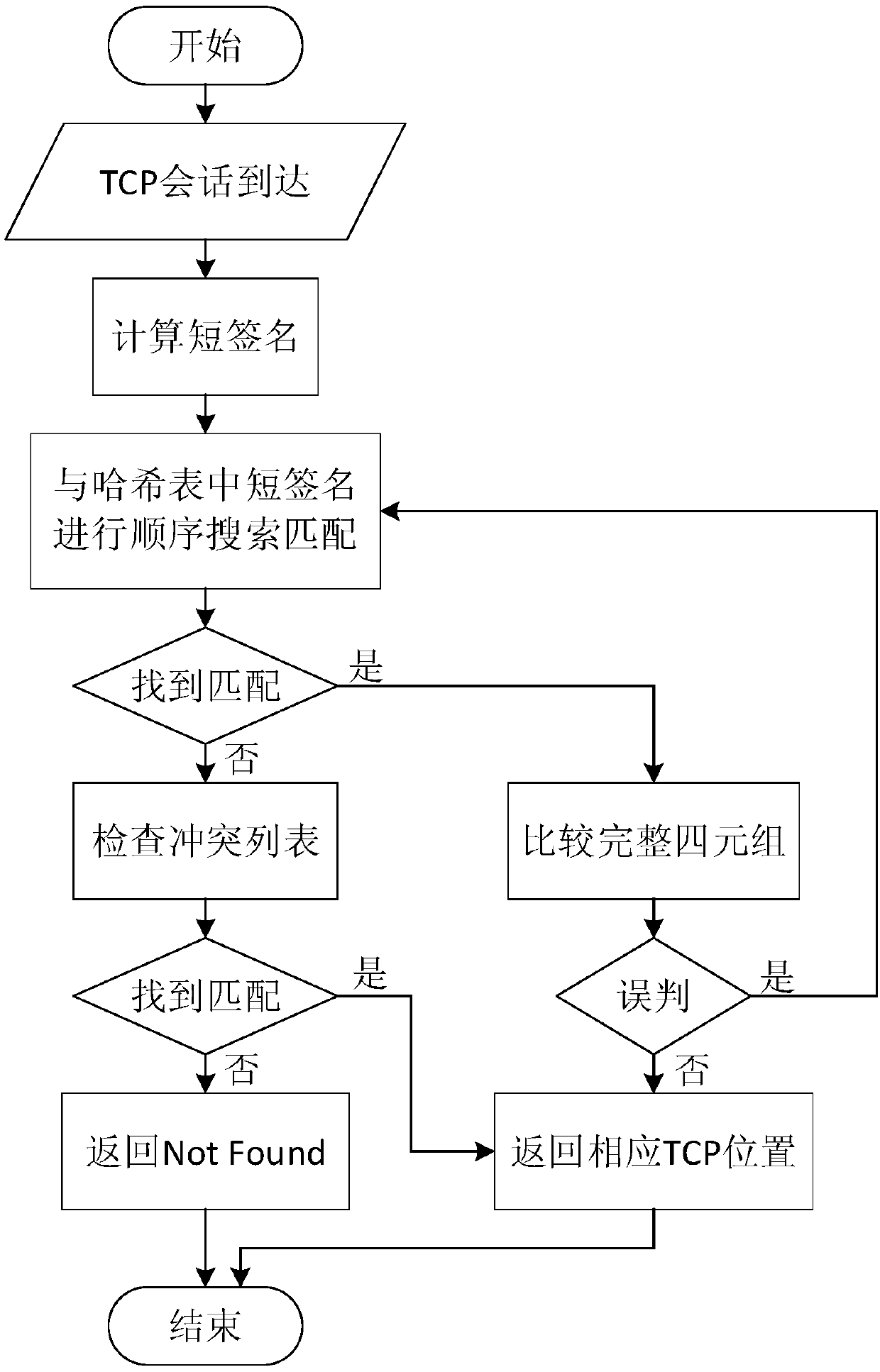

TCP lookup optimization method for high-performance computing networks

Active | CN107294855A | Improve the ability to handle millions or even billions of TCP sessions | Improve performance | Data switching networks | 16-bit | Short signature

The invention discloses a TCP lookup optimization method for high-performance computing networks, comprising the following steps: (1) in TCP session processing, compute a 32-bit short signature for each TCP session when the number of sessions is on the order of millions; when it is on the order of hundreds of millions, XOR the upper 16 bits and the lower 16 bits of the 32-bit short signature to obtain a 16-bit short signature; (2) use the 32-bit or 16-bit short signatures instead of the TCP 4-tuple to identify TCP sessions; (3) map the short signatures of the first P TCP sessions one-to-one to hash slots, and, when the number of arriving TCP sessions exceeds P, assign the short signatures of sessions P+1 onward from a TCB pool to a conflict list. The method reduces the cache size occupied by lookups and the probability of hash collisions.

Owner:STATE GRID CORP OF CHINA +3
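
The signature and XOR-folding steps can be sketched as follows. CRC-32 over a text encoding of the 4-tuple is an assumed signature function, and the slot-plus-conflict-list table is a simplified stand-in for the patent's slot assignment. Because different sessions can share a signature, a real table would still need to confirm matches against the full TCB.

```python
import zlib
from typing import Dict, List, Optional, Tuple

FourTuple = Tuple[str, int, str, int]


def signature32(src_ip: str, src_port: int, dst_ip: str, dst_port: int) -> int:
    """Assumed 32-bit short signature: CRC-32 of a text form of the 4-tuple."""
    return zlib.crc32(f"{src_ip}:{src_port}-{dst_ip}:{dst_port}".encode()) & 0xFFFFFFFF


def fold16(sig32: int) -> int:
    """XOR the upper and lower 16 bits to get a 16-bit short signature."""
    return ((sig32 >> 16) ^ sig32) & 0xFFFF


class SessionTable:
    def __init__(self, slots: int, use16: bool = False) -> None:
        self.slots = slots
        self.use16 = use16  # 16-bit signatures for very large session counts
        self.table: List[Optional[int]] = [None] * slots
        self.conflicts: Dict[int, List[int]] = {}  # slot -> further signatures

    def _sig(self, ft: FourTuple) -> int:
        sig = signature32(*ft)
        return fold16(sig) if self.use16 else sig

    def add(self, ft: FourTuple) -> int:
        sig = self._sig(ft)
        slot = sig % self.slots
        if self.table[slot] is None:
            self.table[slot] = sig  # first session in this slot
        else:
            self.conflicts.setdefault(slot, []).append(sig)
        return sig

    def contains(self, ft: FourTuple) -> bool:
        sig = self._sig(ft)
        slot = sig % self.slots
        return self.table[slot] == sig or sig in self.conflicts.get(slot, [])
```

Storing a 4-byte or 2-byte signature instead of a 12-byte IPv4 4-tuple is where the lookup memory saving comes from.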

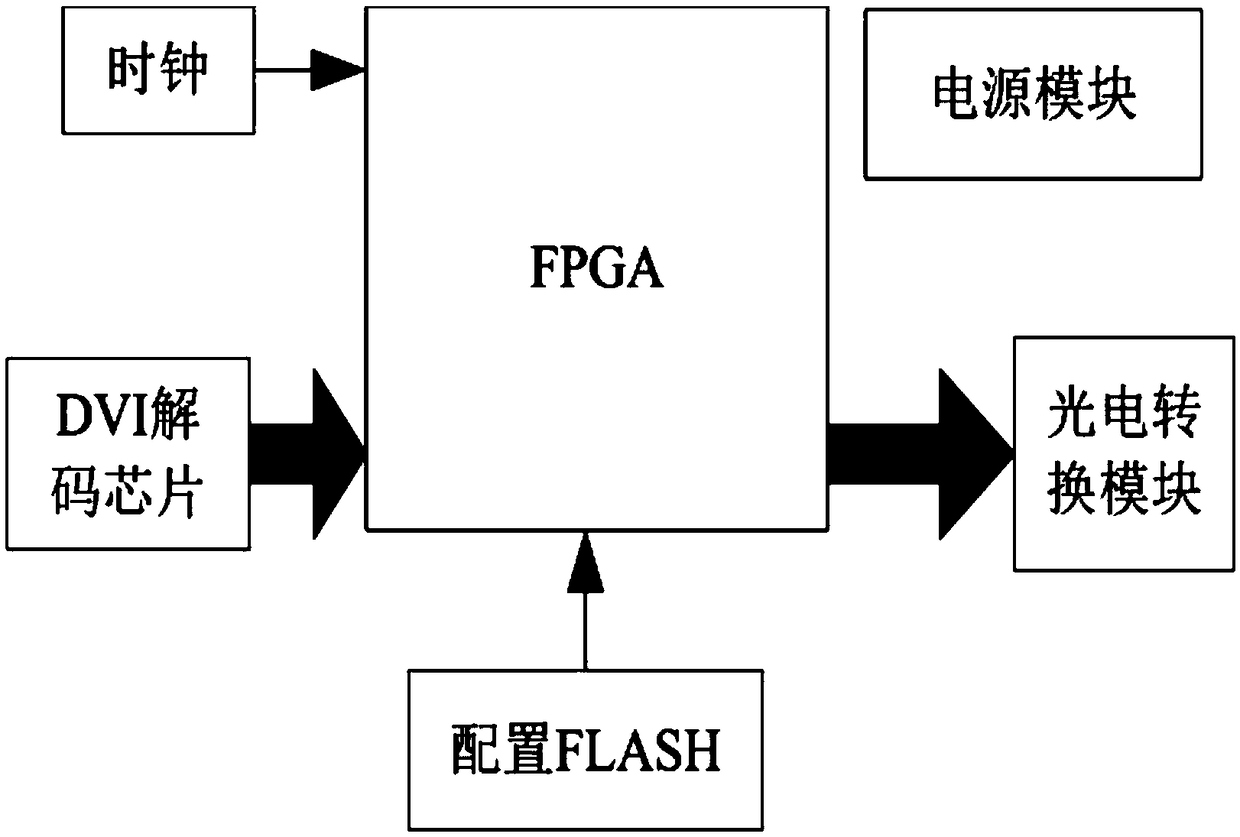

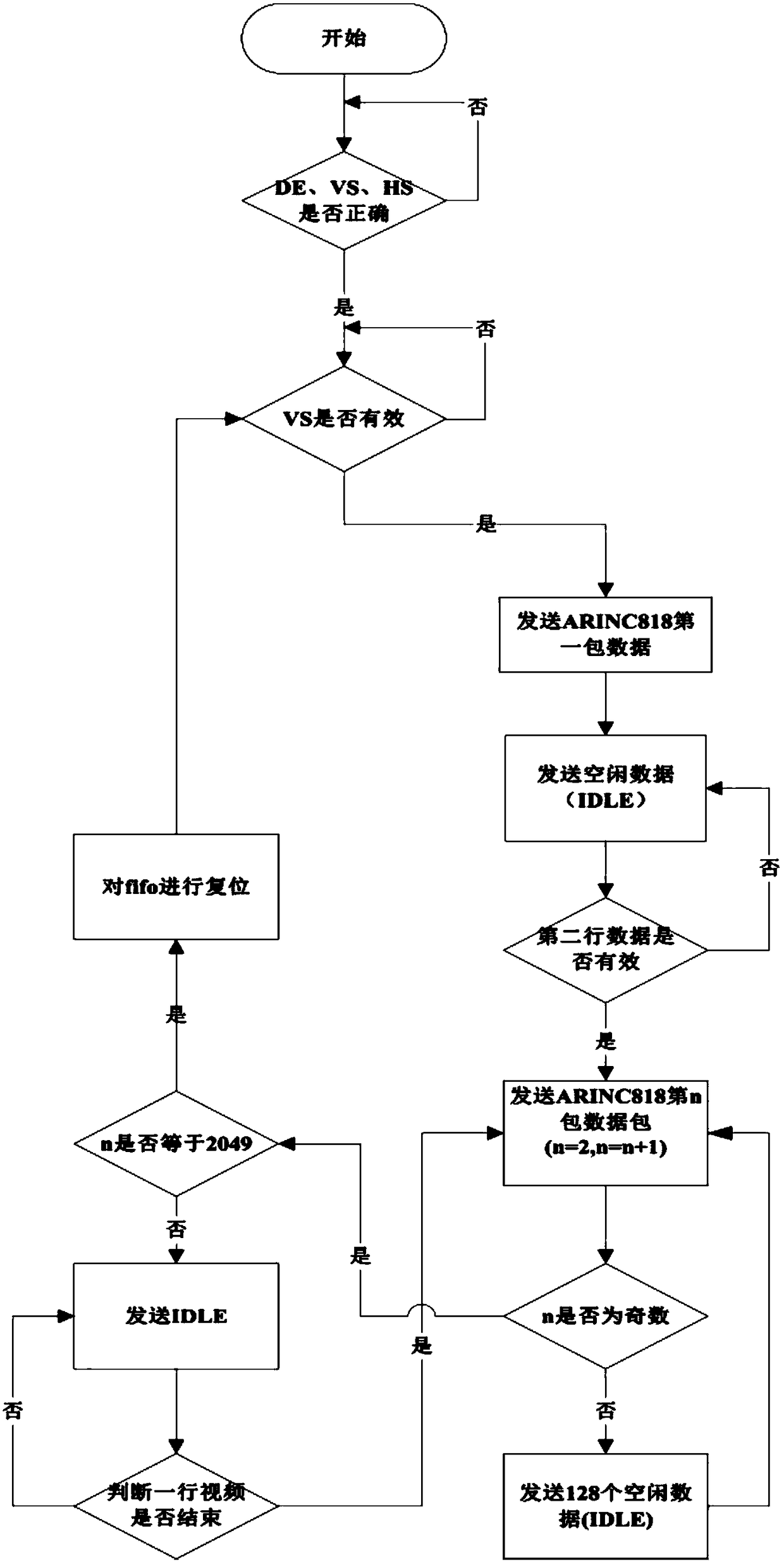

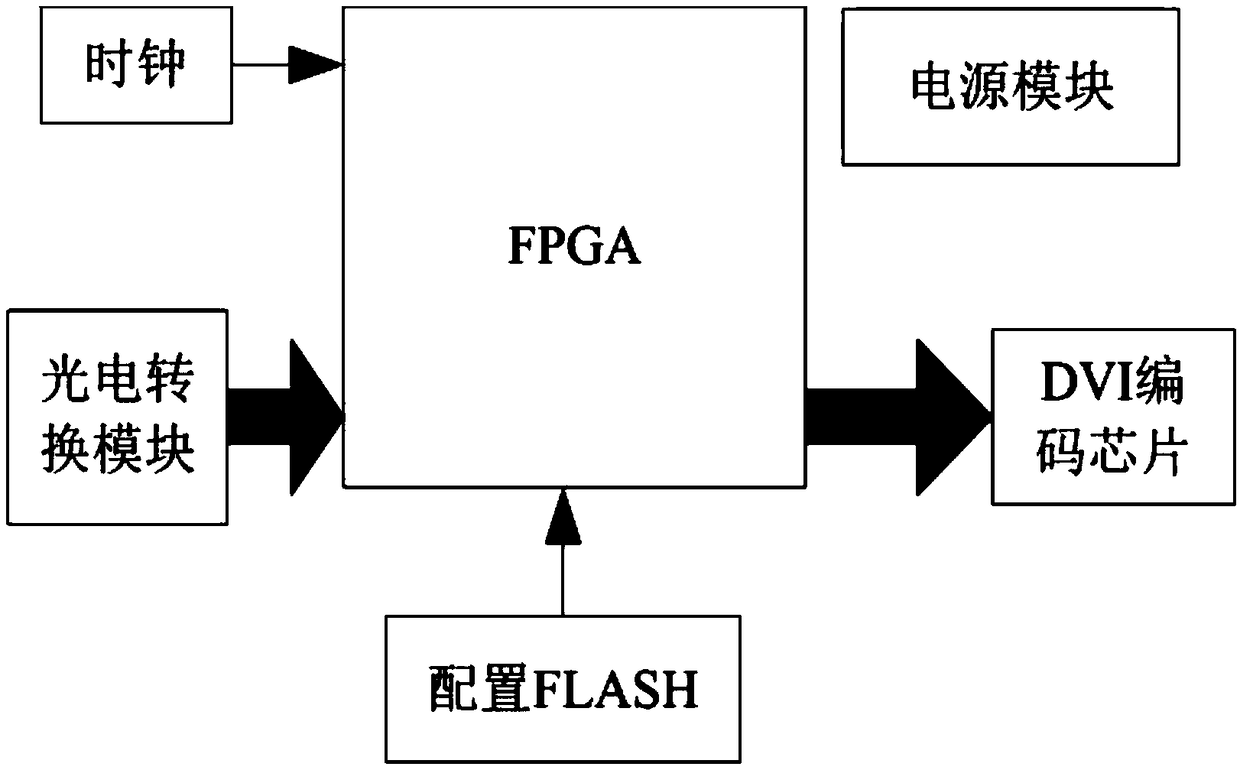

Low-latency ARINC 818 bus transceiving method

The invention proposes a low-latency ARINC 818 bus transceiving method. When converting between the VESA protocol and the ARINC 818 protocol in an FPGA, an optimized control algorithm reduces the amount of data buffered at the transmitting end from a full frame to one line, and at the receiving end from a full frame to 40 lines, which greatly improves the real-time performance of video transmission. In addition, the buffering required by the new algorithm can be met by the FPGA's internal resources without external memory, which simplifies hardware design and reduces resource overhead and power consumption.

Owner:LUOYANG INST OF ELECTRO OPTICAL EQUIP OF AVIC

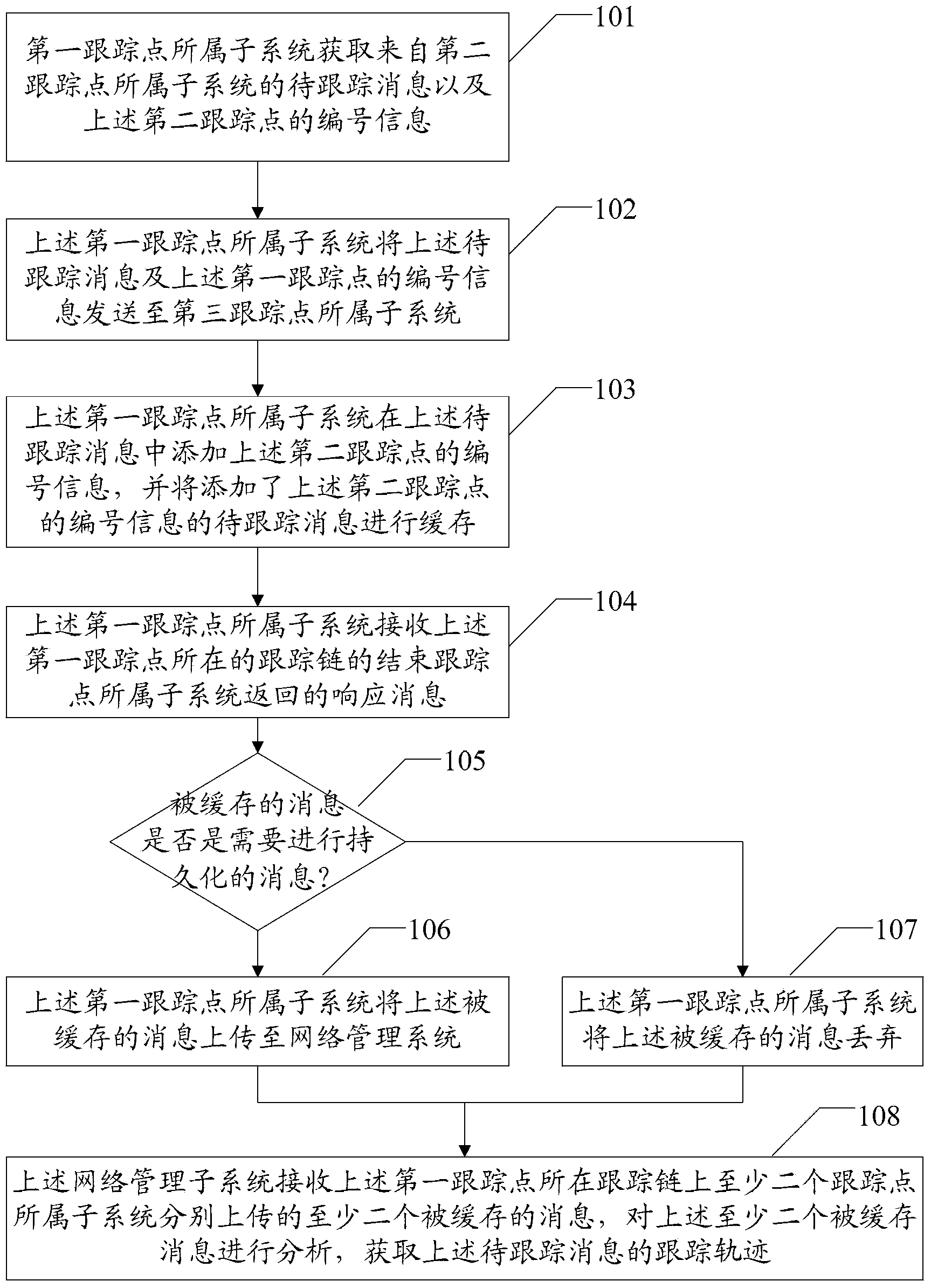

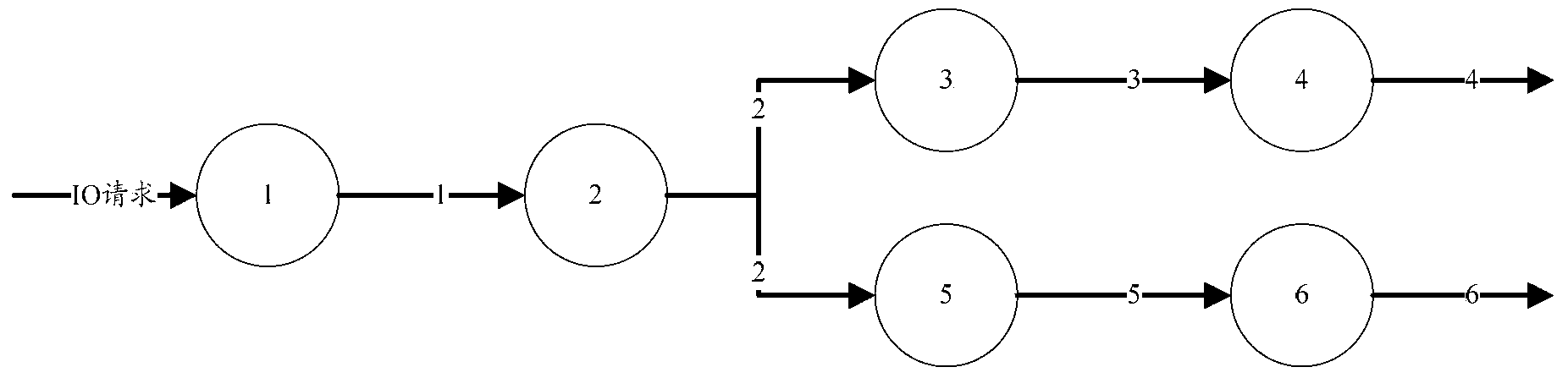

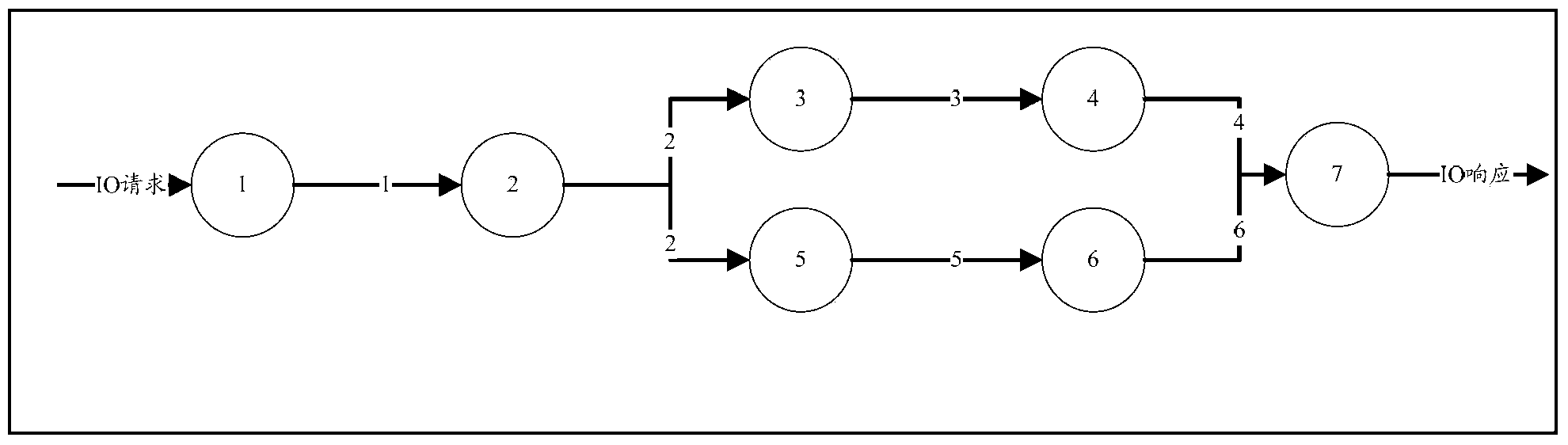

Approach and system for implementing message tracking

Active | CN104243191A | Realize instant analysis | Good for backtracking | Data switching networks | Network management | Serial code

The embodiment of the invention relates to the field of communication technology and discloses a method and system for implementing message tracking. The method includes the following steps: (1) the subsystem to which a first track point belongs obtains a to-be-tracked message, together with serial number information of a second track point, from the subsystem to which the second track point belongs; (2) the to-be-tracked message and the serial number information of the first track point are sent to the subsystem to which a third track point belongs; (3) the serial number information of the second track point is added to the to-be-tracked message, which is then cached; (4) a response message fed back by the subsystem to which the stop point of the track chain belongs is received; (5) whether the cached to-be-tracked messages need to be persisted is determined, and if so, they are uploaded to a network management subsystem; (6) the network management subsystem analyzes at least two uploaded cached messages and obtains the track trace of the to-be-tracked message. With this method, the number of track messages cached at the network management subsystem is reduced, system resource consumption is lowered, and faults can be located faster.

Owner:海宁市黄湾镇资产经营有限公司

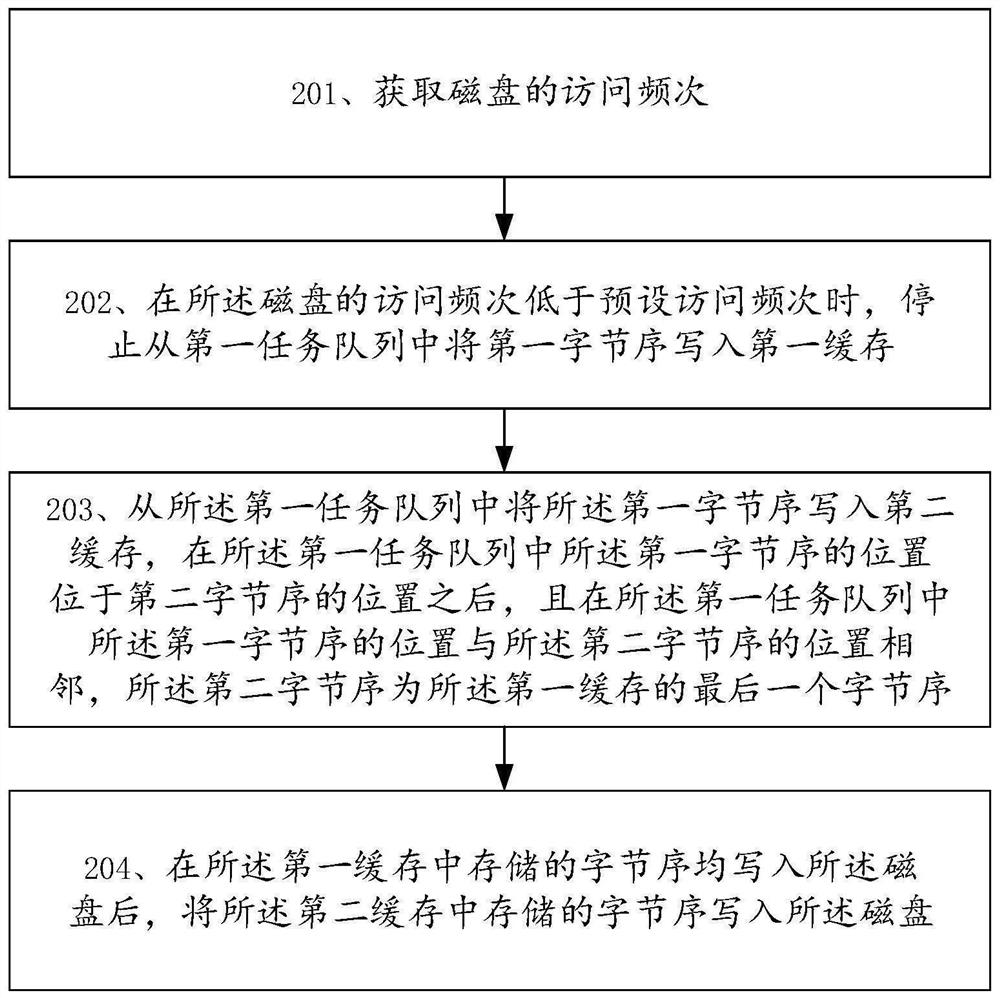

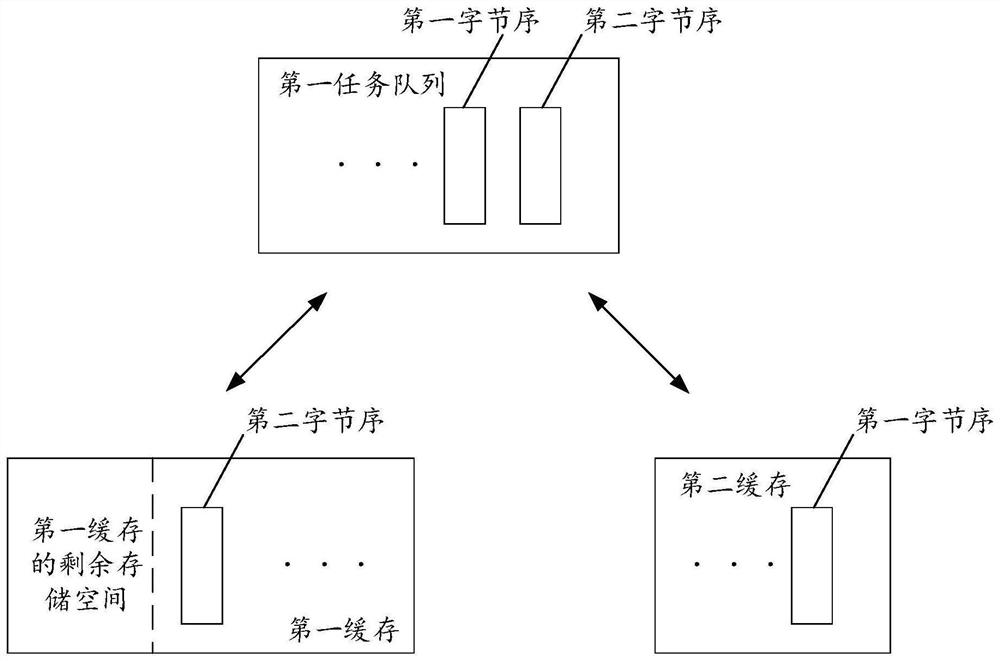

Data processing method and device, electronic equipment and storage medium

Pending | CN111984202A | Increase profit | Avoid the problem of high utilization again | Input/output to record carriers | Access frequency | Engineering

The invention relates to block storage systems and discloses a data processing method and device, electronic equipment, and a storage medium. The method comprises: obtaining the access frequency of a disk; when the access frequency is lower than a preset access frequency, stopping writing a first byte order from a first task queue into a first cache and instead writing it into a second cache, where the first byte order immediately follows, in the first task queue, a second byte order that is the last byte order in the first cache; and, after the byte orders stored in the first cache have been written to the disk, writing the byte orders stored in the second cache to the disk. This improves disk write efficiency.

Owner:ONE CONNECT SMART TECH CO LTD SHENZHEN
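
A minimal sketch of diverting writes to a second cache when disk access frequency falls below a threshold, then flushing the first cache before the second. The class name, the threshold semantics, and the rule that writes stay on the second cache until the next flush are assumptions.

```python
from typing import List


class DualCacheWriter:
    def __init__(self, threshold: float) -> None:
        self.threshold = threshold
        self.first: List[bytes] = []
        self.second: List[bytes] = []
        self.use_second = False
        self.disk: List[bytes] = []  # stand-in for the disk, in write order

    def enqueue(self, chunk: bytes, disk_access_freq: float) -> None:
        """Take the next byte order from the task queue."""
        if not self.use_second and disk_access_freq < self.threshold:
            self.use_second = True  # stop filling the first cache
        (self.second if self.use_second else self.first).append(chunk)

    def flush(self) -> None:
        # The first cache reaches the disk before the second, so queue order is kept.
        self.disk.extend(self.first)
        self.first.clear()
        self.disk.extend(self.second)
        self.second.clear()
        self.use_second = False
```

Because the second cache only ever holds entries that follow the last entry of the first cache, flushing the two in sequence preserves the original task-queue order on disk.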

Data processing apparatus and method thereof

Inactive | US7952589B2 | Good value for money | Reduce cache size | Memory addressing/allocation/relocation | Cathode-ray tube indicators | Memory address | Computer hardware

A data processing apparatus generates a memory address corresponding to a first memory and interpolates data read out from the first memory. The apparatus selects a part of the memory address, checks whether the first memory stores data corresponding to the selected part, and, when it does not, transfers the corresponding data from a second memory to the first memory. Based on checking results indicating that the first memory does not store the data corresponding to the selected part of the memory address, the apparatus decides to change which part of the memory address is selected, and changes that part according to the characteristics of the memory address.

Owner:CANON KK

© 2025 PatSnap. All rights reserved.