Technology for restoring depth images and combining virtual and real scenes based on a GPU (Graphics Processing Unit)

A depth map and scene technology applied in the fields of somatosensory interaction, computer vision, and augmented reality. It addresses problems such as repairing holes in Kinect depth maps, and achieves high-quality realistic effects and augmented reality effects.

Detailed Description of the Embodiments

[0020] To make the objects, technical solutions, and advantages of the present invention clearer, the present invention is described in further detail below with reference to specific examples and the accompanying drawings.

[0021] 1. Method overview

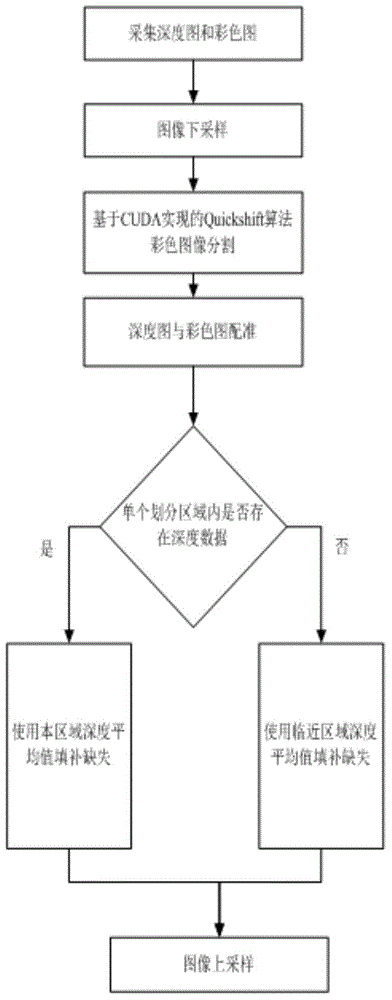

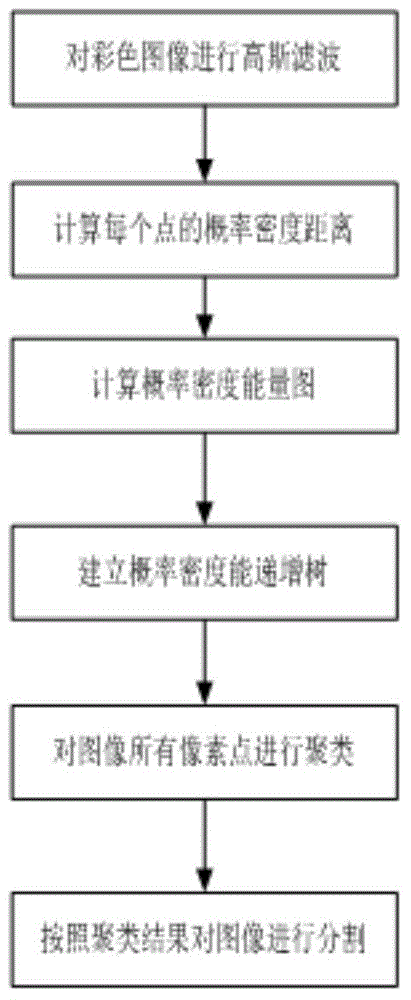

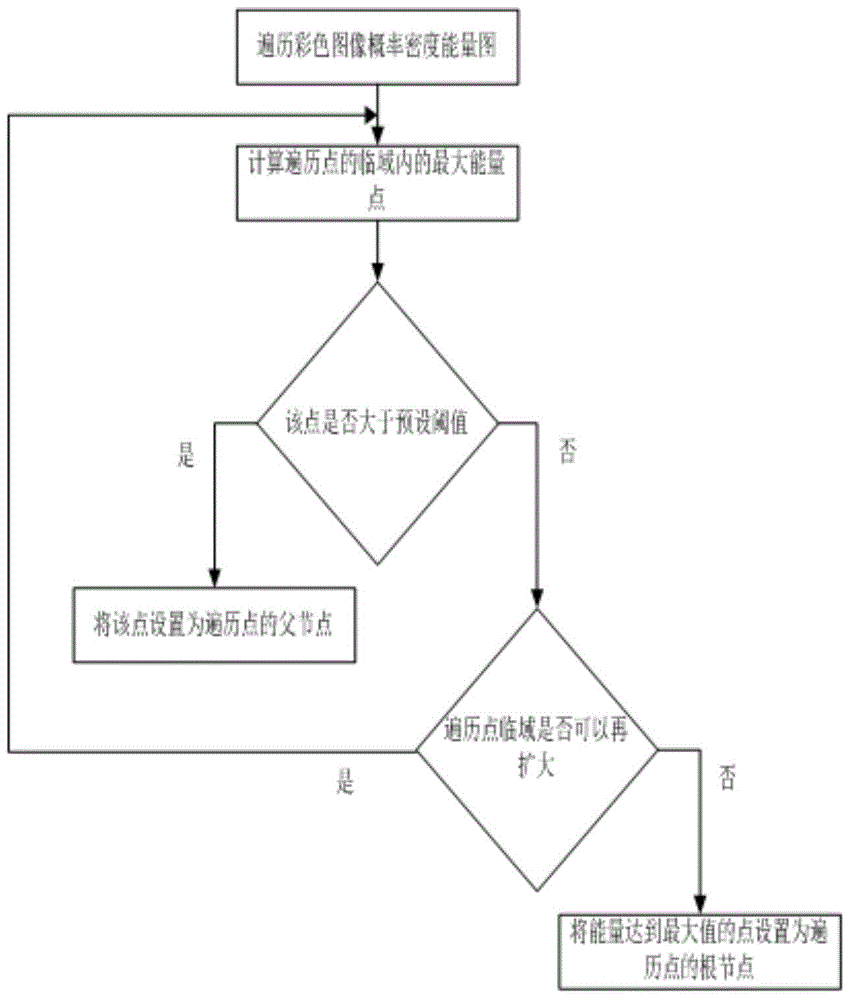

[0022] As shown in Figure 1, the method of the present invention is divided into three main steps: (1) segment the color image with the QuickShift algorithm, implemented in CUDA for GPU computation; (2) register the Kinect depth map with the color image; (3) use the segmented color image and the registered depth-color correspondence to repair the missing regions of the depth image. Specifically, if a segmented region contains some depth data, its missing pixels are filled with the average depth value of that region; if all depth information in the region is missing, the region is filled with the average depth value of an adjacent region.
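Steps (1) and (3) can be illustrated with a minimal CPU sketch in plain Python. It is not the CUDA implementation described here, and several details are assumptions rather than taken from the source. The function names `quickshift` and `fill_depth_holes` are hypothetical. The depth and color images are assumed to be already registered (step 2) and of equal size. Missing depth is assumed to be encoded as 0. The parameters `sigma`, `tau`, and `ratio` are illustrative values. Regions are treated as adjacent under 4-connectivity. A fully missing region takes the mean of its neighbours' averages, and that pass repeats so chains of empty regions fill outward.

```python
import math


def quickshift(image, sigma=1.0, tau=3.0, ratio=1.0):
    """Naive O(N^2) Quick Shift on a tiny RGB image (list of rows of (r, g, b))."""
    h, w = len(image), len(image[0])
    # Joint feature per pixel: position plus colour weighted by `ratio`.
    feats = [(y, x) + tuple(ratio * c for c in image[y][x])
             for y in range(h) for x in range(w)]
    n = len(feats)

    def dist2(a, b):
        return sum((p - q) ** 2 for p, q in zip(a, b))

    # Parzen density estimate with a Gaussian kernel of width `sigma`.
    density = [sum(math.exp(-dist2(fi, fj) / (2 * sigma ** 2)) for fj in feats)
               for fi in feats]

    # Link each pixel to its nearest pixel of higher density, if within `tau`.
    # Comparing (density, index) breaks exact ties so each tree has one root.
    parent = list(range(n))
    for i in range(n):
        best, best_d2 = i, math.inf
        for j in range(n):
            if (density[j], j) > (density[i], i):
                d2 = dist2(feats[i], feats[j])
                if d2 < best_d2:
                    best, best_d2 = j, d2
        if best_d2 <= tau ** 2:
            parent[i] = best

    # Each tree is one segment; roots are numbered in pixel scan order.
    root_label, labels = {}, []
    for i in range(n):
        r = i
        while parent[r] != r:
            r = parent[r]
        labels.append(root_label.setdefault(r, len(root_label)))
    return [labels[y * w:(y + 1) * w] for y in range(h)]


def fill_depth_holes(depth, labels, missing=0):
    """Fill missing depth pixels with per-segment average depths."""
    h, w = len(depth), len(depth[0])
    sums, counts = {}, {}
    for y in range(h):
        for x in range(w):
            lab = labels[y][x]
            sums.setdefault(lab, 0.0)
            counts.setdefault(lab, 0)
            if depth[y][x] != missing:
                sums[lab] += depth[y][x]
                counts[lab] += 1
    # Segments that contain some valid depth use their own average.
    fill = {lab: sums[lab] / counts[lab] for lab in sums if counts[lab]}

    # 4-connected adjacency between segments (assumed neighbourhood).
    neighbours = {lab: set() for lab in sums}
    for y in range(h):
        for x in range(w):
            for ny, nx in ((y + 1, x), (y, x + 1)):
                if ny < h and nx < w and labels[ny][nx] != labels[y][x]:
                    neighbours[labels[y][x]].add(labels[ny][nx])
                    neighbours[labels[ny][nx]].add(labels[y][x])

    # Fully missing segments: mean of adjacent segments' values. Repeating the
    # pass (an assumption) lets chains of empty segments fill outward.
    changed = True
    while changed:
        changed = False
        for lab in sums:
            if lab in fill:
                continue
            known = [fill[nb] for nb in neighbours[lab] if nb in fill]
            if known:
                fill[lab] = sum(known) / len(known)
                changed = True

    # Keep valid pixels; replace missing ones (left as-is if no value found).
    return [[depth[y][x] if depth[y][x] != missing
             else fill.get(labels[y][x], missing)
             for x in range(w)] for y in range(h)]


if __name__ == "__main__":
    red, green, blue = (255, 0, 0), (0, 255, 0), (0, 0, 255)
    image = [[red, red, green, green, blue, blue] for _ in range(4)]
    depth = [[1000, 0, 0, 0, 2000, 0],
             [0, 1200, 0, 0, 0, 2200],
             [0] * 6,
             [0] * 6]
    for row in fill_depth_holes(depth, quickshift(image)):
        print(row)
```

In the demo, the three color bands become three segments. The red and blue segments fill their holes with their own averages (1100 and 2100). The green segment has no valid depth, so it takes the mean of its neighbours' averages (1600). Each pixel's density and parent link can be computed independently of the others. That independence is what makes Quick Shift a natural fit for a per-pixel GPU kernel. The nested Python loops here only stand in for that parallel work.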

[0023] (1) T...
