Image-based outdoor illumination environment reconstruction method

A technique in the field of environment and pixel processing, applied in 3D image processing, image enhancement, and image analysis. It addresses discrepancies between lighting estimates, discontinuous light-and-shadow effects on virtual objects, and the neglect of correlation between consecutive video frames. The result is smooth light-and-shadow effects.



Detailed Description of Embodiments

[0055] The present invention will be further described below in conjunction with specific embodiments.

[0056] A video-based outdoor lighting environment reconstruction method comprises the following steps:

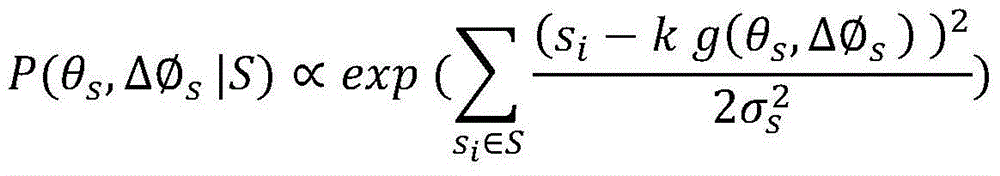

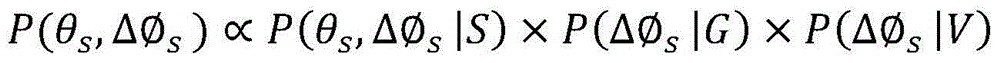

[0057] 1. Extract key frames from the video at equal time intervals. Using the sky, the ground, and the vertical surfaces in each key-frame image as cues, estimate from each cue a probability distribution map of the sun position. Combine the sun-position probabilities obtained from the sky, ground, and vertical surfaces to infer the probability distribution map of the sun position in the key-frame scene, and generate a sparse radiance map of the key frame;
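The following Python sketch illustrates step 1 under stated assumptions. The text shown here does not say how the per-cue maps are combined. The sketch assumes the cues are conditionally independent and multiplies their maps over a discretized (elevation, azimuth) grid, which is one common way to fuse such cues. The function names, the grid, and the fixed frame interval are hypothetical. Generation of the sparse radiance map is not shown.

```python
import numpy as np


def keyframe_indices(num_frames: int, interval: int) -> list[int]:
    """Hypothetical helper: key-frame indices at a fixed frame interval.

    For constant-frame-rate video, a fixed frame interval equals a fixed time interval.
    """
    if interval <= 0:
        raise ValueError("interval must be positive")
    return list(range(0, num_frames, interval))


def fuse_sun_probability(cue_maps: list[np.ndarray], eps: float = 1e-12) -> np.ndarray:
    """Combine per-cue sun-position maps (sky, ground, vertical surfaces).

    Assumption: cues are conditionally independent, so the fused map is the
    normalized product of the individual maps over the same (elevation, azimuth) grid.
    """
    log_p = np.zeros_like(cue_maps[0], dtype=float)
    for p in cue_maps:
        log_p += np.log(p + eps)  # sum of logs avoids underflow of the raw product
    log_p -= log_p.max()          # shift so the largest cell becomes exp(0) = 1
    fused = np.exp(log_p)
    return fused / fused.sum()    # renormalize to a probability distribution


def most_likely_sun(fused: np.ndarray, elevations: np.ndarray,
                    azimuths: np.ndarray) -> tuple[float, float]:
    """Return the (elevation, azimuth) grid cell with the highest fused probability."""
    i, j = np.unravel_index(np.argmax(fused), fused.shape)
    return float(elevations[i]), float(azimuths[j])
```

For example, `keyframe_indices(100, 25)` returns `[0, 25, 50, 75]`. Working in log space keeps the product from underflowing when many grid cells hold small probabilities.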

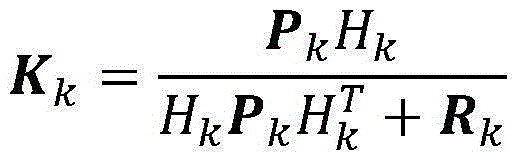

[0058] 2. Using a lighting-parameter filtering algorithm, the lighting estimates of the video key frames are used to correct those of the non-key frames, and the virtual an...
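The text shown does not specify the filtering algorithm used in step 2. As one illustrative option, the sketch below runs a scalar Kalman filter over a per-frame lighting parameter. Key frames receive a much smaller measurement variance than non-key frames, so key-frame estimates dominate and damp frame-to-frame jitter in the non-key frames. The `Estimate` type, the variance values, and the reduction of the lighting state to a single scalar are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Estimate:
    value: float       # hypothetical scalar lighting parameter, e.g. sun intensity
    is_keyframe: bool  # key frames are trusted more than non-key frames


def filter_lighting(estimates: list[Estimate], process_var: float = 1e-3,
                    key_var: float = 1e-4, nonkey_var: float = 1e-1) -> list[float]:
    """Scalar Kalman filter with a random-walk model over per-frame lighting estimates.

    Assumption: the low measurement variance of key frames pulls the noisier
    non-key-frame estimates toward them, smoothing lighting over time.
    """
    x = estimates[0].value
    p = key_var if estimates[0].is_keyframe else nonkey_var
    out = [x]
    for e in estimates[1:]:
        p += process_var                              # predict: parameter drifts slowly
        r = key_var if e.is_keyframe else nonkey_var  # per-frame measurement noise
        k = p / (p + r)                               # Kalman gain
        x += k * (e.value - x)                        # correct with this frame's estimate
        p *= 1.0 - k                                  # updated state variance
        out.append(x)
    return out
```

This filter runs forward in time only, so a non-key frame is corrected by earlier key frames but not later ones. A forward-backward (Rauch-Tung-Striebel) smoother would also let the next key frame influence it, at the cost of buffering frames.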

