Memory allocation method and delay-aware memory allocation apparatus for balancing memory access delay among multiple nodes in a NUMA architecture

A memory allocation and memory access technology, applied in the fields of multiprogramming arrangements, resource allocation, and program control design. It addresses unfair sharing of memory resources, widening performance differences between application processes, and fluctuation in overall application performance.

Examples

Embodiment 1

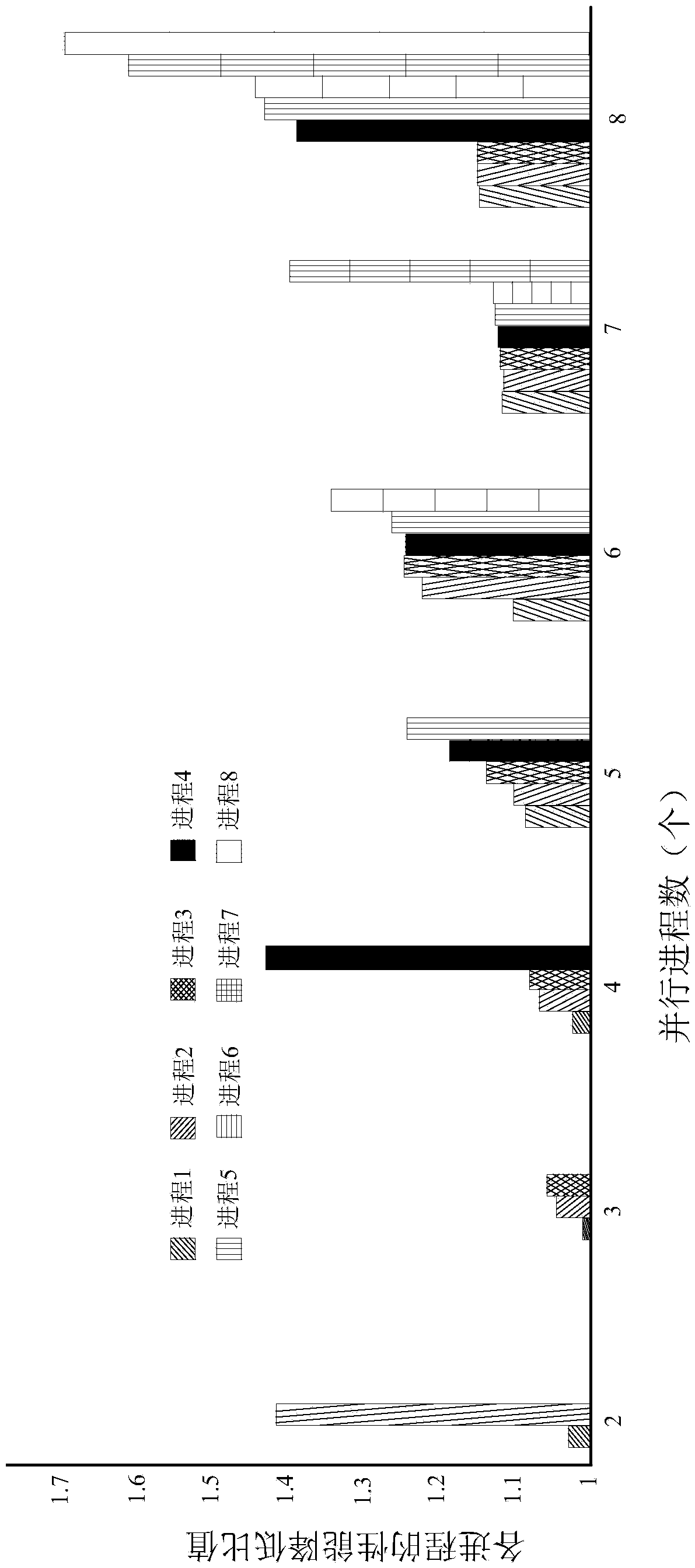

[0110] This embodiment uses a NUMA architecture with two memory nodes. It applies the memory allocation method for balancing memory access delay among multiple nodes, together with the delay-aware memory allocation device of the present invention, to perform a delay-aware balanced memory allocation test.
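To make the idea of delay-aware balanced allocation on a two-node system more concrete, the following is a minimal sketch only. The excerpt does not give the patent's actual allocation policy, so the policy here is an assumption: stay on the local node while node delays are roughly balanced, and otherwise allocate from the node with the lowest measured delay. The names `NodeState`, `pick_node`, and `imbalance_threshold` are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class NodeState:
    """Hypothetical per-node bookkeeping; not taken from the patent text."""
    node_id: int
    access_delay_ns: float  # assumed: measured average memory access delay
    free_pages: int


def pick_node(nodes, local_node_id, imbalance_threshold=0.2):
    """Return the id of the node to allocate the next page from.

    Assumed policy: use the local node while the relative delay gap between
    the slowest and fastest node is within `imbalance_threshold`; otherwise
    use the lowest-delay node that still has free pages.
    """
    candidates = [n for n in nodes if n.free_pages > 0]
    if not candidates:
        raise MemoryError("no free pages on any node")

    fastest = min(candidates, key=lambda n: n.access_delay_ns)
    slowest = max(candidates, key=lambda n: n.access_delay_ns)

    # Relative gap between the slowest and the fastest candidate node.
    if slowest.access_delay_ns > 0:
        gap = (slowest.access_delay_ns - fastest.access_delay_ns) / slowest.access_delay_ns
    else:
        gap = 0.0

    local = next((n for n in candidates if n.node_id == local_node_id), None)
    if local is not None and gap <= imbalance_threshold:
        return local.node_id
    return fastest.node_id
```

With two nodes at 100 ns and 180 ns, a process local to the slower node would be steered to the faster one; with 100 ns and 110 ns it would stay local. The threshold value is illustrative and would need tuning on real hardware.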

[0111] Experimental conditions: an IBM blade server with two Intel E5620 processors and two memory nodes, running the RedHat CentOS 6.5 operating system with kernel version linux-2.6.32. After the server is started, hyper-threading and prefetching are disabled.

[0112] Test process (1): In a scenario running multiple parallel instances of a single application, the memory allocation process without delay awareness is tested and compared with the memory allocation process of the present invention in the delay-aware balanced state. The number of processes running in parallel ranges from 1 to 8, and the comparison of perfor...
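The sweep over 1 to 8 parallel instances described in test process (1) can be sketched as a small harness. This is an illustration under assumptions, not the test setup used in the patent: the benchmark command is a placeholder, and the helper names `_timed_run`, `run_parallel`, and `sweep` are hypothetical. The harness records the mean run time and the spread between the fastest and slowest instance, since the spread is one simple way to see performance differences between processes.

```python
import subprocess
import sys
import time
from concurrent.futures import ThreadPoolExecutor


def _timed_run(cmd):
    """Run one instance of `cmd` and return its wall-clock time in seconds."""
    start = time.perf_counter()
    subprocess.run(cmd, check=True)
    return time.perf_counter() - start


def run_parallel(cmd, instances):
    """Launch `instances` copies of `cmd` concurrently, one per worker thread."""
    with ThreadPoolExecutor(max_workers=instances) as pool:
        return list(pool.map(_timed_run, [cmd] * instances))


def sweep(cmd, max_instances=8):
    """Repeat the run for 1..max_instances parallel copies and summarize each."""
    results = {}
    for n in range(1, max_instances + 1):
        durations = run_parallel(cmd, n)
        results[n] = {
            "mean": sum(durations) / n,
            # Gap between the slowest and fastest instance in this run.
            "spread": max(durations) - min(durations),
        }
    return results


if __name__ == "__main__":
    # Placeholder workload; substitute the real benchmark command here.
    placeholder_cmd = [sys.executable, "-c", "pass"]
    for n, stats in sweep(placeholder_cmd).items():
        print(n, round(stats["mean"], 4), round(stats["spread"], 4))
```

Running the same sweep once under each allocation policy would give two tables to compare. How the policy is switched depends on the system under test and is not covered by this sketch.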
