Lane line segmentation method and apparatus

A lane line segmentation technology using rough segmentation, applied in the field of maps. It addresses problems such as large errors, susceptibility to noise, and inaccurate lane line segmentation, and achieves accurate segmentation with improved accuracy.

Examples

Embodiment 1

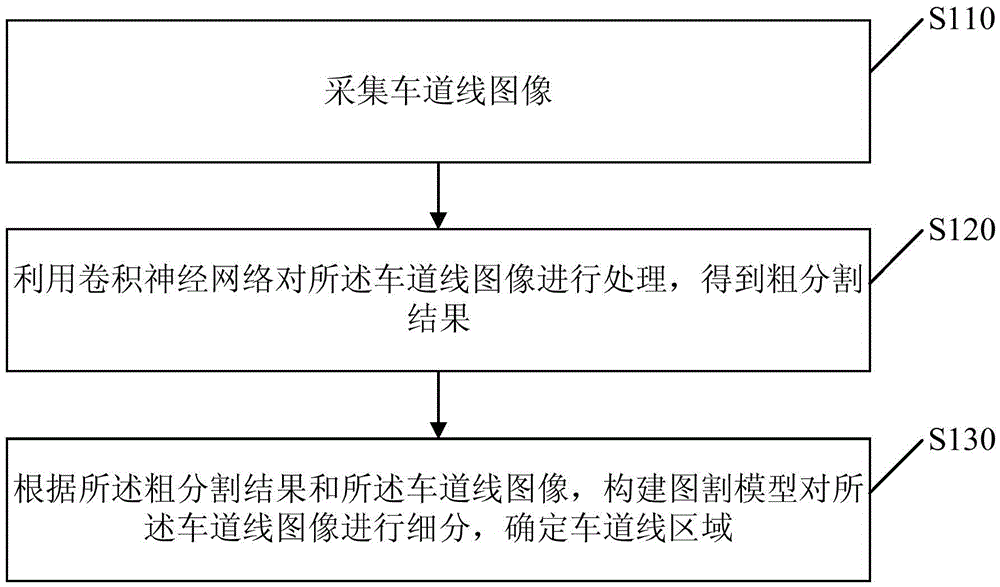

[0025] Figure 1 is a flow chart of a lane line segmentation method provided by Embodiment 1 of the present invention. This embodiment is applicable to segmenting the lane line from a lane line image captured during lane line localization. The method can be executed by a lane line segmentation apparatus, which can be integrated in a terminal such as a computer or a mobile terminal, and specifically includes the following:

[0026] S110. Collect a lane line image.

[0027] When the lane line is being located, the lane line image is captured by controlling a camera.

[0028] S120. Process the lane line image with a convolutional neural network to obtain a rough segmentation result.

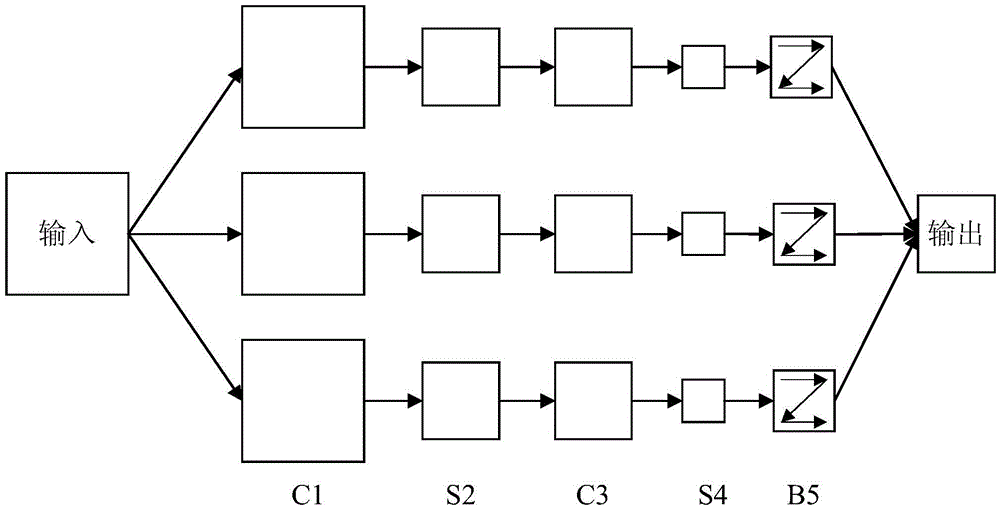

[0029] Here, a convolutional neural network (CNN) is a feed-forward neural network whose artificial neurons respond to surrounding units within a local coverage area (receptive field), and it performs very well on large-scale image processing. The spatial re...
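The network architecture used in the patent is cut off above, so the following is only a toy sketch of how a convolution layer turns an image into a per-pixel rough segmentation. It uses a single 3x3 convolution with hand-set, hypothetical weights (`STRIPE_KERNEL`, `STRIPE_BIAS`) that respond to thin bright vertical stripes, followed by a sigmoid and a threshold. A real lane network would stack many learned layers; none of the names below come from the source.

```python
import math
from typing import List

Grid = List[List[float]]


def conv2d_same(image: Grid, kernel: Grid, bias: float = 0.0) -> Grid:
    """Zero-padded 'same' 2-D cross-correlation, the operation a CNN conv layer computes."""
    h, w = len(image), len(image[0])
    kh, kw = len(kernel), len(kernel[0])
    ph, pw = kh // 2, kw // 2
    out = [[bias] * w for _ in range(h)]
    for i in range(h):
        for j in range(w):
            for a in range(kh):
                for b in range(kw):
                    y, x = i + a - ph, j + b - pw
                    if 0 <= y < h and 0 <= x < w:  # pixels outside the image count as zero
                        out[i][j] += kernel[a][b] * image[y][x]
    return out


def sigmoid_map(feature: Grid) -> Grid:
    """Squash each activation into (0, 1) so it reads as a lane probability."""
    return [[1.0 / (1.0 + math.exp(-v)) for v in row] for row in feature]


# Hypothetical hand-set weights (not trained, not from the patent): positive centre
# column, negative side columns, so thin bright vertical stripes respond strongly.
STRIPE_KERNEL = [[-1.0, 2.0, -1.0] for _ in range(3)]
STRIPE_BIAS = -2.0


def rough_segment(image: Grid, threshold: float = 0.5):
    """Return (probability map, binary rough mask) for an image with values in [0, 1]."""
    prob = sigmoid_map(conv2d_same(image, STRIPE_KERNEL, STRIPE_BIAS))
    mask = [[1 if p > threshold else 0 for p in row] for row in prob]
    return prob, mask
```

For a 5x5 image whose middle column is 1.0 and everything else 0.0, `rough_segment` marks only the middle column as lane. The probability map, not just the mask, is what later steps can use to separate confident pixels from uncertain ones.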

Embodiment 2

[0045] Figure 5 is a flow chart of a lane line segmentation method provided in Embodiment 2 of the present invention. This embodiment optimizes the step of Embodiment 1 in which a graph cut model is constructed according to the rough segmentation result and the lane line image, the lane line image is subdivided, and the lane line area is determined. It includes the following:

[0046] S510. Collect a lane line image.

[0047] S520. Process the lane line image by using a convolutional neural network to obtain a rough segmentation result.

[0048] S530. Determine an absolute foreground area, an absolute background area, and an uncertain area in the lane line image according to the rough segmentation result.

[0049] This step mainly initializes the graph cut model: by processing the rough segmentation result, it indicates which pixels in the lane line image belong to the absolute foreground area (lane line), and which pixels belong to the...
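The source does not say (in the surviving text) how the three areas are derived from the rough result. A minimal sketch, assuming the rough segmentation is a per-pixel lane probability map and using two hypothetical thresholds: pixels at or above `fg_thresh` become absolute foreground, pixels at or below `bg_thresh` become absolute background, and everything in between is left uncertain for the graph cut to decide. The label codes are illustrative only.

```python
from typing import List

# Hypothetical label codes for the three areas (not defined in the source).
BACKGROUND, FOREGROUND, UNCERTAIN = 0, 1, 2


def build_trimap(prob: List[List[float]],
                 fg_thresh: float = 0.9,
                 bg_thresh: float = 0.1) -> List[List[int]]:
    """Split a lane-probability map into absolute fg, absolute bg and uncertain pixels."""
    if not 0.0 <= bg_thresh < fg_thresh <= 1.0:
        raise ValueError("need 0 <= bg_thresh < fg_thresh <= 1")
    trimap = []
    for row in prob:
        out_row = []
        for p in row:
            if p >= fg_thresh:
                out_row.append(FOREGROUND)   # confidently lane line
            elif p <= bg_thresh:
                out_row.append(BACKGROUND)   # confidently not lane line
            else:
                out_row.append(UNCERTAIN)    # left for the graph cut to label
        trimap.append(out_row)
    return trimap
```

For example, `build_trimap([[0.95, 0.5, 0.02]])` returns `[[1, 2, 0]]`. Wider threshold gaps leave more pixels to the graph cut; narrower gaps trust the CNN more.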

Embodiment 3

[0075] Figure 6 is a flow chart of a lane line segmentation method provided by Embodiment 3 of the present invention. This embodiment optimizes Embodiment 1 by adding, on the basis of Embodiment 1, a step of verifying the edge points of the lane line area. It specifically includes the following:

[0076] S610. Collect a lane line image.

[0077] S620. Process the lane line image by using a convolutional neural network to obtain a rough segmentation result.

[0078] S630. According to the rough segmentation result and the lane line image, construct a graph cut model to subdivide the lane line image, and determine a lane line area.
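The graph cut model itself is not spelled out in the surviving text. The sketch below shows the general technique under stated assumptions: each pixel is a node; absolute foreground and background pixels are pinned to the source or sink with a large capacity (`HARD`); uncertain pixels get data terms equal to the squared distance from hypothetical class means `fg_mean` and `bg_mean`; and 4-neighbours are joined by smoothness edges that are cheaper to cut across strong intensity changes. A minimum s-t cut (computed here with Edmonds-Karp max flow) then labels the pixels. All names and the energy are illustrative, not the patent's.

```python
import math
from collections import deque
from typing import Dict, List, Set, Tuple

BACKGROUND, FOREGROUND, UNCERTAIN = 0, 1, 2  # hypothetical trimap codes
HARD = 1e9  # large finite capacity standing in for an "infinite" t-link


def min_cut_source_side(n: int, edges: List[Tuple[int, int, float]], s: int, t: int) -> Set[int]:
    """Edmonds-Karp max flow; returns nodes reachable from s in the final residual graph."""
    cap: List[Dict[int, float]] = [dict() for _ in range(n)]
    for u, v, c in edges:
        cap[u][v] = cap[u].get(v, 0.0) + c
        cap[v].setdefault(u, 0.0)  # reverse residual edge
    while True:
        parent = {s: None}
        queue = deque([s])
        while queue and t not in parent:  # BFS finds a shortest augmenting path
            u = queue.popleft()
            for v, c in cap[u].items():
                if c > 1e-12 and v not in parent:
                    parent[v] = u
                    queue.append(v)
        if t not in parent:  # no augmenting path left: flow is maximal
            return set(parent)
        path = []
        v = t
        while parent[v] is not None:
            path.append((parent[v], v))
            v = parent[v]
        flow = min(cap[u][v] for u, v in path)  # bottleneck capacity
        for u, v in path:
            cap[u][v] -= flow
            cap[v][u] += flow


def graph_cut_segment(image, trimap, fg_mean, bg_mean, smoothness=1.0):
    """Label each pixel 1 (lane line) or 0 (background) via a minimum s-t cut."""
    h, w = len(image), len(image[0])
    n = h * w
    s, t = n, n + 1
    edges = []
    for i in range(h):
        for j in range(w):
            p = i * w + j
            if trimap[i][j] == FOREGROUND:
                edges.append((s, p, HARD))  # pinned to the lane side
            elif trimap[i][j] == BACKGROUND:
                edges.append((p, t, HARD))  # pinned to the background side
            else:
                x = image[i][j]
                # s->p is cut when p ends up background, so it carries the background cost;
                # p->t is cut when p ends up foreground, so it carries the foreground cost.
                edges.append((s, p, (x - bg_mean) ** 2))
                edges.append((p, t, (x - fg_mean) ** 2))
            for di, dj in ((0, 1), (1, 0)):  # right and down neighbours
                ni, nj = i + di, j + dj
                if ni < h and nj < w:
                    q = ni * w + nj
                    wgt = smoothness * math.exp(-(image[i][j] - image[ni][nj]) ** 2)
                    edges.append((p, q, wgt))
                    edges.append((q, p, wgt))
    source_side = min_cut_source_side(n + 2, edges, s, t)
    return [[1 if i * w + j in source_side else 0 for j in range(w)] for i in range(h)]
```

On the single-row image `[[0.9, 0.8, 0.85, 0.1, 0.0]]` with the first pixel pinned to foreground, the last pinned to background, and `fg_mean=0.9`, `bg_mean=0.05`, the cut falls at the large intensity drop and returns `[[1, 1, 1, 0, 0]]`. Practical GrabCut-style models replace the squared-distance data term with negative log-likelihoods from learned colour models.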

[0079] S640. Verify the edge points of the lane line area to determine the outline of the lane line.

[0080] The RANSAC (RANdom SAmple Consensus) algorithm is used to verify the edge points of the lane line area and eliminate outliers, that is, pixels judged to belong to the lane line area instead of ...
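The surviving text does not state which model RANSAC fits to the edge points. A minimal sketch, assuming one lane edge is approximately straight and parametrized as `x = m*y + c` (a convenient form for near-vertical lane lines in image coordinates), with residuals measured horizontally and a hypothetical pixel tolerance `tol`. Each iteration fits a line to two randomly sampled edge points, counts how many points lie within `tol`, and keeps the best-supported line; points outside it are treated as outliers. Curved lanes would need a higher-order model, for example a polynomial in `y`.

```python
import random
from typing import List, Optional, Tuple

Point = Tuple[float, float]  # (x, y) in pixel coordinates


def ransac_line(points: List[Point], iterations: int = 200, tol: float = 1.0, seed: int = 0):
    """Fit x = m*y + c to edge points with RANSAC; return ((m, c), inliers, outliers)."""
    if len(points) < 2:
        raise ValueError("need at least two points")
    rng = random.Random(seed)  # seeded so results are reproducible
    best_model: Optional[Tuple[float, float]] = None
    best_count = -1
    for _ in range(iterations):
        (x1, y1), (x2, y2) = rng.sample(points, 2)  # minimal sample for a line
        if y1 == y2:
            continue  # x = m*y + c cannot pass through two points in the same row
        m = (x2 - x1) / (y2 - y1)
        c = x1 - m * y1
        count = sum(1 for x, y in points if abs(x - (m * y + c)) <= tol)
        if count > best_count:  # keep the line with the most support
            best_model, best_count = (m, c), count
    if best_model is None:
        raise ValueError("every sampled pair was degenerate")
    m, c = best_model
    inliers = [p for p in points if abs(p[0] - (m * p[1] + c)) <= tol]
    outliers = [p for p in points if abs(p[0] - (m * p[1] + c)) > tol]
    return best_model, inliers, outliers
```

With 20 edge points on `x = 0.5*y + 10` plus three stray pixels, the sketch recovers `m = 0.5`, `c = 10` and reports the three strays as outliers, which is the kind of wrongly included pixel this step is meant to remove.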

© 2025 PatSnap. All rights reserved.