Subspace-based self-paced cross-modal matching method

A cross-modal matching method in the field of pattern recognition. It addresses three problems: existing methods cannot meet everyday needs, manually labeling data is time-consuming and laborious, and unlabeled information cannot be exploited. The method improves robustness and accuracy and has good application prospects.

Embodiment Construction

[0014] To make the objectives, technical solutions, and advantages of the present invention clearer, the invention is described in further detail below with reference to specific embodiments and the accompanying drawings.

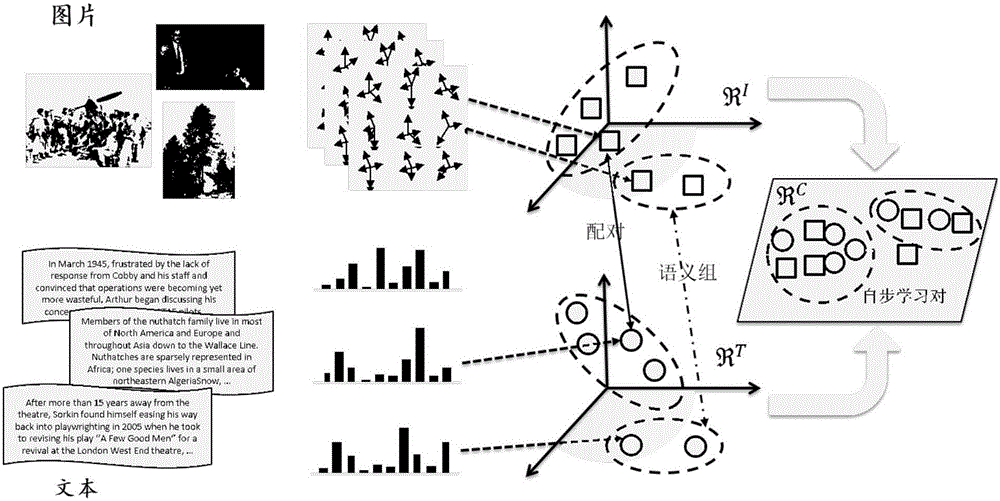

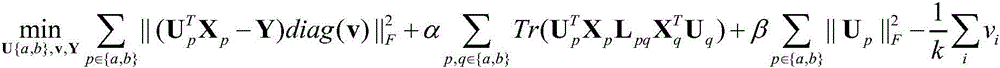

[0015] The present invention learns two mapping matrices that project data of different modalities into the same subspace. Sample selection and feature learning are performed during the mapping, and multi-modal graph constraints are used to preserve both the intra-modal and the inter-modal similarity of the data. Cross-modal matching is then achieved by measuring the similarity between data of different modalities in the learned subspace.
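The excerpt does not state the objective function, so the following is only a minimal NumPy sketch of the general idea under explicit assumptions. Two projection matrices `U` (first modality) and `V` (second modality) are learned by regressing both modalities onto a shared target matrix `T`, assumed here to be one-hot class labels. Sample selection uses a standard hard self-paced rule: a sample pair is kept only if its loss is below a threshold `lam`, and `lam` grows each round (factor `growth`) so harder pairs are admitted gradually. The multi-modal graph constraints and any feature-selection norm are deliberately omitted. All function names (`fit_projection`, `self_paced_fit`, `cosine_scores`) and parameters are hypothetical, not the patent's.

```python
import numpy as np

# Hypothetical sketch, NOT the patented objective: both modalities are regressed onto a
# shared target T (assumed one-hot labels) with hard self-paced sample weights.
# The patent's multi-modal graph term and feature-selection norm are omitted here.

def fit_projection(X, T, w, beta):
    """Closed-form argmin_U sum_i w_i * ||x_i U - t_i||^2 + beta * ||U||_F^2."""
    Xw = X * w[:, None]                      # scale each sample row by its weight w_i
    d = X.shape[1]
    return np.linalg.solve(X.T @ Xw + beta * np.eye(d), Xw.T @ T)


def self_paced_fit(X, Y, T, beta=1e-2, growth=1.3, n_iter=10):
    """Alternate: fit U, V on the selected pairs, then re-select pairs by their loss."""
    w = np.ones(X.shape[0])                  # start with every sample pair selected
    lam = None                               # self-paced threshold (the "age" parameter)
    for _ in range(n_iter):
        U = fit_projection(X, T, w, beta)
        V = fit_projection(Y, T, w, beta)
        # Per-pair loss: how far both modalities of pair i land from its target.
        loss = np.sum((X @ U - T) ** 2, axis=1) + np.sum((Y @ V - T) ** 2, axis=1)
        # First round keeps roughly the easier half; later rounds relax the threshold.
        lam = np.median(loss) if lam is None else lam * growth
        w = (loss <= lam).astype(float)      # hard weights: 1 = "easy" pair, 0 = skipped
    # Final refit on the last selection.
    return fit_projection(X, T, w, beta), fit_projection(Y, T, w, beta), w


def cosine_scores(A, B, eps=1e-12):
    """Cosine similarity between every row of A and every row of B."""
    A = A / (np.linalg.norm(A, axis=1, keepdims=True) + eps)
    B = B / (np.linalg.norm(B, axis=1, keepdims=True) + eps)
    return A @ B.T


# Usage on synthetic paired data: 3 classes, 20-d "image" and 15-d "text" features.
rng = np.random.default_rng(0)
n_cls, n = 3, 60
labels = rng.integers(0, n_cls, size=n)
Mx = rng.normal(scale=3.0, size=(n_cls, 20))  # class centers for modality 1
My = rng.normal(scale=3.0, size=(n_cls, 15))  # class centers for modality 2
X = Mx[labels] + rng.normal(size=(n, 20))
Y = My[labels] + rng.normal(size=(n, 15))
T = np.eye(n_cls)[labels]                     # assumed shared target: one-hot labels

U, V, w = self_paced_fit(X, Y, T)
S = cosine_scores(X @ U, Y @ V)               # image-to-text similarity in the subspace
best = S.argmax(axis=1)                       # top-ranked text for each image query
acc = np.mean(labels[best] == labels)         # share of queries whose top hit has the same class
```

Matching happens entirely in the learned subspace: once `U` and `V` are fixed, any query from one modality is projected and ranked against the projected items of the other. The closed-form ridge solve keeps each self-paced round cheap. A graph-regularized variant would add a Laplacian term to the same linear system instead of changing the selection loop.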

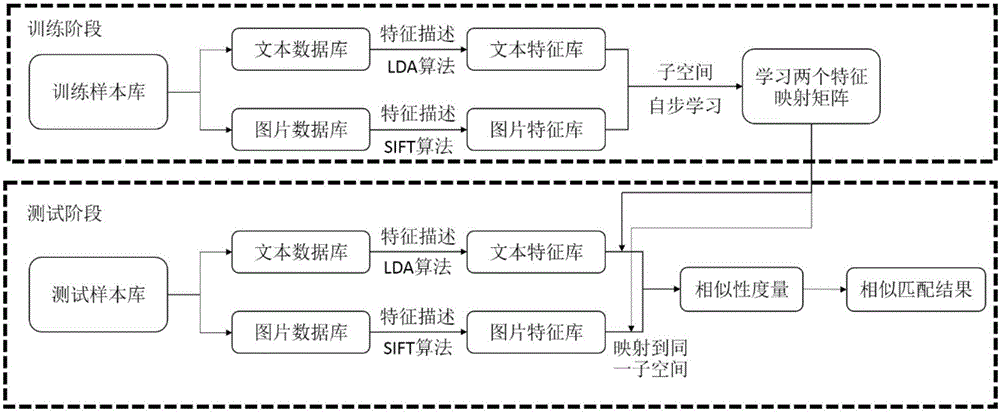

[0016] As shown in Figure 1, the subspace-based self-paced cross-modal matching method includes the following steps:

[0017] Step S1: collect data samples of different modalities, build a cross-modal database, and divide the database into a training set and a test set;
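Step S1 only says the database is divided into training and test sets; the split ratio and procedure are not given in this excerpt. One point worth making concrete is that cross-modal samples are pairs, so the same random permutation must be applied to every modality to keep them aligned. A minimal sketch, assuming row-aligned feature arrays and a hypothetical helper `split_cross_modal` with an assumed `test_ratio` of 0.2:

```python
import numpy as np

def split_cross_modal(X, Y, labels, test_ratio=0.2, seed=0):
    """Randomly split paired samples, applying one permutation to both modalities."""
    n = X.shape[0]
    # Row i of X, row i of Y and labels[i] must describe the same object.
    assert Y.shape[0] == n == labels.shape[0], "modalities must be row-aligned pairs"
    perm = np.random.default_rng(seed).permutation(n)
    n_test = int(round(n * test_ratio))
    test_idx, train_idx = perm[:n_test], perm[n_test:]
    train = (X[train_idx], Y[train_idx], labels[train_idx])
    test = (X[test_idx], Y[test_idx], labels[test_idx])
    return train, test


# Usage: 10 toy pairs whose features encode their own index, so alignment is checkable.
X = np.arange(20).reshape(10, 2)      # 10 "image" feature vectors, row i = [2i, 2i+1]
Y = np.arange(30).reshape(10, 3)      # 10 paired "text" vectors, row i = [3i, 3i+1, 3i+2]
labels = np.arange(10)                # here each pair's label is simply its index
train, test = split_cross_modal(X, Y, labels, test_ratio=0.2)
```

Indexing all arrays with the same `train_idx` and `test_idx` is what guarantees that no image ends up paired with the wrong text after shuffling.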

[0018] It should be noted that the...
