A semantic human-computer natural interaction control method and system

An interactive control and human-computer interaction technology, applied to user/computer interaction input/output, semantic analysis, mechanical mode conversion, etc. It addresses problems such as gesture coding that is prone to false triggering and has low reliability, and it achieves a low false-trigger rate together with improved control accuracy and reliability.

Examples

Embodiment 1

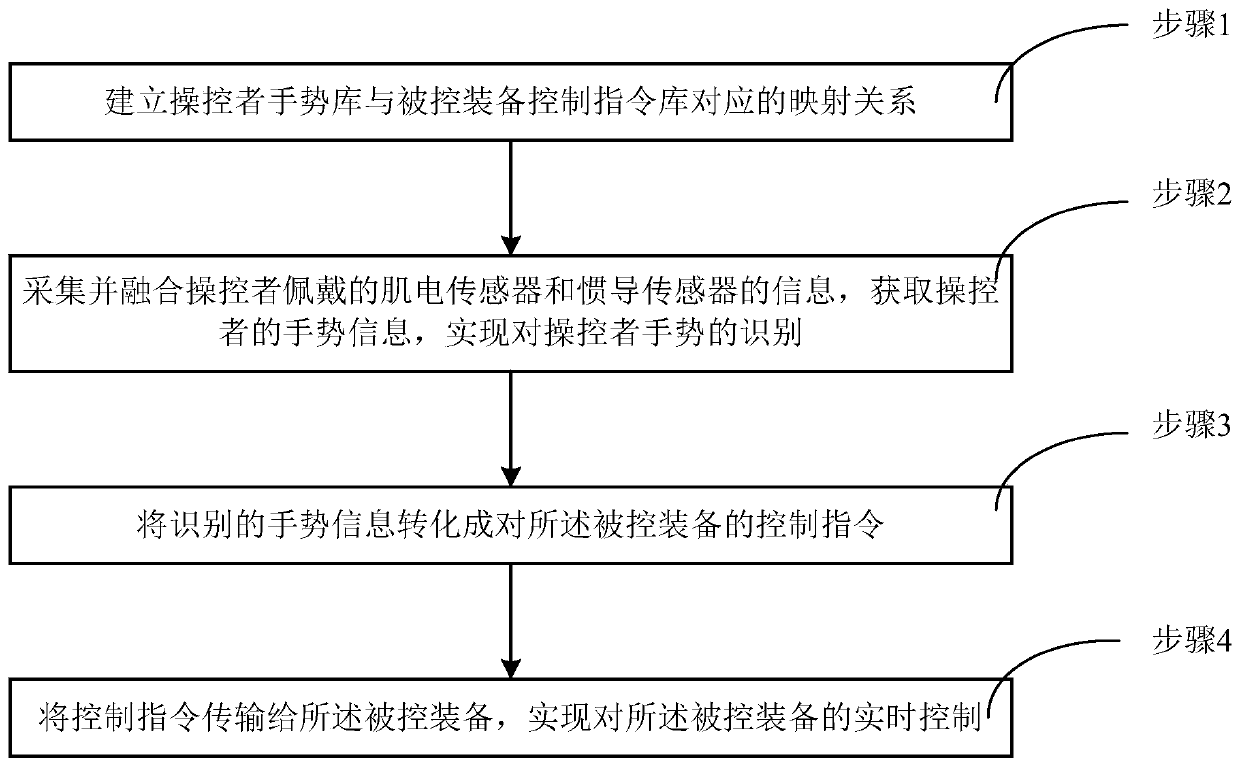

[0046] Embodiment 1: As shown in Figure 1, a semantic human-computer natural interaction control method according to an embodiment of the present invention includes:

[0047] Step 1: The control modes of the controlled equipment are represented by operator gestures, and a mapping is established between the operator's gesture library and the control command library of the controlled equipment.

[0048] The control modes of the controlled equipment are divided into basic control, compound control, and task control.

[0049] Basic control covers the most commonly used states of the controlled equipment. Basic gestures that conform to natural human expression directly represent these states, which makes the control intuitive, simple, and convenient. When the controlled equipment is a drone, its most commonly used states include takeoff, landing, forward, backward, return,...
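The gesture-to-command mapping described in Step 1 can be pictured as a lookup table. The following is a minimal sketch under stated assumptions, not the patent's implementation: the gesture labels (`palm_up`, `palm_down`, and so on) and the names `ControlMode`, `BASIC_GESTURE_TO_COMMAND`, and `lookup_command` are hypothetical. Only the three control modes and the drone states (takeoff, landing, forward, backward, return) come from the text, which does not say which gesture expresses which state.

```python
from enum import Enum
from typing import Optional


class ControlMode(Enum):
    """The three control modes named in the text."""
    BASIC = "basic"
    COMPOUND = "compound"
    TASK = "task"


# Hypothetical gesture labels mapped to the basic-control drone states
# listed in the text. The pairing of gesture to state is an assumption.
BASIC_GESTURE_TO_COMMAND = {
    "palm_up": "takeoff",
    "palm_down": "landing",
    "push_forward": "forward",
    "pull_back": "backward",
    "wave_in": "return",
}


def lookup_command(gesture: str) -> Optional[str]:
    """Return the command mapped to a gesture, or None if it is not in the library."""
    return BASIC_GESTURE_TO_COMMAND.get(gesture)
```

Returning `None` for an unrecognized gesture, rather than guessing the nearest command, is one simple way to avoid issuing a command the operator did not intend.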

Embodiment 2

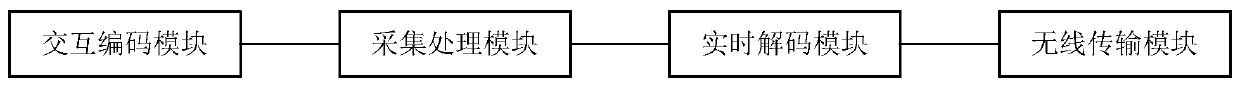

[0060] Embodiment 2: As shown in Figure 2, the present invention also provides a semantic human-computer natural interaction control system, characterized in that the system includes:

[0061] An interactive coding module, which represents the control modes of the controlled equipment through operator gestures and establishes a mapping between the operator's gesture library and the control command library of the controlled equipment.

[0062] The control modes of the controlled equipment are divided into basic control, compound control, and task control.

[0063] Basic control covers the most commonly used states of the controlled equipment. Basic gestures that conform to natural human expression directly represent these states. The most commonly used states include takeoff, landing, forward, backward, return, left turn, right turn, the correspond...
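As a rough illustration of how an interactive coding module might hold the mapping between the gesture library and the command library, the sketch below groups entries by control mode. It is a sketch under assumptions: the class name `InteractiveCodingModule`, its `register` and `encode` methods, the gesture labels, and the rule that a gesture may be registered only once are all hypothetical and are not taken from the patent text.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional, Tuple

# Control modes named in the text.
VALID_MODES = ("basic", "compound", "task")


@dataclass
class InteractiveCodingModule:
    """Hypothetical container for the gesture-to-command mapping."""
    _table: Dict[str, Tuple[str, str]] = field(default_factory=dict)

    def register(self, mode: str, gesture: str, command: str) -> None:
        """Add one gesture-to-command entry under a control mode."""
        if mode not in VALID_MODES:
            raise ValueError(f"unknown control mode: {mode}")
        # Assumption: each gesture maps to exactly one command.
        if gesture in self._table:
            raise ValueError(f"gesture already registered: {gesture}")
        self._table[gesture] = (mode, command)

    def encode(self, gesture: str) -> Optional[Tuple[str, str]]:
        """Return (mode, command) for a gesture, or None if unregistered."""
        return self._table.get(gesture)


module = InteractiveCodingModule()
module.register("basic", "swipe_left", "left turn")
module.register("basic", "swipe_right", "right turn")
```

Rejecting a second registration of the same gesture keeps the table unambiguous, so one recognized gesture can never resolve to two different commands.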
