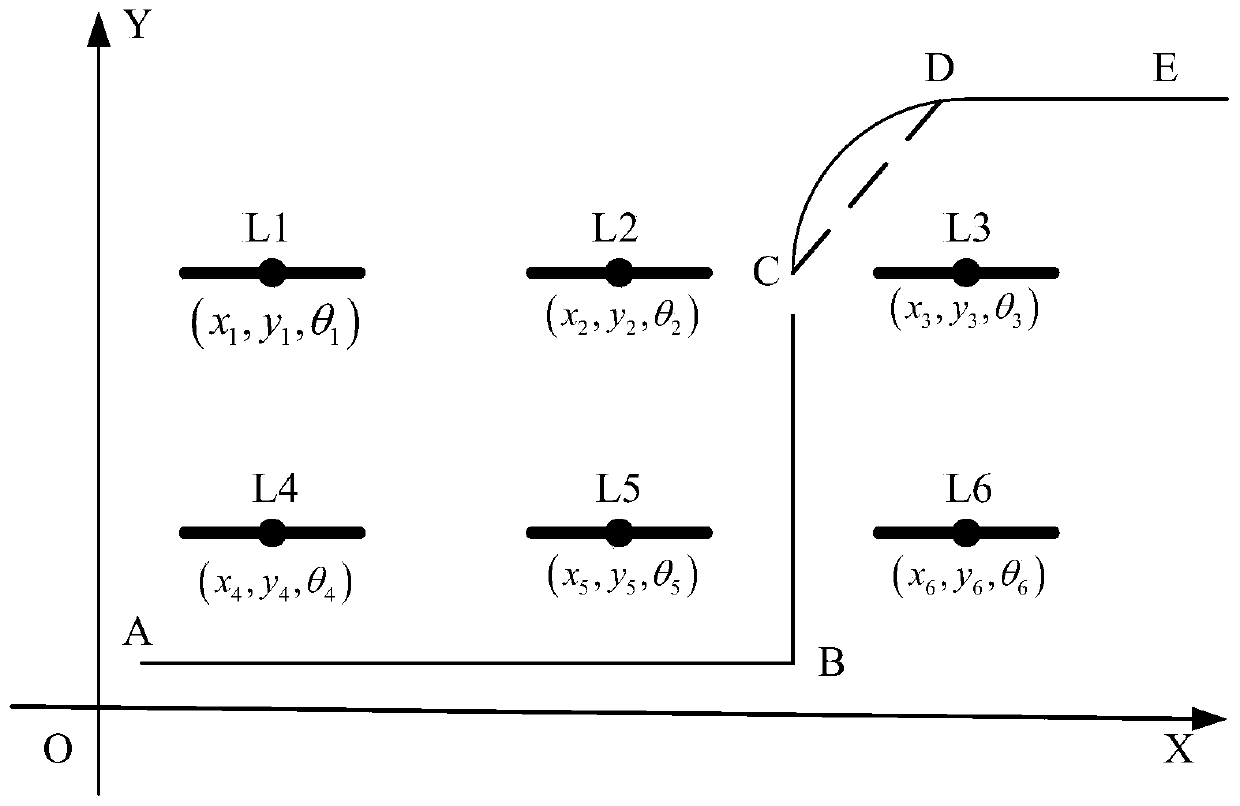

Visual navigation method of mobile robot based on indoor lighting

A visual navigation method for mobile robots, applied in the field of intelligent visual navigation. It addresses the problems of system lag, easily occluded markers, and low image-processing efficiency, and it improves real-time performance while keeping the image-processing algorithm simple.

Image

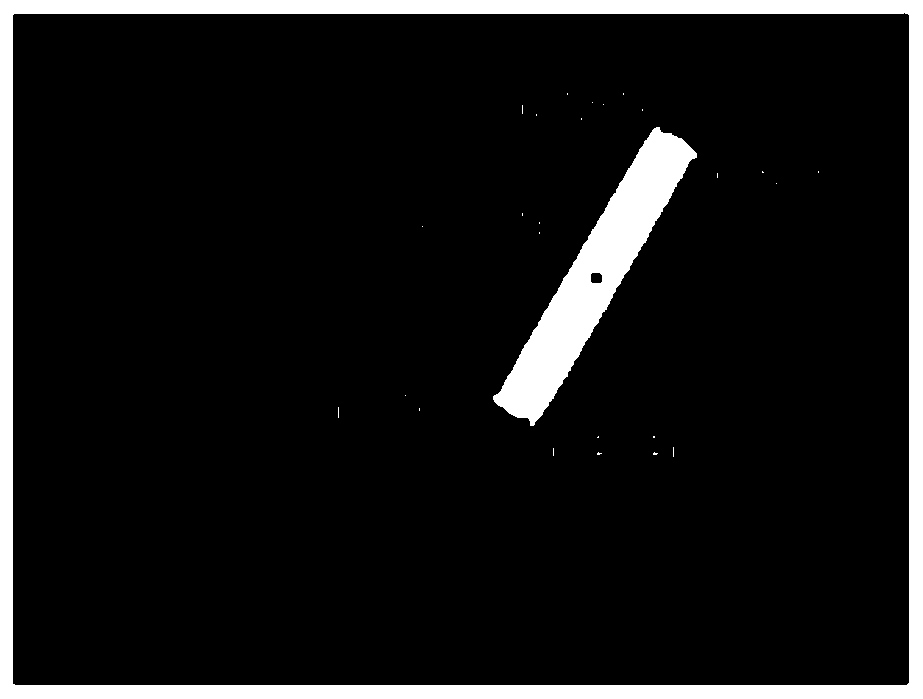

Examples

Embodiment

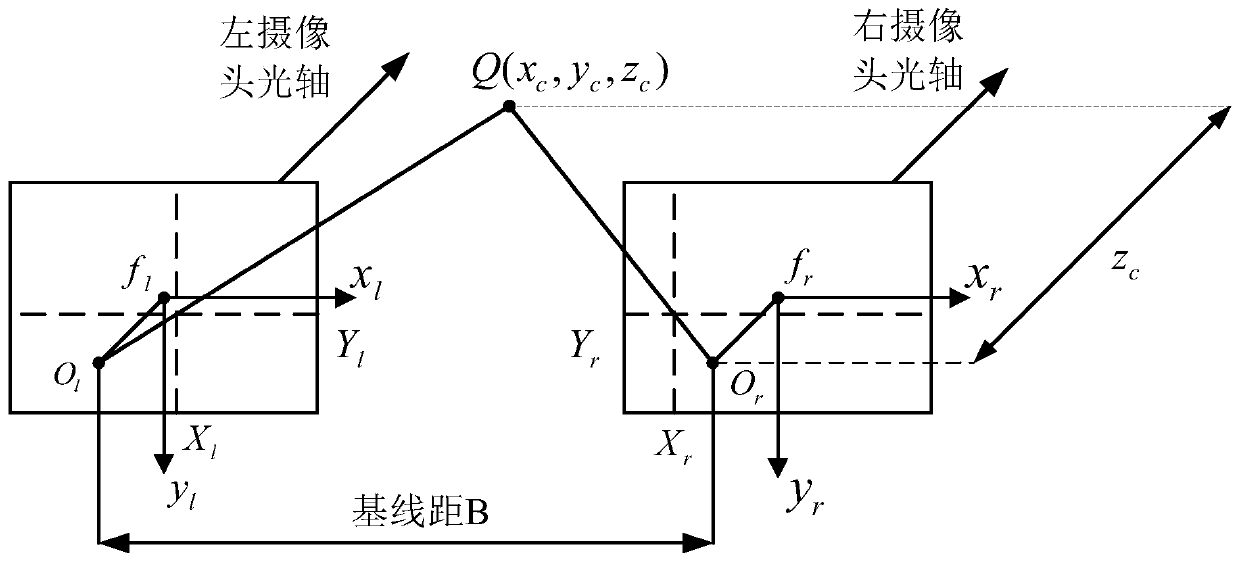

[0058] The binocular camera is a black-and-white (monochrome) stereo camera. Its main parameters are: lens spacing (baseline) B of 5 cm and a frame rate of 25 frames per second. The lens parameters are: focal length f of 4 mm, 1/3-inch format, aperture F1.6, viewing angle of 70.6 degrees, and a CMOS sensor.
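To illustrate how the baseline B and focal length f listed above enter a depth estimate, here is a minimal sketch of the standard stereo triangulation relation Z = f·B/d for a rectified camera pair. This excerpt does not state that the patent uses exactly this formula, and it does not give the sensor's pixel size, so the function name `depth_from_disparity` and the default `pixel_size_um` value are assumptions made purely for illustration.

```python
def depth_from_disparity(disparity_px, focal_length_mm=4.0,
                         baseline_cm=5.0, pixel_size_um=3.75):
    """Estimate depth (metres) from stereo disparity (pixels).

    Uses the standard rectified-stereo relation Z = f * B / d.
    focal_length_mm and baseline_cm default to the values in [0058].
    pixel_size_um is NOT given in the source; 3.75 um is an assumed
    placeholder and should be replaced by the calibrated value.
    """
    if disparity_px <= 0:
        raise ValueError("disparity must be positive")
    # Convert the focal length from millimetres to pixels.
    focal_px = (focal_length_mm * 1e-3) / (pixel_size_um * 1e-6)
    # Baseline in metres.
    baseline_m = baseline_cm * 1e-2
    return focal_px * baseline_m / disparity_px


if __name__ == "__main__":
    # Depth of a point seen with a 20-pixel disparity, under the assumed pixel size.
    print(f"{depth_from_disparity(20.0):.3f} m")
```

In practice the focal length in pixels would come from the calibrated internal parameters rather than from the nominal lens focal length and an assumed pixel size.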

[0059] The left and right cameras are calibrated using a calibration board, and the resulting internal parameters of the binocular camera are shown in Table 1.

[0060] Table 1. Internal parameters of the binocular camera

[0061]

[0062] When the above method of the present invention is used to control the moving position of the mobile robot, the resulting position error of the mobile robot is less than 3%.
