Human body posture feature extraction method based on 3D joint point coordinates

A technology concerning human body posture and joint points, applied in the field of human-computer interaction, which can solve problems such as the inability to distinguish actions.

Embodiment Construction

[0055] The present invention will be described in further detail below in conjunction with the accompanying drawings and embodiments. The following examples are used to illustrate the present invention, but should not be used to limit the scope of the present invention.

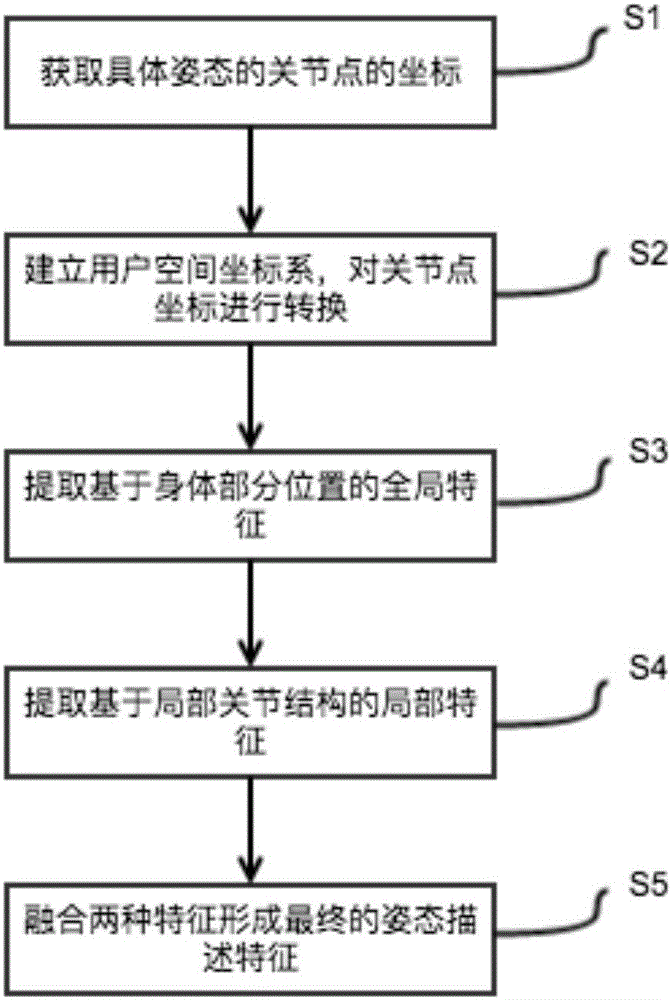

[0056] As shown in Figure 1, a human body posture feature extraction method based on 3D joint point coordinates includes the following steps:

[0057] S1. Obtain the joint point coordinates of the specific posture;

[0058] S2. Establish a user space coordinate system, and convert the joint point coordinates from the device coordinate system to the user space coordinate system;

[0059] S3. Extract global features based on the positions of the body parts;

[0060] S4. Extract local features based on the local joint structure;

[0061] S5. Fuse the global features and the local features to form the final posture description feature.
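
Steps S2 to S5 can be illustrated with a minimal Python sketch. The text above does not specify how the user space coordinate system is built or which features are used, so everything concrete here is an assumption made for illustration only: the joint names (`hip_center`, `shoulder_left`, and so on), the choice of the hip center as origin with axes derived from the shoulders and spine, the use of shoulder-width-normalized hand positions as global features, the use of elbow angles as local features, and fusion by simple concatenation.

```python
import math
from typing import Dict, List, Tuple

Vec = Tuple[float, float, float]

def sub(a: Vec, b: Vec) -> Vec:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])

def dot(a: Vec, b: Vec) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]

def cross(a: Vec, b: Vec) -> Vec:
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])

def norm(a: Vec) -> float:
    return math.sqrt(dot(a, a))

def unit(a: Vec) -> Vec:
    n = norm(a)  # degenerate (zero-length) vectors are not handled in this sketch
    return (a[0] / n, a[1] / n, a[2] / n)

def to_user_space(joints: Dict[str, Vec]) -> Dict[str, Vec]:
    """S2 (assumed construction): origin at the hip center, x along the
    shoulder line, y along the spine made orthogonal to x, z = x cross y."""
    origin = joints["hip_center"]
    x_axis = unit(sub(joints["shoulder_right"], joints["shoulder_left"]))
    up = sub(joints["shoulder_center"], origin)
    d = dot(up, x_axis)
    y_axis = unit((up[0] - d * x_axis[0],
                   up[1] - d * x_axis[1],
                   up[2] - d * x_axis[2]))
    z_axis = cross(x_axis, y_axis)
    user = {}
    for name, p in joints.items():
        rel = sub(p, origin)
        user[name] = (dot(rel, x_axis), dot(rel, y_axis), dot(rel, z_axis))
    return user

def global_features(user: Dict[str, Vec]) -> List[float]:
    """S3 (assumed): hand positions in user space, scaled by shoulder width."""
    scale = norm(sub(user["shoulder_right"], user["shoulder_left"]))
    feats: List[float] = []
    for name in ("hand_left", "hand_right"):
        feats.extend(c / scale for c in user[name])
    return feats

def joint_angle(a: Vec, b: Vec, c: Vec) -> float:
    """Angle at joint b, in radians, between segments b->a and b->c."""
    u, v = sub(a, b), sub(c, b)
    cos_angle = dot(u, v) / (norm(u) * norm(v))
    return math.acos(max(-1.0, min(1.0, cos_angle)))

def local_features(user: Dict[str, Vec]) -> List[float]:
    """S4 (assumed): elbow angles as a simple local joint structure."""
    return [
        joint_angle(user["shoulder_left"], user["elbow_left"], user["hand_left"]),
        joint_angle(user["shoulder_right"], user["elbow_right"], user["hand_right"]),
    ]

def pose_descriptor(joints: Dict[str, Vec]) -> List[float]:
    """S5 (assumed): fuse by concatenating global and local features."""
    user = to_user_space(joints)
    return global_features(user) + local_features(user)
```

Under these assumptions, the descriptor does not change when the whole skeleton is translated or rotated in the device coordinate system, because every joint is re-expressed relative to the body itself before any feature is computed.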

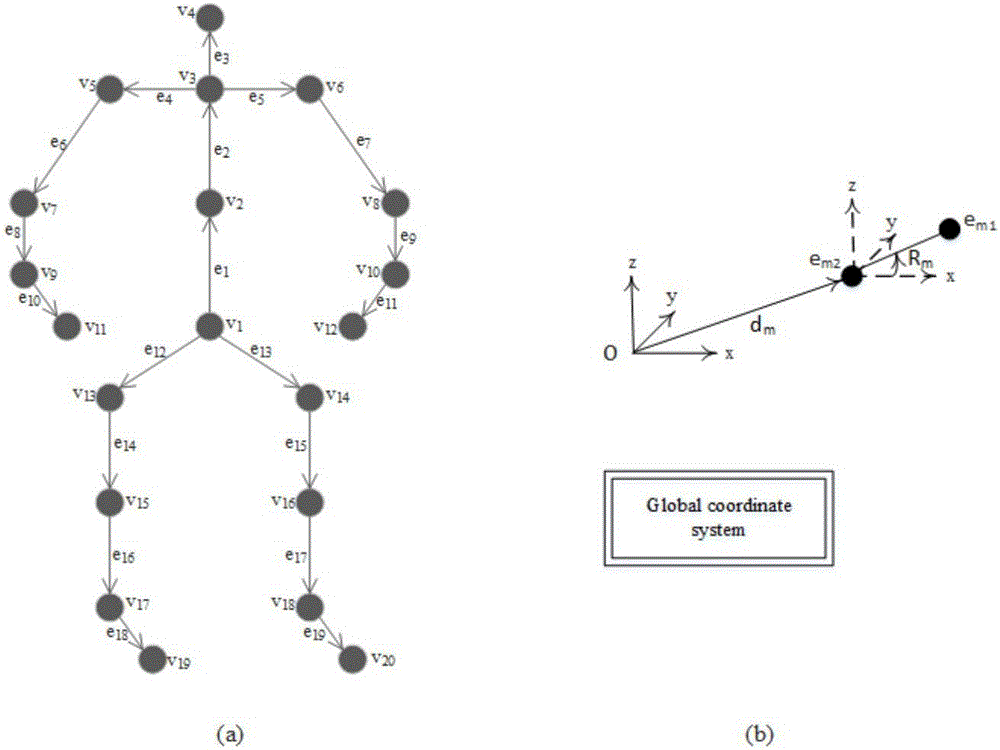

[0062] Preferably, obtaining the joint point coordinates of the specifi...
