A Hand Region Segmentation Method with Deep Fusion of Saliency Detection and Prior Knowledge

A hand region segmentation technique that fuses saliency detection with prior knowledge, applicable to fields such as character and pattern recognition, computer components, and instruments. It addresses problems of existing approaches, such as limited usability, reliance on a single technical means, and poor algorithm robustness, and achieves the effect of eliminating interference.


Embodiment

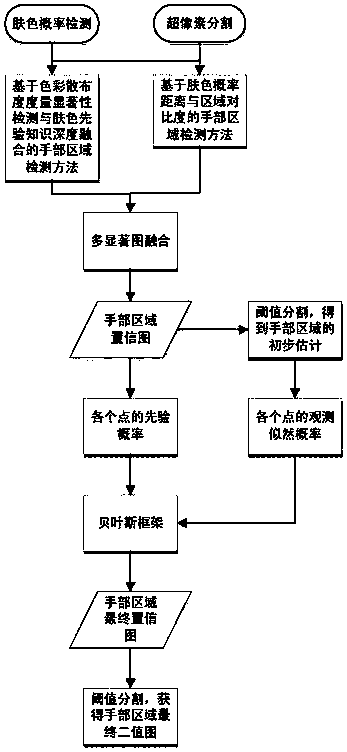

[0076] As shown in Figure 9, a hand region segmentation method that deeply fuses saliency detection and prior knowledge comprises the following steps:

[0077] (1) Perform SLIC superpixel segmentation on the original image to obtain N regions R1, R2, ..., RN. The original image is shown in Figure 1.
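The clustering idea behind step (1) can be illustrated with a minimal sketch. This is not the patent's implementation and not full SLIC: it works on a grayscale image given as a list of rows, searches all cluster centers instead of a local window, uses raw intensity instead of CIELAB color, and skips connectivity enforcement. The function name `slic_like` and the parameters `n_segments`, `compactness`, and `n_iters` are assumptions chosen for illustration. In practice a library routine such as `skimage.segmentation.slic` from scikit-image would likely be used.

```python
import math


def slic_like(image, n_segments=4, compactness=10.0, n_iters=5):
    """Simplified SLIC-style clustering of a grayscale image (list of rows).

    Returns a label map with the same shape as `image`.
    Hypothetical helper for illustration only.
    """
    h, w = len(image), len(image[0])
    step = max(1, int(math.sqrt(h * w / n_segments)))  # grid interval
    # Seed cluster centers on a regular grid as [intensity, y, x].
    centers = [
        [float(image[y][x]), float(y), float(x)]
        for y in range(step // 2, h, step)
        for x in range(step // 2, w, step)
    ]
    spatial_weight = (compactness / step) ** 2
    labels = [[0] * w for _ in range(h)]
    for _ in range(n_iters):
        # Assignment: nearest center in combined intensity + spatial distance.
        for y in range(h):
            for x in range(w):
                best, best_d = 0, float("inf")
                for k, (ci, cy, cx) in enumerate(centers):
                    d_color = (image[y][x] - ci) ** 2
                    d_space = (y - cy) ** 2 + (x - cx) ** 2
                    d = d_color + spatial_weight * d_space
                    if d < best_d:
                        best, best_d = k, d
                labels[y][x] = best
        # Update: move each center to the mean of its assigned pixels.
        sums = [[0.0, 0.0, 0.0, 0] for _ in centers]
        for y in range(h):
            for x in range(w):
                s = sums[labels[y][x]]
                s[0] += image[y][x]
                s[1] += y
                s[2] += x
                s[3] += 1
        for k, (si, sy, sx, n) in enumerate(sums):
            if n:
                centers[k] = [si / n, sy / n, sx / n]
    return labels
```

The `compactness` parameter trades color similarity against spatial proximity: larger values yield more regular, grid-like regions, while smaller values let regions follow intensity edges more closely.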

[0078] (2) Perform a preliminary detection of the hand region using a detection method that fuses saliency detection based on a color spread measure with skin-color prior knowledge. This includes:

[0079] a. In the RGB color space, quantize each color channel to t distinct values, so that the total number of colors is reduced to $t^3$;
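A minimal sketch of the quantization in step a, assuming 8-bit RGB input and uniform binning per channel (the source specifies neither). The function names and the default `t=12` are illustrative assumptions, and the returned index is 0-based, whereas the text numbers colors from 1 to $t^3$.

```python
from collections import Counter


def quantize_color(rgb, t=12):
    """Map an 8-bit (r, g, b) triple to one color index in [0, t**3).

    Assumes uniform bins per channel; t=12 is an illustrative default.
    """
    r, g, b = (c * t // 256 for c in rgb)  # each channel falls in 0..t-1
    return (r * t + g) * t + b


def color_histogram(pixels, t=12):
    """Count how many pixels fall into each quantized color."""
    return Counter(quantize_color(p, t) for p in pixels)
```

For example, `quantize_color((128, 0, 255), t=4)` maps the channels to bins 2, 0, and 3 and returns the single index 35.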

[0080] b. Calculate the saliency of each color separately. For any color $i$, $i \in \{1, 2, \ldots, t^3\}$, its saliency $S_e(i)$ is calculated by formulas (I) and (II) as follows:

[0081] $S_e(i) = P_{skin}(i)\,\exp\left(-E(i)/\sigma_e\right)$ (I)
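Formula (I) can be evaluated directly once its inputs are known. The sketch below assumes that $P_{skin}(i)$ is a skin-color prior probability for color $i$ and that $E(i)$ is the color spread measure given by formula (II); neither definition is reproduced here, so both are passed in as plain numbers. The function and parameter names are illustrative.

```python
import math


def saliency_e(p_skin, spread, sigma_e):
    """Evaluate formula (I): S_e(i) = P_skin(i) * exp(-E(i) / sigma_e).

    p_skin  -- assumed skin-color prior probability of the color
    spread  -- assumed value of E(i), the spread measure from formula (II)
    sigma_e -- scale parameter controlling how fast saliency decays
    """
    return p_skin * math.exp(-spread / sigma_e)
```

Under these assumptions, a color with a high skin prior and a small spread value scores highest, while a widely spread color is suppressed even when its skin prior is high.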

[0082]

[0083] In formulas (I) and (II), the param...
