Multimodal output method and apparatus applied to an intelligent robot

An intelligent robot output method technology, applied in the field of intelligent robots, which solves problems such as mismatched voice output and action output, poor intelligence and anthropomorphism of the robot, and related deficiencies, and achieves the effect of improving the robot's intelligence and anthropomorphism.


Examples

Embodiment 1

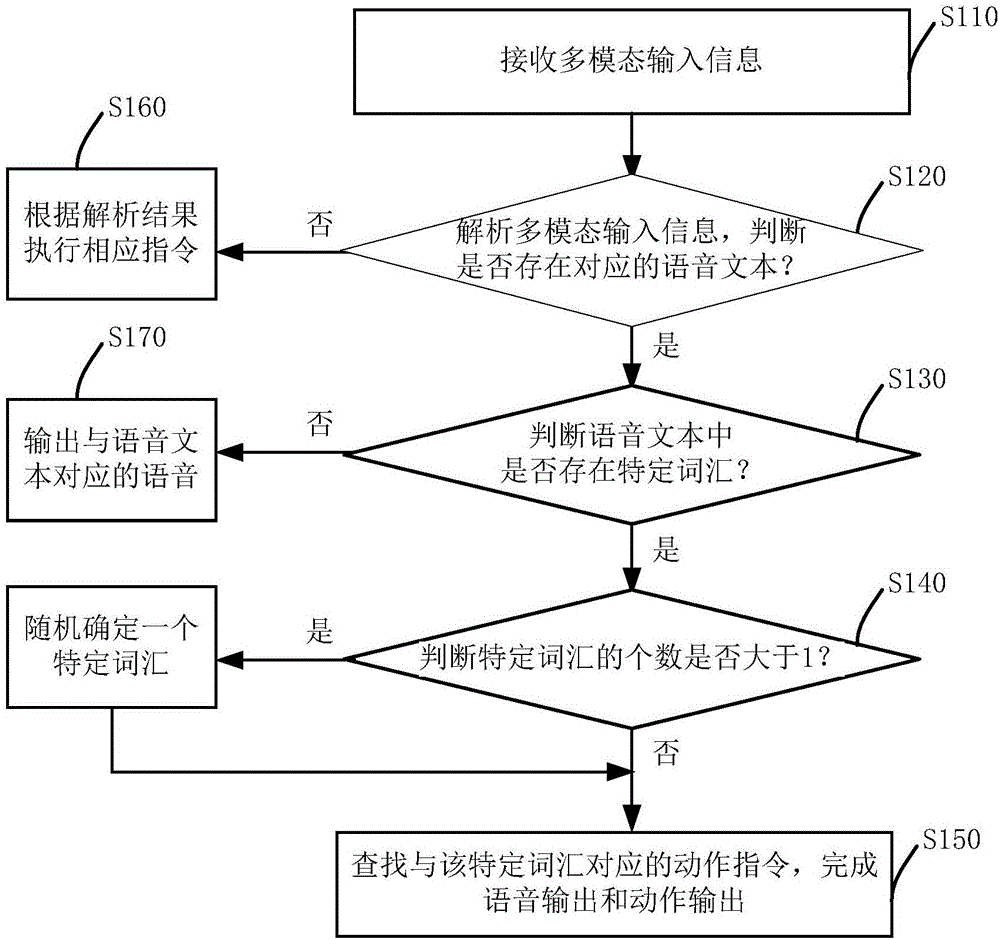

[0030] Figure 1 is a schematic flowchart of Embodiment 1 of the multimodal output method applied to an intelligent robot according to the present invention. The method in this embodiment mainly includes the following steps.

[0031] In step S110, the robot receives multimodal input information.

[0032] Specifically, while the user interacts with the robot, the robot may receive multimodal input information through a video collection unit, a voice collection unit, a human-computer interaction unit, and the like. The video collection unit may consist of an RGB-D camera; the voice collection unit must provide complete voice recording and playback functions; and the human-computer interaction unit may be a touch-input display screen through which the user enters multimodal information.

[0033] It should be noted that the multimodal input information mainly includes audio data, video data, image data, and program instructions for enab...
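As a minimal sketch, the input gathered in step S110 could be held in one record with a field per channel. The class and field names below are hypothetical, not taken from the patent, and the list of touch-screen commands merely stands in for the program instructions mentioned above.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class MultimodalInput:
    """Hypothetical container for one round of multimodal input (step S110)."""
    audio: Optional[bytes] = None                              # voice collection unit
    video_frames: List[bytes] = field(default_factory=list)    # RGB-D camera frames
    image: Optional[bytes] = None                              # a single still image, if any
    touch_commands: List[str] = field(default_factory=list)    # entered on the touch screen

    def modalities(self) -> List[str]:
        """Return the names of the channels that actually carry data."""
        present = []
        if self.audio:
            present.append("audio")
        if self.video_frames:
            present.append("video")
        if self.image:
            present.append("image")
        if self.touch_commands:
            present.append("touch")
        return present
```

For example, `MultimodalInput(audio=b"\x00\x01", touch_commands=["wave"]).modalities()` reports the audio and touch channels, which a later analysis step could use to decide what to process.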

Embodiment 2

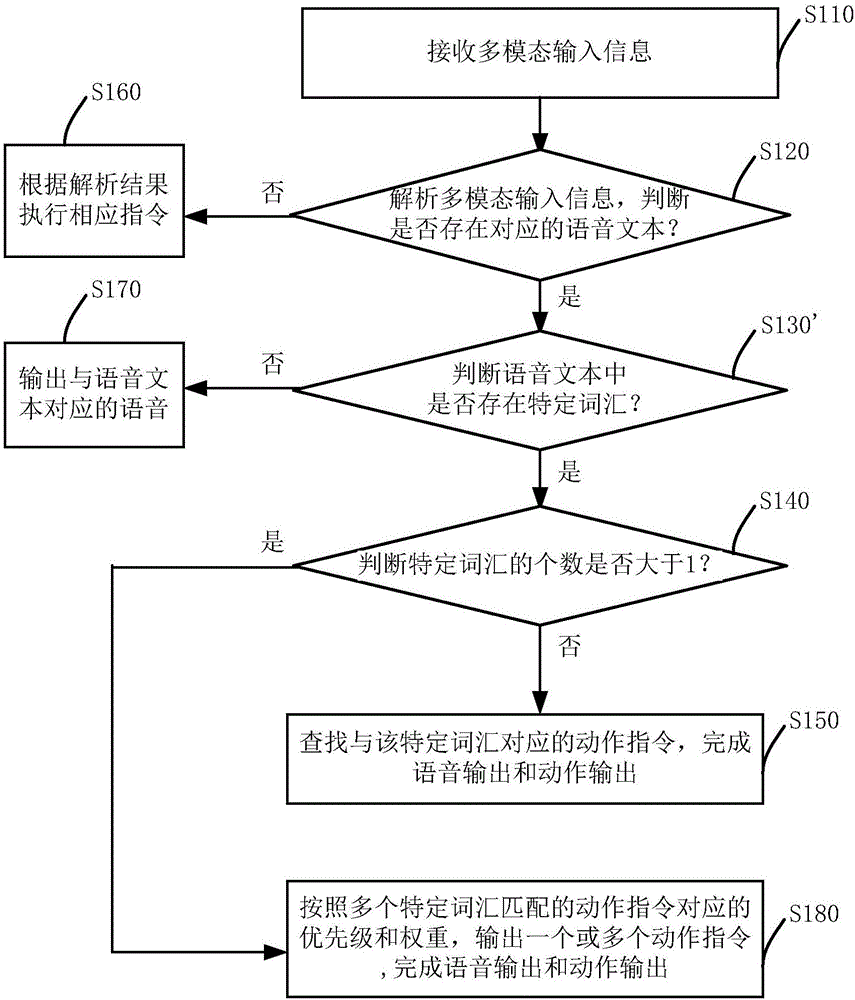

[0051] Figure 2 is a schematic flowchart of Embodiment 2 of the multimodal output method applied to an intelligent robot according to the present invention. The method of this embodiment mainly includes the following steps. Steps similar to those of Embodiment 1 are marked with the same reference symbols and their specific content is not described again; only the distinguishing steps are described in detail.

[0052] In step S110, the robot receives multimodal input information.

[0053] In step S120, the multimodal input information is analyzed, and the analysis result is used to determine whether corresponding speech text information exists. If so, step S130' is executed; otherwise, step S160 is executed to process the input according to the analysis result.
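The branch in step S120 can be sketched as a small dispatcher. This is only an illustration under an assumption: the hypothetical `analyze` function reads a ready-made `"transcript"` entry, whereas a real robot would run speech recognition on the captured audio at that point.

```python
from typing import Optional, Tuple


def analyze(multimodal_input: dict) -> Optional[str]:
    """Hypothetical analysis: return recognized speech text, or None if there is none."""
    # Assumption: speech recognition has already produced a "transcript" entry.
    text = multimodal_input.get("transcript", "")
    return text.strip() or None


def step_s120(multimodal_input: dict) -> Tuple[str, Optional[str]]:
    """Return (next_step, speech_text) according to the judgment in step S120."""
    speech_text = analyze(multimodal_input)
    if speech_text is not None:
        # Judgment "yes": speech text exists, so check it for specific vocabulary.
        return "S130'", speech_text
    # Judgment "no": handle the input according to the analysis result.
    return "S160", None
```

Returning the step label keeps the sketch close to the flowchart; in practice each branch would call the handler for that step directly.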

[0054] In step S130', it is determined whether the obtained speech text information contains specific vocabulary. If so, step S140 is executed; otherwise, step...
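The vocabulary check of step S130' amounts to a lookup of each recognized word in a set of trigger words. In the minimal sketch below, the word list is invented for illustration, and the regex tokenization assumes English text; Chinese speech text, for instance, would need a word segmenter instead.

```python
import re
from typing import Iterable, List

# Hypothetical "specific vocabulary": words that could be paired with a robot action.
SPECIFIC_VOCABULARY = frozenset({"hello", "goodbye", "yes", "no", "dance"})


def find_specific_vocabulary(speech_text: str,
                             vocabulary: Iterable[str] = SPECIFIC_VOCABULARY) -> List[str]:
    """Return the specific-vocabulary words in the speech text, in order of appearance."""
    vocab = set(vocabulary)
    # Naive English tokenization: lowercase runs of letters and apostrophes.
    words = re.findall(r"[a-z']+", speech_text.lower())
    return [w for w in words if w in vocab]


def contains_specific_vocabulary(speech_text: str) -> bool:
    """Judgment of step S130': True means step S140 would be executed next."""
    return bool(find_specific_vocabulary(speech_text))
```

With this word list, `find_specific_vocabulary("Hello there, want to dance?")` yields `["hello", "dance"]`, while a sentence with no trigger word makes `contains_specific_vocabulary` return `False`.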

Embodiment 3

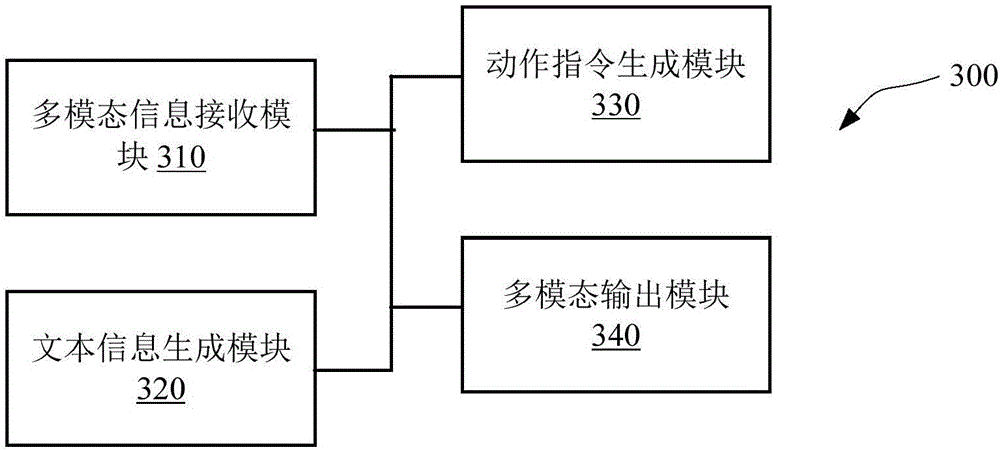

[0066] Figure 3 is a structural block diagram of a multimodal output device 300 applied to an intelligent robot according to an embodiment of the present application. As shown in Figure 3, the multimodal output device 300 of this embodiment mainly includes a multimodal information receiving module 310, a text information generating module 320, an action instruction generating module 330, and a multimodal output module 340.
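The four modules of device 300 can be sketched as a simple pipeline. This is a hypothetical illustration, not the patented implementation: the text-generation step assumes a ready-made `"transcript"` entry, the word-to-action dictionary is invented, and feeding the output of module 330 into module 340 is an assumption, since the text shown here only states how modules 320 and 330 are connected.

```python
from typing import Dict, List, Optional, Tuple


class MultimodalInformationReceivingModule:
    """Module 310: receives multimodal input information."""

    def receive(self, raw_input: dict) -> dict:
        # A real robot would read from the camera, microphone and touch screen here.
        return dict(raw_input)


class TextInformationGeneratingModule:
    """Module 320: analyzes the input and generates speech text information."""

    def generate(self, multimodal_input: dict) -> Optional[str]:
        # Hypothetical: assumes speech recognition already filled a "transcript" key.
        text = multimodal_input.get("transcript", "").strip()
        return text or None


class ActionInstructionGeneratingModule:
    """Module 330: maps specific vocabulary in the speech text to action instructions."""

    def __init__(self, vocab_to_action: Dict[str, str]) -> None:
        self.vocab_to_action = vocab_to_action

    def generate(self, speech_text: str) -> List[str]:
        words = speech_text.lower().split()
        return [self.vocab_to_action[w] for w in words if w in self.vocab_to_action]


class MultimodalOutputModule:
    """Module 340: emits the speech and the actions together."""

    def output(self, speech_text: str, actions: List[str]) -> Tuple[str, List[str]]:
        # Returned as a pair here; a robot would drive its speaker and actuators.
        return speech_text, actions


class MultimodalOutputDevice:
    """Device 300: chains modules 310 -> 320 -> 330 -> 340."""

    def __init__(self, vocab_to_action: Dict[str, str]) -> None:
        self.receiver = MultimodalInformationReceivingModule()
        self.text_generator = TextInformationGeneratingModule()
        self.action_generator = ActionInstructionGeneratingModule(vocab_to_action)
        self.output_module = MultimodalOutputModule()

    def process(self, raw_input: dict) -> Optional[Tuple[str, List[str]]]:
        multimodal_input = self.receiver.receive(raw_input)
        speech_text = self.text_generator.generate(multimodal_input)
        if speech_text is None:
            return None  # no speech text, so no speech-linked actions
        actions = self.action_generator.generate(speech_text)
        return self.output_module.output(speech_text, actions)
```

For example, `MultimodalOutputDevice({"hello": "wave_hand"}).process({"transcript": "Hello friend"})` returns the sentence together with `["wave_hand"]`. Keeping each module as its own class mirrors the block diagram and lets one stage, such as speech recognition, be swapped without touching the others.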

[0067] The multimodal information receiving module 310 receives multimodal input information.

[0068] The text information generating module 320 is connected to the multimodal information receiving module 310, analyzes the multimodal input information, and generates corresponding speech text information according to the analysis result.

[0069] The action instruction generating module 330 is connected to the text information generating module 320 and extracts specific vocabulary from the speech text information, ...
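The passage is cut off before it says how the extracted vocabulary is turned into timed output. Since the stated aim is to avoid mismatched voice and action output, one plausible approach, offered purely as an assumption, is to trigger each action at the moment its word is spoken. The sketch below estimates that moment with a fixed, hypothetical `seconds_per_word` rate; a real text-to-speech engine would supply per-word timestamps instead.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class ScheduledAction:
    word: str       # the specific vocabulary item that triggers the action
    action: str     # hypothetical action instruction name
    start_s: float  # seconds after speech playback begins


def schedule_actions(speech_text: str, vocab_to_action: Dict[str, str],
                     seconds_per_word: float = 0.4) -> List[ScheduledAction]:
    """Attach each action to the estimated time its trigger word is spoken.

    Assumes every word takes the same time to speak (seconds_per_word).
    """
    schedule = []
    for index, word in enumerate(speech_text.lower().split()):
        action = vocab_to_action.get(word)
        if action is not None:
            start = round(index * seconds_per_word, 3)
            schedule.append(ScheduledAction(word, action, start))
    return schedule
```

With `{"hello": "wave", "nice": "nod"}`, the sentence `"nice to meet you hello"` schedules a nod at 0.0 s and a wave at 1.6 s, so each gesture coincides with its word rather than firing before or after the whole utterance.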


© 2025 PatSnap. All rights reserved.