Image content marking method and apparatus thereof

An image content labeling technique, applied in the field of image processing, that addresses problems such as slow completion speed and achieves the effects of reducing workload, ensuring accuracy, and improving completion efficiency.


Examples

Embodiment 1

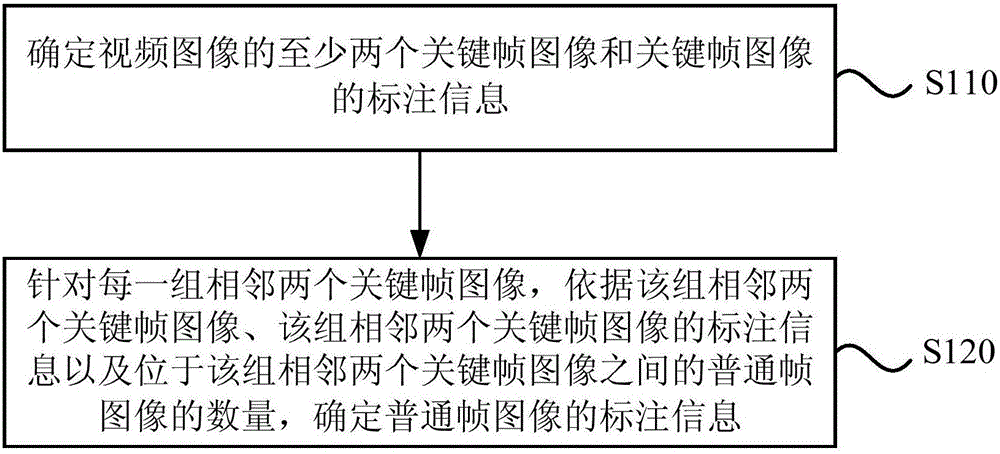

[0018] Figure 1 is a flow chart of an image content labeling method provided in Embodiment 1 of the present invention. This embodiment is applicable to collecting video image training sets. The method can be executed by an image content labeling device, which can be implemented in software and/or hardware. As shown in Figure 1, the method includes:

[0019] S110. Determine at least two key frame images of the video image and the annotation information of the key frame images.

[0020] Video images can be moving images in various storage formats: digital video formats, including DVD, QuickTime and MPEG-4, and videotape formats, including VHS and Betamax. A video image includes multiple key frame images and the normal frame images that lie between adjacent key frame images.
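The relationship between key frames and the frames lying between them can be sketched as follows. This is a minimal illustration, assuming frames are addressed by integer index and that the key frame indices have already been chosen; the function name `group_normal_frames` is hypothetical and does not come from the patent.

```python
from typing import List, Tuple


def group_normal_frames(key_indices: List[int]) -> List[Tuple[int, int, List[int]]]:
    """For each pair of adjacent key frames, list the frames between them.

    Assumption: frames are identified by integer indices, and at least two
    key frames are supplied, as step S110 requires.
    """
    if len(key_indices) < 2:
        raise ValueError("at least two key frames are required")
    keys = sorted(key_indices)
    groups = []
    for start, end in zip(keys, keys[1:]):
        # The in-between frames are the indices strictly between the two key frames.
        groups.append((start, end, list(range(start + 1, end))))
    return groups
```

For example, key frames at indices 0, 4 and 6 would yield two groups, one containing frames 1 to 3 and one containing frame 5.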

[0021] The key frame image is adjusted and determined according to the generation efficiency of the annotation information, and is usually determine...

Embodiment 2

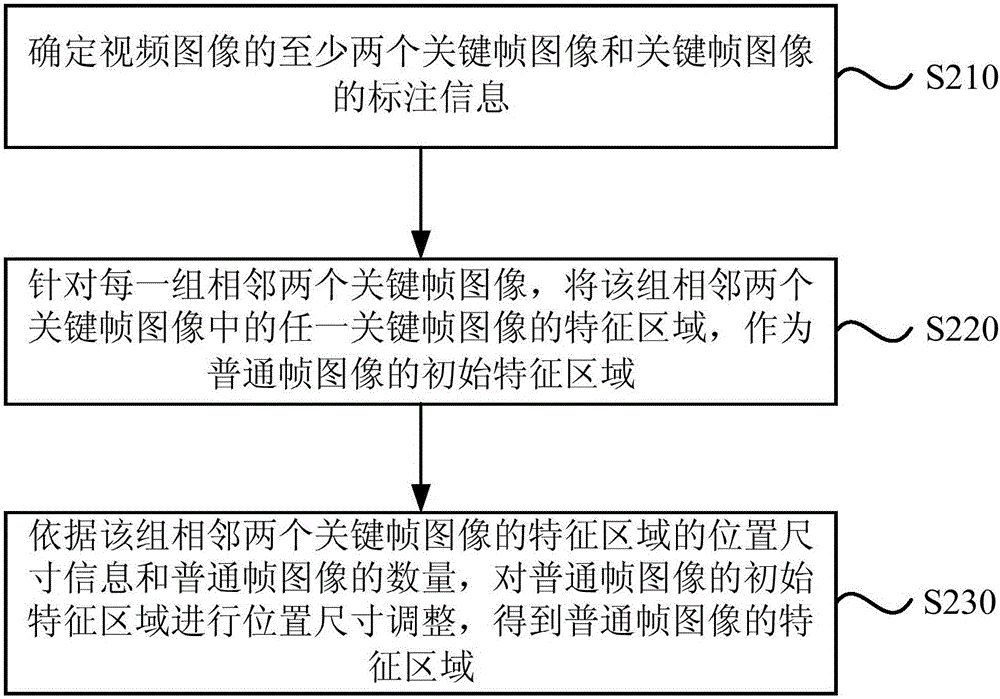

[0033] Figure 2 is a schematic flow chart of an image content labeling method in Embodiment 2 of the present invention. On the basis of Embodiment 1, this embodiment further elaborates how the annotation information of normal frame images is generated. As shown in Figure 2, the method includes:

[0034] S210. Determine at least two key frame images of the video image and annotation information of the key frame images.

[0035] The annotation information of a key frame image also includes a feature region. The content inside the feature region is the object to be labeled. A feature region can have an arbitrary shape; for ease of operation, it is preferably a regular polygon.

[0036] S220. For each pair of adjacent key frame images, use the feature region of either key frame image in the pair as the initial feature region of the normal frame image.

[0037] The initial feature area can be obtained by...
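Step S220 can be illustrated with a short sketch. It assumes a feature region is stored as a tuple of polygon vertices and that key frame regions are kept in a dictionary keyed by frame index; the type `FeatureRegion`, the function `init_feature_regions` and the `use_start` flag are hypothetical names introduced only for this example.

```python
from dataclasses import dataclass
from typing import Dict, Tuple

Point = Tuple[float, float]


@dataclass(frozen=True)
class FeatureRegion:
    """Polygonal region enclosing the object to be labeled (hypothetical type)."""
    vertices: Tuple[Point, ...]


def init_feature_regions(
    key_regions: Dict[int, FeatureRegion],
    start: int,
    end: int,
    use_start: bool = True,
) -> Dict[int, FeatureRegion]:
    """Give every frame between two adjacent key frames an initial region.

    Step S220 allows the region of either key frame in the pair;
    `use_start` selects which one is copied.
    """
    source = key_regions[start] if use_start else key_regions[end]
    return {i: source for i in range(start + 1, end)}
```

The copied region is only a starting point; how it is refined afterwards is not covered by this sketch.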

Embodiment 3

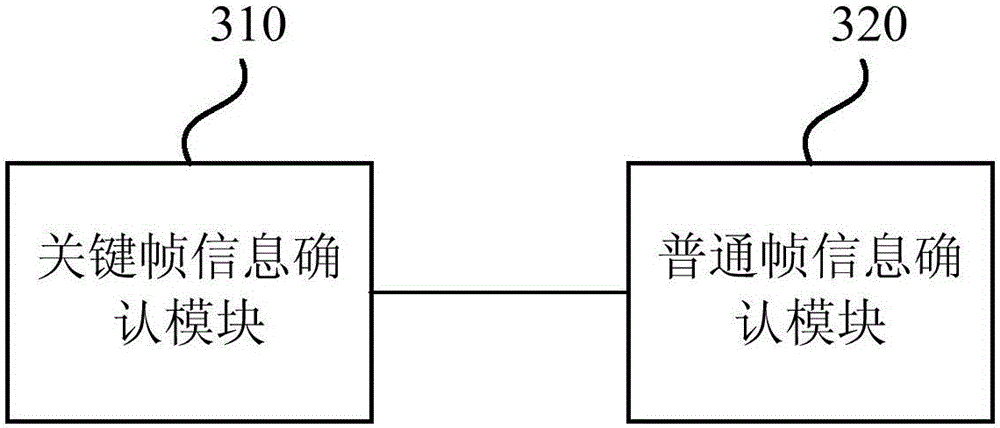

[0049] Figure 3 is a schematic structural diagram of an image content labeling device provided by Embodiment 3 of the present invention. As shown in Figure 3, the image content labeling device includes a key frame information confirmation module 310 and a normal frame information confirmation module 320.

[0050] The key frame information confirmation module 310 is configured to determine at least two key frame images of the video image and the annotation information of the key frame images.

[0051] The normal frame information confirmation module 320 is configured to, for each pair of adjacent key frame images, determine the annotation information of the normal frame images according to the pair of adjacent key frame images, the annotation information of that pair, and the number of normal frame images between the two key frame images.
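The excerpt does not state how module 320 combines these inputs. One common approach, shown here purely as an assumption and not as the patented method, is to linearly interpolate an axis-aligned bounding box between the two key frames, with the number of in-between frames setting the step size. The names `Box` and `interpolate_boxes` are hypothetical.

```python
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max)


def interpolate_boxes(first: Box, last: Box, num_between: int) -> List[Box]:
    """Linearly interpolate a box across the frames between two key frames.

    Assumption: linear interpolation is a stand-in technique chosen for
    illustration; it is not taken from the patent excerpt.
    """
    if num_between < 0:
        raise ValueError("num_between must be non-negative")
    boxes = []
    for k in range(1, num_between + 1):
        t = k / (num_between + 1)  # fraction of the way from first to last
        boxes.append(tuple(a + t * (b - a) for a, b in zip(first, last)))
    return boxes
```

With three in-between frames, the boxes sit at one quarter, one half and three quarters of the way from the first key frame's box to the second's.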

[0052] Further, the normal frame information confirmation...
