Target object recognition and positioning method based on color images and depth images

A target-object and color-image technology, applied in scene recognition, character and pattern recognition, instruments, and related fields. It addresses problems such as poor discrimination of target objects and false detection of feature points, and achieves high real-time performance and improved efficiency.


Embodiment Construction

[0030] The present invention will be further described below in conjunction with specific drawings.

[0031] The method for identifying and locating a target object based on a color image and a depth image of the present invention comprises the following steps:

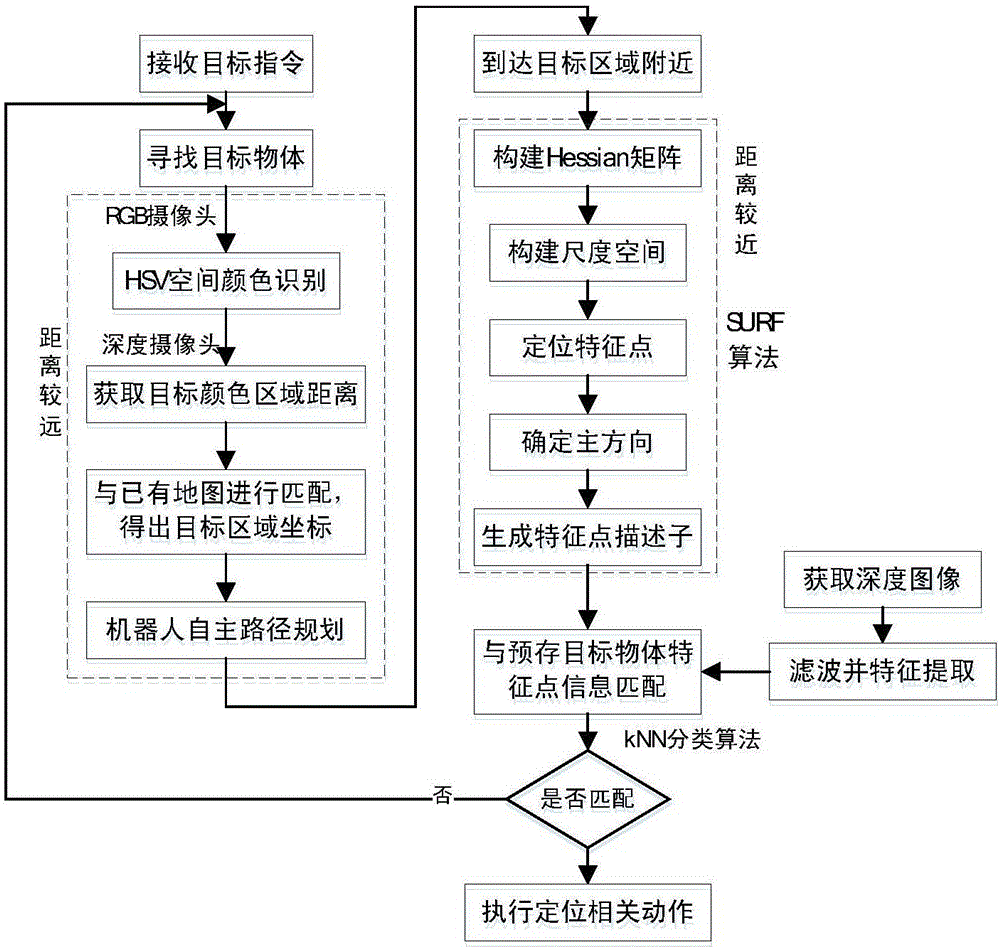

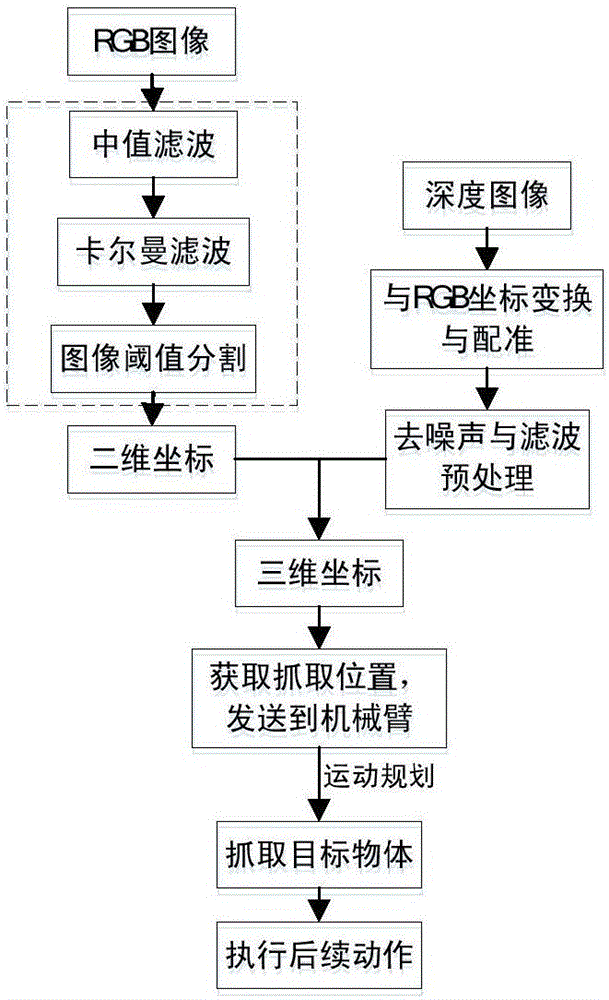

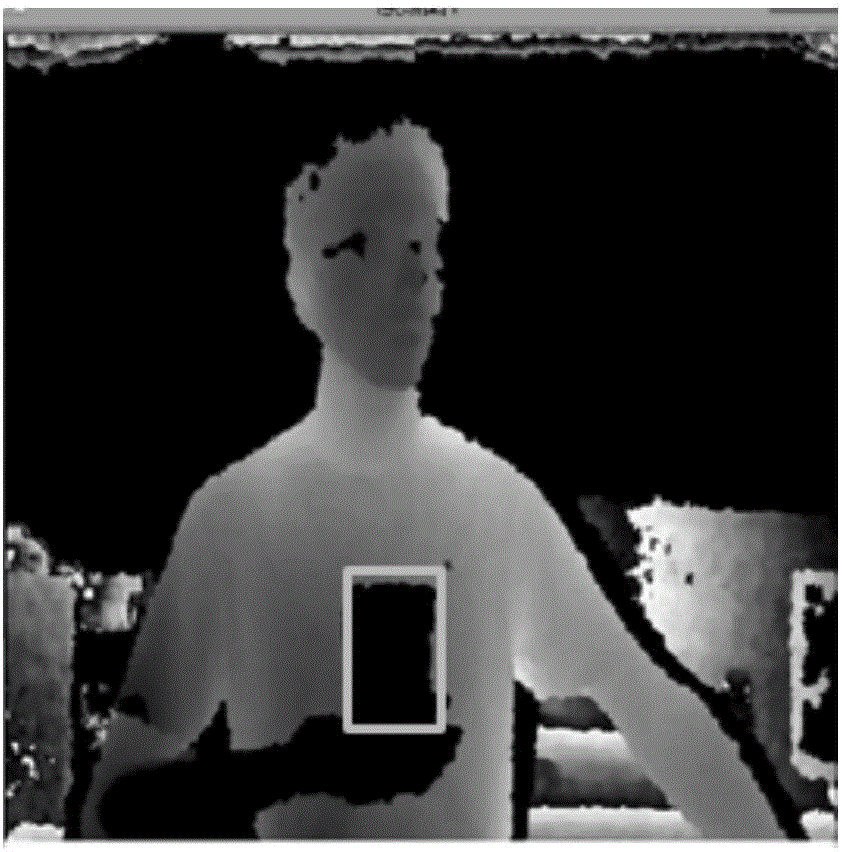

[0032] (1) The mobile robot identifies the target object by using HSV color recognition at long range and SURF feature point detection at short range, and by removing obstacles with the depth image;

[0033] Specifically, as shown in Figure 1: to overcome the limited working space caused by a fixed camera position, the camera and the robotic arm are mounted on the mobile platform and move together with the robot. On receiving an instruction to grab an object, the robot searches the surrounding environment for the target object with the camera. When the robot is far from the target object, the feature points of the target object are not prominent enough, and the recognition accuracy...
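The range-dependent recognition in step (1) can be illustrated with a minimal sketch. It is not the patented implementation: the distance threshold, the hue band, the depth tolerance, and all function names are hypothetical, since the text gives no numeric values. It assumes that "removing obstacles" means discarding color-matched pixels whose depth differs from the expected target depth. The short-range SURF branch is only named, not implemented, to keep the example free of third-party libraries.

```python
import colorsys

# Hypothetical tuning values; the source text does not specify any of them.
FAR_RANGE_M = 1.5          # beyond this distance use color, within it use feature points
HUE_RANGE = (0.55, 0.70)   # target hue band (bluish) on colorsys's 0..1 hue scale
MIN_SATURATION = 0.4
MIN_VALUE = 0.2
DEPTH_TOLERANCE_M = 0.3    # pixels farther than this from the target depth are rejected


def choose_detector(distance_m):
    """Pick the recognition mode from the estimated distance to the target."""
    return "hsv_color" if distance_m > FAR_RANGE_M else "surf_features"


def hsv_mask(rgb_image):
    """Return a boolean mask of pixels whose HSV values fall in the target band."""
    mask = []
    for row in rgb_image:
        mask_row = []
        for r, g, b in row:
            h, s, v = colorsys.rgb_to_hsv(r / 255.0, g / 255.0, b / 255.0)
            in_band = HUE_RANGE[0] <= h <= HUE_RANGE[1]
            mask_row.append(in_band and s >= MIN_SATURATION and v >= MIN_VALUE)
        mask.append(mask_row)
    return mask


def depth_filter(mask, depth_image, target_depth_m):
    """Keep only masked pixels whose depth is close to the expected target depth."""
    return [
        [m and abs(d - target_depth_m) <= DEPTH_TOLERANCE_M
         for m, d in zip(mask_row, depth_row)]
        for mask_row, depth_row in zip(mask, depth_image)
    ]


def centroid(mask):
    """Return the (row, col) centroid of the True pixels, or None if there are none."""
    points = [(i, j) for i, row in enumerate(mask) for j, m in enumerate(row) if m]
    if not points:
        return None
    n = len(points)
    return (sum(p[0] for p in points) / n, sum(p[1] for p in points) / n)


# Tiny 2x2 example: two blue pixels, one of which lies on a nearer obstacle.
image = [[(0, 0, 255), (255, 0, 0)],
         [(0, 0, 255), (10, 10, 10)]]
depth = [[2.0, 2.0],
         [0.5, 2.0]]  # metres

color_mask = hsv_mask(image)
target_mask = depth_filter(color_mask, depth, target_depth_m=2.0)
target_pixel = centroid(target_mask)
```

In this toy input both blue pixels pass the color test, but the one at 0.5 m is rejected by the depth check, so only the pixel at the expected depth contributes to the centroid. A real system would run the same logic on full camera frames with an image-processing library.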

