Tracking-loss re-examination method for weak and small moving targets based on NCC matching frame difference

A target tracking technology applied to tracking-loss re-examination of weak and small moving targets based on NCC matching frame difference. It addresses problems such as background clutter and failure of continuous target tracking, and achieves high time efficiency, effective and fast tracking, and reduced manual involvement.



Embodiment 1

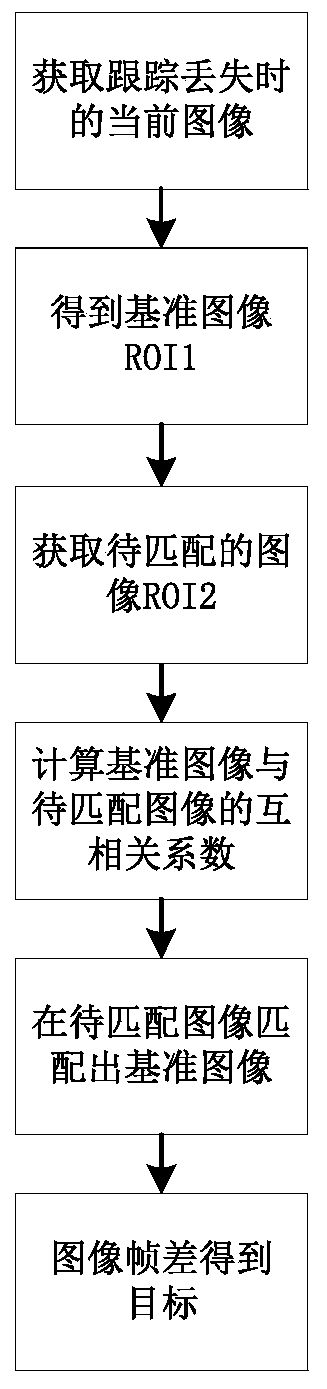

[0039] Embodiment 1. A tracking-loss re-examination method for weak and small moving targets based on NCC matching frame difference. The process is shown in Figure 1 and includes the following steps:

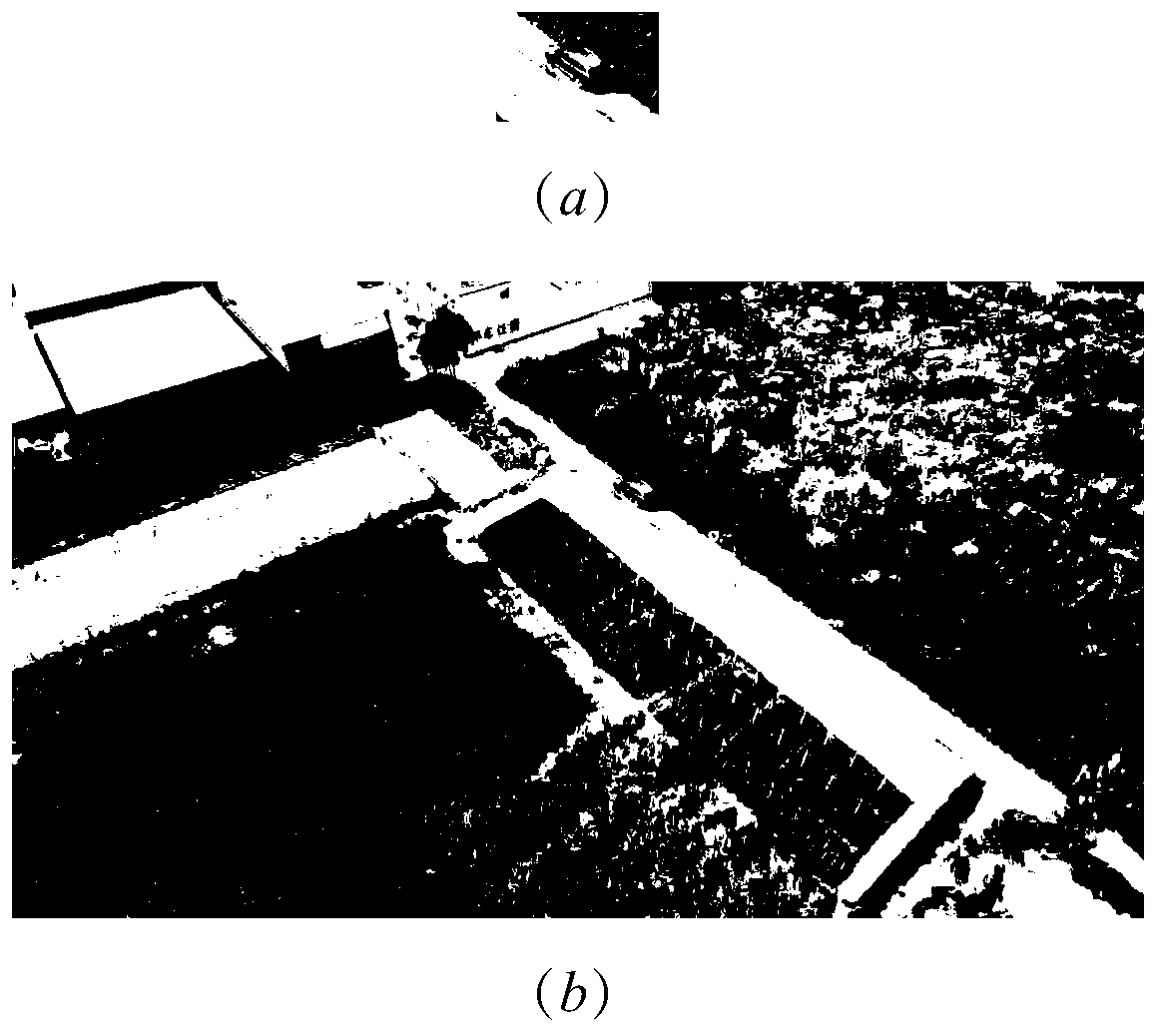

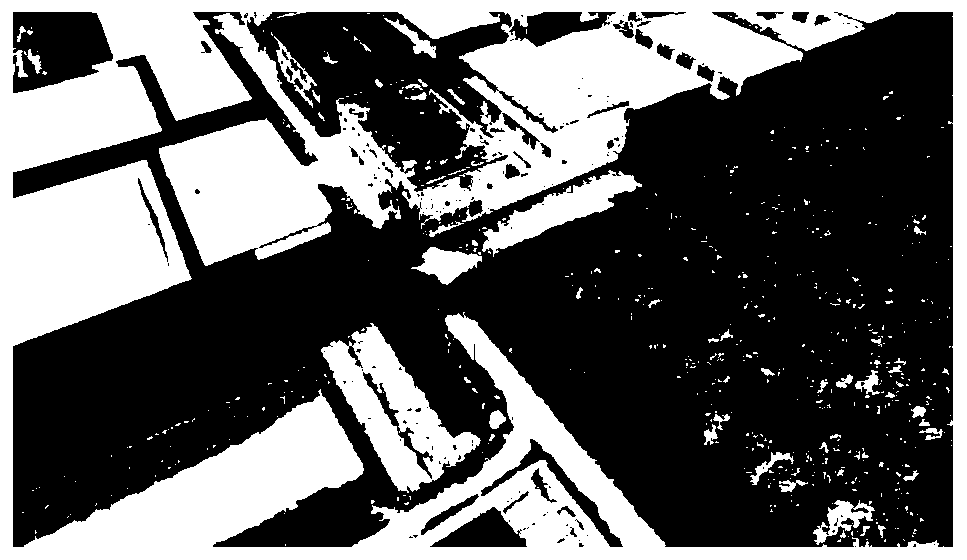

[0040] S1: Acquire video data consisting of consecutive frame images. In this embodiment, a drone shoots the video autonomously; the captured video is color data with an image size of 720×1280 pixels, the flying height of the drone is 103 meters, and the video frame rate is 100 frames per second. The target to be tracked and the video data to be processed in this embodiment are shown in Figure 2 (a) and (b).

[0041] S2: Use a target tracking algorithm to track the target across the consecutive frame images. For the consecutive frames in which the target is tracked, calculate the multi-dimensional features of the tracked target area in each frame image as normal features, and calculate the normal feature of each frame. The offset relati...
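The method is named after two standard operations, NCC (normalized cross-correlation) matching and frame differencing. The steps that apply them are not visible in the text above, so the following is only a minimal sketch of the two operations themselves and not the procedure of this embodiment. It assumes grayscale images stored as nested lists, the zero-mean form of NCC, and a fixed difference threshold; the function names `ncc` and `frame_difference` are hypothetical.

```python
import math
from typing import List, Sequence

Image = Sequence[Sequence[float]]  # assumed representation: rows of gray values


def ncc(a: Image, b: Image) -> float:
    """Zero-mean normalized cross-correlation of two equally sized patches.

    Returns a value in [-1, 1]; 1 means the patches match up to a gain
    and offset in brightness. Returns 0.0 if either patch is constant.
    """
    fa = [v for row in a for v in row]
    fb = [v for row in b for v in row]
    if not fa or len(fa) != len(fb):
        raise ValueError("patches must be non-empty and of equal size")
    mean_a = sum(fa) / len(fa)
    mean_b = sum(fb) / len(fb)
    num = sum((x - mean_a) * (y - mean_b) for x, y in zip(fa, fb))
    den = math.sqrt(sum((x - mean_a) ** 2 for x in fa)
                    * sum((y - mean_b) ** 2 for y in fb))
    return num / den if den > 0 else 0.0


def frame_difference(prev: Image, curr: Image, threshold: float) -> List[List[int]]:
    """Binary motion mask: 1 where the absolute gray-level change between
    two consecutive frames exceeds the (assumed) threshold, else 0."""
    return [[1 if abs(c - p) > threshold else 0 for p, c in zip(prow, crow)]
            for prow, crow in zip(prev, curr)]
```

In a sketch like this, a high NCC score between a stored target template and a candidate patch suggests a match, while the frame-difference mask highlights pixels that changed between consecutive frames.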

Embodiment 2

[0059] Embodiment 2. Building on the technical solution described in Embodiment 1, the multi-dimensional features used in this embodiment include length, width, aspect ratio, duty cycle, area of the minimum bounding rectangle, spatial expansion degree, compactness, and symmetry.

[0060] The length, width, and aspect ratio are the length, width, and aspect ratio of the target area or the candidate area.

[0061] The duty cycle is the ratio of the area of the target area or the candidate area to the area of its minimum bounding rectangle.

[0062] The area of the minimum bounding rectangle is the area of the minimum bounding rectangle of the target area or the candidate area.
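The length, width, aspect ratio, duty cycle, and bounding-rectangle area defined above can be illustrated with a short sketch. It assumes the area is given as a collection of (row, column) pixel coordinates, takes the length as the longer side and the width as the shorter side, and approximates the minimum bounding rectangle by the axis-aligned bounding box. These are simplifying assumptions, and the function name `shape_features` is hypothetical.

```python
from typing import Dict, Iterable, Tuple

Pixel = Tuple[int, int]  # (row, column) of one pixel in the area


def shape_features(pixels: Iterable[Pixel]) -> Dict[str, float]:
    """Basic shape features of a target or candidate area.

    Assumption: the minimum bounding rectangle is approximated by the
    axis-aligned bounding box of the pixel set.
    """
    pts = set(pixels)
    if not pts:
        raise ValueError("area must contain at least one pixel")
    rows = [r for r, _ in pts]
    cols = [c for _, c in pts]
    height = max(rows) - min(rows) + 1   # bounding-box extent in rows
    breadth = max(cols) - min(cols) + 1  # bounding-box extent in columns
    length = max(height, breadth)        # assumed: longer side
    width = min(height, breadth)         # assumed: shorter side
    rect_area = height * breadth
    return {
        "length": float(length),
        "width": float(width),
        "aspect_ratio": length / width,
        "rect_area": float(rect_area),
        # duty cycle: area of the region over area of its bounding rectangle
        "duty_cycle": len(pts) / rect_area,
    }
```

For a solid rectangular area the duty cycle is 1; it falls below 1 as the area fills less of its bounding rectangle.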

[0063] The spatial expansion degree is the sum of the distances from all points in the target area or the candidate area to the main axis of the area, normalized by the length of the main axis.
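The spatial expansion degree can be sketched as follows. The text does not state how the main axis or its length is obtained, so this sketch assumes the main axis is the principal axis through the centroid (from second-order central moments) and that its length is the extent of the area projected onto that axis. The function name `spatial_expansion` is hypothetical.

```python
import math
from typing import Iterable, Tuple

Pixel = Tuple[int, int]  # (row, column) of one pixel in the area


def spatial_expansion(pixels: Iterable[Pixel]) -> float:
    """Sum of point-to-main-axis distances divided by the main-axis length.

    Assumptions: main axis = principal axis through the centroid;
    axis length = extent of the area projected onto that axis.
    """
    pts = list(set(pixels))
    if not pts:
        raise ValueError("area must contain at least one pixel")
    n = len(pts)
    cy = sum(r for r, _ in pts) / n
    cx = sum(c for _, c in pts) / n
    # Second-order central moments (x = column, y = row).
    mu20 = sum((c - cx) ** 2 for _, c in pts)
    mu02 = sum((r - cy) ** 2 for r, _ in pts)
    mu11 = sum((c - cx) * (r - cy) for r, c in pts)
    theta = 0.5 * math.atan2(2 * mu11, mu20 - mu02)  # axis orientation
    ux, uy = math.cos(theta), math.sin(theta)
    # Signed position along the axis and perpendicular distance to it.
    along = [(c - cx) * ux + (r - cy) * uy for r, c in pts]
    dist = [abs(-(c - cx) * uy + (r - cy) * ux) for r, c in pts]
    axis_len = max(along) - min(along)
    return sum(dist) / axis_len if axis_len > 0 else 0.0
```

Under these assumptions, a thin area lying along its main axis gives a value near 0, and the value grows as the area spreads away from the axis.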

[0064] Compactness is the degree to which the shape of the target area or the candidate area deviates...
