Saturation-based quality evaluation method for color multi-exposure fusion images

The method belongs to the field of image fusion quality evaluation and is used in image enhancement, image analysis, and image data processing. It addresses problems such as information loss and the neglect of background information, image texture information, and the perceptual characteristics of the human eye, and it achieves a good degree of preservation.


Detailed Description of the Embodiments

[0027] The present invention will be described in detail below with reference to the accompanying drawings and examples.

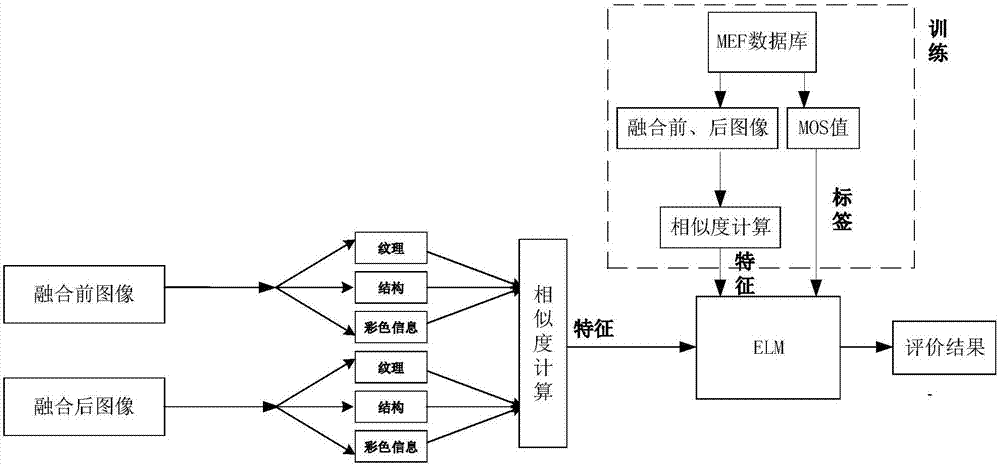

[0028] The invention provides a saturation-based method for evaluating the quality of color multi-exposure fusion images. The specific process is shown in Figure 1.

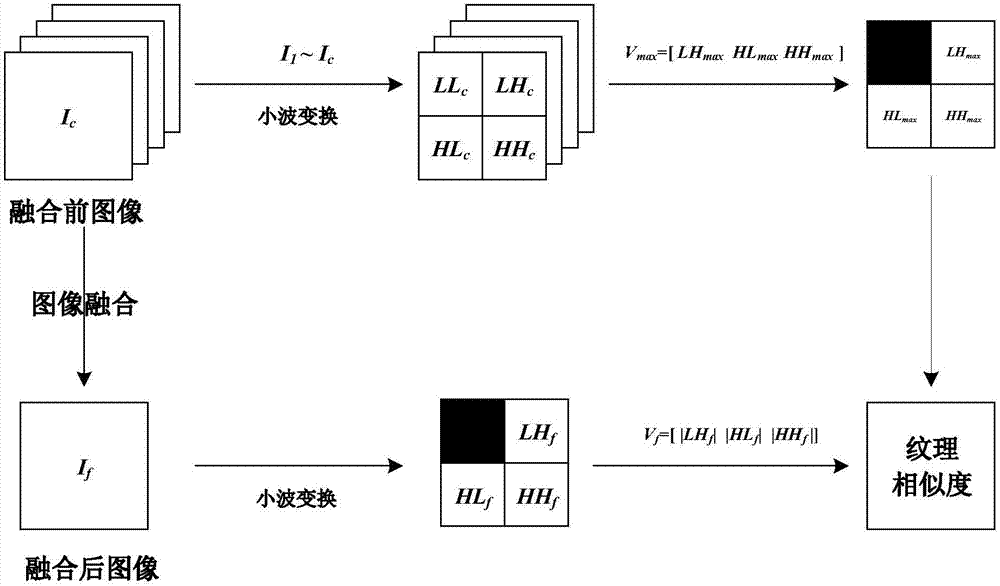

[0029] Step 1. The multi-exposure images and their fusion images in the MEF (Multi-exposure Image Fusion) database are used as training samples. Texture information, structure information, and color information are extracted from the multi-exposure images and the fusion images based on saturation and wavelet coefficients. From the texture, structure, and color information of the images before and after fusion, the texture similarity, structure similarity, and color similarity are calculated respectively. The texture similarity, structure similarity, and color similarity are used as feature values, combined with the given e...
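As an illustration only, the feature extraction in Step 1 could be sketched as follows. The excerpt does not give the formulas, so everything here is an assumption: saturation is taken as the HSV-style ratio, the wavelet coefficients come from a one-level Haar transform, texture is read from the detail sub-bands, structure from the approximation sub-band, and similarity is an SSIM-style ratio. The function names `saturation`, `haar_level1`, `similarity`, and `feature_vector` are hypothetical, and the sketch compares the fusion image with a single source exposure rather than the full exposure stack.

```python
import numpy as np

def saturation(rgb):
    """HSV-style saturation of an RGB image with values in [0, 1] (assumed definition)."""
    mx = rgb.max(axis=-1)
    mn = rgb.min(axis=-1)
    # Black pixels (mx == 0) get saturation 0 instead of dividing by zero.
    return np.where(mx > 0, (mx - mn) / np.where(mx > 0, mx, 1.0), 0.0)

def haar_level1(gray):
    """One-level 2-D Haar transform; returns (approx, (horiz, vert, diag))."""
    # Crop to even dimensions so the image splits into 2x2 blocks.
    g = gray[: gray.shape[0] // 2 * 2, : gray.shape[1] // 2 * 2]
    a, b = g[0::2, 0::2], g[0::2, 1::2]
    c, d = g[1::2, 0::2], g[1::2, 1::2]
    approx = (a + b + c + d) / 2.0
    horiz = (a + b - c - d) / 2.0
    vert = (a - b + c - d) / 2.0
    diag = (a - b - c + d) / 2.0
    return approx, (horiz, vert, diag)

def similarity(x, y, c=1e-4):
    """Mean of the pointwise map (2xy + c) / (x^2 + y^2 + c); equals 1 when x == y."""
    return float(np.mean((2 * x * y + c) / (x ** 2 + y ** 2 + c)))

def feature_vector(source_rgb, fused_rgb):
    """Return [texture, structure, color] similarities for one source/fusion pair."""
    approx_s, details_s = haar_level1(source_rgb.mean(axis=-1))
    approx_f, details_f = haar_level1(fused_rgb.mean(axis=-1))
    # Texture (assumption): magnitude of the three detail sub-bands.
    tex_s = np.sqrt(sum(band ** 2 for band in details_s))
    tex_f = np.sqrt(sum(band ** 2 for band in details_f))
    return np.array([
        similarity(tex_s, tex_f),                                # texture similarity
        similarity(approx_s, approx_f),                          # structure similarity
        similarity(saturation(source_rgb), saturation(fused_rgb)),  # color similarity
    ])
```

Under these assumptions each similarity lies at or below 1 and reaches 1 only when the compared maps are identical, so the three values can serve directly as a feature vector for one source/fusion pair.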
