Approximate-computation-based binary weight convolution neural network hardware accelerator calculating module

A binary-weight convolution hardware accelerator technology, applied in fields such as biological neural network models and physical implementation, can address problems such as a limited power budget, and achieves faster computation, small area, and low power consumption.


Embodiment Construction

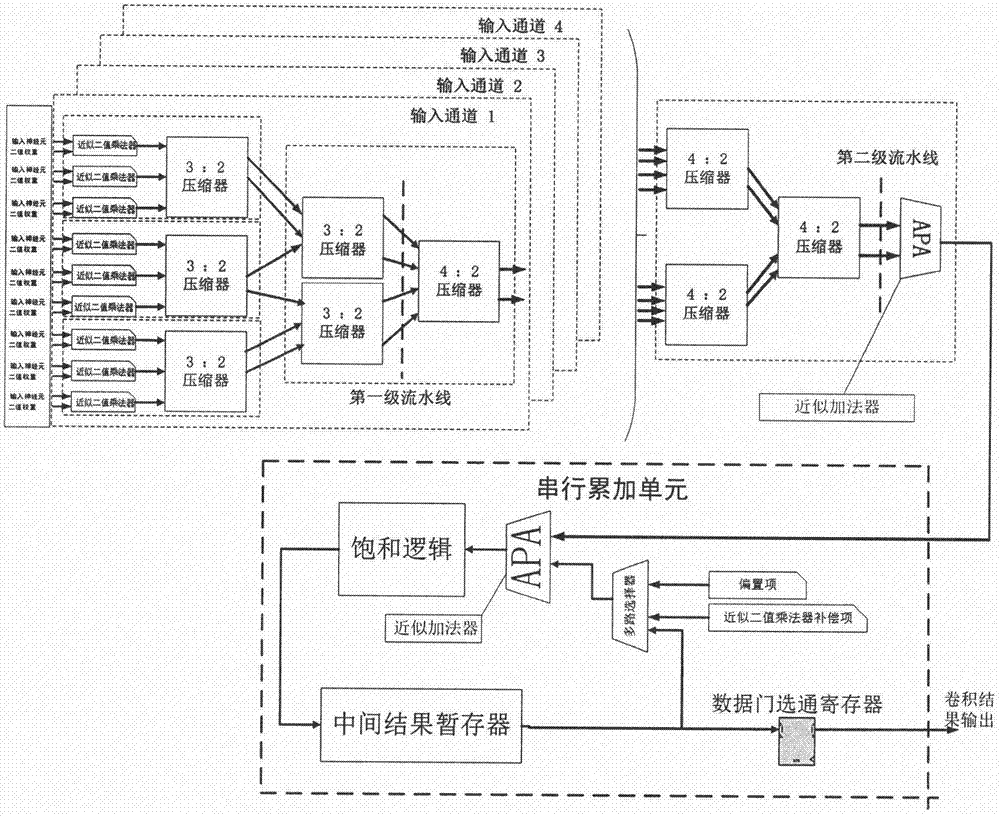

[0034] Embodiments of the invention are described in detail below, and examples of them are illustrated in the accompanying drawings, in which the same name is used throughout to refer to modules with the same or similar functionality. The implementation example described below with reference to the accompanying drawings uses a convolution kernel size of 3×3 and sets the number of parallel input channels to 4. It is intended to explain the present invention and should not be construed as limiting it.
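To make the example configuration concrete, the following is a minimal software sketch of what a binary-weight convolution accumulates for one output value with a 3×3 kernel and 4 parallel input channels. It illustrates only the general property of binary weights (each weight is +1 or -1, so every multiplication reduces to an addition or a subtraction). It does not model the approximate-computation scheme or the hardware structure of the invention. The function names, the data layout, and the encoding of weights as bits (1 for +1, 0 for -1) are assumptions made for illustration.

```python
# Illustrative sketch only: names, layout, and weight encoding are assumptions,
# not taken from the patent text.
KERNEL = 3      # 3x3 convolution kernel, as in the described example
CHANNELS = 4    # number of parallel input channels, as in the described example


def binary_weight_window_sum(window, weight_bits):
    """Accumulate one 3x3 window over 4 input channels with +1/-1 weights.

    window[c][i][j]      : integer activation for channel c at kernel position (i, j)
    weight_bits[c][i][j] : 1 encodes weight +1, 0 encodes weight -1 (assumed encoding)
    """
    acc = 0
    for c in range(CHANNELS):
        for i in range(KERNEL):
            for j in range(KERNEL):
                x = window[c][i][j]
                # A binary weight needs no multiplier: add for +1, subtract for -1.
                acc += x if weight_bits[c][i][j] else -x
    return acc


def reference_window_sum(window, weight_bits):
    """Same result computed with explicit multiplications, for comparison."""
    return sum(
        window[c][i][j] * (1 if weight_bits[c][i][j] else -1)
        for c in range(CHANNELS)
        for i in range(KERNEL)
        for j in range(KERNEL)
    )


# Small deterministic example: activations 0..35 and an alternating weight pattern.
window = [[[c * 9 + i * 3 + j for j in range(KERNEL)] for i in range(KERNEL)]
          for c in range(CHANNELS)]
weight_bits = [[[(c + i + j) % 2 for j in range(KERNEL)] for i in range(KERNEL)]
               for c in range(CHANNELS)]

result = binary_weight_window_sum(window, weight_bits)
# The add/subtract form matches the multiply-accumulate form exactly.
assert result == reference_window_sum(window, weight_bits)
```

In this sketch the 36 additions and subtractions (4 channels × 9 kernel positions) stand in for the 36 multiply-accumulate operations that a full-precision convolution would need for the same window.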

[0035] In addition, the terms "first" and "second" are used for descriptive purposes only and should not be understood as indicating or implying relative importance, or as implying the number of the technical features indicated. Thus, a feature defined as "first" or "second" may explicitly or implicitly include one or more of these features. In the description of the present invention, "plurality" means two or more, unless otherwise specifically...
