BP neural network parallelization method for multi-core computing environment

The invention relates to BP neural networks and multi-core computing environments, and is applied in the field of BP neural network parallelization. It addresses problems such as excessive synchronization and limited efficiency gains, and achieves reduced cache replacement, improved parallelism, and fuller use of the hardware's performance.

Embodiment Construction

[0061] To make the objectives, technical solutions, and advantages of the present invention clearer, the invention is described in further detail below with reference to the accompanying drawings and specific embodiments.

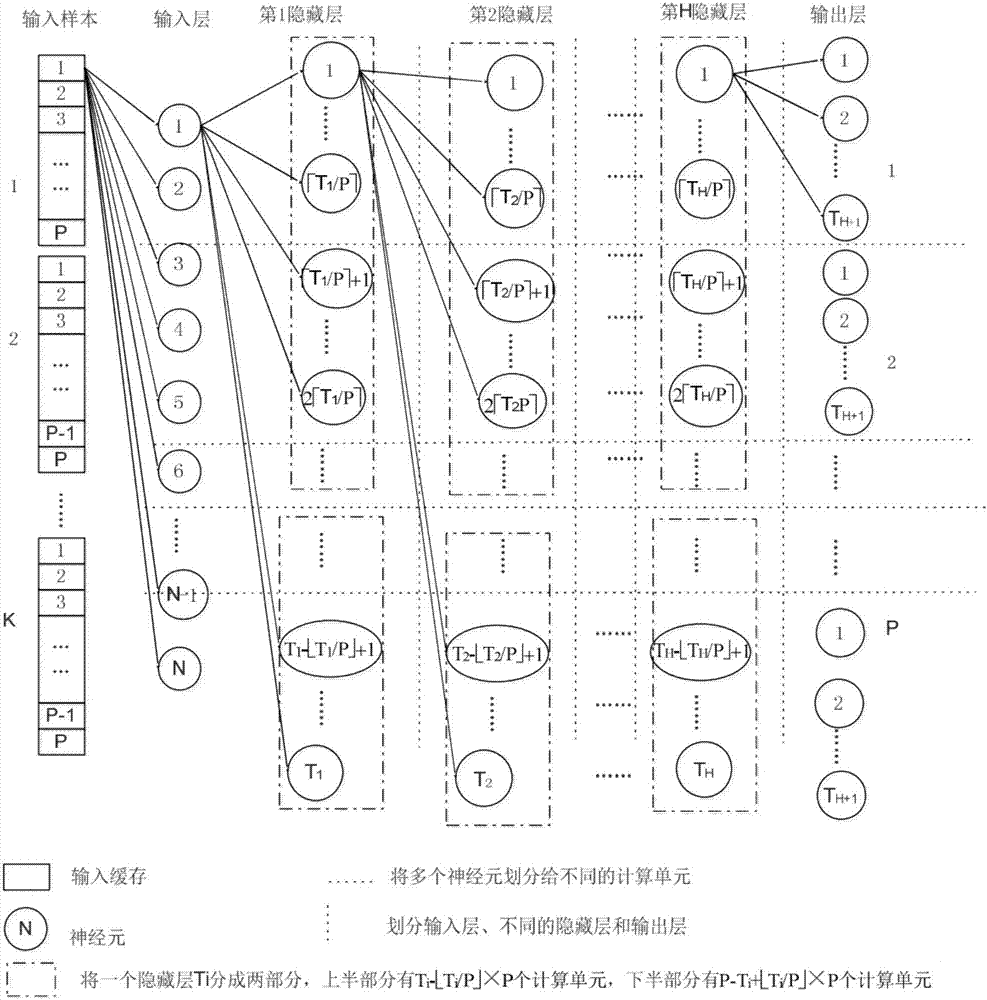

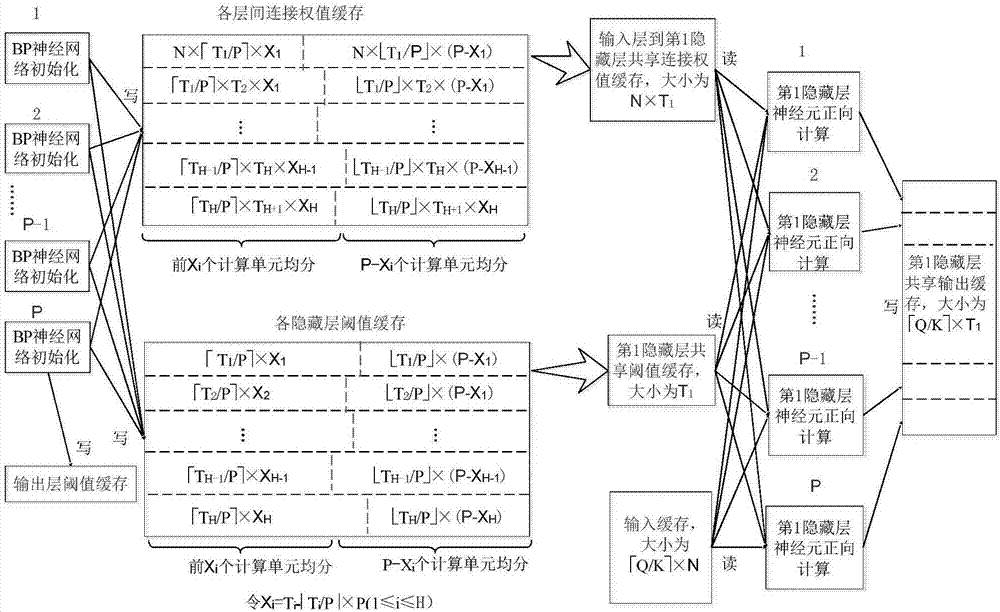

[0062] Assume that the number of available computing units is P and that the processor's shared second-level (L2) cache is C bytes in size. The input layer is layer 0, and the input vector has dimension N. The BP neural network has at most H hidden layers, of which the i-th hidden layer has T_i neurons. The output layer is layer H+1, with T_{H+1} neurons. The sample set size is Q, and the maximum number of training iterations is M (P, N, H, i, T_i, T_{H+1}, Q, and M are all positive constants greater than 1).
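As an illustration of the notation in [0062], the parameters can be collected in a small Python structure. This is only a sketch: the class name `BPConfig`, its field names, and the helper methods are hypothetical and do not come from the patent. It also assumes fully connected layers, no bias terms, and 8-byte weights, none of which is stated in the text above.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class BPConfig:
    """Hypothetical container for the symbols of paragraph [0062]."""
    P: int             # number of available computing units
    C: int             # size of the shared L2 cache, in bytes
    N: int             # input vector dimension (layer 0)
    hidden: List[int]  # T_1 .. T_H, neurons in each hidden layer
    T_out: int         # T_{H+1}, neurons in the output layer (layer H+1)
    Q: int             # sample set size
    M: int             # maximum number of training iterations

    def layer_sizes(self) -> List[int]:
        # Layer 0 (input), hidden layers 1..H, then output layer H+1.
        return [self.N, *self.hidden, self.T_out]

    def weight_shapes(self) -> List[Tuple[int, int]]:
        # Assumption: each layer is fully connected to the next one.
        sizes = self.layer_sizes()
        return [(sizes[i], sizes[i + 1]) for i in range(len(sizes) - 1)]

    def weight_bytes(self, bytes_per_weight: int = 8) -> int:
        # Assumption: 8-byte (double precision) weights, biases ignored.
        return bytes_per_weight * sum(r * c for r, c in self.weight_shapes())


# Example values chosen purely for illustration.
cfg = BPConfig(P=4, C=2 * 1024 * 1024, N=8, hidden=[16, 12], T_out=3,
               Q=1000, M=500)
print(cfg.layer_sizes())           # [8, 16, 12, 3]
print(cfg.weight_bytes() <= cfg.C)  # do all weights fit within C bytes?
```

Comparing `weight_bytes()` against `C` is one simple way to reason about whether the weights could stay resident in the shared cache. The patent's own cache-related scheme is not shown in this excerpt.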

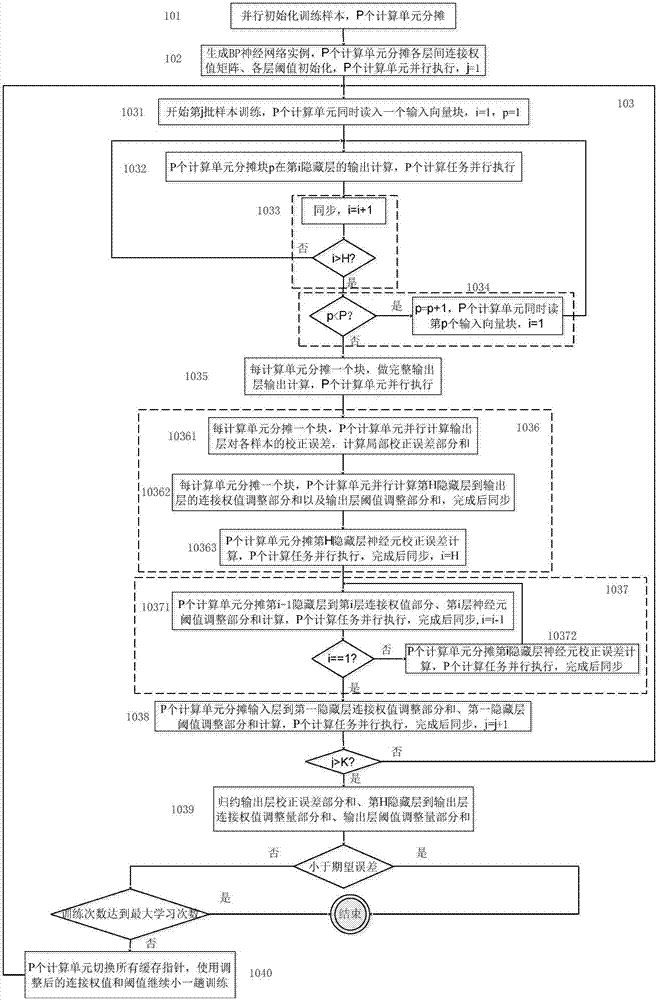

[0063] For a hardware platform with P computing units, BP neural network training is divided into P initialization tasks and P training tasks. Each initialization task includes:

[0064] Subtask 101: sample initialization processing subtask, encode...
