An adaptive visual navigation method based on ELM-LRF

An adaptive visual navigation technique, applied in the field of adaptive visual navigation. It addresses problems such as slow training speed, with the aim of training quickly, using fewer computing resources, and improving navigation speed.

Embodiment Construction

[0033] The technical solutions of the present invention will be further described below in conjunction with the accompanying drawings and embodiments.

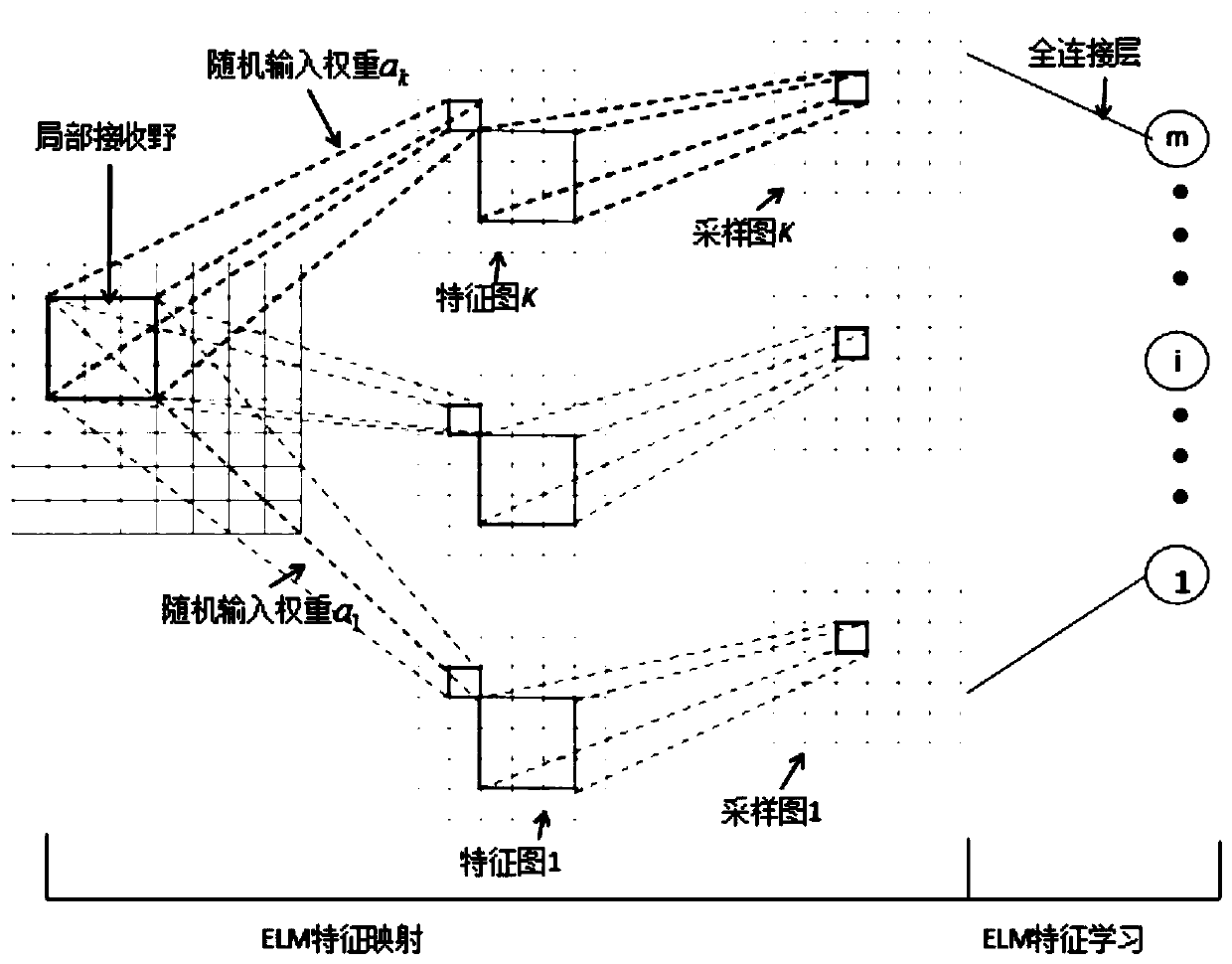

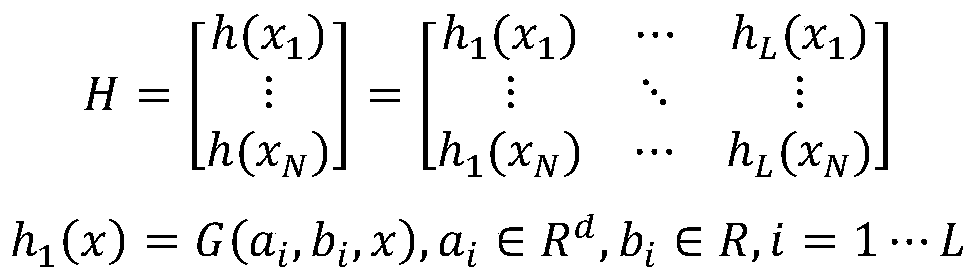

[0034] The ELM-LRF neural network architecture is shown in Figure 1. ELM-LRF is divided into two stages: ELM feature learning and ELM feature mapping. First, the input vector is randomly assigned: the pixels in each local receptive field are randomly selected, and the weights from the input to the hidden layer are also randomly generated. Next, downsampling is performed, and finally the output is produced.
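The two stages described above can be illustrated with a minimal NumPy sketch. It is not the patented implementation. Several details are assumptions borrowed from the commonly published ELM-LRF formulation rather than from this text: the random filters are orthogonalized, the downsampling step is square-root pooling, and the output weights are solved in closed form by regularized least squares. The function names and the parameters `num_maps`, `r`, `e`, and `c` are hypothetical.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def random_filters(num_maps, r, rng):
    """Draw random r x r local-receptive-field weights (never trained)."""
    a = rng.standard_normal((r * r, num_maps))
    if num_maps <= r * r:
        # Assumption: orthogonalize the random filters via QR.
        a, _ = np.linalg.qr(a)
    return a.T.reshape(num_maps, r, r)


def feature_maps(images, filters):
    """Slide every random filter over every image (valid region only)."""
    r = filters.shape[-1]
    # windows has shape (n, out, out, r, r)
    windows = sliding_window_view(images, (r, r), axis=(1, 2))
    return np.einsum("nijab,kab->nkij", windows, filters)


def sqrt_pool(maps, e):
    """Square-root pooling over (2e+1) x (2e+1) zero-padded neighborhoods."""
    sq = np.pad(maps ** 2, ((0, 0), (0, 0), (e, e), (e, e)))
    win = sliding_window_view(sq, (2 * e + 1, 2 * e + 1), axis=(2, 3))
    return np.sqrt(win.sum(axis=(-1, -2)))


def hidden_features(images, filters, e):
    """Feature-learning stage: random convolution, pooling, then flatten."""
    pooled = sqrt_pool(feature_maps(images, filters), e)
    return pooled.reshape(len(images), -1)


def elm_lrf_fit(images, targets, num_maps=4, r=3, e=1, c=1.0, seed=0):
    """Feature-mapping stage: solve the output weights in closed form."""
    rng = np.random.default_rng(seed)
    filters = random_filters(num_maps, r, rng)
    h = hidden_features(images, filters, e)
    # Regularized least squares: beta = (H^T H + I / c)^-1 H^T T
    beta = np.linalg.solve(h.T @ h + np.eye(h.shape[1]) / c, h.T @ targets)
    return filters, beta


def elm_lrf_predict(images, filters, beta, e=1):
    """Map new images through the fixed random features and output weights."""
    return hidden_features(images, filters, e) @ beta
```

Only `beta` is fitted; the filters stay at their random initial values, which is the property the text relies on for fast training.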

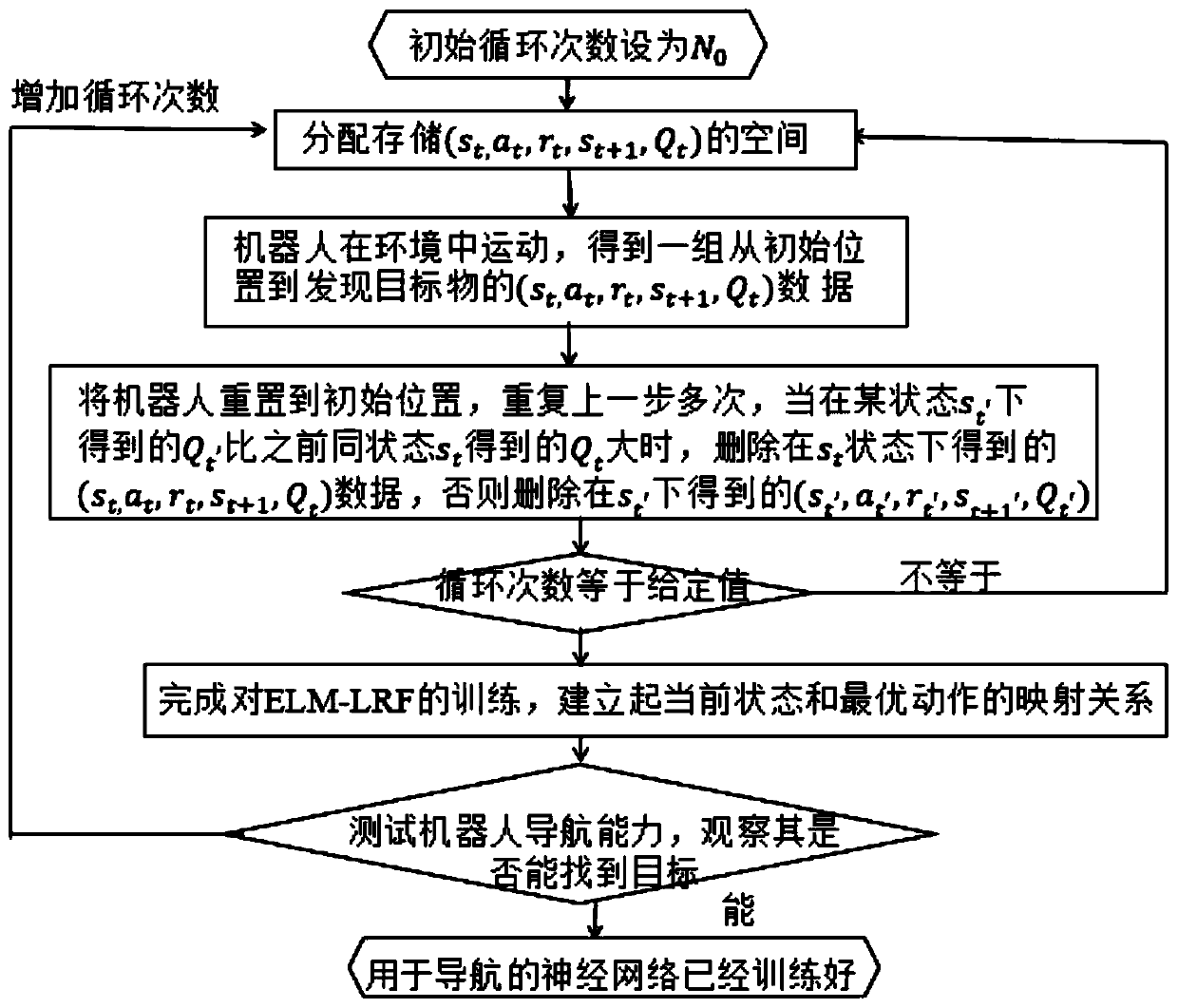

[0035] As shown in Figure 2, a specific embodiment of the adaptive visual navigation method based on ELM-LRF of the present invention proceeds as follows:

[0036] Step 1: Allocate space capable of storing the structure $(s_t, a_t, r_t, s_{t+1}, Q_t)$. $Q_t$ is initialized to 0. According to the current state $s_t$, an action $a_t$ is selected at random (the robot can move forward, backward, left, and right), the robo...
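The part of Step 1 that is visible here (allocating the tuple, initializing $Q_t$ to 0, and picking a random action) might look like the following sketch. The names `Transition`, `ACTIONS`, and `start_transition` are hypothetical, and the state is treated as an opaque object. Because the remainder of the step is truncated in the source, the reward and next state are left unfilled rather than guessed.

```python
import random
from dataclasses import dataclass
from typing import Any, Optional

# The four motions named in the text.
ACTIONS = ("forward", "backward", "left", "right")


@dataclass
class Transition:
    """Storage for one (s_t, a_t, r_t, s_{t+1}, Q_t) record."""
    state: Any                      # s_t
    action: str                     # a_t
    reward: Optional[float] = None  # r_t, not yet observed
    next_state: Any = None          # s_{t+1}, not yet observed
    q: float = 0.0                  # Q_t, initialized to 0


def start_transition(state, rng):
    """Allocate a record for the current state with a randomly chosen action."""
    return Transition(state=state, action=rng.choice(ACTIONS))
```

Passing in a `random.Random` instance rather than using the module-level generator keeps the random action choice reproducible under a fixed seed.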
