Image statement conversion method based on improved generative adversarial network

An image-to-sentence conversion method based on generative adversarial network technology, applied in biological neural network models, character and pattern recognition, instruments, etc., that addresses problems such as incoherent sentence expression.

Embodiment Construction

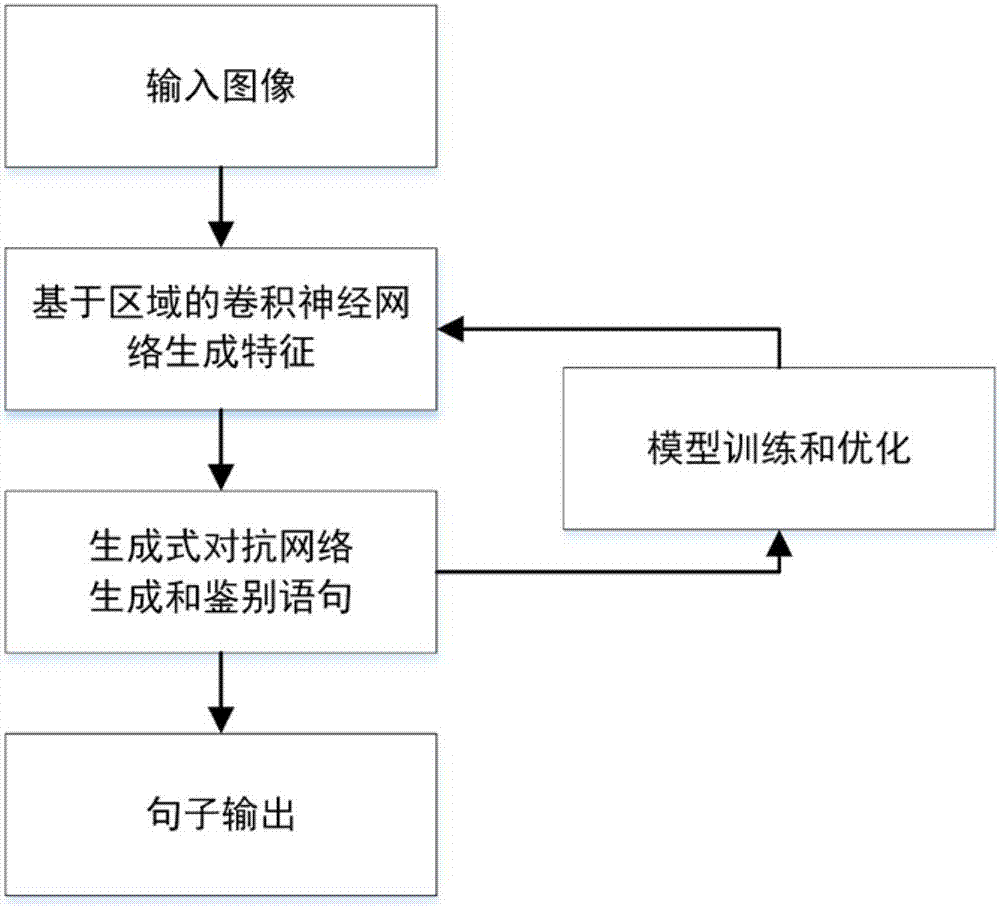

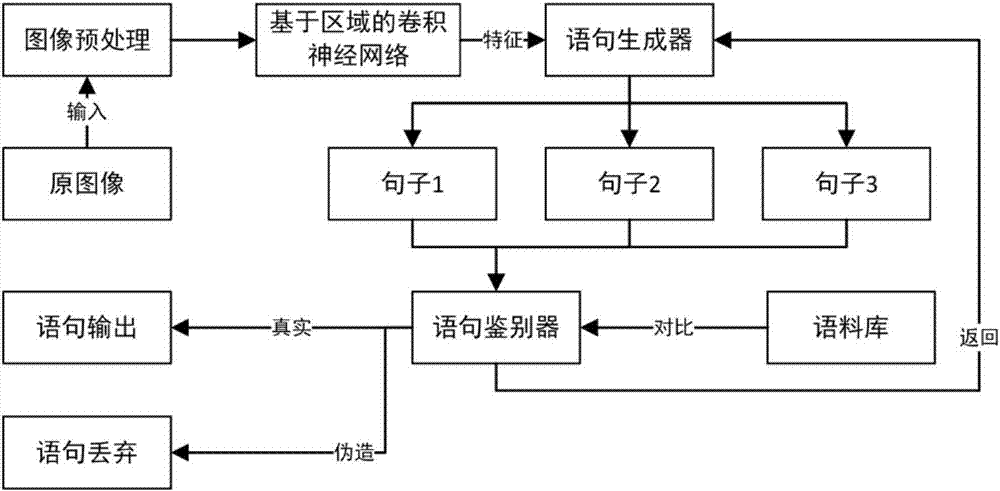

[0033] The present invention is described below with reference to the accompanying drawings and specific embodiments. Figure 1 shows the image-to-sentence conversion process based on an improved generative adversarial network.

[0034] As shown in Figure 1, the present invention comprises the following steps:

[0035] (1) Input the image and extract its features with a region-based convolutional neural network. With this method, each salient region of the image is treated as a block, and the block's meaning and vocabulary vector are obtained from its feature vector. The output of this step is a set of features expressed as vocabulary vectors.
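Step (1) can be illustrated with a minimal sketch of the final mapping from block features to vocabulary vectors. The patent text does not state how a block's feature vector is turned into a vocabulary vector, so the nearest-neighbour lookup by cosine similarity used here is an assumption, as are the function names, the toy embedding table, and the three-dimensional feature values. The region-based convolutional neural network itself is not implemented; its output is stood in for by `REGION_FEATURES`.

```python
import math


def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def regions_to_vocabulary(region_features, embeddings):
    """Map each block's feature vector to the closest (word, vector) pair.

    Assumption: "closest" means highest cosine similarity against a fixed
    embedding table. The source does not specify the mapping.
    """
    result = []
    for feat in region_features:
        word = max(embeddings, key=lambda w: cosine(feat, embeddings[w]))
        result.append((word, embeddings[word]))
    return result


# Hypothetical toy embedding table (word -> vocabulary vector).
EMBEDDINGS = {
    "dog": [0.9, 0.1, 0.0],
    "grass": [0.1, 0.9, 0.1],
    "ball": [0.0, 0.2, 0.9],
}

# Hypothetical feature vectors for two salient blocks, standing in for
# the output of a region-based CNN.
REGION_FEATURES = [[0.8, 0.2, 0.1], [0.1, 0.1, 0.7]]

vocab = regions_to_vocabulary(REGION_FEATURES, EMBEDDINGS)
words = [w for w, _ in vocab]
```

With these toy values, the first block is closest to the "dog" vector and the second to the "ball" vector, so `vocab` holds one vocabulary vector per salient block, ready to be passed to the generator.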

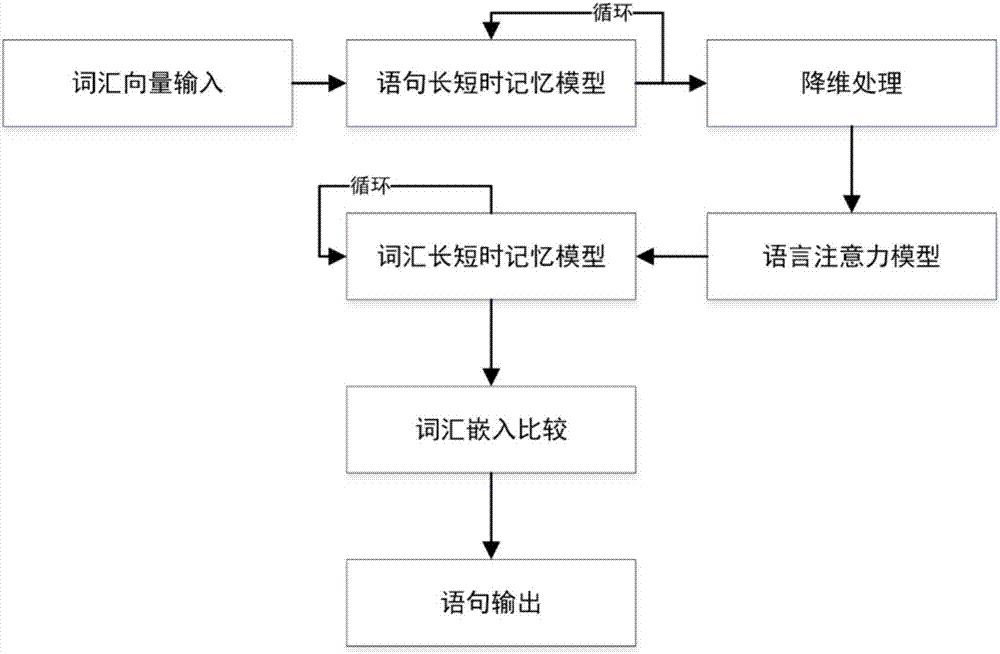

[0036] (2) Input the vocabulary vectors into the generator of the generative adversarial network. The generator consists of a long short-term memory (LSTM) model, which has memory cells. The vocabulary vectors are spliced according to the propagation rules, and a variety of spliced senten...
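The role of the LSTM generator in step (2) can be sketched as follows. This is only an illustration under stated assumptions: the weights are random and untrained, the class `TinyLSTMGenerator` and its methods are hypothetical names, and emitting one sampled word per input vector is an assumed stand-in for the splicing rule, which is cut off in the text above. The sketch shows how the memory cell carries state across the vocabulary vectors and how repeated sampling yields several candidate sentences.

```python
import math
import random


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def matvec(matrix, vector):
    """Multiply a row-major matrix by a vector."""
    return [sum(w * x for w, x in zip(row, vector)) for row in matrix]


class TinyLSTMGenerator:
    """Single-layer LSTM with untrained random weights (illustration only)."""

    def __init__(self, input_size, hidden_size, vocab, seed=0):
        rng = random.Random(seed)
        self.hidden_size = hidden_size
        self.vocab = vocab

        def rand(rows, cols):
            return [[rng.uniform(-0.5, 0.5) for _ in range(cols)]
                    for _ in range(rows)]

        # One weight matrix per gate (input, forget, output, candidate),
        # each applied to the concatenation [input ; previous hidden state].
        self.gates = {name: rand(hidden_size, input_size + hidden_size)
                      for name in "ifog"}
        # Projection from hidden state to one score per vocabulary word.
        self.out = rand(len(vocab), hidden_size)

    def step(self, x, h, c):
        """One LSTM time step: returns the new hidden and cell states."""
        z = x + h  # list concatenation
        i = [sigmoid(a) for a in matvec(self.gates["i"], z)]
        f = [sigmoid(a) for a in matvec(self.gates["f"], z)]
        o = [sigmoid(a) for a in matvec(self.gates["o"], z)]
        g = [math.tanh(a) for a in matvec(self.gates["g"], z)]
        c = [fj * cj + ij * gj for fj, cj, ij, gj in zip(f, c, i, g)]
        h = [oj * math.tanh(cj) for oj, cj in zip(o, c)]
        return h, c

    def sample_sentence(self, vocab_vectors, rng):
        """Feed vocabulary vectors in order, sampling one word per step."""
        h = [0.0] * self.hidden_size
        c = [0.0] * self.hidden_size
        sentence = []
        for x in vocab_vectors:
            h, c = self.step(x, h, c)
            weights = [math.exp(s) for s in matvec(self.out, h)]  # softmax numerators
            sentence.append(rng.choices(self.vocab, weights=weights, k=1)[0])
        return sentence


# Hypothetical inputs: two 3-dimensional vocabulary vectors from step (1).
VOCAB = ["a", "dog", "chases", "ball", "grass"]
VECTORS = [[0.9, 0.1, 0.0], [0.0, 0.2, 0.9]]

generator = TinyLSTMGenerator(input_size=3, hidden_size=4, vocab=VOCAB)
sampler = random.Random(1)
candidates = [generator.sample_sentence(VECTORS, sampler) for _ in range(3)]
```

Each entry of `candidates` is one candidate word sequence. Because the weights are untrained, the sequences are not meaningful sentences; in an adversarial setup the generator's weights would be learned rather than fixed at random values.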
