An Object Detection Method Based on Fully Convolutional Split Network

A target detection method based on a fully convolutional split network, applied in the field of target detection. It addresses the problem of high complexity, improves the network's ability to extract features, improves detection accuracy, and balances speed and accuracy.

Examples

Specific Embodiment 1

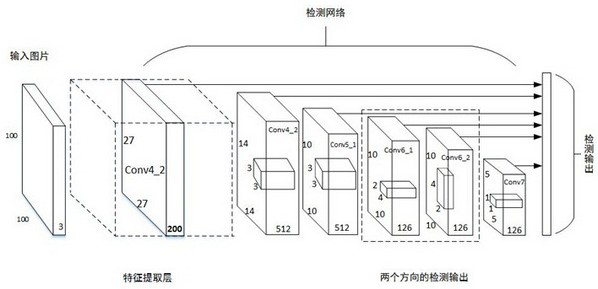

[0024] A target detection method based on a fully convolutional split network. The method includes:

[0025] Preprocessing the pictures: randomly select pictures from the collected data set and crop them. The cropping method is as follows: define a preset frame of a set size relative to the picture's length and width, and crop a region of that size at 5 positions in the picture, namely the four corners and the center; then map the target frame of each target onto the processed picture to obtain the training pictures;
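The five-position cropping and target-frame mapping described in [0025] can be illustrated with a minimal Python sketch. The function names `five_crop_windows` and `map_box_to_crop`, the `(x_min, y_min, x_max, y_max)` box layout, and the policy of clipping a target frame to the crop and discarding it when nothing remains are all assumptions made for illustration; the source does not specify how partially visible targets are handled.

```python
from typing import List, Optional, Tuple

Window = Tuple[int, int, int, int]        # (x0, y0, x1, y1) in picture pixels
Box = Tuple[float, float, float, float]   # (x_min, y_min, x_max, y_max)


def five_crop_windows(img_w: int, img_h: int, crop_w: int, crop_h: int) -> List[Window]:
    """Return crop windows at the four corners and the center of the picture."""
    if crop_w > img_w or crop_h > img_h:
        raise ValueError("preset frame must fit inside the picture")
    right = img_w - crop_w
    bottom = img_h - crop_h
    origins = [
        (0, 0),                       # top-left corner
        (right, 0),                   # top-right corner
        (0, bottom),                  # bottom-left corner
        (right, bottom),              # bottom-right corner
        (right // 2, bottom // 2),    # center
    ]
    return [(x, y, x + crop_w, y + crop_h) for x, y in origins]


def map_box_to_crop(box: Box, window: Window) -> Optional[Box]:
    """Map a target frame into crop coordinates.

    Assumed policy (not from the source): clip the frame to the crop
    and return None when the frame does not overlap the crop at all.
    """
    x0, y0, x1, y1 = window
    bx0, by0 = max(box[0], x0), max(box[1], y0)
    bx1, by1 = min(box[2], x1), min(box[3], y1)
    if bx1 <= bx0 or by1 <= by0:
        return None
    # Shift the clipped frame so the crop's top-left corner is the origin.
    return (bx0 - x0, by0 - y0, bx1 - x0, by1 - y0)
```

For a 300x300 picture and a 100x100 preset frame, `five_crop_windows(300, 300, 100, 100)` yields the four corner windows plus the central window `(100, 100, 200, 200)`; each target frame is then passed through `map_box_to_crop` for the chosen window.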

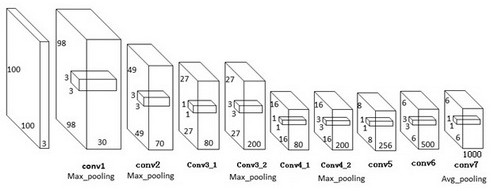

[0026] Feature extraction stage: the feature extraction network consists of 9 convolutional layers, of which n convolutional layers are each followed by a pooling layer for downsampling; the two filters have sizes of 1x1 (unit: pixel) and 3x3 (unit: pi...

Specific Embodiment 2

[0034] On the basis of Specific Embodiment 1, the preset frame of a set size is a preset frame whose length and width are 1/3 of the picture's length and width.

Specific Embodiment 3

[0036] On the basis of Specific Embodiment 1 or 2, the pooling layers in the feature extraction network use max pooling.
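As a reminder of what max pooling does when used for downsampling, the following is a minimal pure-Python sketch. The function name `max_pool_2d` and the 2x2 window with stride 2 are assumptions chosen for illustration; the source does not state the pooling window size or stride.

```python
from typing import List


def max_pool_2d(feature_map: List[List[float]], size: int = 2, stride: int = 2) -> List[List[float]]:
    """Downsample a 2D feature map by keeping the maximum of each window.

    The 2x2 window and stride 2 defaults are illustrative assumptions.
    """
    rows, cols = len(feature_map), len(feature_map[0])
    pooled = []
    for r in range(0, rows - size + 1, stride):
        pooled_row = []
        for c in range(0, cols - size + 1, stride):
            # Collect every value inside the current window.
            window = [feature_map[r + i][c + j] for i in range(size) for j in range(size)]
            pooled_row.append(max(window))
        pooled.append(pooled_row)
    return pooled


example = [
    [1, 3, 2, 0],
    [4, 2, 1, 5],
    [0, 1, 9, 8],
    [7, 6, 3, 2],
]
print(max_pool_2d(example))  # [[4, 5], [7, 9]]
```

With these assumed settings each pooling layer halves the height and width of the feature map while keeping the strongest response in each window.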
