Method for reducing transmission consumption of neural network model update on mobile device

A technology for neural network models on mobile devices, applied in the field of mobile device sharing, that addresses problems such as the loss of relearning ability and achieves model retraining with reduced transmission consumption.

Embodiment Construction

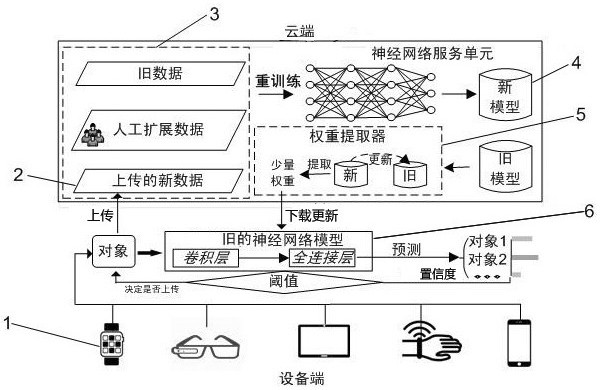

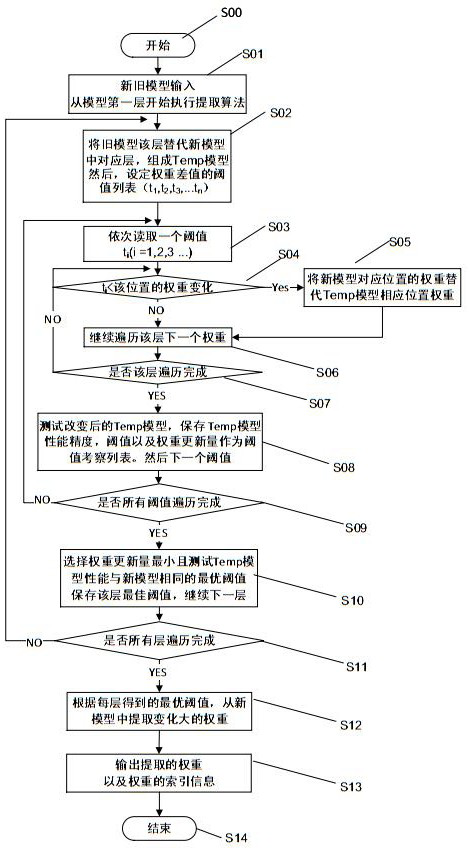

[0026] The present invention is further described below with reference to the accompanying drawings and embodiments:

[0027] The operating environment of the present invention consists of an embedded mobile device, which can be configured with a neural network to perform intelligent recognition tasks, and a cloud server, which can train the neural network.

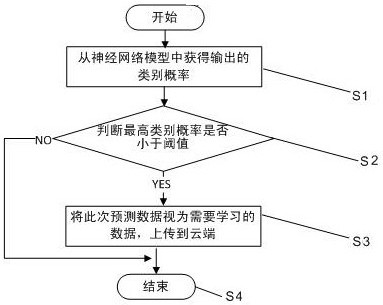

[0028] As shown in Figure 1, the present invention comprises the following steps:

[0029] Step 1. The mobile device selects data with low prediction confidence and uploads it to the cloud;

[0030] When the neural network makes a prediction on a piece of data (for example, identifying the content of an image), it outputs a probability for each of the categories the data may belong to. These probabilities represent the network's confidence in each category, and the category with the highest probability is selected as the final prediction. When the output category probabilities are all small, the neural network is very uncertain about...
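The selection in Step 1 can be sketched as follows. This is a minimal illustration rather than the patent's actual procedure: it assumes that confidence is measured as the highest softmax probability and that a sample is uploaded when this value falls below a fixed cutoff. The function names and the `threshold` value of 0.6 are hypothetical and do not appear in the source.

```python
import math

def softmax(logits):
    """Convert raw class scores into probabilities that sum to 1."""
    peak = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def predict_with_confidence(logits):
    """Return (predicted class index, confidence).

    Confidence is taken to be the highest class probability (an assumption).
    """
    probs = softmax(logits)
    label = max(range(len(probs)), key=probs.__getitem__)
    return label, probs[label]

def select_for_upload(batch_logits, threshold=0.6):
    """Return indices of samples whose confidence is below the cutoff.

    The threshold is a hypothetical tuning parameter, not a value from the source.
    """
    selected = []
    for index, logits in enumerate(batch_logits):
        _, confidence = predict_with_confidence(logits)
        if confidence < threshold:
            selected.append(index)
    return selected

# Made-up network outputs for three samples and three categories.
batch = [
    [4.0, 0.5, 0.2],  # one category dominates: confident prediction
    [1.0, 0.9, 1.1],  # all categories score alike: uncertain prediction
    [0.1, 3.5, 0.3],  # one category dominates: confident prediction
]
to_upload = select_for_upload(batch)
```

With these made-up scores, only the second sample falls below the cutoff, so it alone would be sent to the cloud. Uploading only such samples keeps the amount of transmitted data small.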
