261 patent results for "Memory processing"

Efficacy Topic

Property

Owner

Technical Advancement

Application Domain

Technology Topic

Technology Field Word

Patent Country/Region

Patent Type

Patent Status

Application Year

Inventor

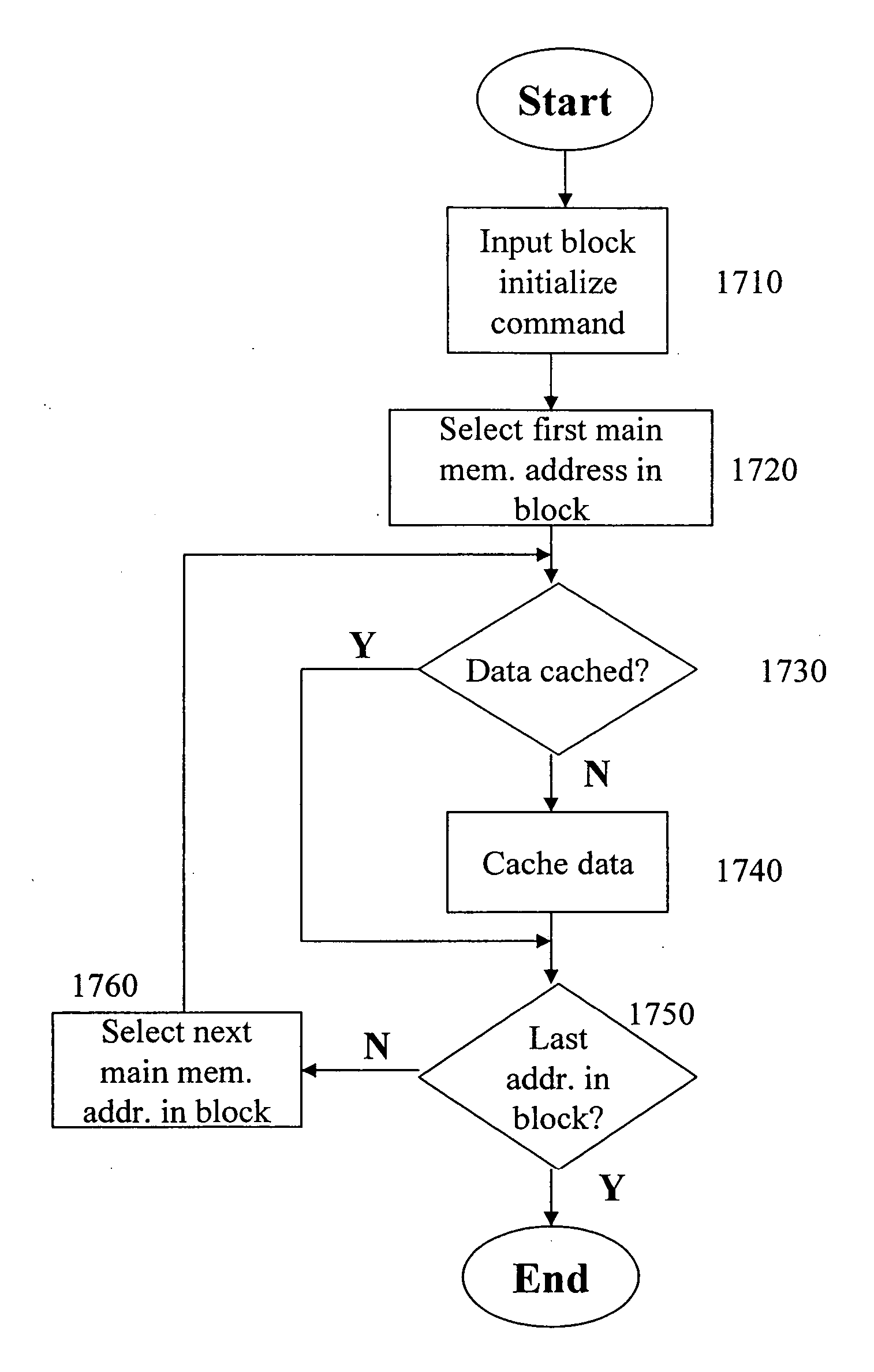

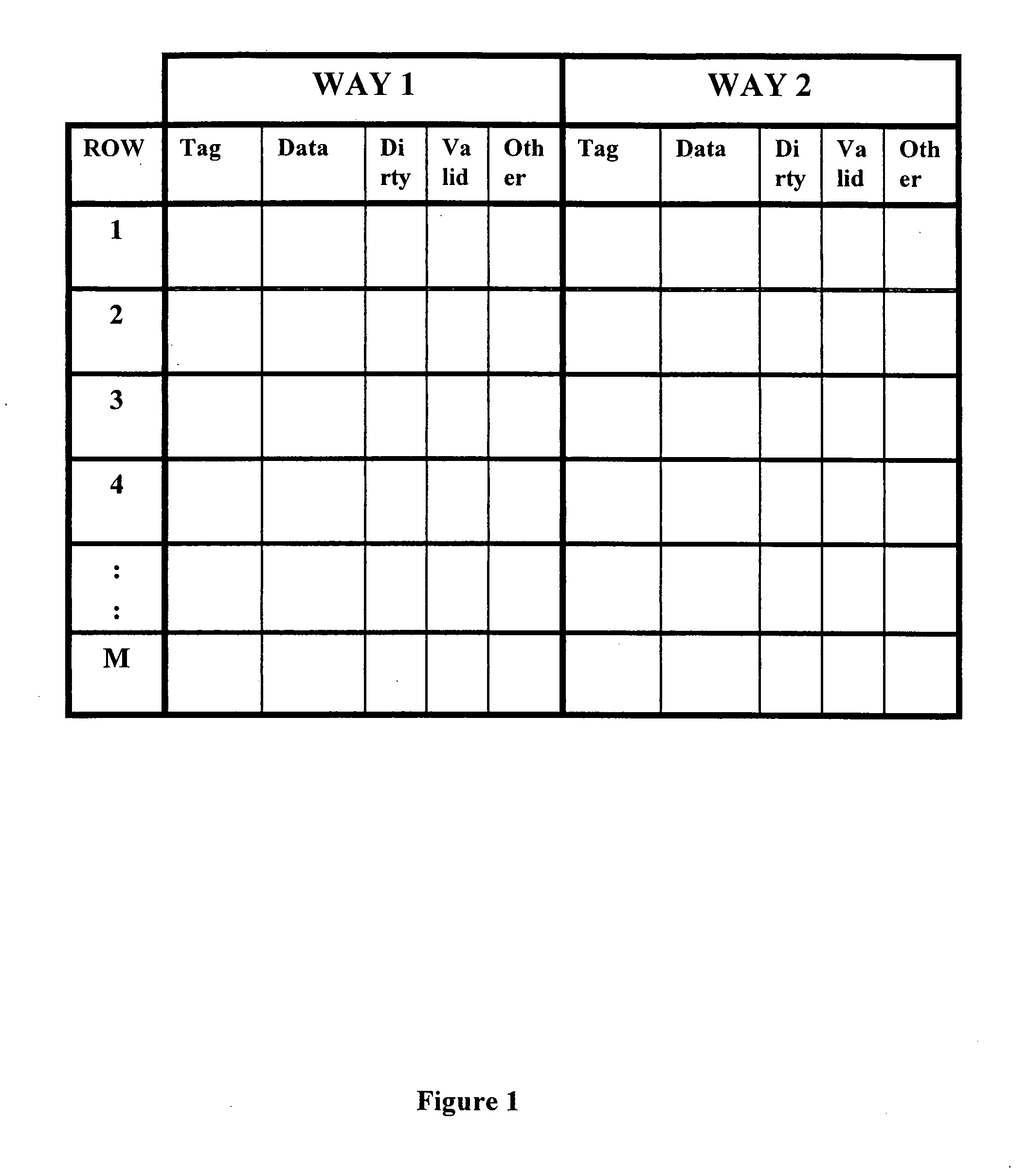

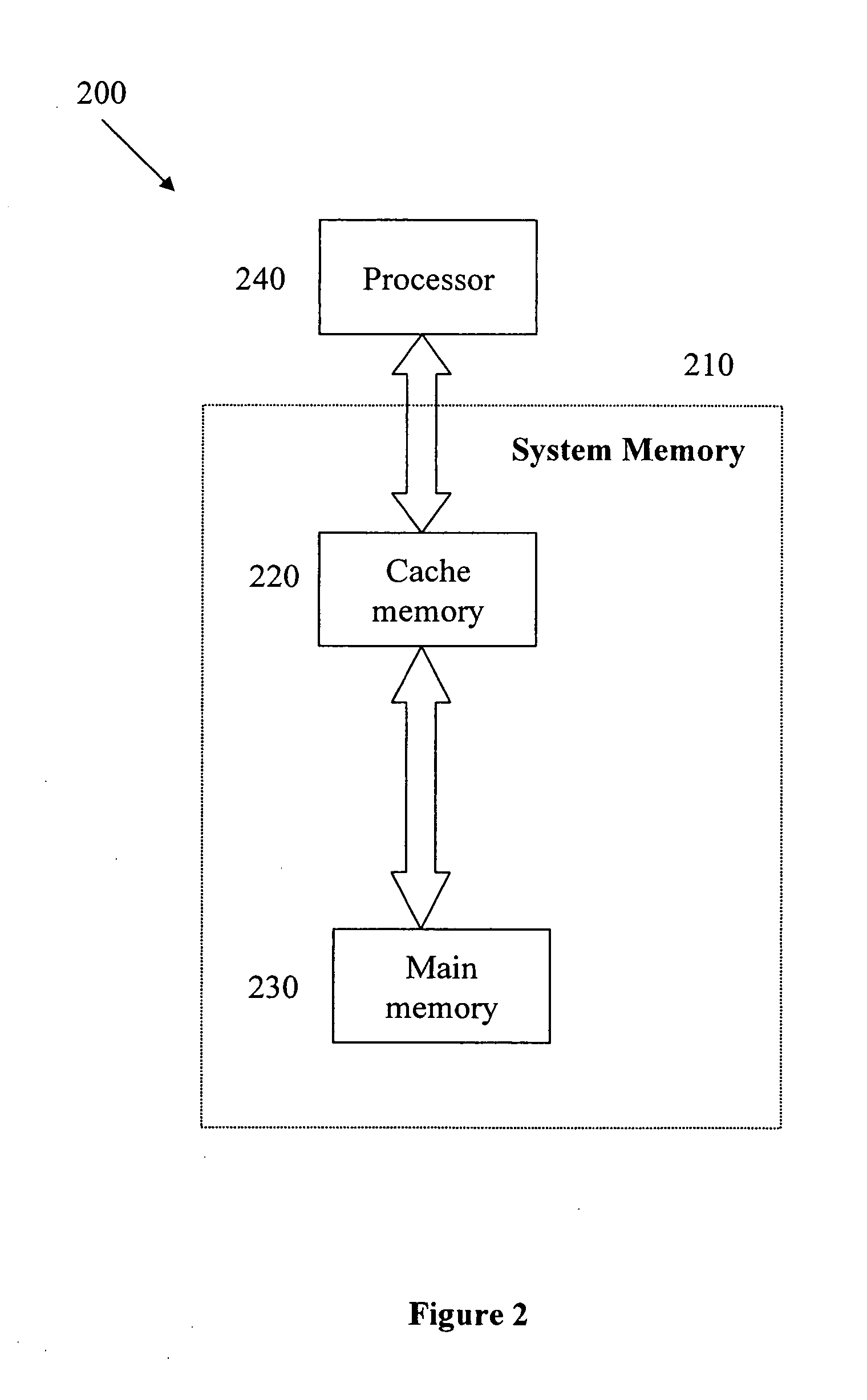

Cache memory background preprocessing

A cache memory preprocessor prepares a cache memory for use by a processor. The processor accesses a main memory via the cache memory, which serves as a data cache for the main memory. The cache memory preprocessor consists of a command inputter, which receives a multiple-way cache memory processing command from the processor, and a command implementer, which performs background processing on multiple ways of the cache memory to implement the command received by the command inputter.

Owner:ANALOG DEVICES INC

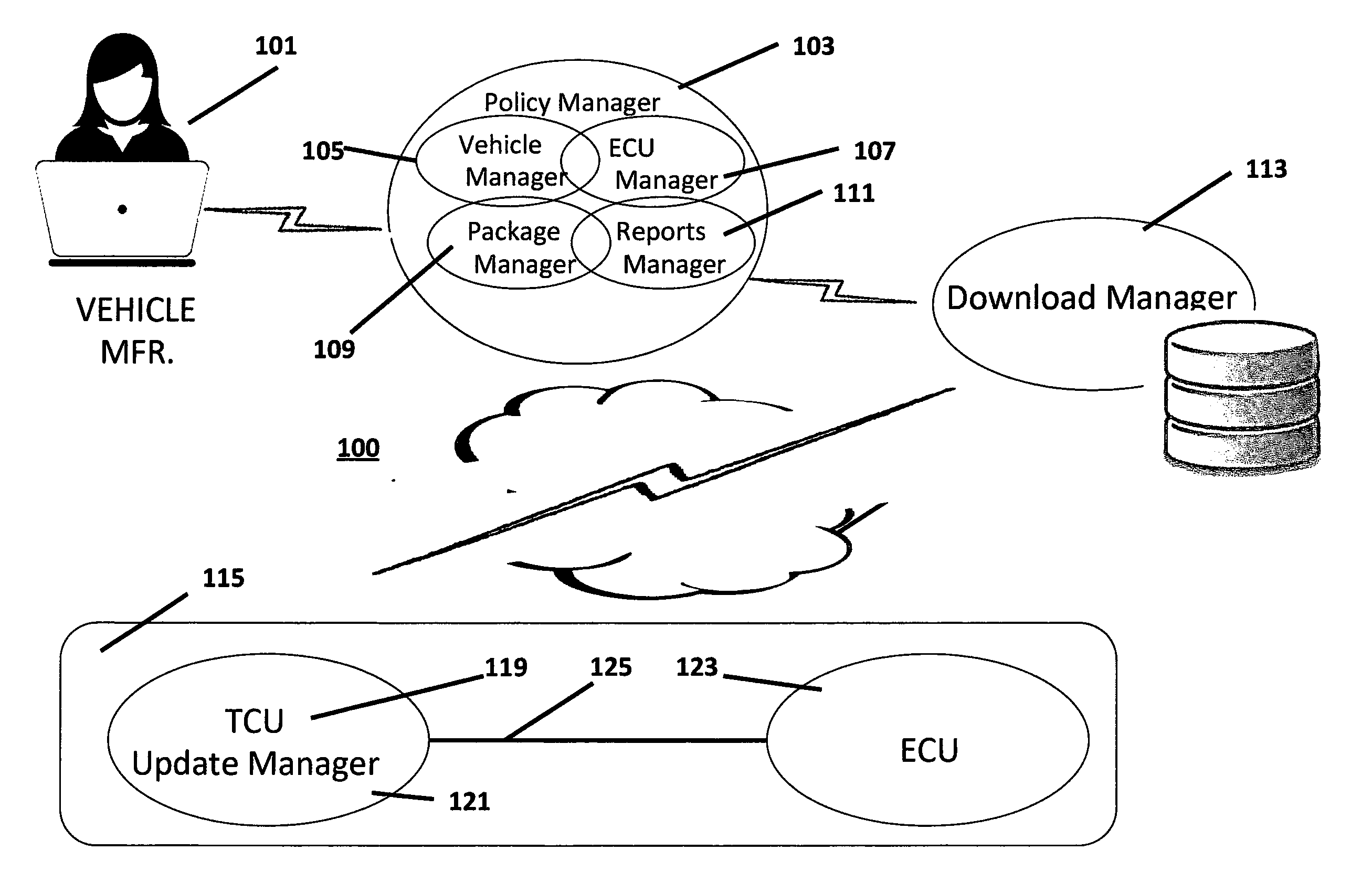

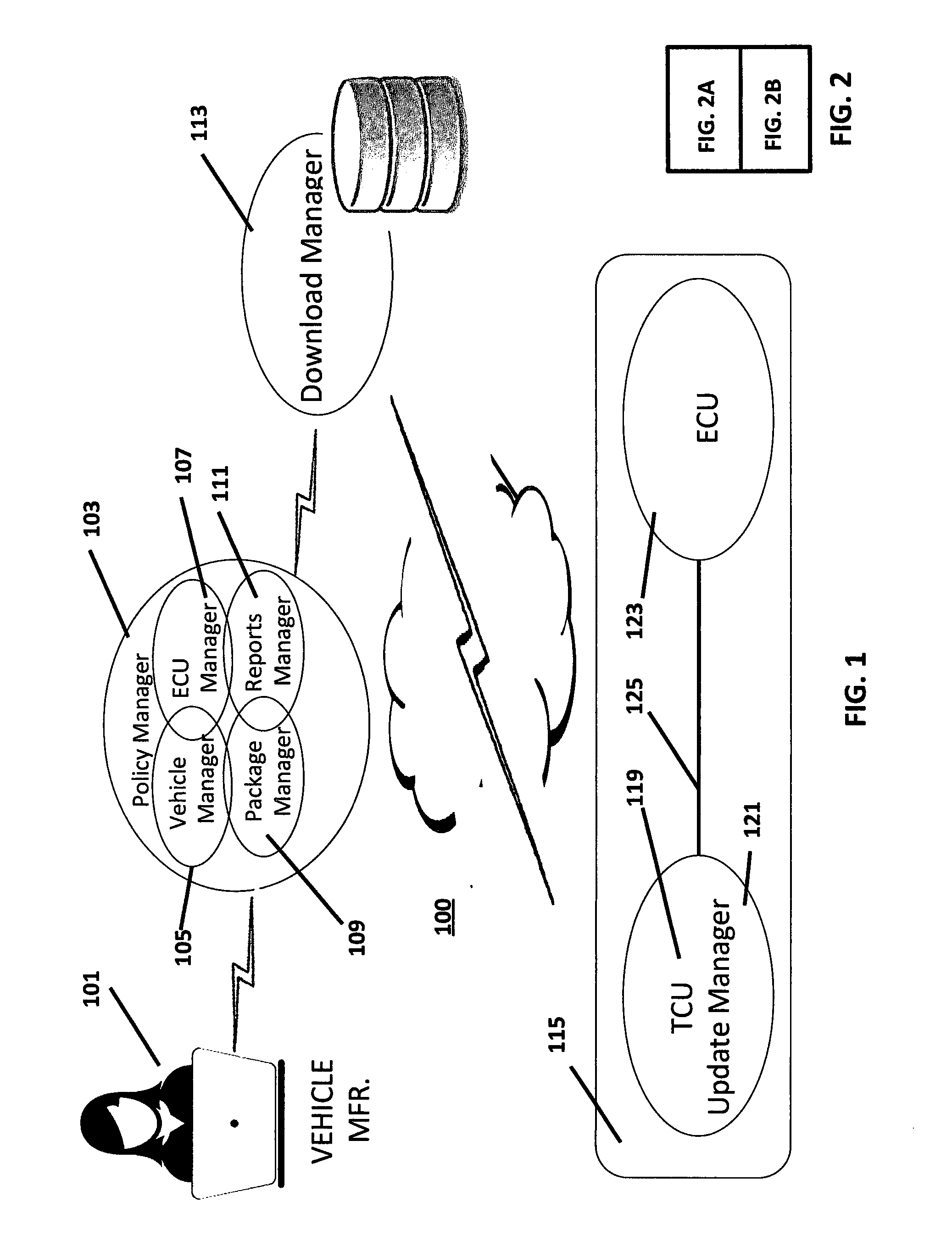

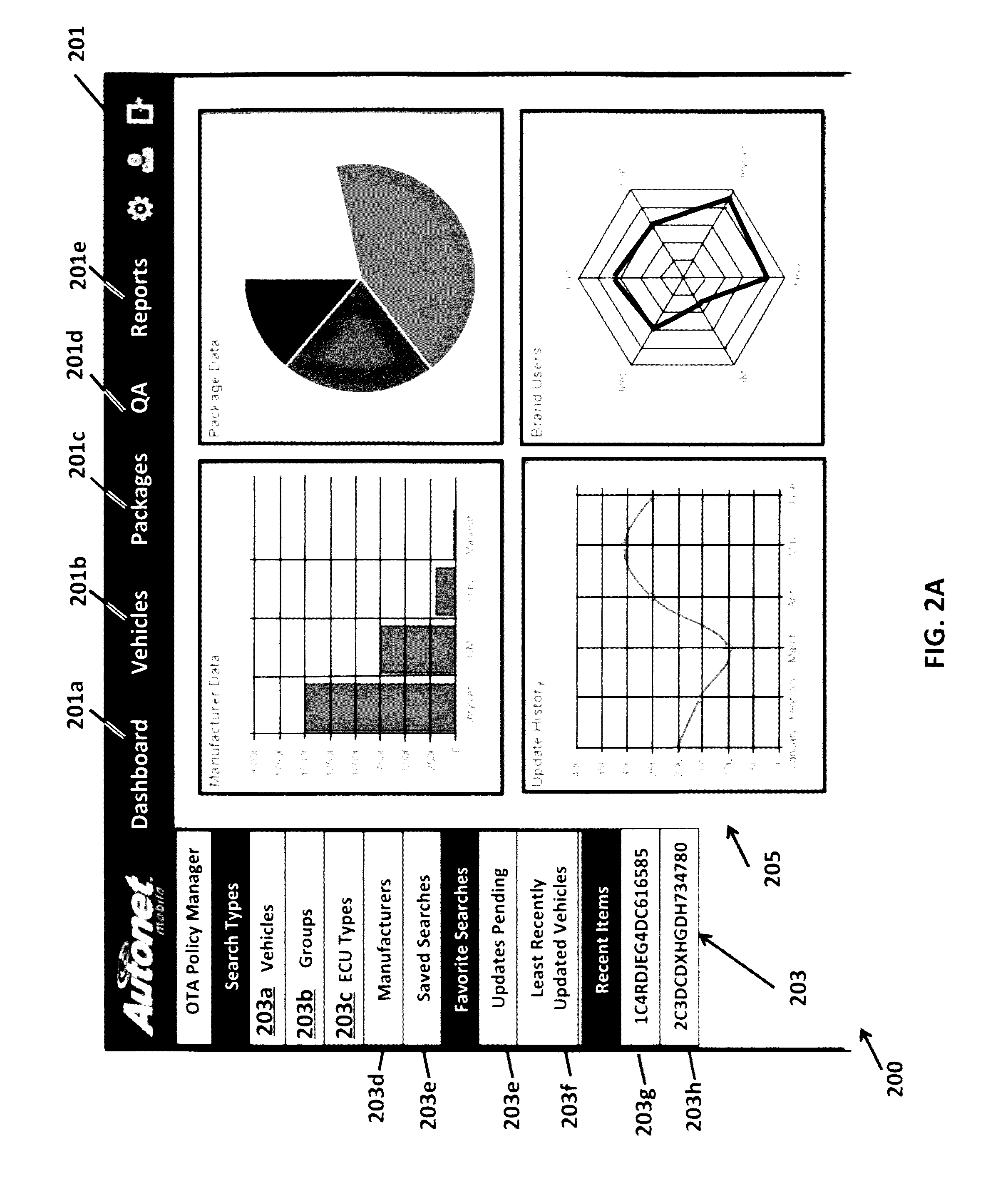

Telematics control unit comprising a differential update package

A telematics control unit (TCU) installable in a vehicle comprises: a wireless network interface; an interface to a vehicle bus coupled to a plurality of electronic control units (ECUs); a memory; a processor; and a differential update package (DUP), received via the wireless network interface, that provides an update to one specific ECU. The DUP comprises a flashing tool, differential update instructions for that ECU, and differential update data for that ECU's flash memory. The processor uses the flashing tool to provide the differential update instructions to the ECU's boot loader and to update the ECU's flash memory.

Owner:LEAR CORP
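
The abstract does not say how the differential update instructions and data are encoded. A common scheme is a COPY/ADD delta, in which the new image is rebuilt from ranges of the ECU's existing flash image plus new bytes shipped in the package. The Python sketch below assumes that scheme; `Copy`, `Add`, and `apply_differential_update` are hypothetical names, not part of the patent.

```python
from dataclasses import dataclass
from typing import List, Union


@dataclass(frozen=True)
class Copy:
    """Copy `length` bytes starting at `offset` in the ECU's current flash image."""
    offset: int
    length: int


@dataclass(frozen=True)
class Add:
    """Insert new bytes carried inside the update package."""
    data: bytes


Instruction = Union[Copy, Add]


def apply_differential_update(old_image: bytes, instructions: List[Instruction]) -> bytes:
    """Rebuild the new flash image from the old image plus COPY/ADD instructions."""
    new_image = bytearray()
    for ins in instructions:
        if isinstance(ins, Copy):
            end = ins.offset + ins.length
            if ins.offset < 0 or ins.length < 0 or end > len(old_image):
                raise ValueError(f"copy range {ins.offset}..{end} lies outside the old image")
            new_image += old_image[ins.offset:end]
        elif isinstance(ins, Add):
            new_image += ins.data
        else:
            raise TypeError(f"unknown instruction: {ins!r}")
    return bytes(new_image)


old = b"ECU firmware v1.0"
print(apply_differential_update(old, [Copy(0, 14), Add(b"2.0")]))  # b'ECU firmware v2.0'
```

Only the changed bytes travel over the wireless link; in a real TCU the rebuilt image would be streamed to the ECU's boot loader rather than held in memory as it is here.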

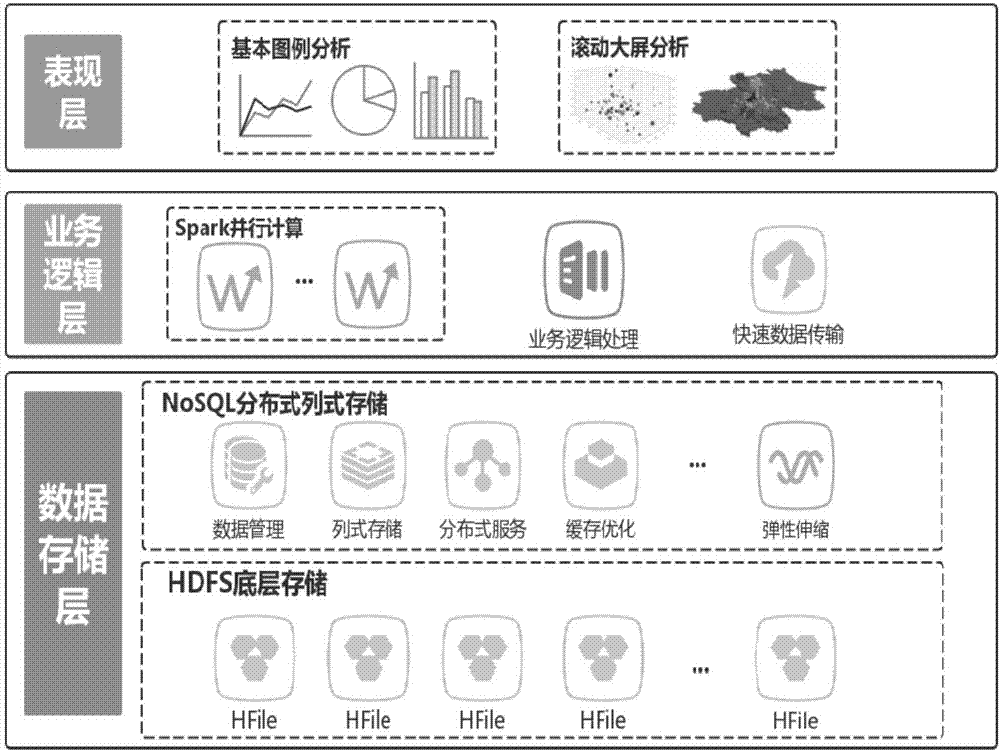

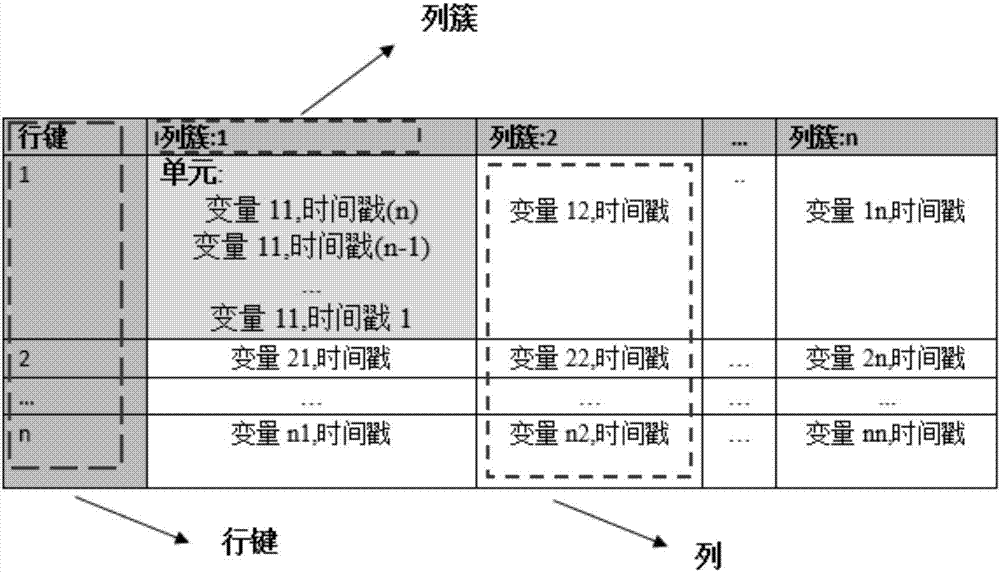

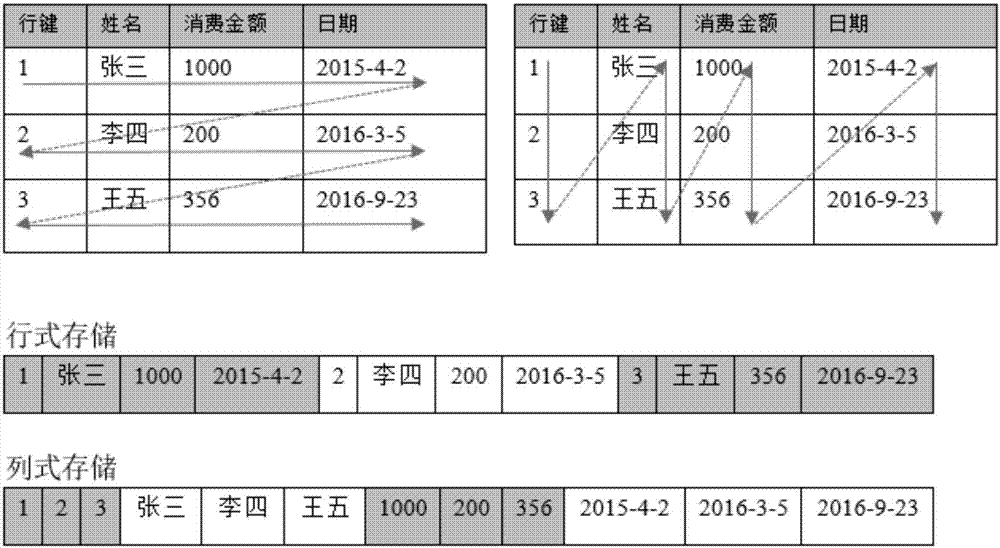

Big-data parallel computing method and system based on distributed columnar storage

Status: Inactive | Publication: CN107329982A | Tags: Reduce the frequency of read and write operations; Short time; Resource allocation; Special data processing applications; Parallel computing; Large screen

The invention discloses a big-data parallel computing method and system based on distributed columnar storage. The data most frequently accessed at present is stored in memory-based NoSQL columnar storage, which acts as a cache and enables fast data queries. A distributed cluster architecture meets big-data storage demands and lets storage capacity scale dynamically. Combined with a Spark-based parallel computing framework, the business layer performs data analysis and parallel operations, increasing computing speed, and a chart engine provides real-time data visualization for scrolling large-screen analysis displays. The method and system make full use of the in-memory processing performance and parallel computing advantages of distributed cloud servers, overcome the bottlenecks of a single server and of serial computation, avoid redundant data transmission between data nodes, improve the system's real-time response speed, and enable fast big-data analysis.

Owner:SOUTH CHINA UNIV OF TECH

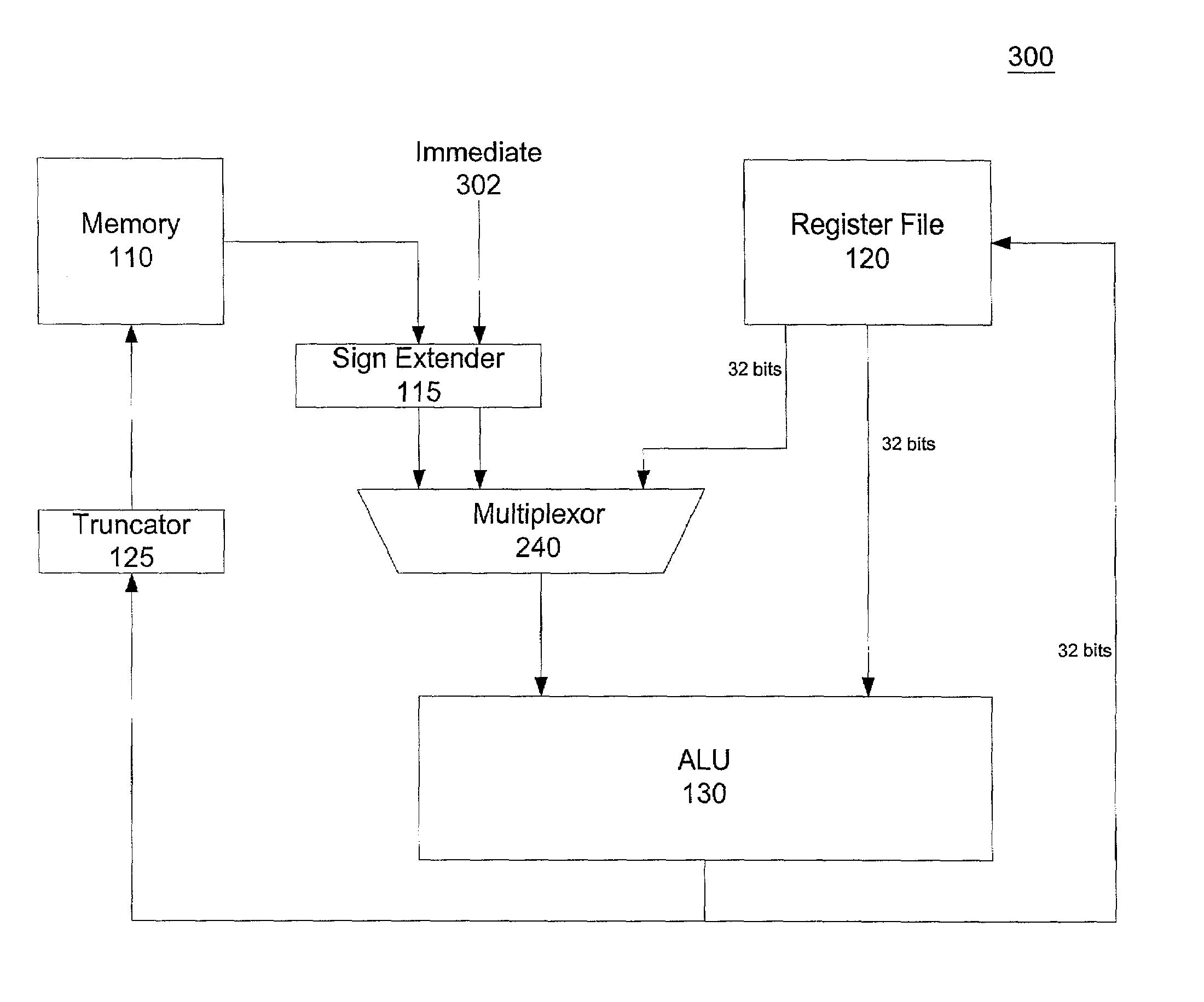

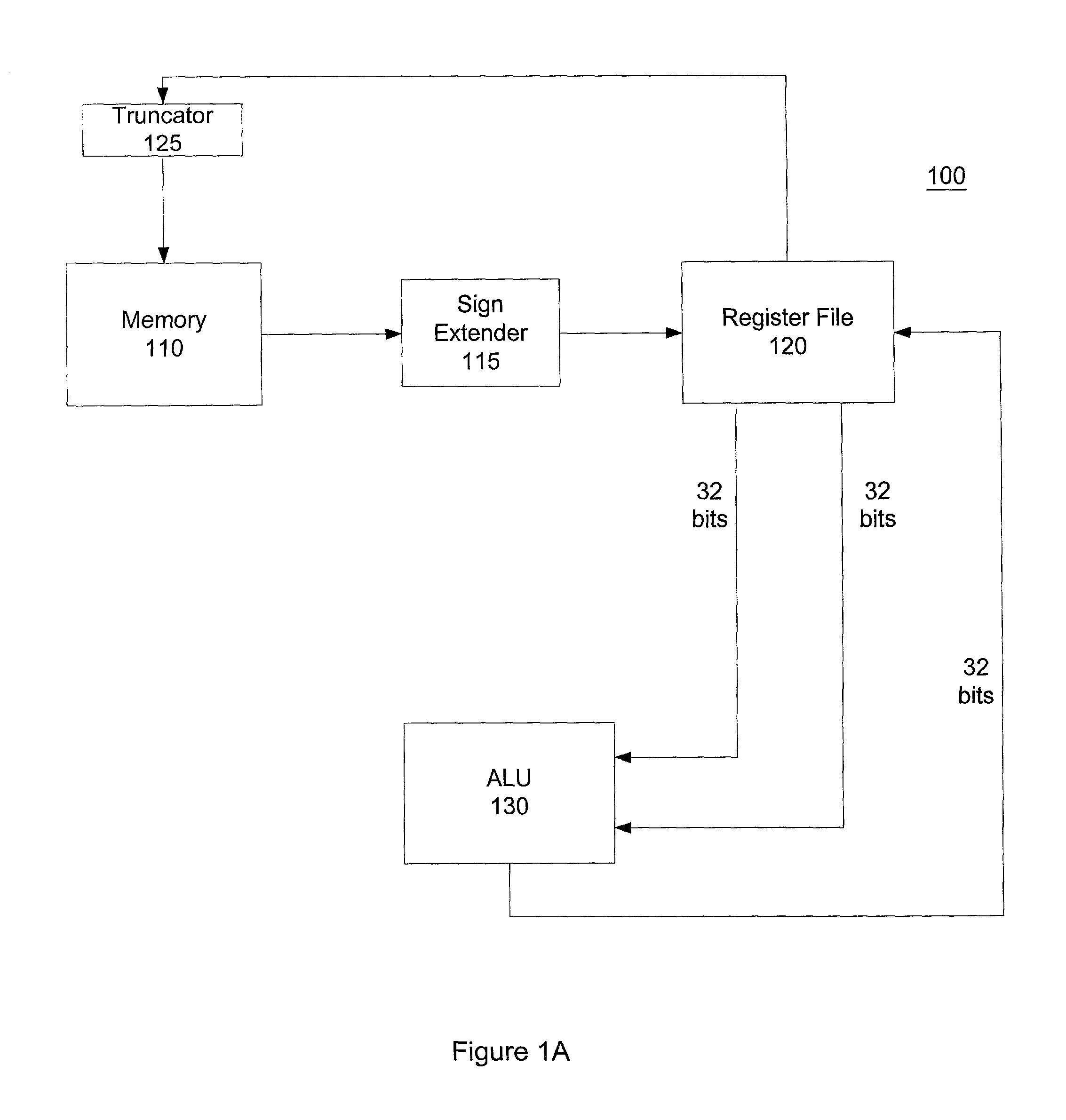

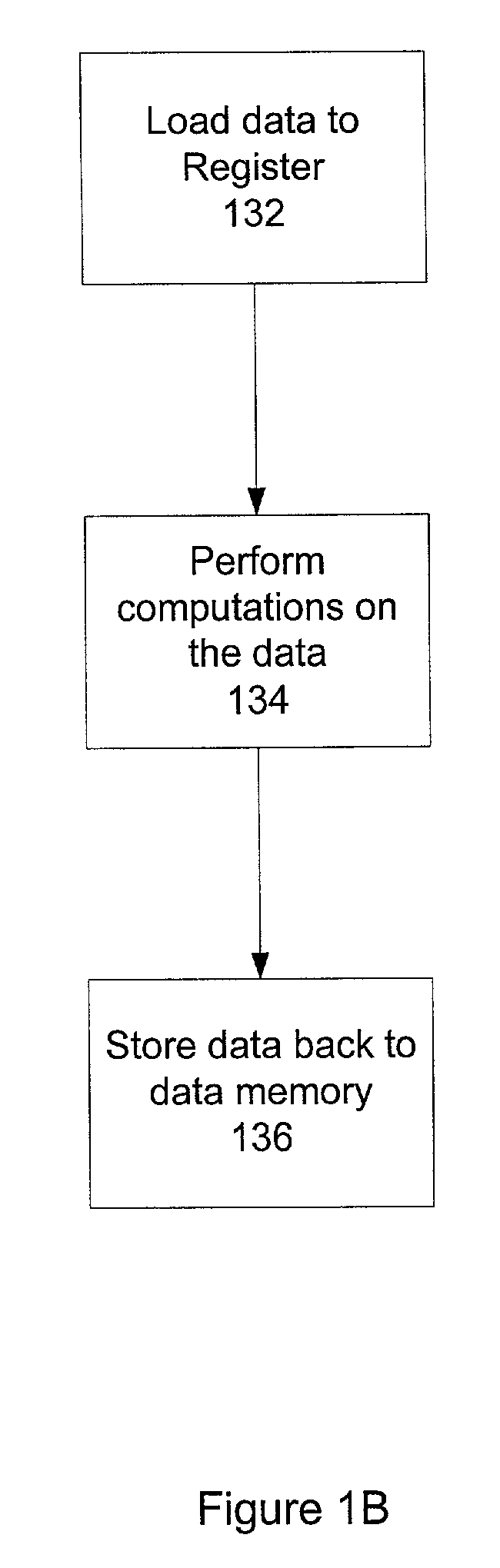

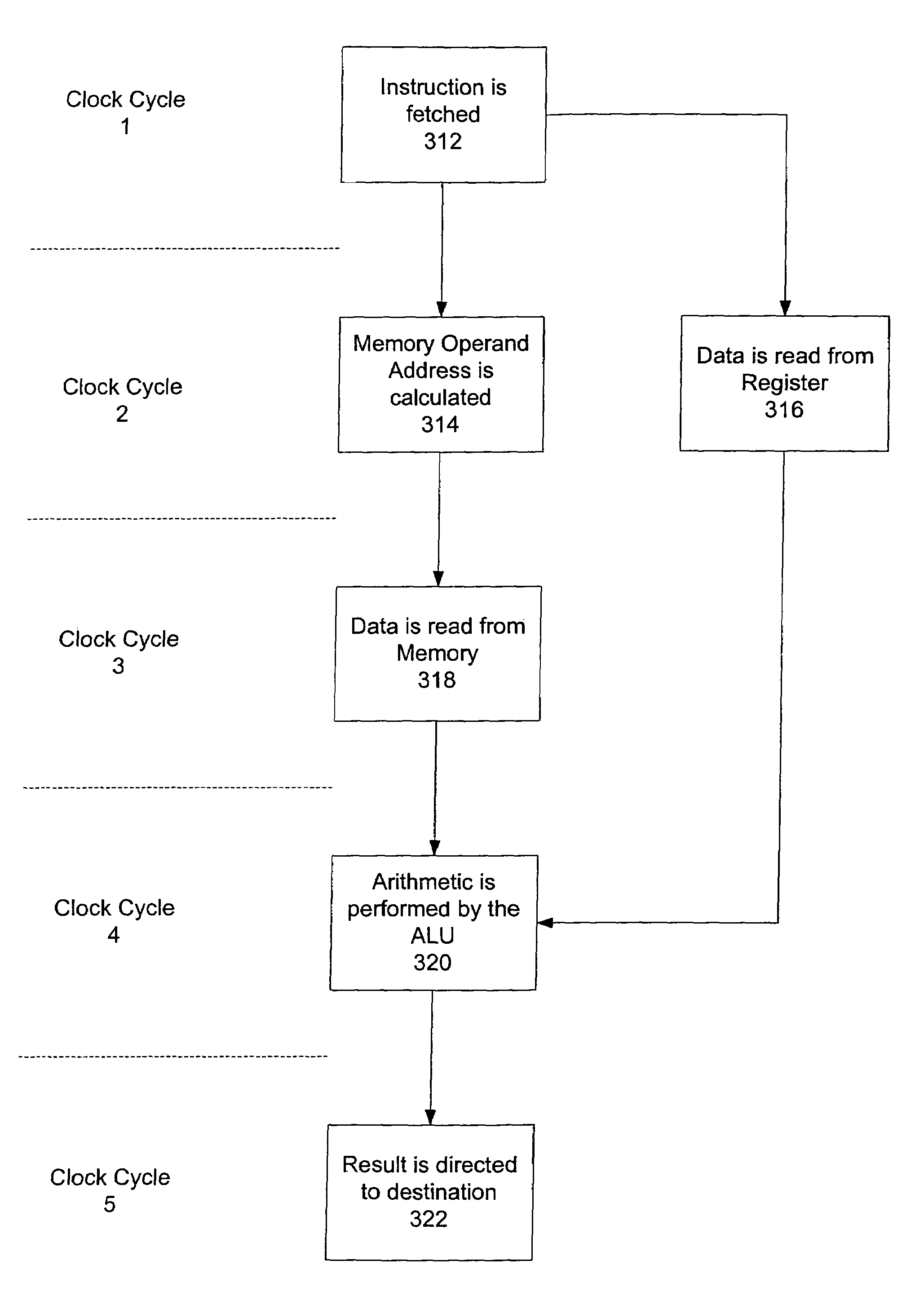

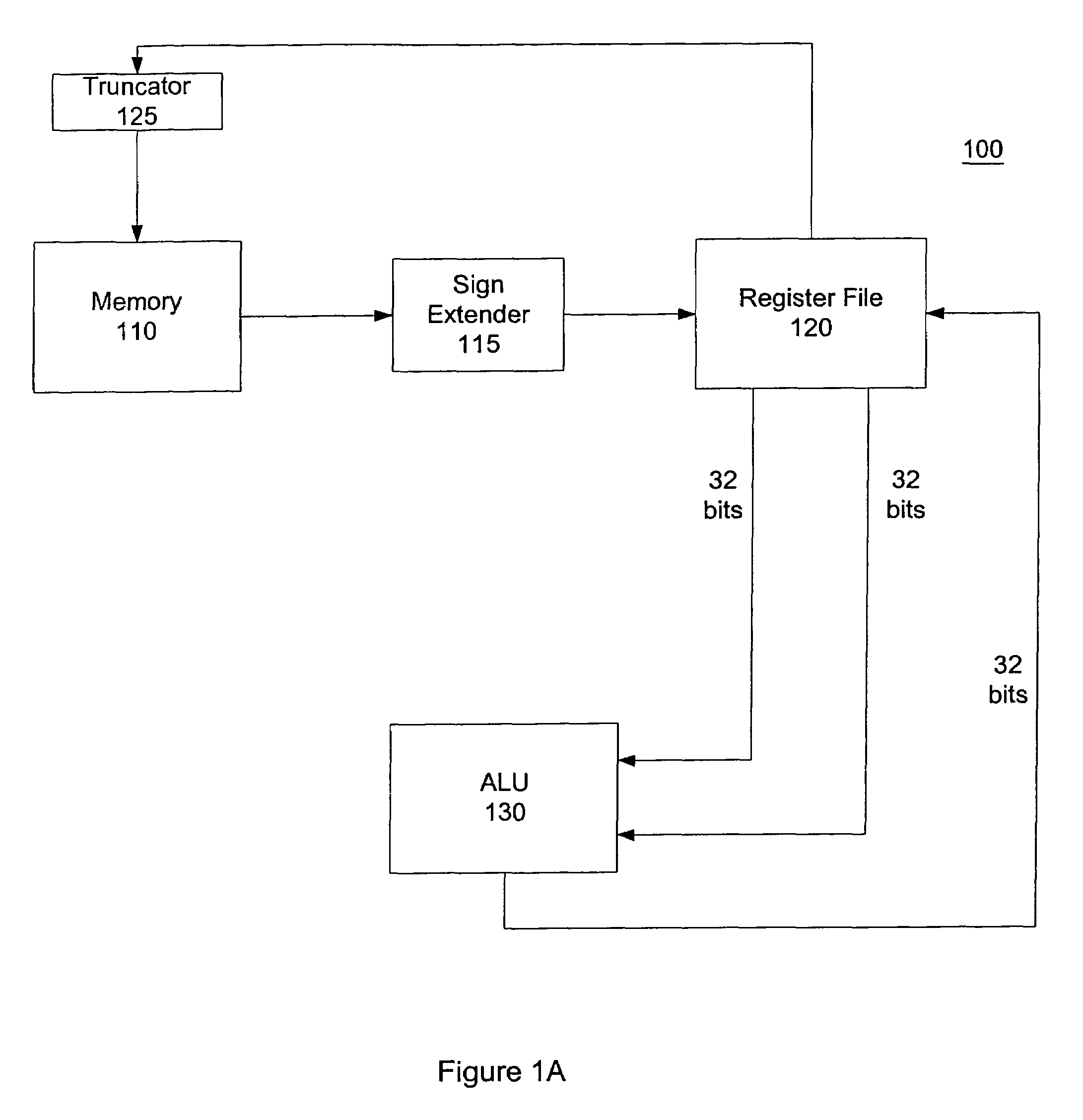

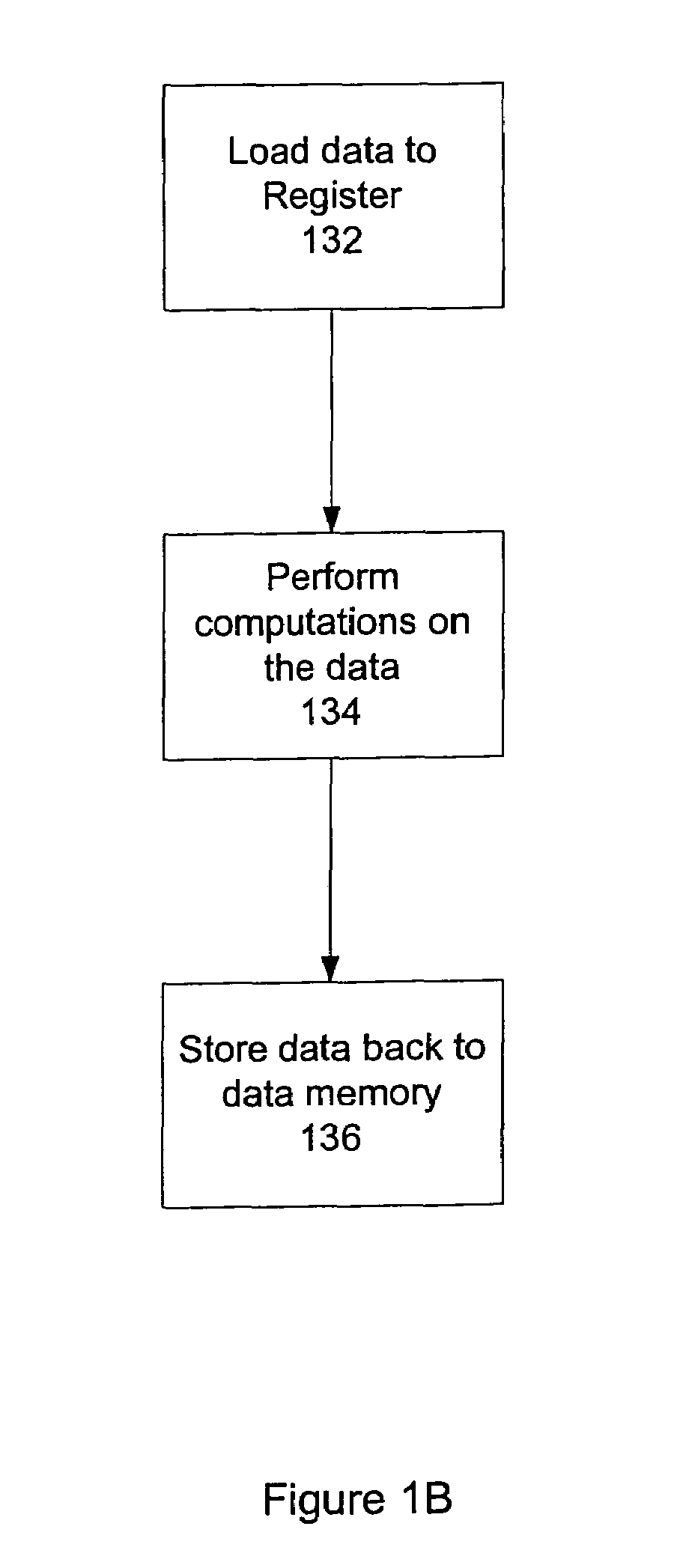

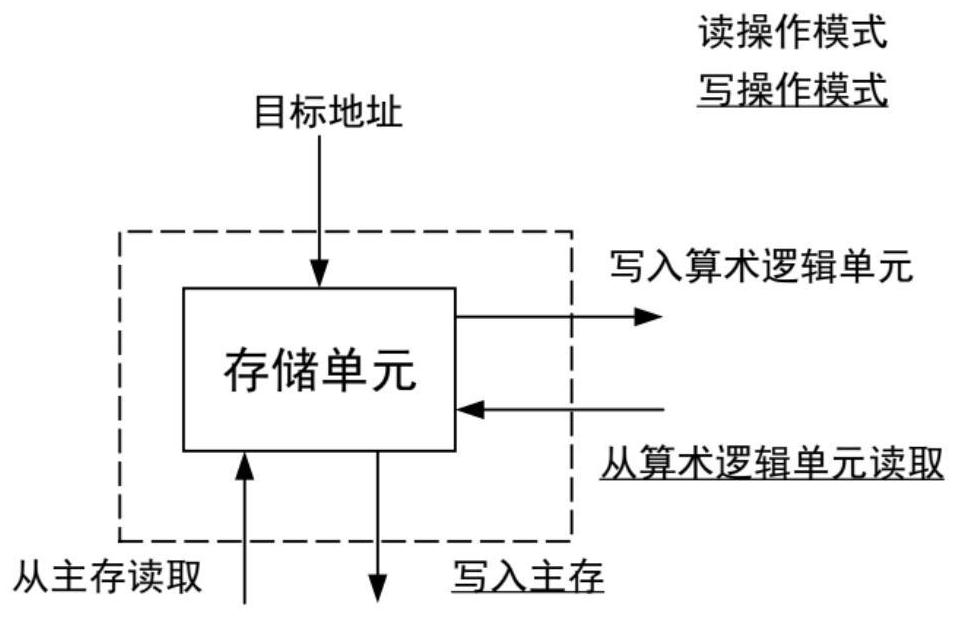

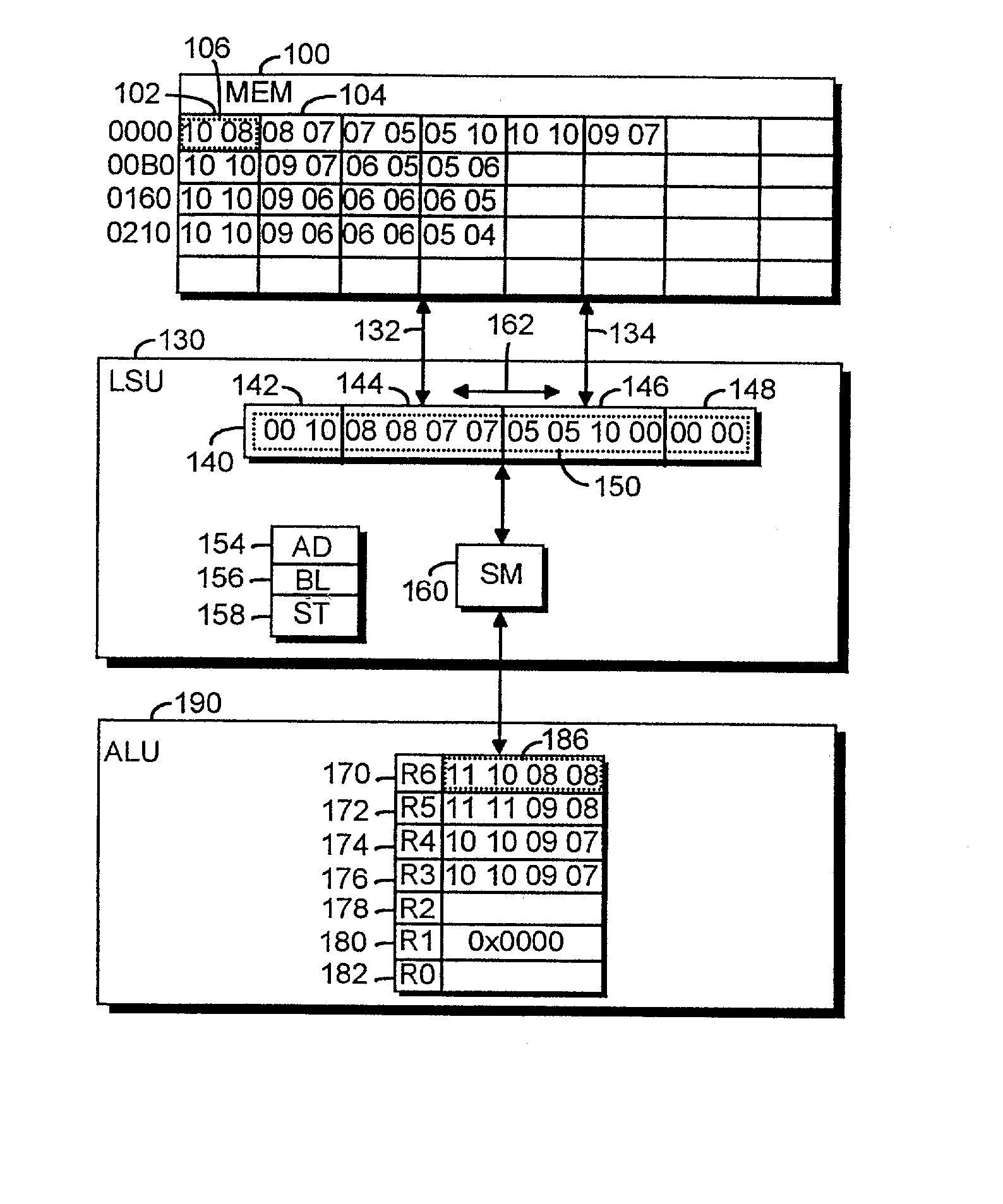

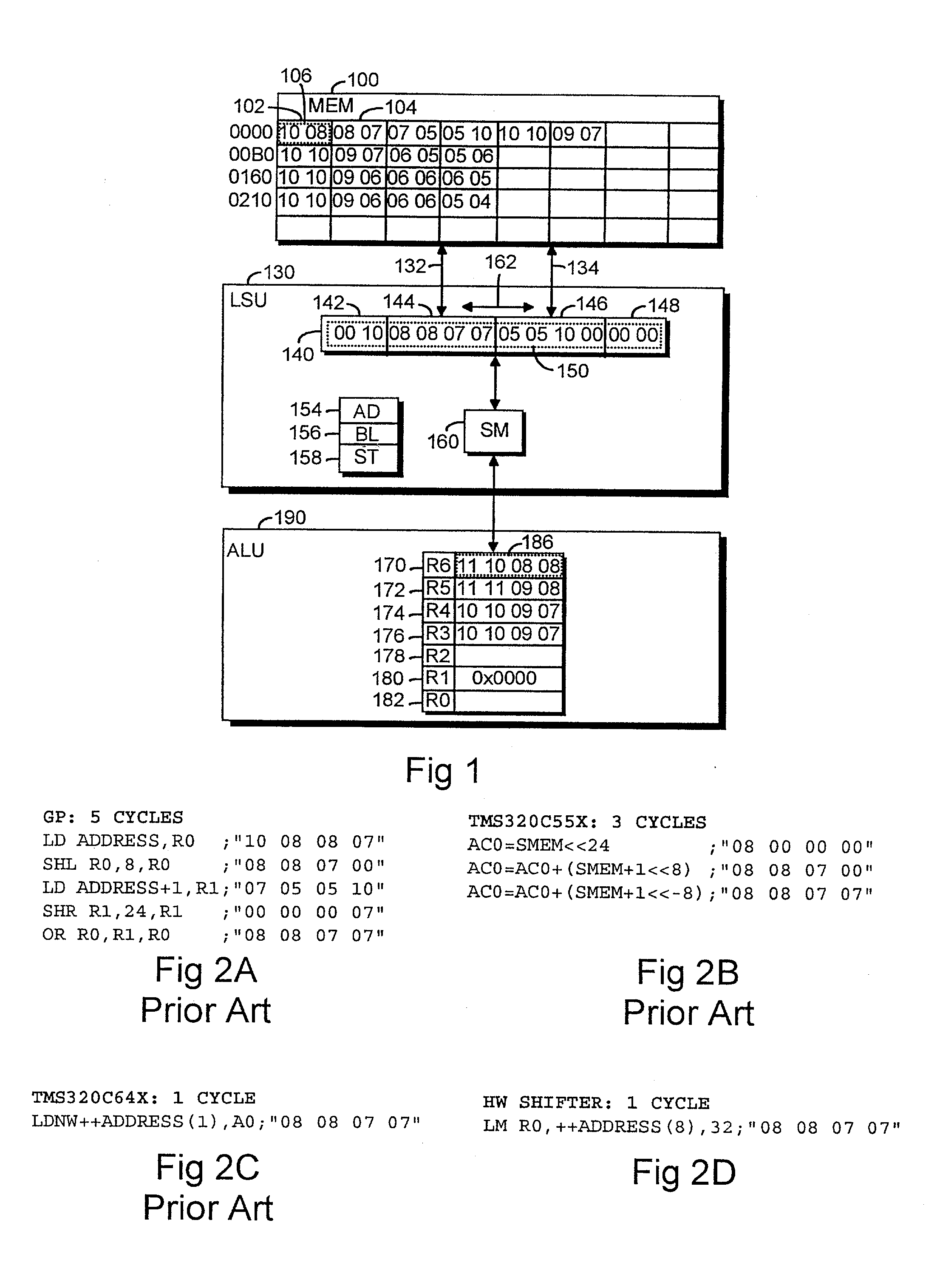

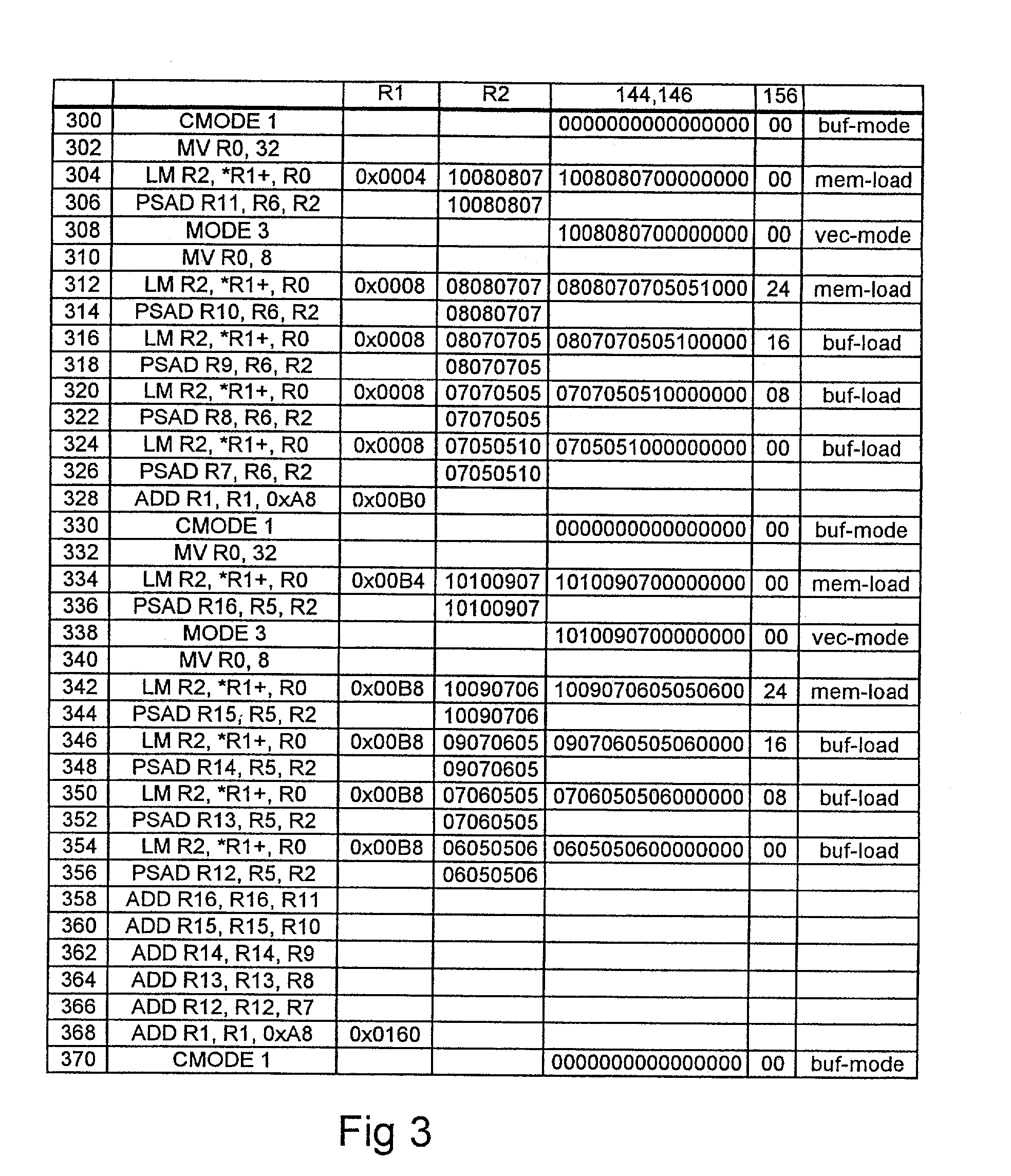

Fixed length memory to memory arithmetic and architecture for a communications embedded processor system

Status: Inactive | Publication: US7047396B1 | Tags: Fast pipeline processing; Improve performance; Instruction analysis; General purpose stored program computer; Memory processing; Computer architecture

A method and system for fixed-length memory-to-memory processing using fixed-length instructions. The present invention further provides a method and system in which the memory operand width is independent of the ALU width: arithmetic and register data are 32 bits, but memory operands vary in size, with the size specified by the instruction. A single fixed-length instruction may specify multiple memory operands. Because the instruction set is small and simple, the implementation costs less than traditional processors. Additional addressing modes allow more efficient code. Semaphores are implemented using a single bit, and shift-and-merge instructions are used to access data across word boundaries.

Owner:MAYFIELD XI +8
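
The abstract says shift-and-merge instructions access data that straddles a word boundary but does not define their exact semantics. The Python model below is a sketch under stated assumptions (32-bit little-endian words, a 4-byte read); `load_aligned` and `load_unaligned` are hypothetical helpers, not the patented instructions.

```python
from typing import Sequence

MASK32 = 0xFFFFFFFF


def load_aligned(memory: Sequence[int], byte_addr: int) -> int:
    """Load one 32-bit word; `byte_addr` must be a multiple of 4."""
    if byte_addr % 4:
        raise ValueError("aligned loads only")
    return memory[byte_addr // 4]


def load_unaligned(memory: Sequence[int], byte_addr: int) -> int:
    """Read 4 bytes at any byte address with two aligned loads plus a
    shift-and-merge (little-endian byte order assumed)."""
    offset = byte_addr % 4
    base = byte_addr - offset
    low_word = load_aligned(memory, base)
    if offset == 0:
        return low_word
    high_word = load_aligned(memory, base + 4)
    shift = 8 * offset
    # Bytes above `offset` in the low word move down; the first bytes of the
    # high word fill the vacated top positions.
    return ((low_word >> shift) | (high_word << (32 - shift))) & MASK32


words = [0x44332211, 0x88776655]  # byte 0x11 at address 0, 0x22 at 1, and so on
print(hex(load_unaligned(words, 1)))  # 0x55443322
```

Two aligned loads and one merge replace a trap or a byte-by-byte loop, which is the usual motivation for providing such instructions.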

Memory centric computing

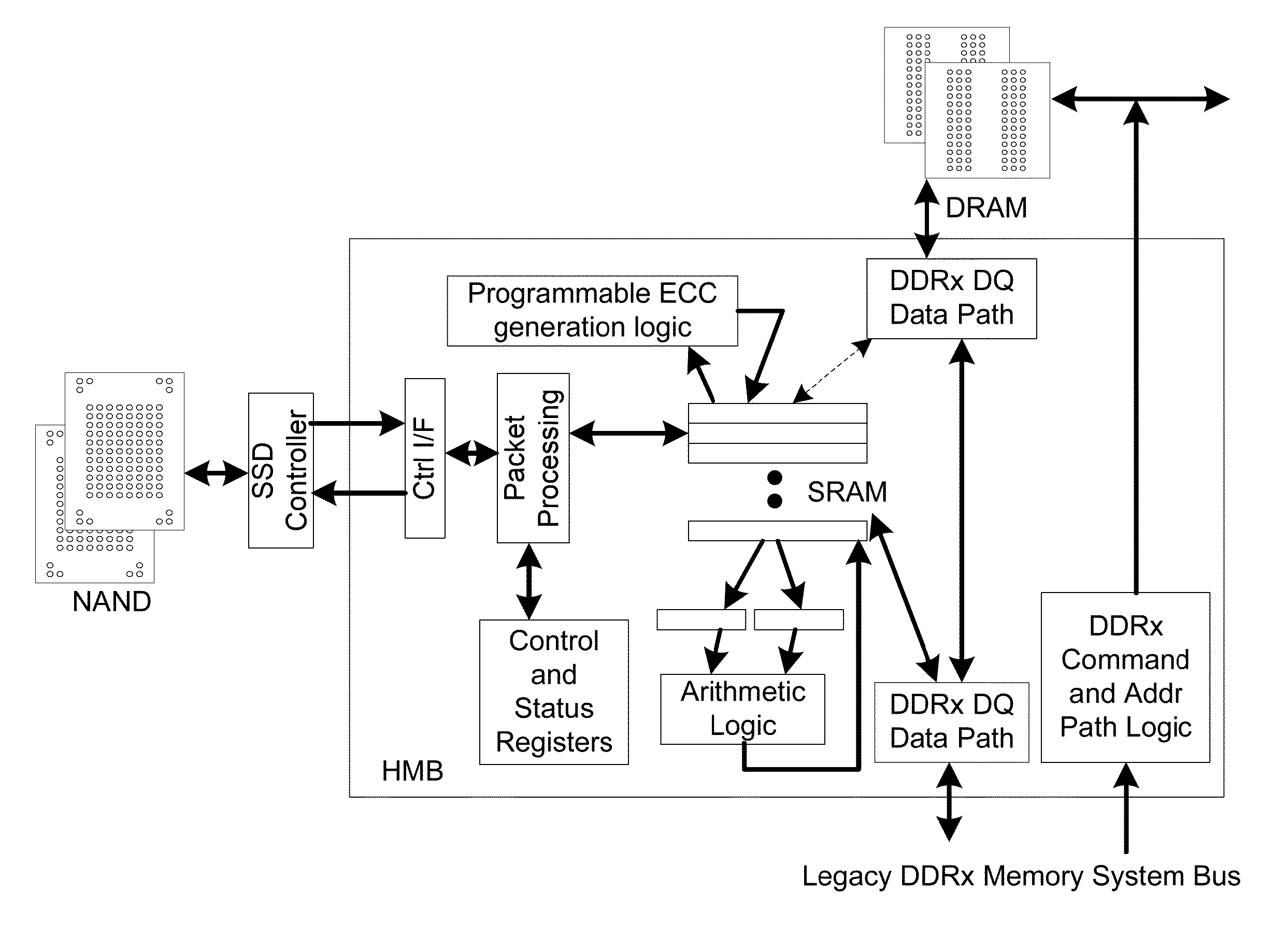

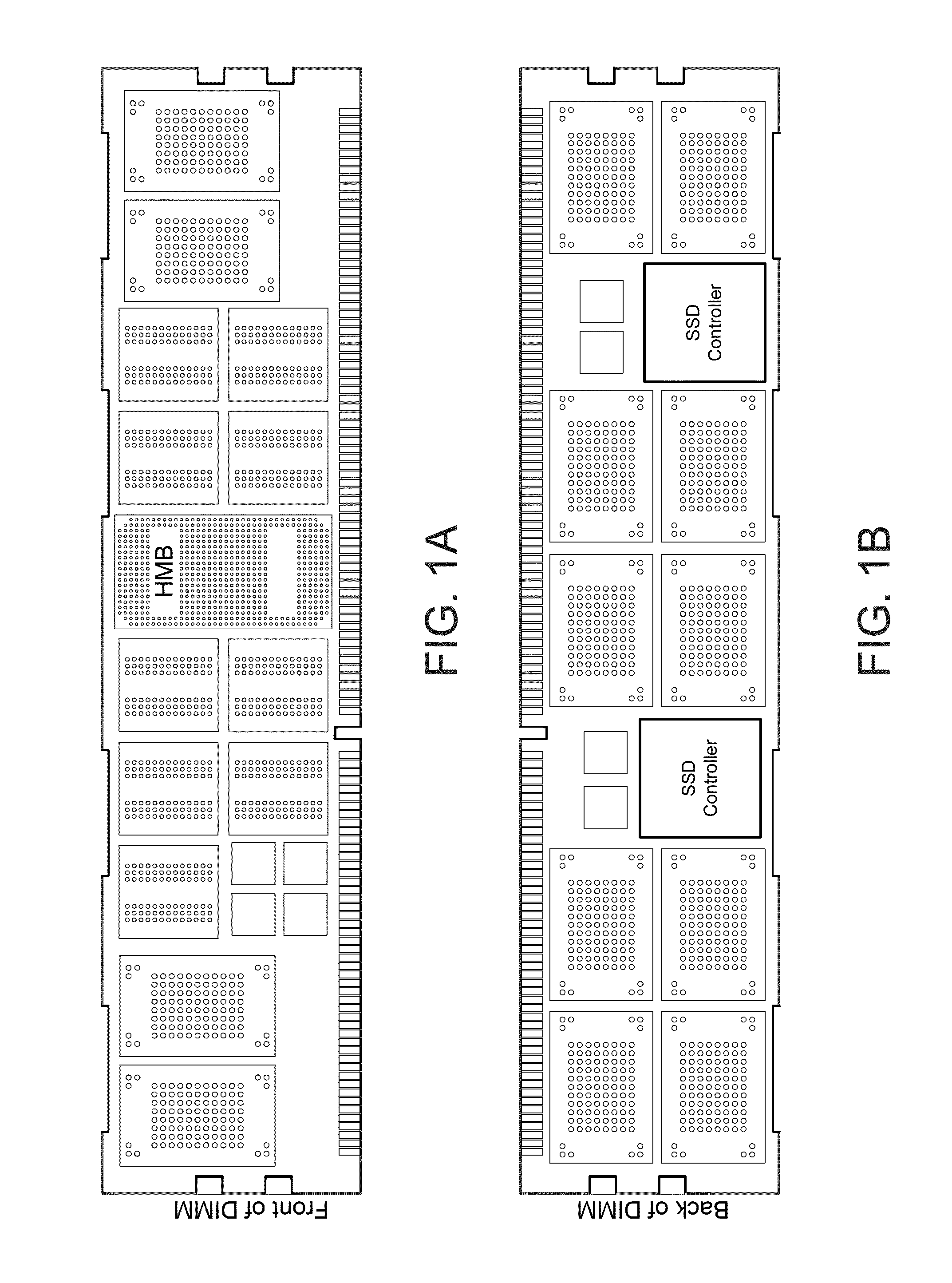

A hybrid memory system. The system can include a processor coupled to a hybrid memory buffer (HMB), which is in turn coupled to a plurality of DRAM modules and a plurality of flash memory modules. The HMB can include a Memory Storage Controller (MSC) module and a Near-Memory-Processing (NMP) module coupled by a SerDes (serializer/deserializer) interface. The system can use a hybrid (mixed-memory-type) architecture that supports low-latency DRAM devices and low-cost NAND flash devices within the same memory subsystem of an industry-standard computer system.

Owner:RAMBUS INC

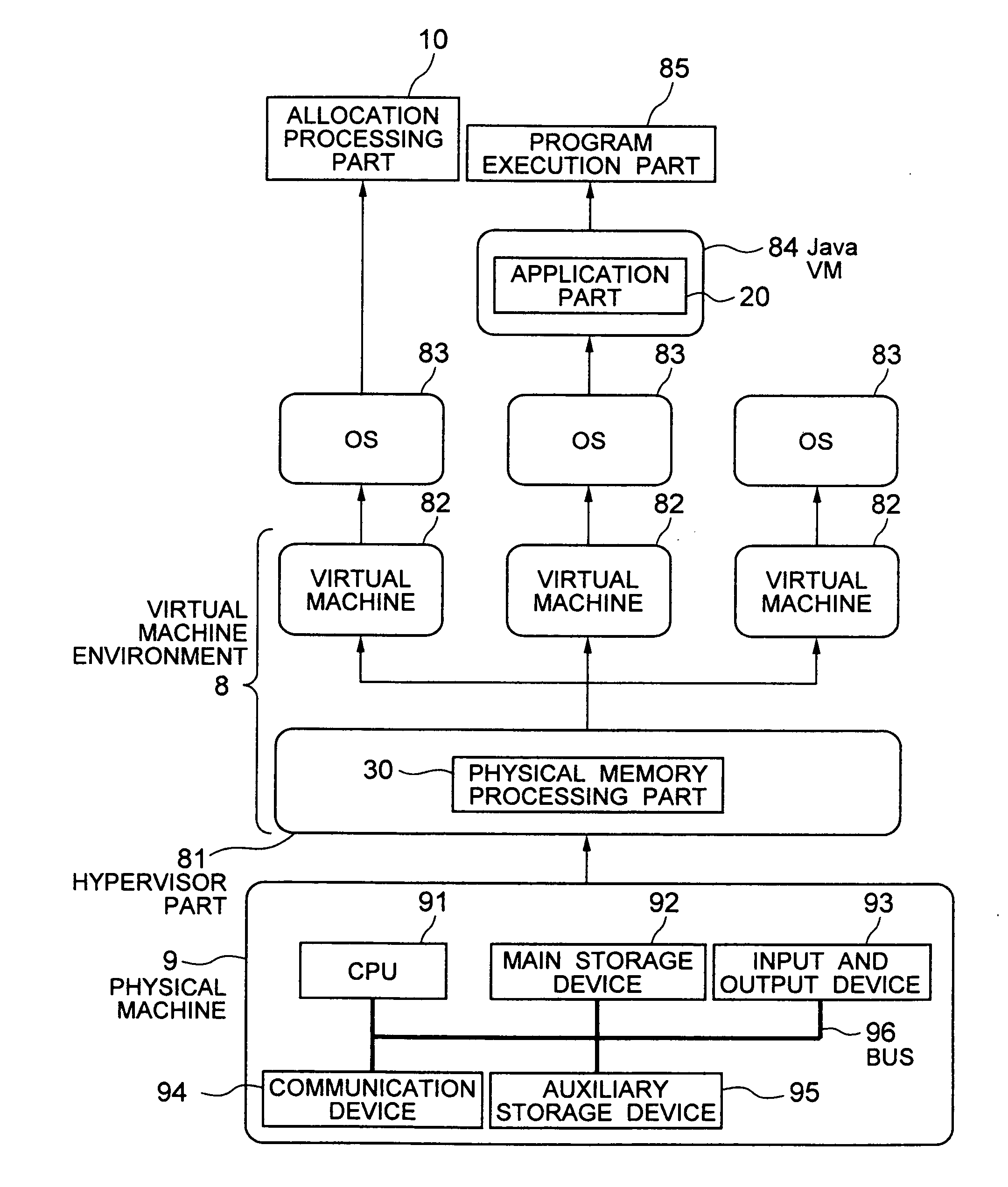

Memory management method, memory management program, and memory management device

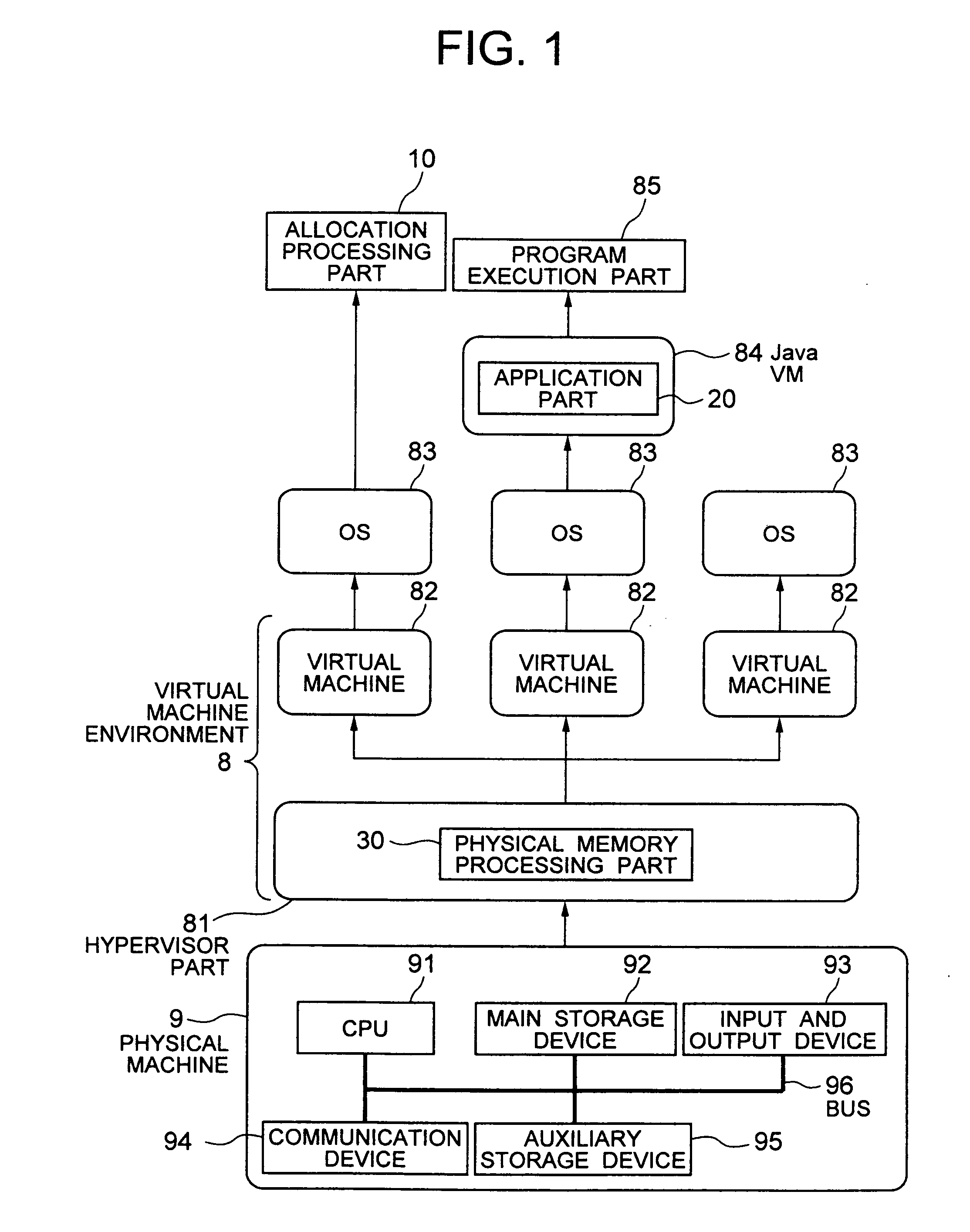

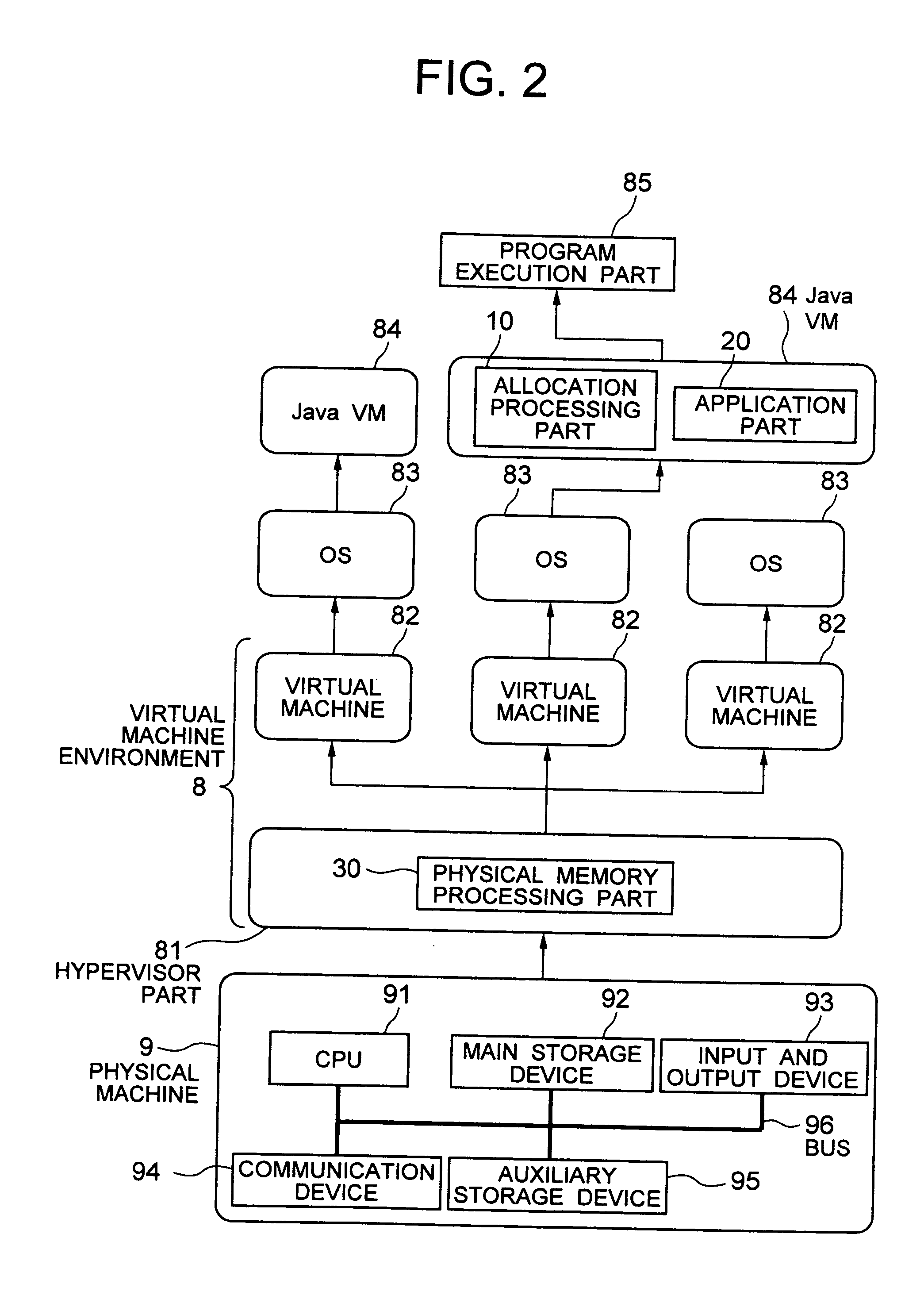

Status: Inactive | Publication: US20100274947A1 | Tags: Improve utilization efficiency; Memory addressing/allocation/relocation; Computer security arrangements; Memory processing; Parallel computing

In a virtual machine system built from multiple virtual machines, the method raises the utilization efficiency of physical memory. A virtual machine environment, consisting of one or more virtual machines and a hypervisor part that runs them, is built on a physical machine. Each virtual machine runs an allocation processing part and an application part. The application part has a physical memory processing part allocate unallocated physical memory to a memory area. When unallocated physical memory becomes scarce, the allocation processing part instructs each application part to release, from the memory areas it uses, memory pages that have physical memory assigned but are not in use.

Owner:HITACHI LTD
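
The abstract spreads this behavior across a hypervisor part, an allocation processing part, application parts, and a physical memory processing part. The Python sketch below collapses those roles into two hypothetical classes and models "scarce" as a low-watermark count of free pages; the names, the watermark, and the page-number representation are all assumptions made for illustration.

```python
from dataclasses import dataclass, field
from typing import List, Set


@dataclass
class AppMemoryArea:
    """An application part's memory area inside one virtual machine (simplified)."""
    assigned: Set[int] = field(default_factory=set)  # pages with physical memory assigned
    in_use: Set[int] = field(default_factory=set)    # pages the application actually uses

    def release_unused(self) -> Set[int]:
        """Give back pages that have physical memory assigned but are not used."""
        idle = self.assigned - self.in_use
        self.assigned -= idle
        return idle


class AllocationProcessor:
    def __init__(self, free_pages: List[int], areas: List[AppMemoryArea], low_watermark: int) -> None:
        self.free_pages = list(free_pages)  # unallocated physical pages
        self.areas = areas
        self.low_watermark = low_watermark  # assumed threshold for "scarce"

    def allocate(self, area: AppMemoryArea) -> int:
        if len(self.free_pages) <= self.low_watermark:
            # Unallocated memory is scarce: ask every application part to release idle pages.
            for other in self.areas:
                self.free_pages.extend(sorted(other.release_unused()))
        if not self.free_pages:
            raise MemoryError("no unallocated physical memory")
        page = self.free_pages.pop()
        area.assigned.add(page)
        area.in_use.add(page)
        return page


busy = AppMemoryArea(assigned={1, 2, 3}, in_use={1})
newcomer = AppMemoryArea()
proc = AllocationProcessor(free_pages=[], areas=[busy, newcomer], low_watermark=0)
print(proc.allocate(newcomer), busy.assigned)  # 3 {1}
```

Pages 2 and 3 were backed by physical memory but unused, so they are reclaimed for the new request instead of failing the allocation.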

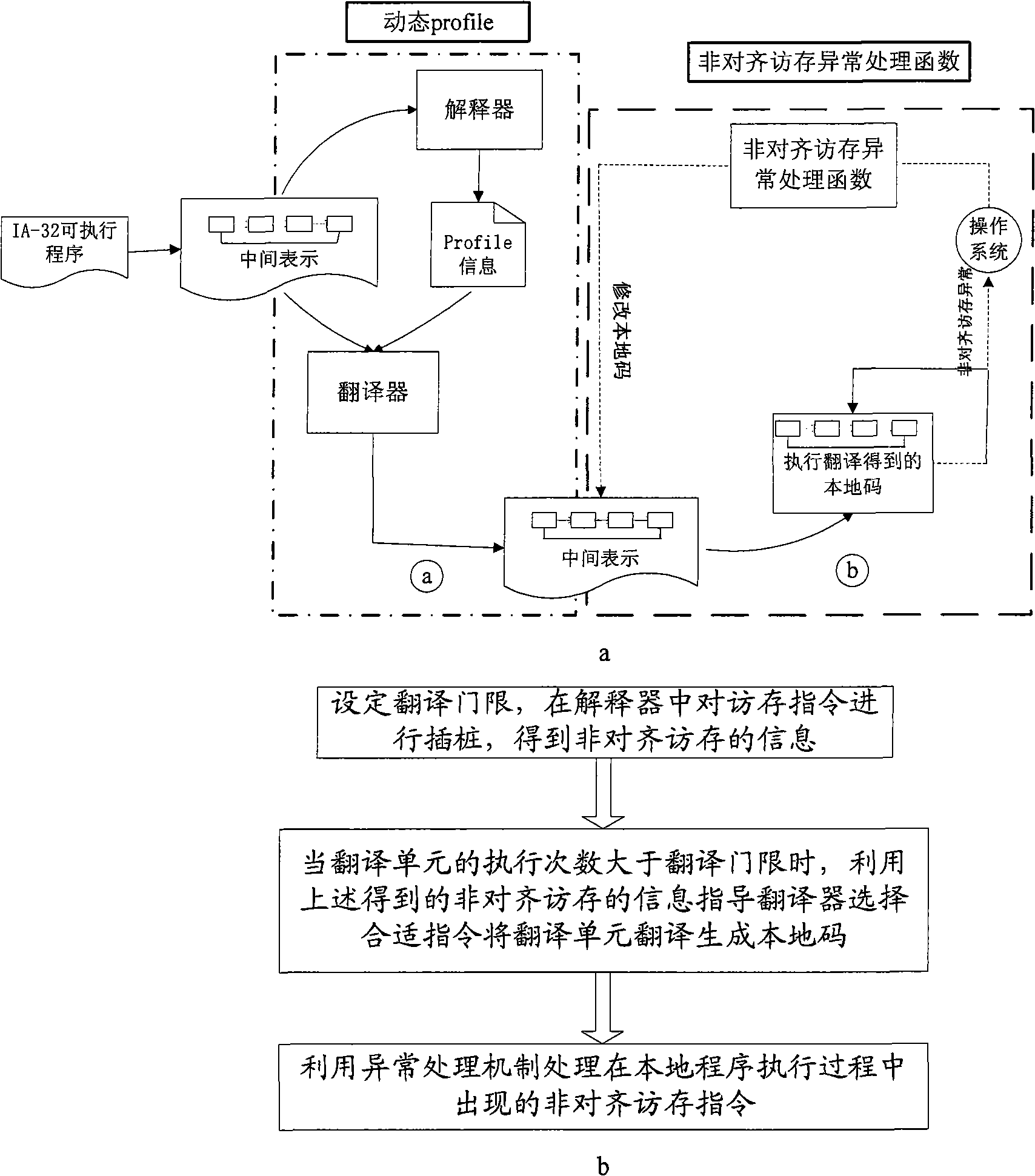

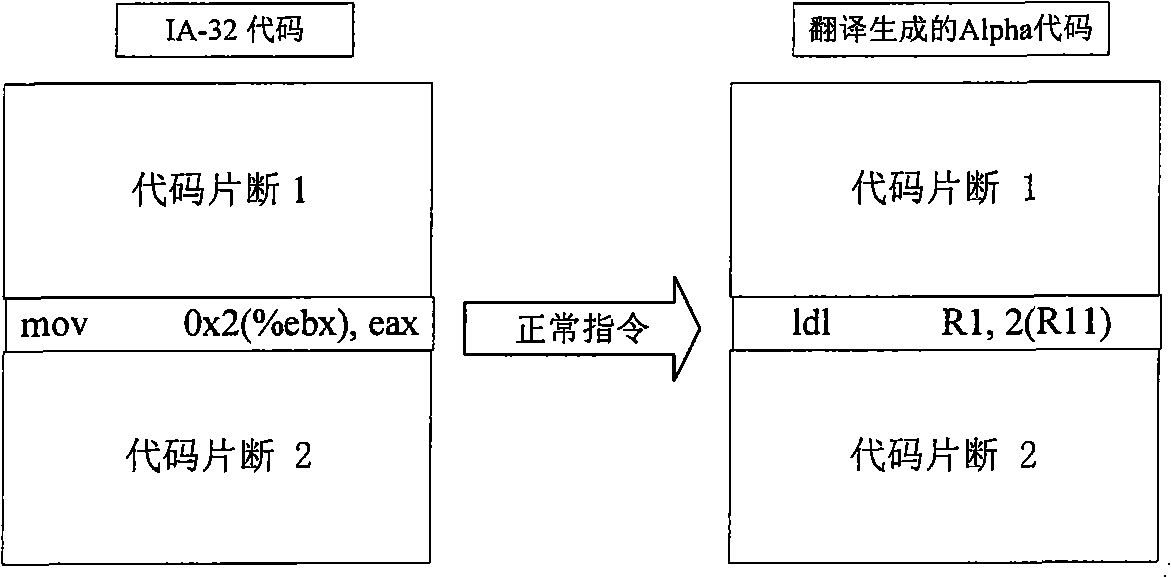

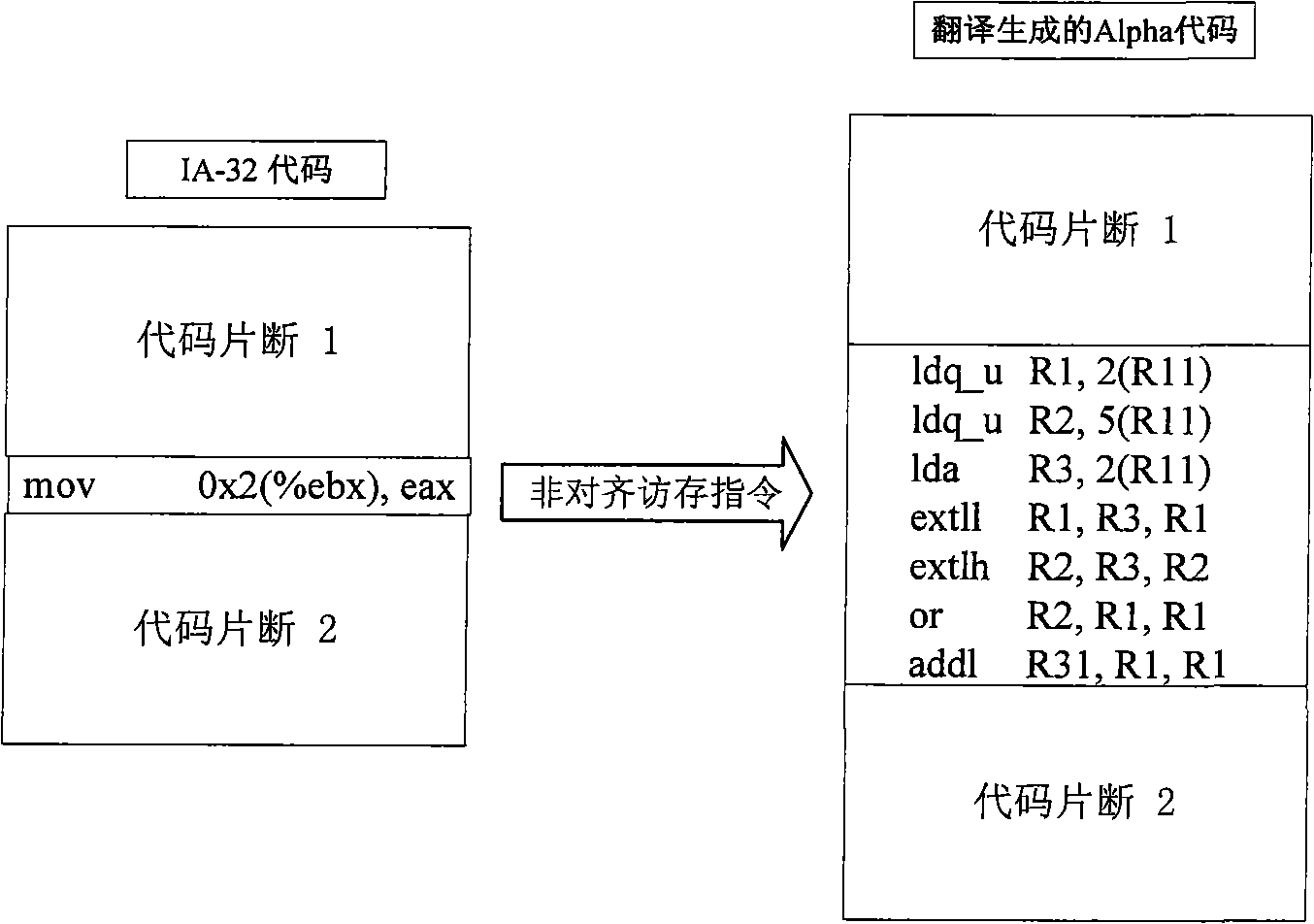

Non-aligned memory access processing method

Status: Active | Publication: CN101299192A | Tags: Reduce the number of times; Improve efficiency; Program control; Memory systems; Handling Code; Memory processing

A non-aligned memory access processing method includes: setting a translation threshold according to the target architecture; instrumenting the memory access instructions in the translator to obtain information about non-aligned memory access instructions; when the execution count of a translation unit exceeds the translation threshold, using the non-aligned access information to guide the translator in selecting suitable instructions when translating the translation unit into native code; and, for non-aligned memory access instructions not discovered by the translator's instrumentation, generating a corresponding non-aligned access instruction sequence through the exception handling mechanism, inserting it at the exception handling address, and embedding it in the executing code. The method greatly reduces the number of non-aligned memory access exceptions raised in a binary translator and improves the translator's efficiency. It also better handles non-aligned access exceptions in applications whose code behavior varies with different input sets, effectively improving the operating efficiency of the binary translation system.

Owner:INST OF COMPUTING TECH CHINESE ACAD OF SCI

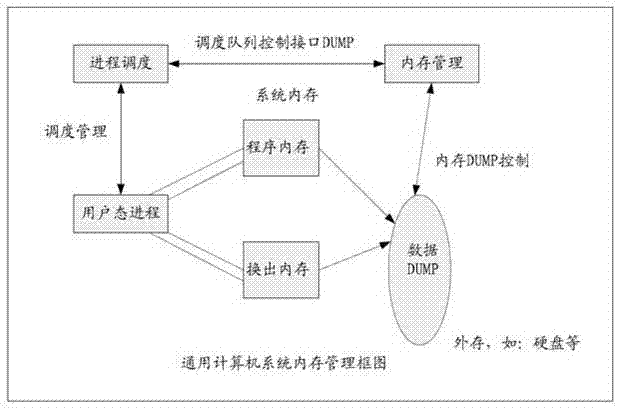

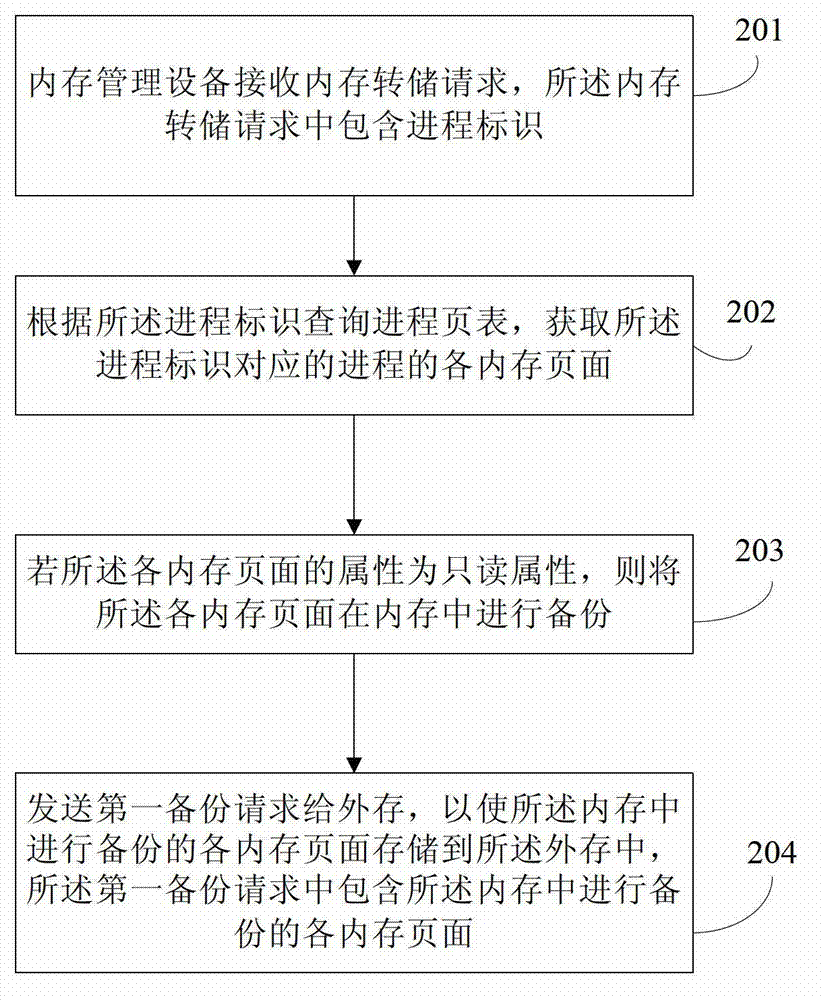

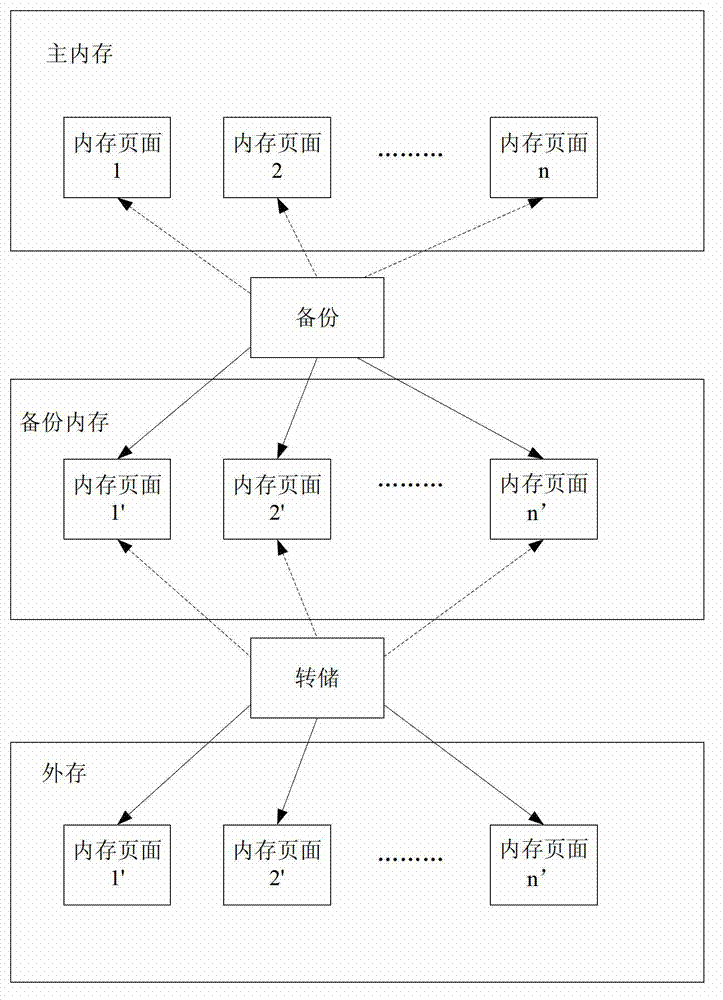

Memory processing method and memory management equipment

Status: Inactive | Publication: CN102831069A | Tags: Ensure consistency; Memory addressing/allocation/relocation; Redundant operation error correction; Memory processing; In-Memory Processing

The embodiment of the invention discloses a memory processing method and memory management equipment. The method comprises: the memory management equipment receives a memory dump request containing a process identifier (PID), where the memory pages of the process identified by the PID are the pages to be dumped; querying the process page table according to the PID to obtain that process's memory pages; if the memory pages have the read-only attribute, backing them up in memory; and sending a first backup request, which contains the backed-up memory pages, to external memory so that the external memory copies the backup pages into itself. The method ensures data consistency while performing an online memory dump.

Owner:HUAWEI TECH CO LTD

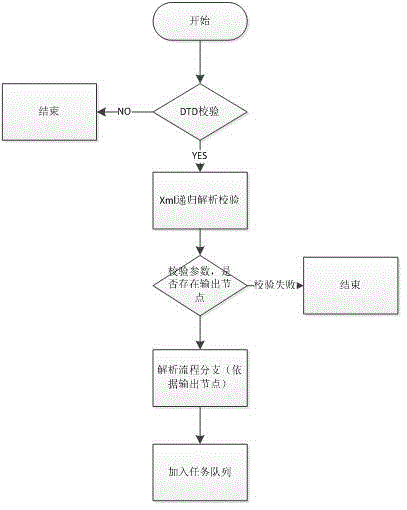

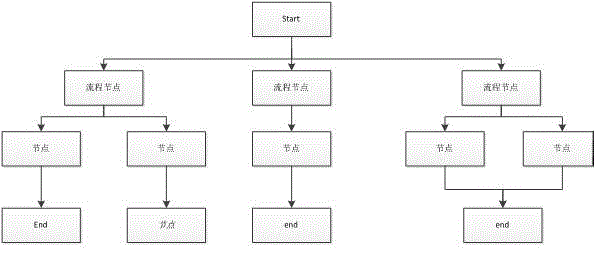

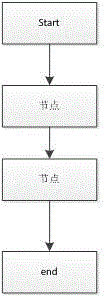

Big data process modeling analysis engine

Status: Active | Publication: CN105550268A | Tags: Efficient and integrated process; Database management systems; Special data processing applications; Memory processing; Data modeling

The invention discloses a big data process modeling analysis engine comprising an interface layer, an application logic layer, a data analysis algorithm layer, and a platform layer. The interface layer is used to perform data analysis modeling operations during the analytical processing of massive data, producing a data analysis model; a task scheduling layer parses the data analysis model and retrieves the corresponding algorithm package to build an executable data analysis task; and the platform layer provides computing and storage resources to execute the task and obtain the result. Based on the Spark design concept, the engine analyzes the user's data analysis operation steps and workflow after the user performs process-based data modeling operations, then invokes Spark according to that workflow, processes all of the user's analysis steps in memory, and finally outputs the result, achieving an efficient, integrated process.

Owner:JIANGSU DAWN INFORMATION TECH CO LTD

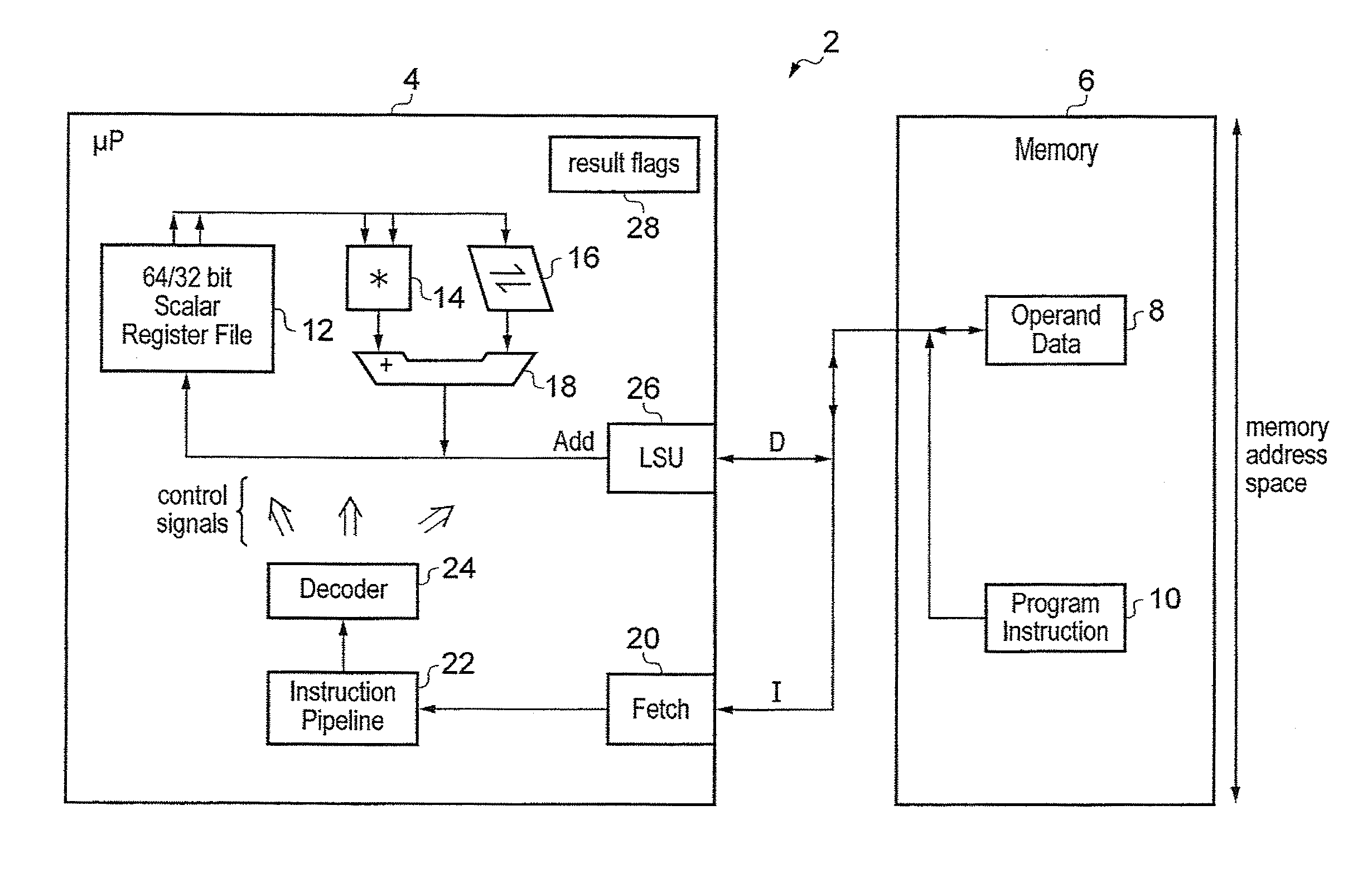

Mixed size data processing operation

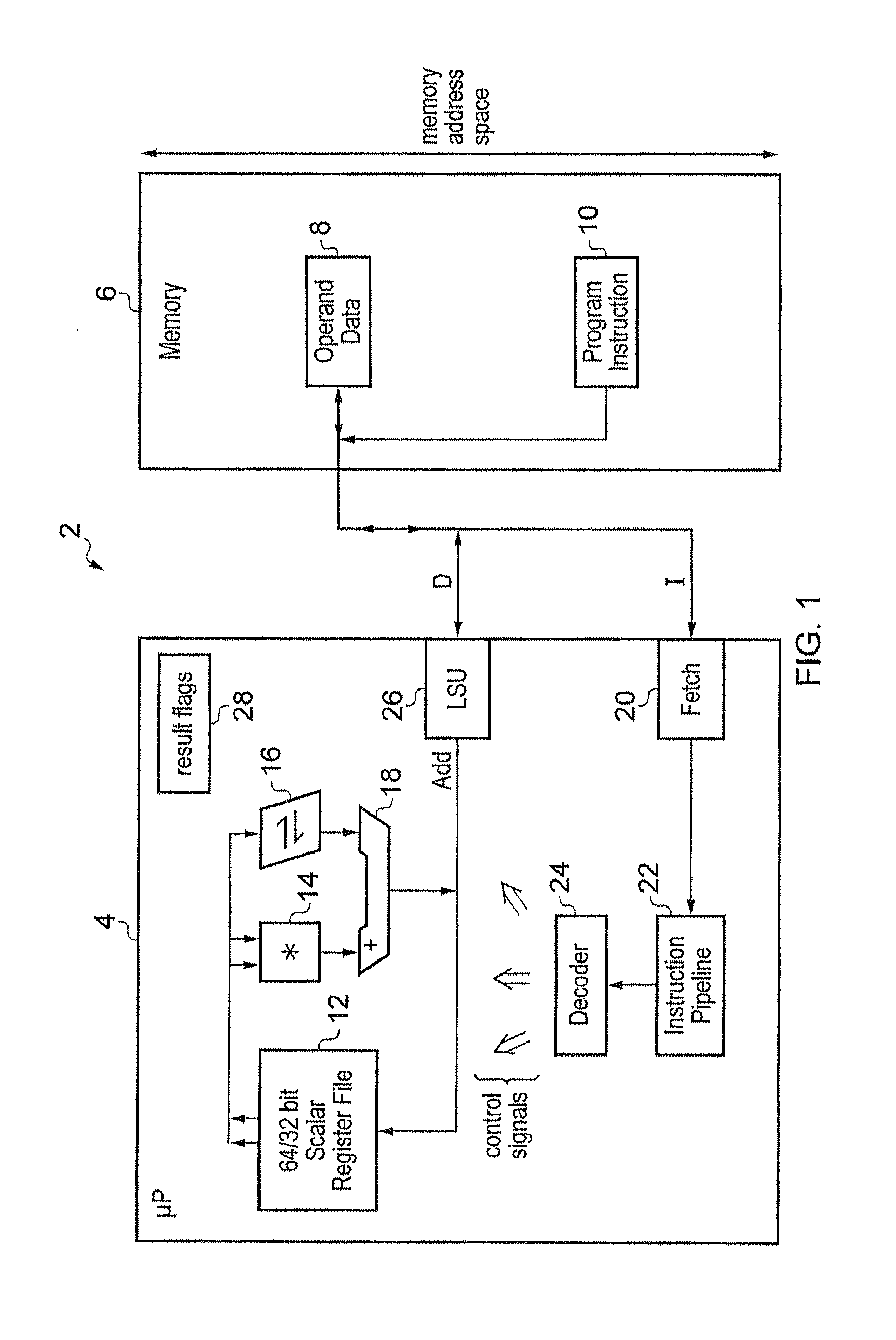

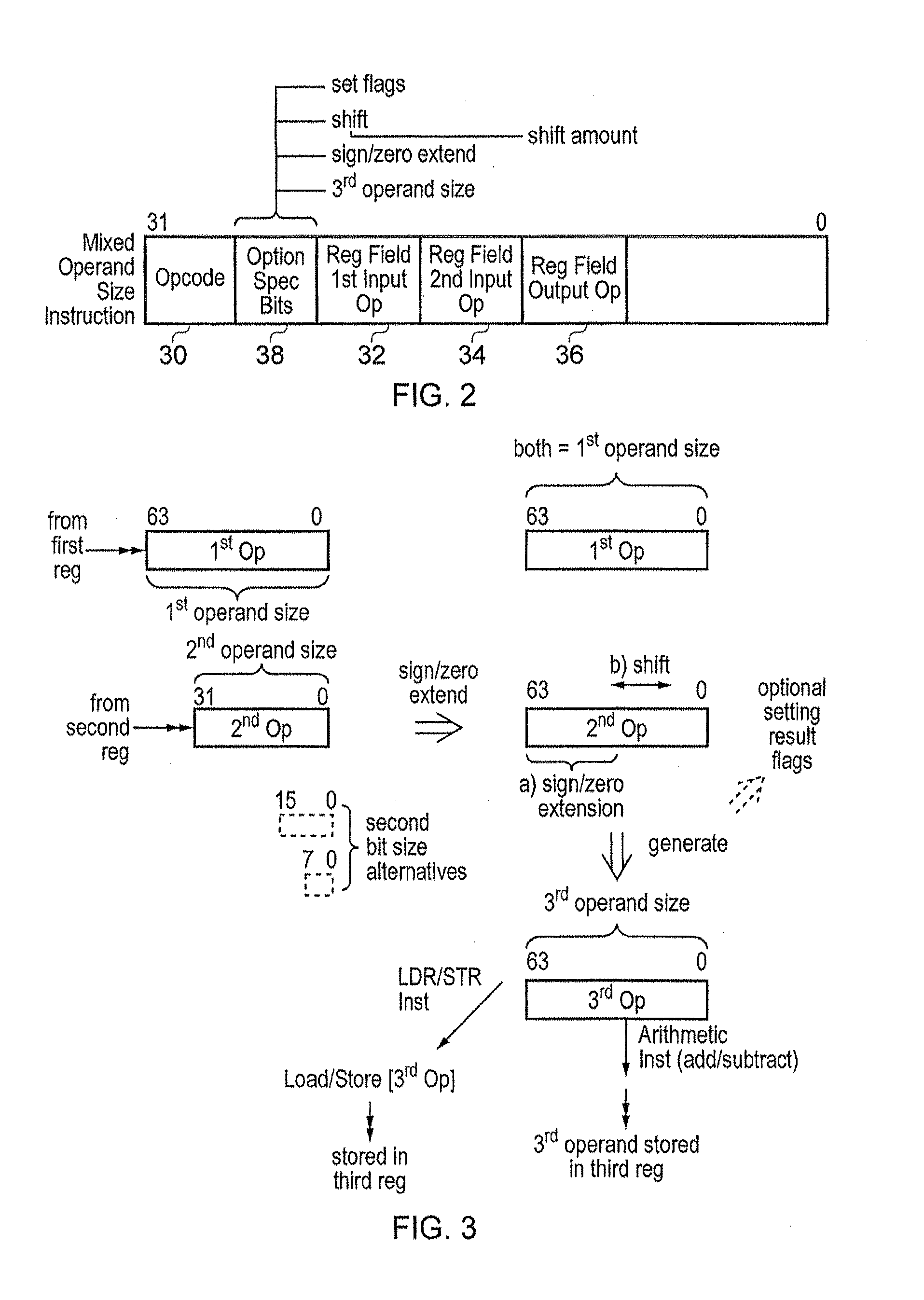

Status: Active | Publication: US20120233444A1 | Tags: Efficiency advantage; Same size; Register arrangements; Digital data processing details; Data processing system; Program instruction

A data processing system 2 includes a processor core 4 and a memory 6. The processor core 4 includes processing circuitry 12, 14, 16, 18, 26 controlled by control signals generated by decoder circuitry 24, which decodes program instructions. The program instructions include mixed operand size instructions (either load/store instructions or arithmetic instructions) that have a first input operand of a first operand size and a second input operand of a second operand size, where the second operand size is smaller than the first. The processing first converts the second operand to the first operand size and then generates a third operand from the first operand and the converted second operand, both now of the first operand size.

Owner:ARM LTD
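
The abstract does not say whether the narrower operand is sign-extended or zero-extended, or which operation produces the third operand. The Python sketch below assumes addition and offers both extensions; `sign_extend` and `mixed_size_add` are hypothetical names.

```python
def sign_extend(value: int, from_bits: int) -> int:
    """Interpret the low `from_bits` bits of `value` as a two's-complement number."""
    value &= (1 << from_bits) - 1
    sign_bit = 1 << (from_bits - 1)
    return (value ^ sign_bit) - sign_bit


def mixed_size_add(op1: int, op1_bits: int, op2: int, op2_bits: int, signed: bool = True) -> int:
    """Widen the smaller second operand to the first operand's size, then add."""
    if op2_bits > op1_bits:
        raise ValueError("second operand must not be wider than the first")
    if signed:
        op2_wide = sign_extend(op2, op2_bits)
    else:
        op2_wide = op2 & ((1 << op2_bits) - 1)  # zero extension
    # The third operand has the first operand's size, so wrap the sum to it.
    return (op1 + op2_wide) & ((1 << op1_bits) - 1)


print(hex(mixed_size_add(0x00000010, 32, 0xFFFF, 16)))                # 0xf (16 + -1)
print(hex(mixed_size_add(0x00000010, 32, 0xFFFF, 16, signed=False)))  # 0x1000f
```

The same 16-bit pattern `0xFFFF` yields different results depending on the extension, which is why a real instruction set encodes the choice in the instruction.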

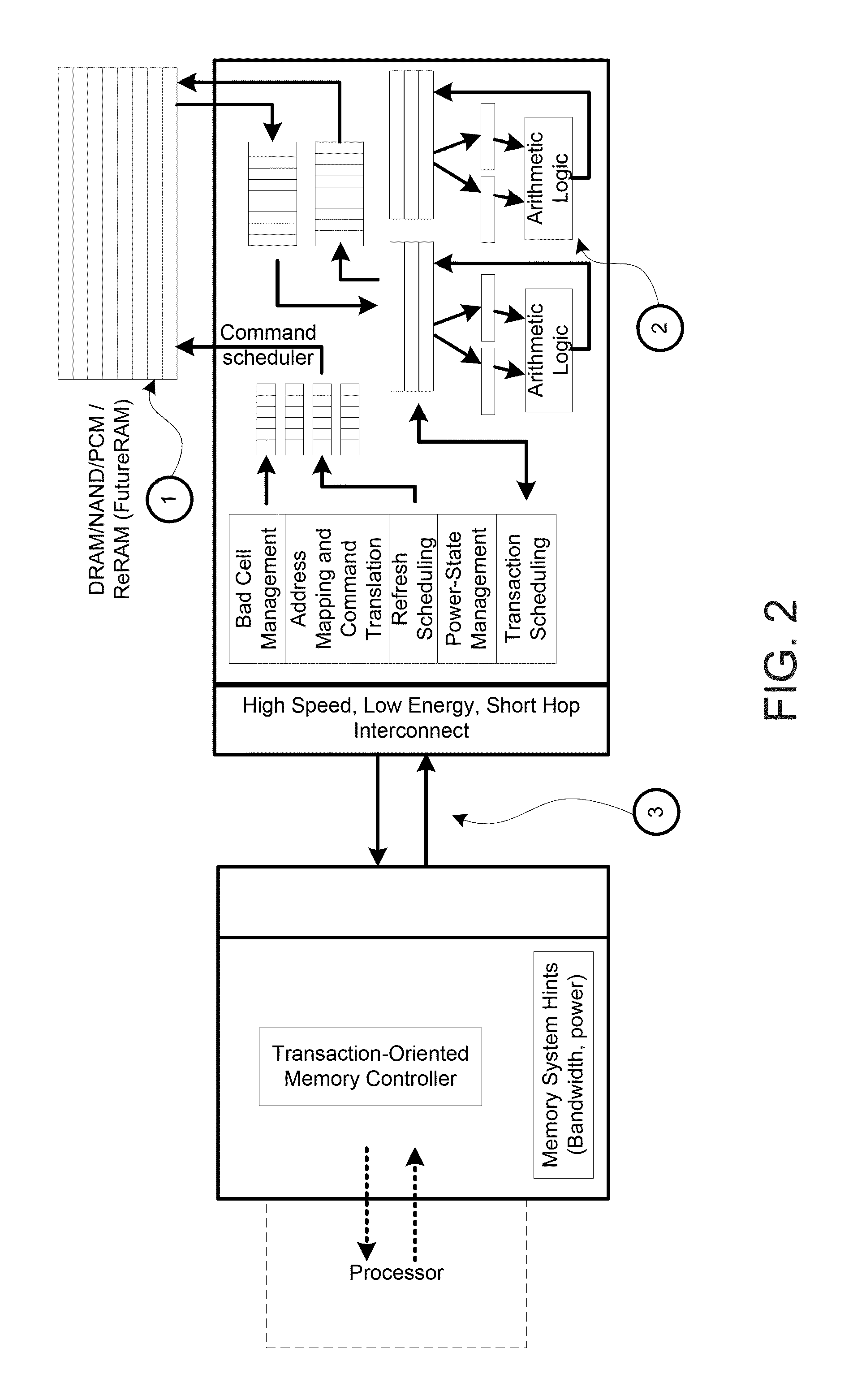

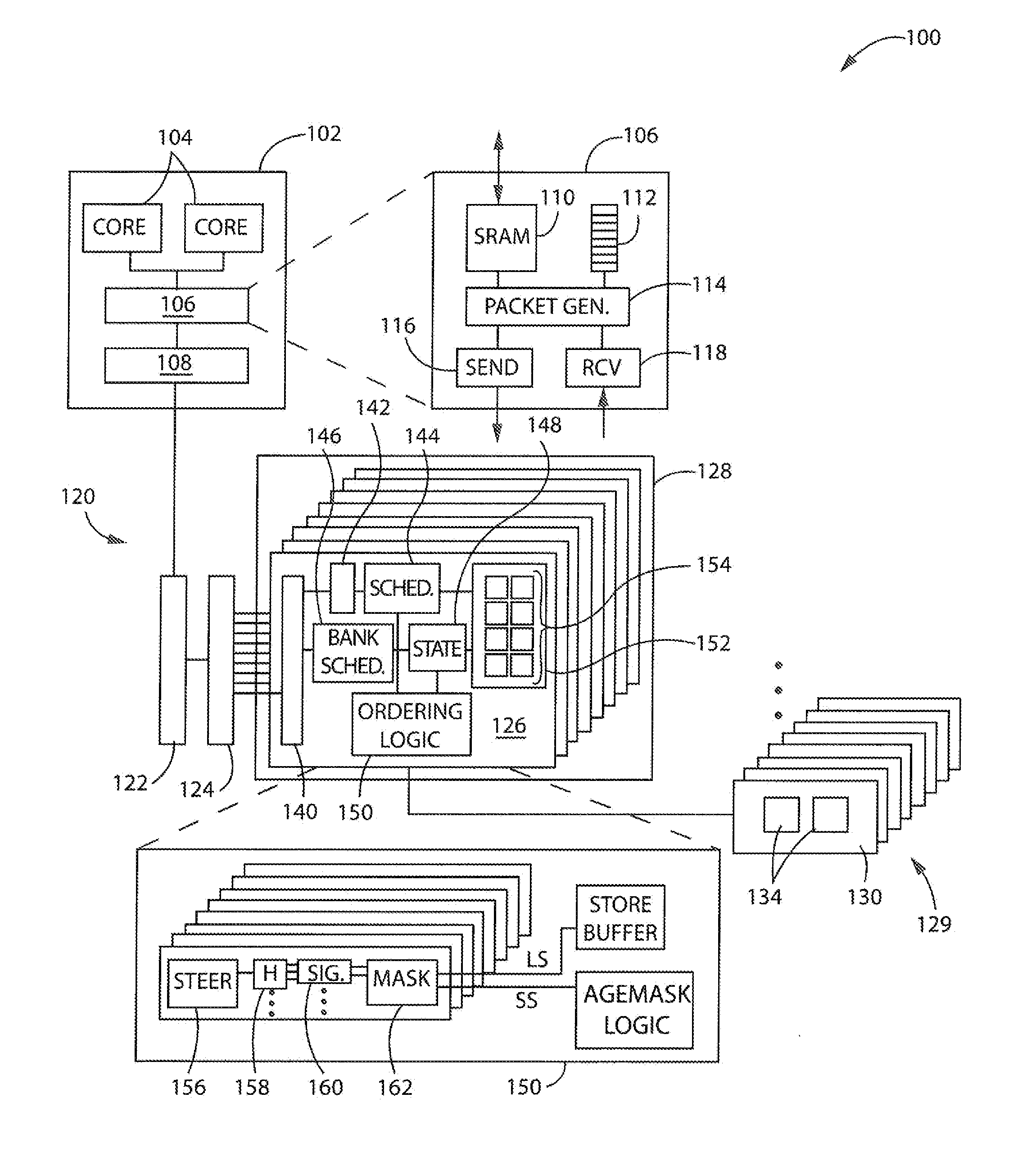

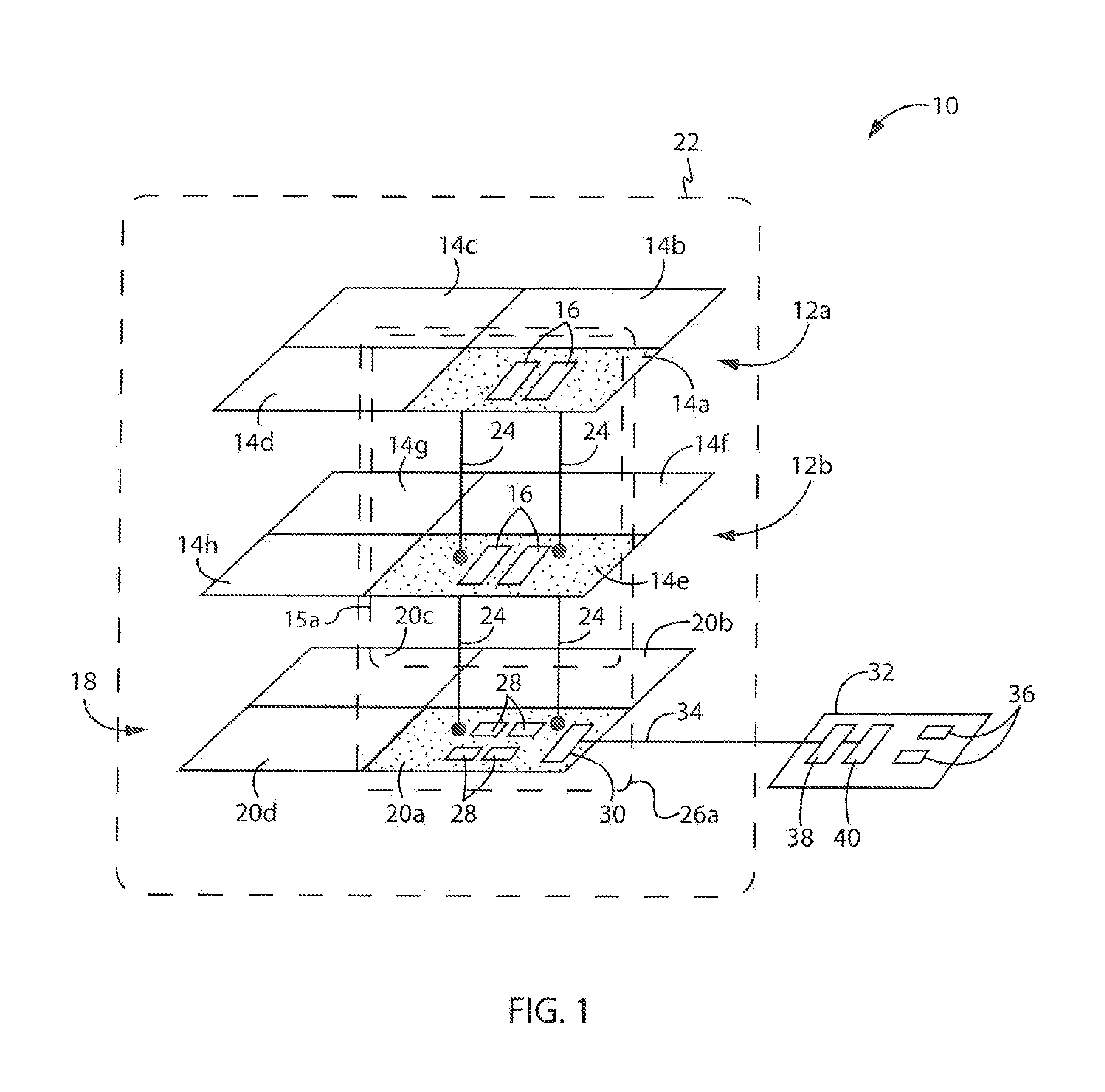

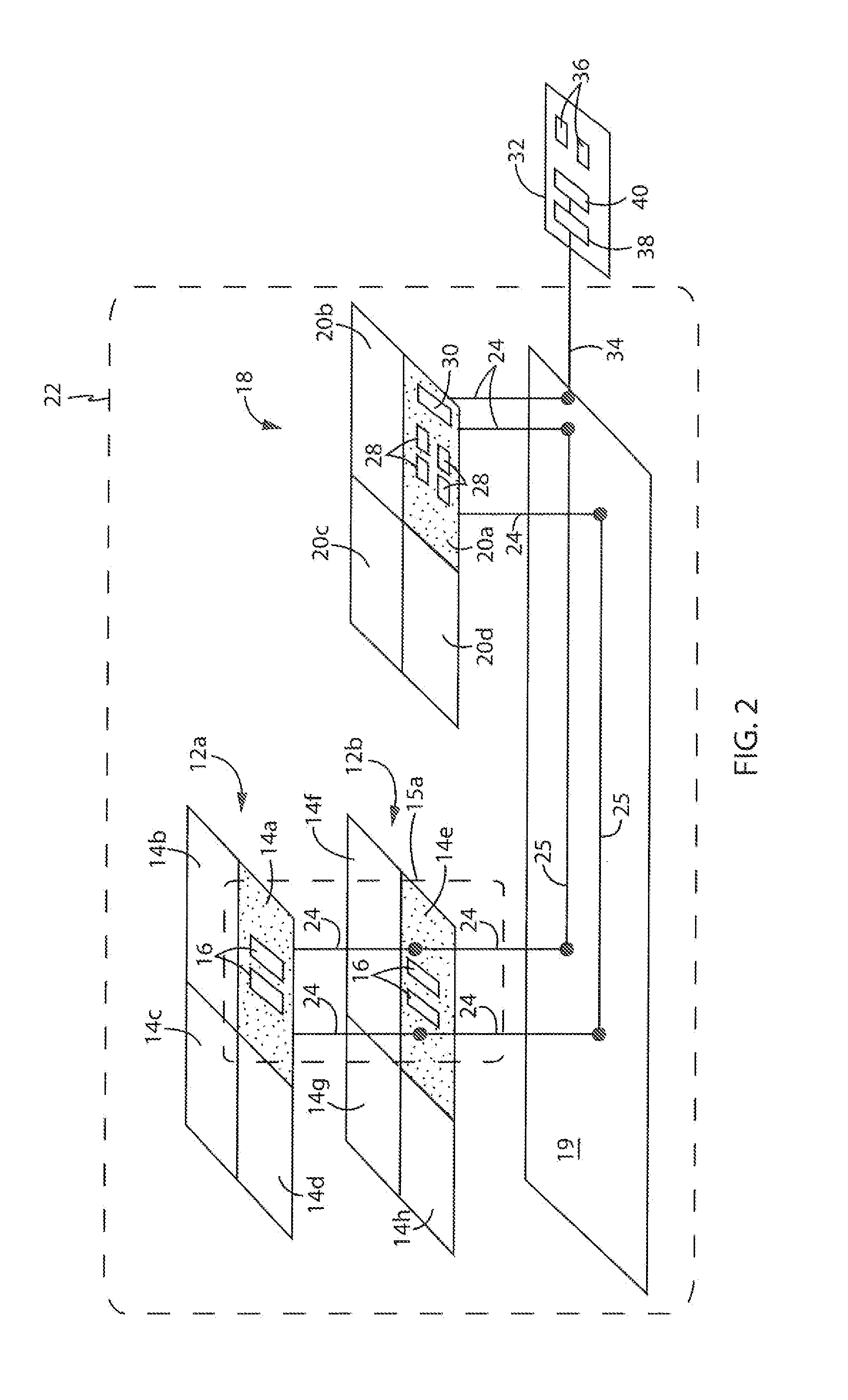

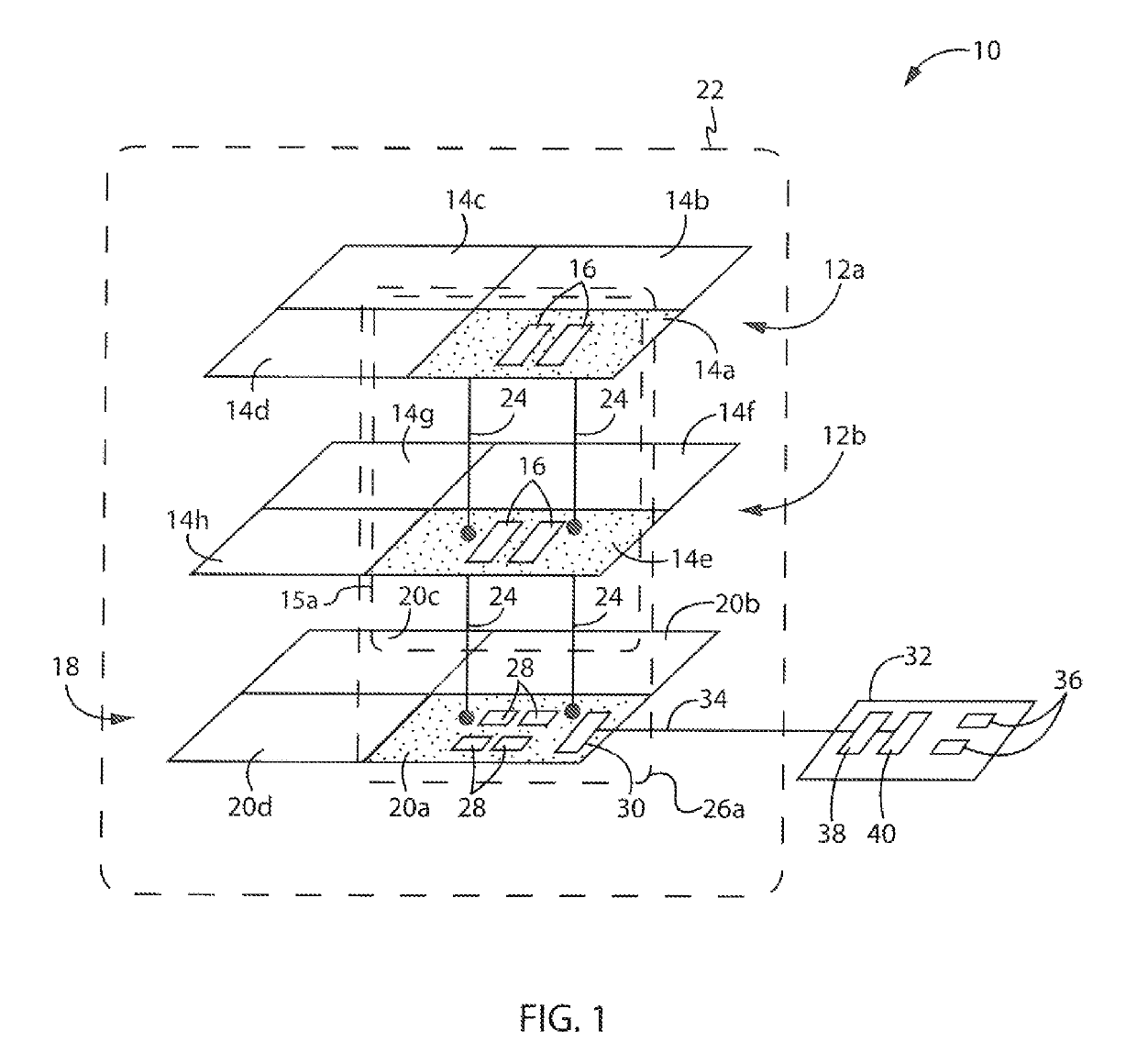

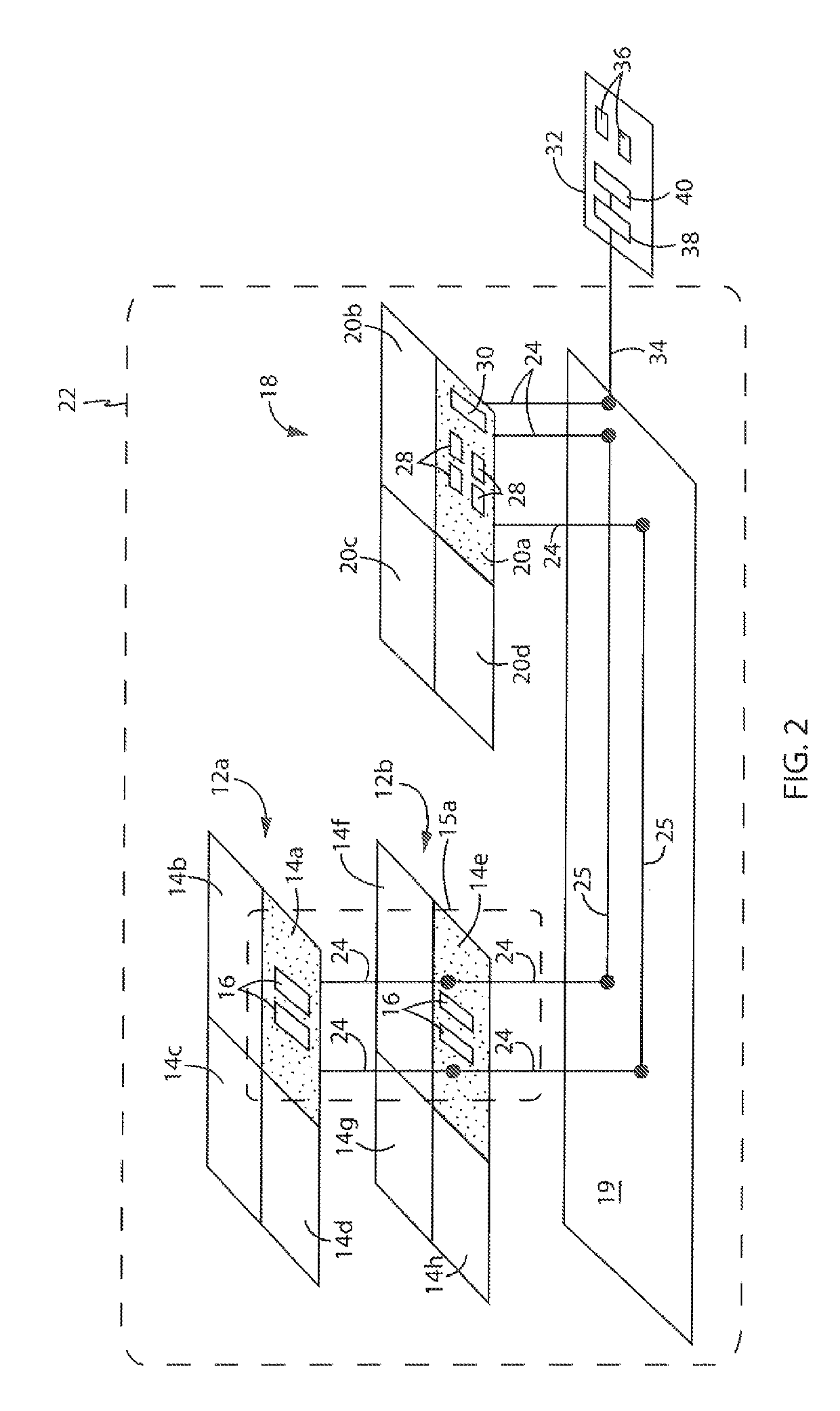

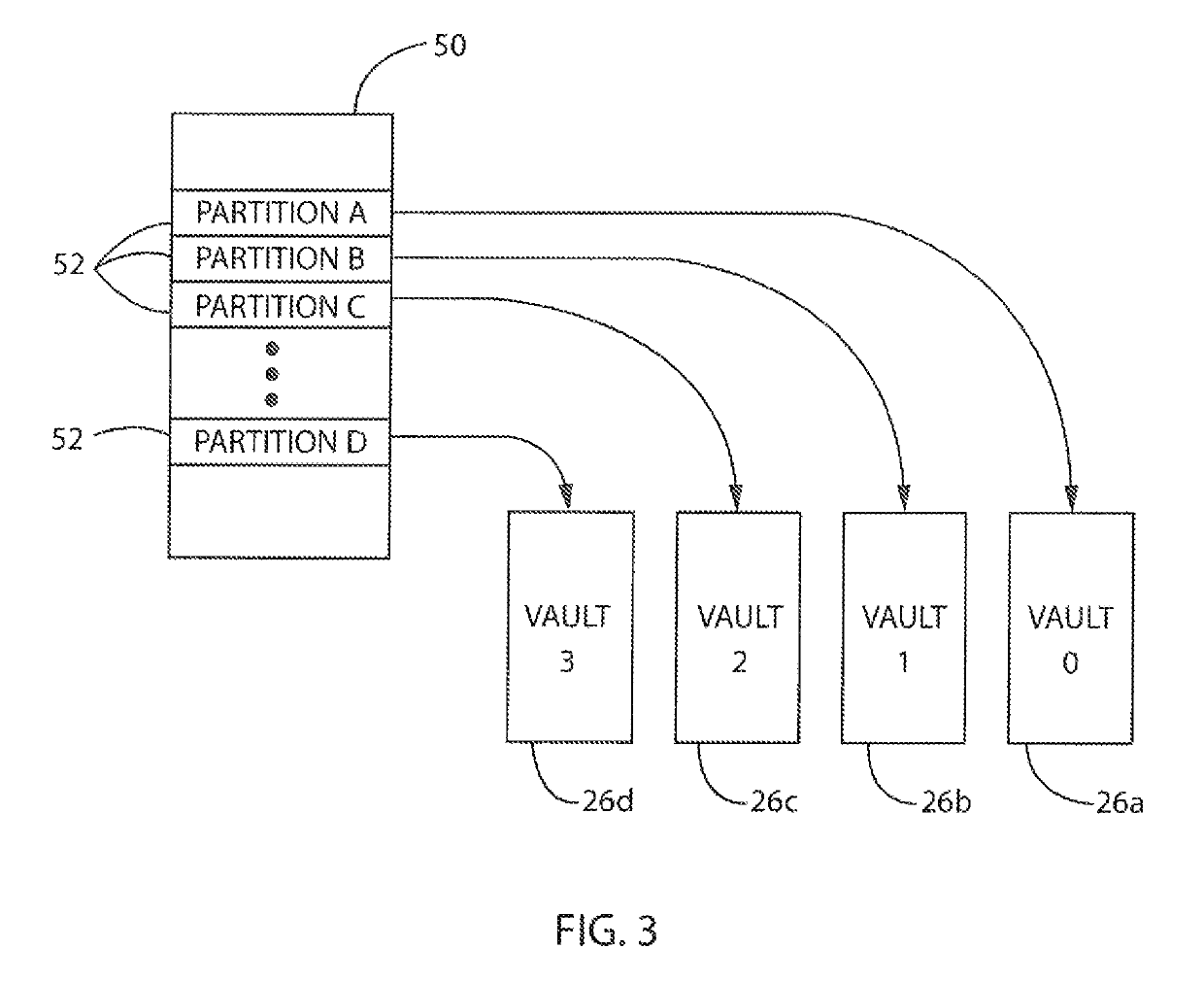

Memory Processing Core Architecture

Status: Active | Publication: US20160041856A1 | Tags: Improve efficiency; Efficiently offload entire pieces; Interprogram communication; Digital computer details; Memory processing; Processing core

Aspects of the present invention provide a memory system comprising a plurality of stacked memory layers, each memory layer divided into memory sections, wherein each memory section connects to a neighboring memory section in an adjacent memory layer, and a logic layer stacked among the plurality of memory layers, the logic layer divided into logic sections, each logic section including a memory processing core, wherein each logic section connects to a neighboring memory section in an adjacent memory layer to form a memory vault of connected logic and memory sections, and wherein each logic section is configured to communicate directly or indirectly with a host processor. Accordingly, each memory processing core may be configured to respond to a procedure call from the host processor by processing data stored in its respective memory vault and providing a result to the host processor. As a result, increased performance may be provided.

Owner:WISCONSIN ALUMNI RES FOUND

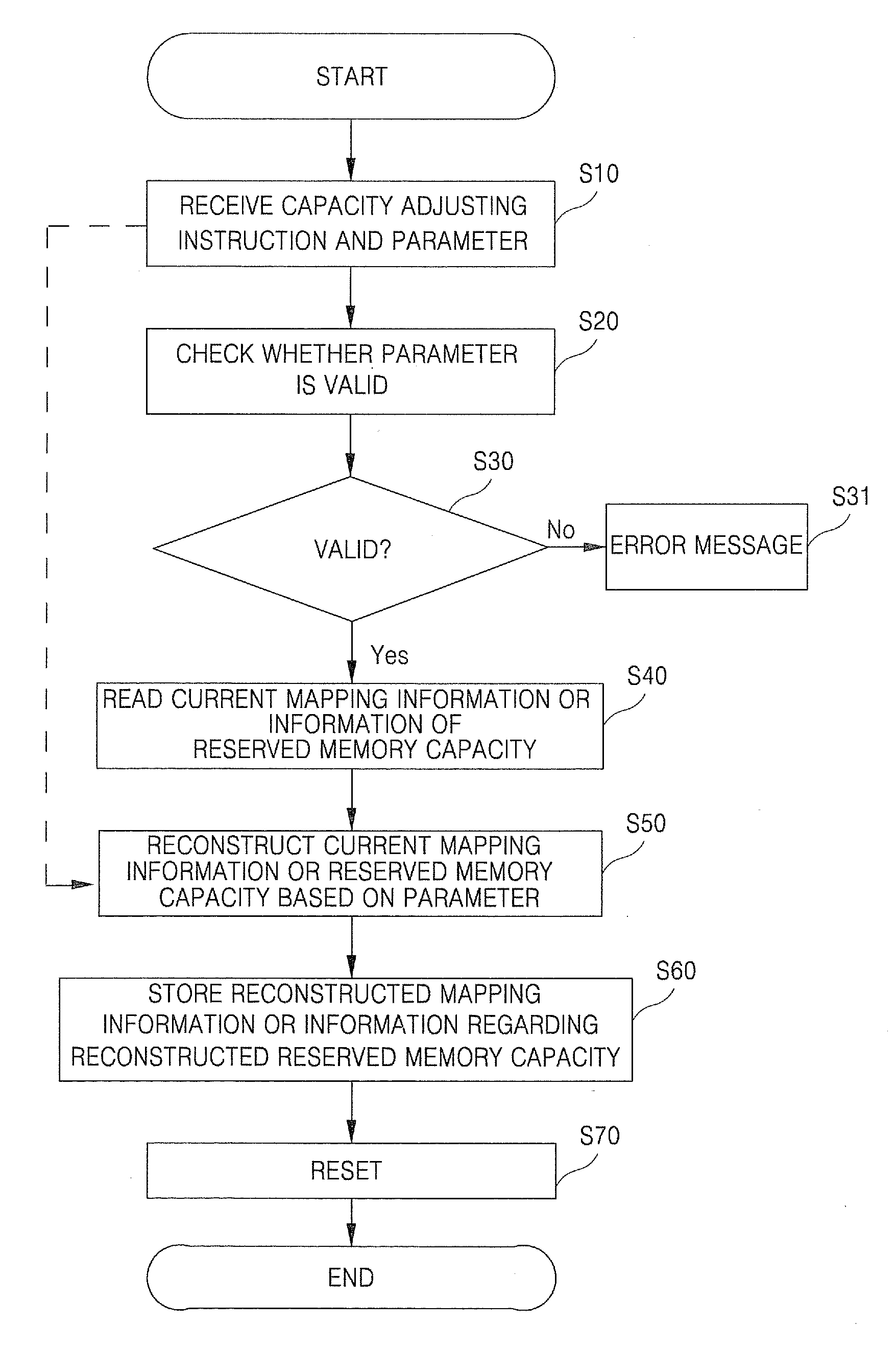

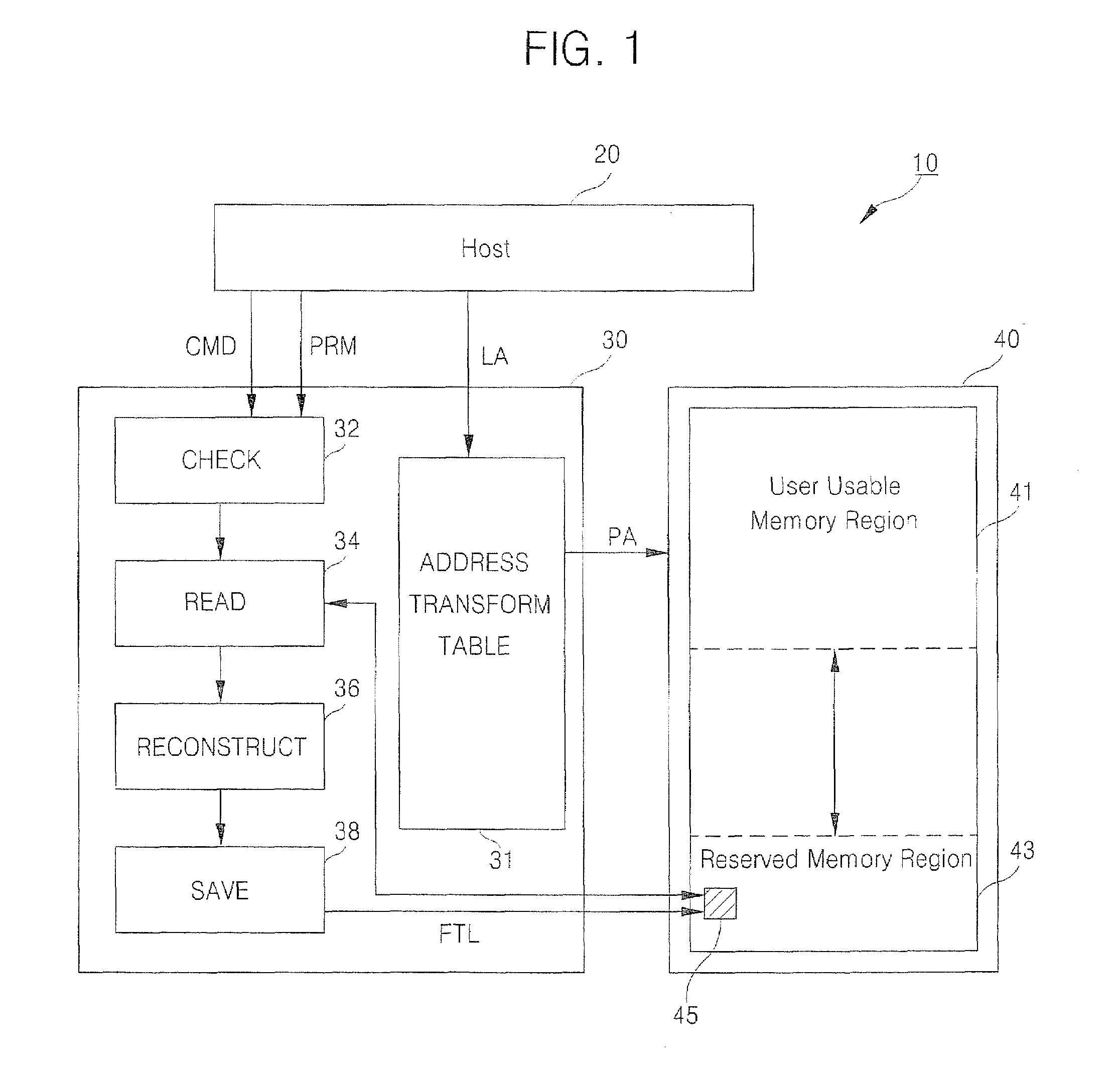

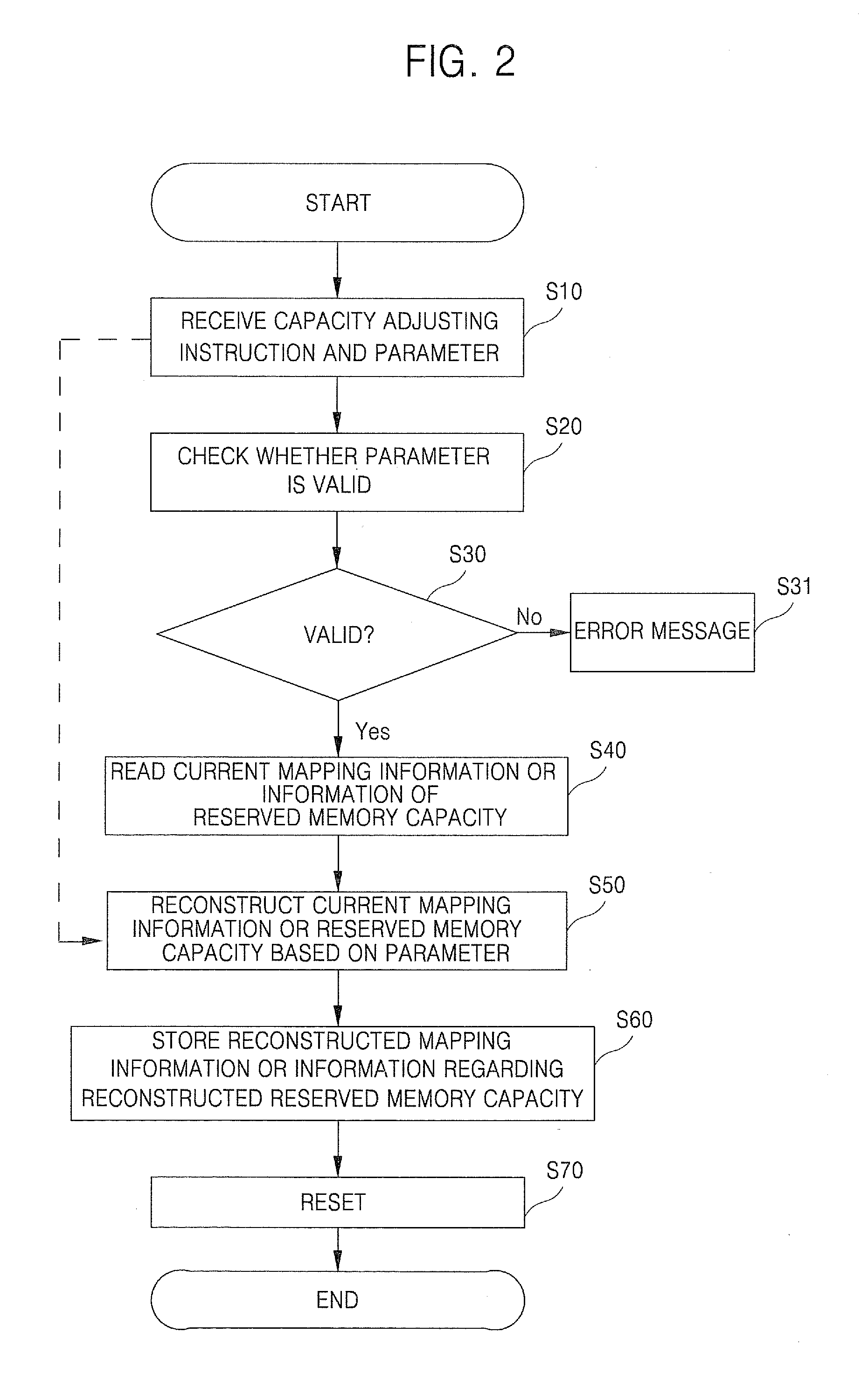

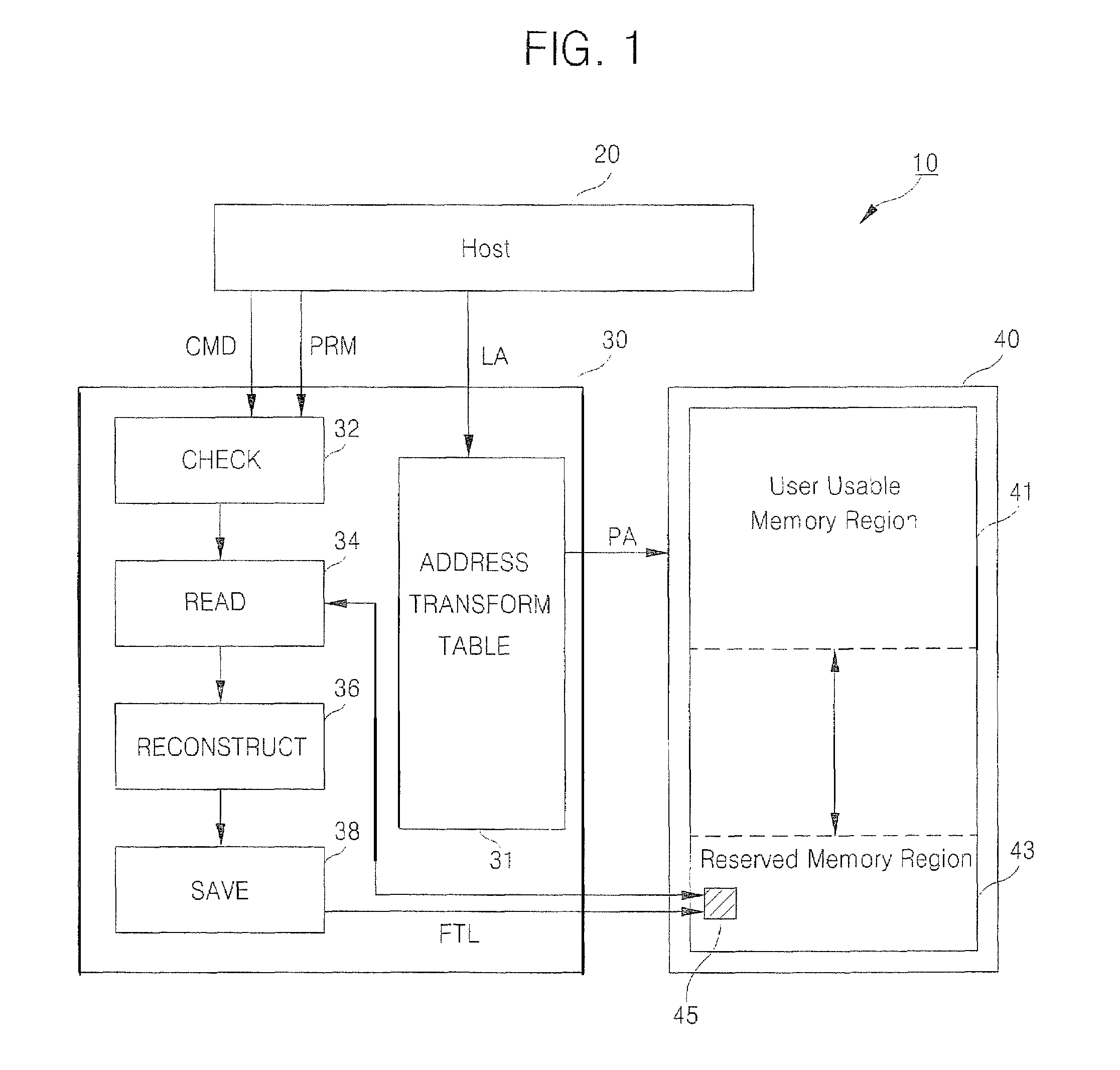

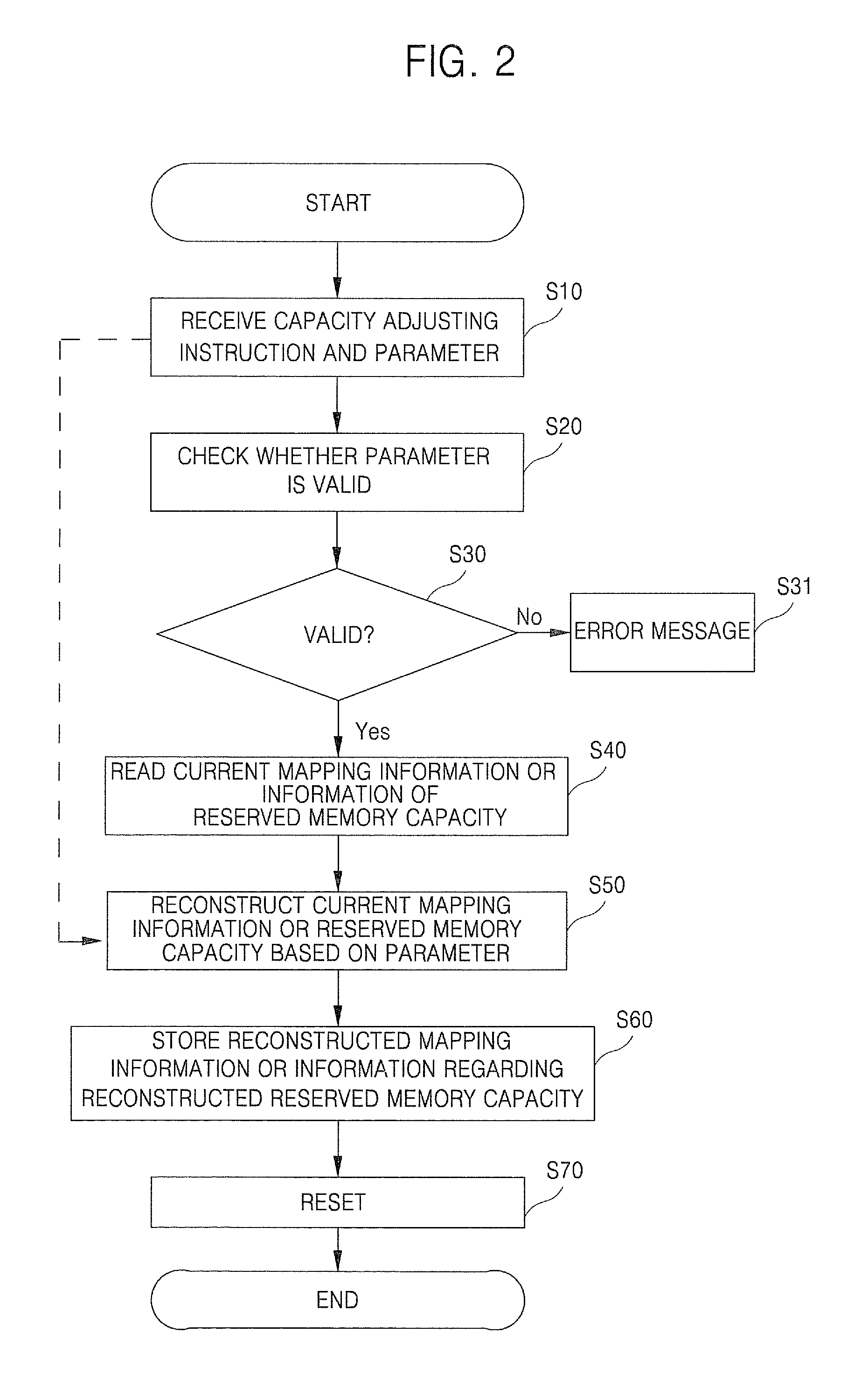

Methods and Apparatus for Reallocating Addressable Spaces Within Memory Devices

Status: Active | Publication: US20080098193A1 | Tags: Memory architecture accessing/allocation; Program control using stored programs; Memory address; Memory processing

Integrated circuit systems include a non-volatile memory device (e.g., a flash EEPROM device) and a memory processing circuit. The memory processing circuit is electrically coupled to the non-volatile memory device and is configured to reallocate addressable space within it. This reallocation is performed by increasing the number of physical addresses within the non-volatile memory device that are reserved as redundant memory addresses, in response to a capacity adjust command received by the memory processing circuit.

Owner:SAMSUNG ELECTRONICS CO LTD

Shape-memory biodegradable external fixation material for medical use and its preparation

Status: Inactive | Publication: CN1544096A | Tags: Improve breathability; Low density; Surgery; Absorbent pads; Caprolactone; Materials science

The invention relates to a novel biodegradable polymeric external fixation material for medical use. Its essential component is polylactic acid, to which poly gamma-caprolactone, inorganic minerals (such as calcium carbonate, titanium white, talc, barium sulfate, or montmorillonite), a silane coupling agent, or a colorant may be added. The invention also discloses its preparation process.

Owner:CHANGCHUN INST OF APPLIED CHEMISTRY - CHINESE ACAD OF SCI

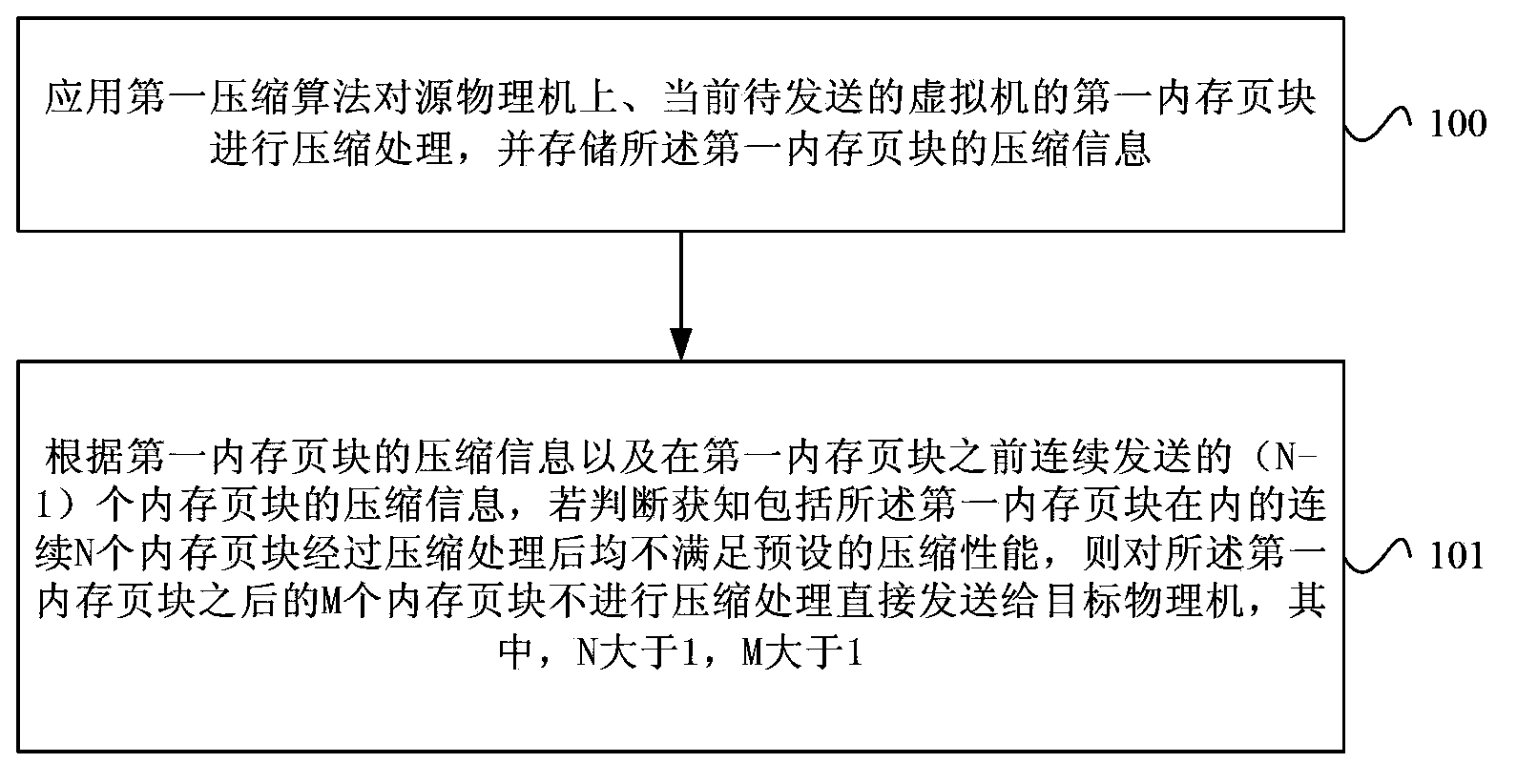

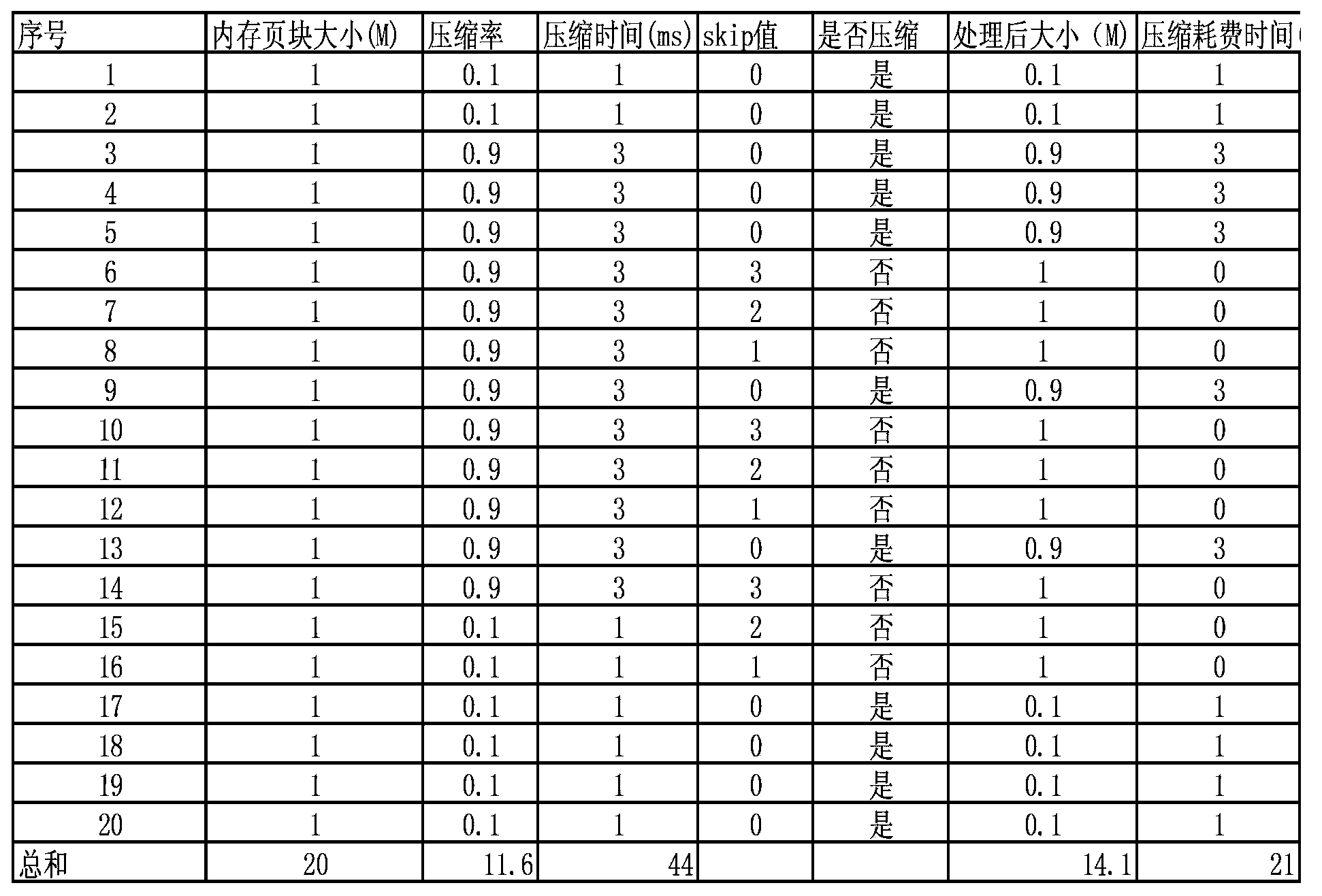

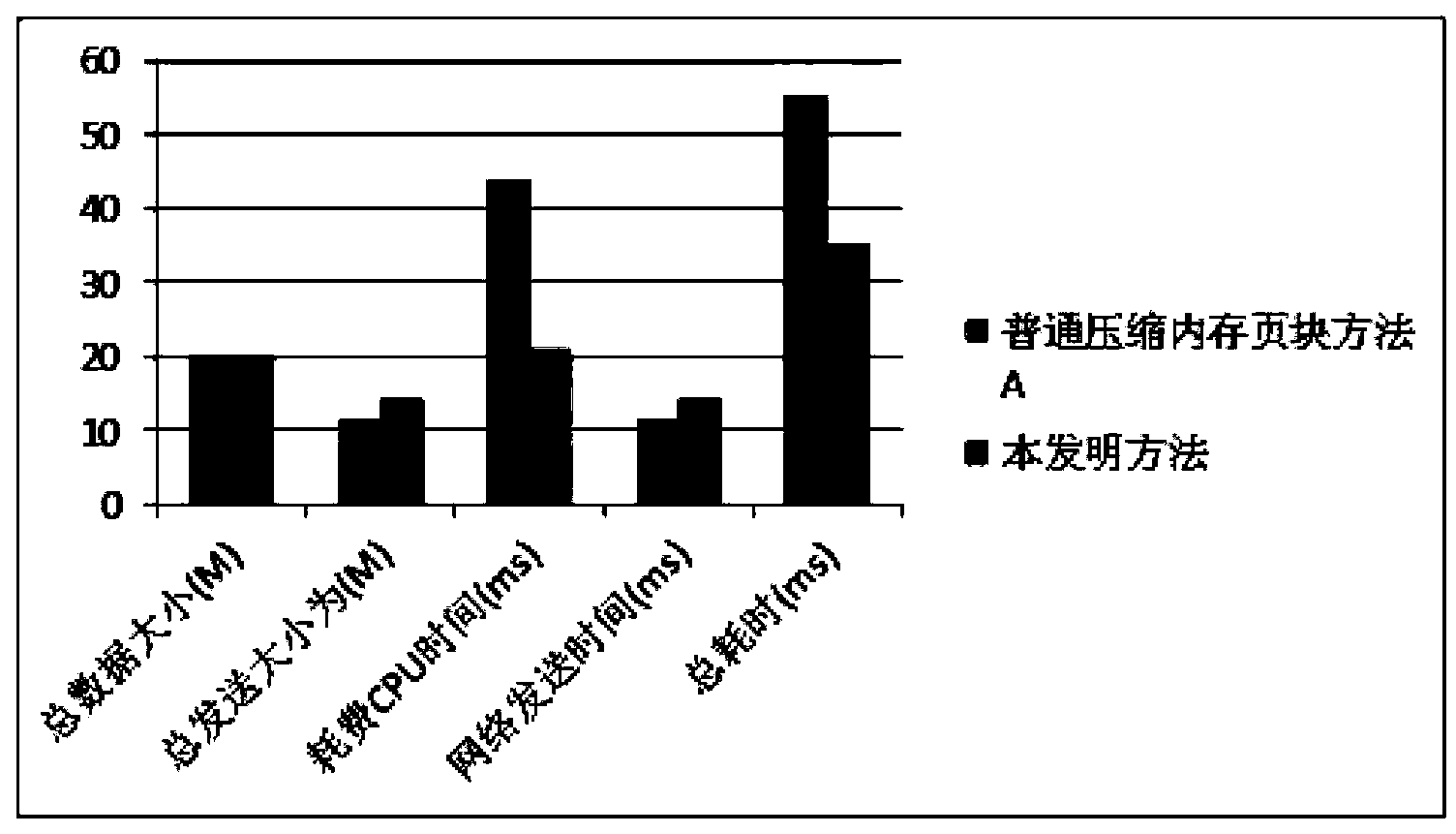

Virtual machine live migration memory processing method, device and system

Status: Inactive | Publication: CN103353850A | Tags: Improve performance; Reduce CPU overhead; Program initiation/switching; Software simulation/interpretation/emulation; Memory processing; In-Memory Processing

The invention provides a memory processing method, device, and system for virtual machine live migration. The method comprises: compressing, with a first compression algorithm, the first memory page block of a virtual machine on the source physical machine that is currently to be transmitted, and storing the compression information of that block; and if, judged from the compression information of the first memory page block and of the (N-1) memory page blocks transmitted before it, these N memory page blocks do not meet a preset compression performance, transmitting the M memory page blocks following the first memory page block directly to the target physical machine without compression, where N is greater than 1 and M is greater than 1. The method, device, and system improve the live migration performance of the virtual machine, reduce the CPU (central processing unit) overhead of the source physical machine, and save processing resources.

Owner:HUAWEI TECH CO LTD
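
The abstract leaves the compression algorithm, the performance criterion, and the values of N and M open. The Python sketch below fills them with assumptions: zlib stands in for the "first compression algorithm", the criterion is a minimum space saving over the last N compressed blocks, and `migrate_blocks`, `window_n`, `skip_m`, and `min_saving` are hypothetical names.

```python
import zlib
from collections import deque
from typing import Iterable, Iterator, Tuple


def migrate_blocks(
    blocks: Iterable[bytes],
    window_n: int = 4,        # N: compressed blocks judged together
    skip_m: int = 8,          # M: blocks then sent without compression
    min_saving: float = 0.2,  # assumed "preset compression performance"
) -> Iterator[Tuple[str, bytes]]:
    """Yield ("zlib", payload) or ("raw", payload) for each memory page block."""
    if window_n < 2 or skip_m < 2:
        raise ValueError("the method requires N > 1 and M > 1")
    history = deque(maxlen=window_n)  # (raw size, compressed size) of recent blocks
    skip_remaining = 0
    for block in blocks:
        if skip_remaining > 0:
            skip_remaining -= 1
            yield "raw", block
            continue
        compressed = zlib.compress(block)
        history.append((len(block), len(compressed)))
        yield "zlib", compressed
        if len(history) == window_n:
            raw_total = sum(raw for raw, _ in history)
            comp_total = sum(comp for _, comp in history)
            saving = 1 - comp_total / raw_total if raw_total else 0.0
            if saving < min_saving:
                # Compression is not paying off: send the next M blocks as-is.
                skip_remaining = skip_m
                history.clear()
```

With incompressible pages the generator falls into a pattern of N compressed blocks followed by M raw ones, so the source CPU stops spending cycles on compression that saves no bandwidth; highly compressible pages (for example, zero-filled ones) stay on the compressed path.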

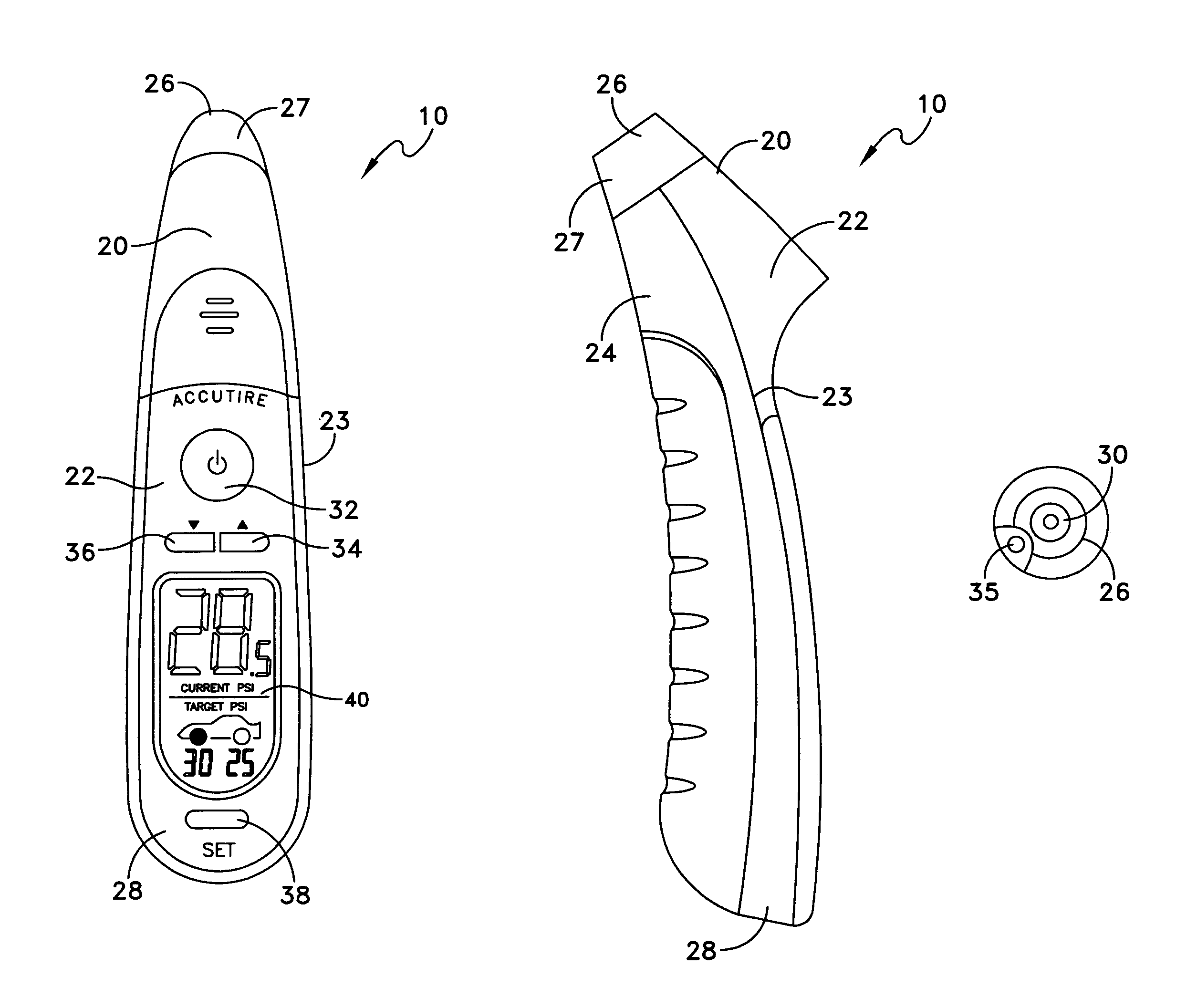

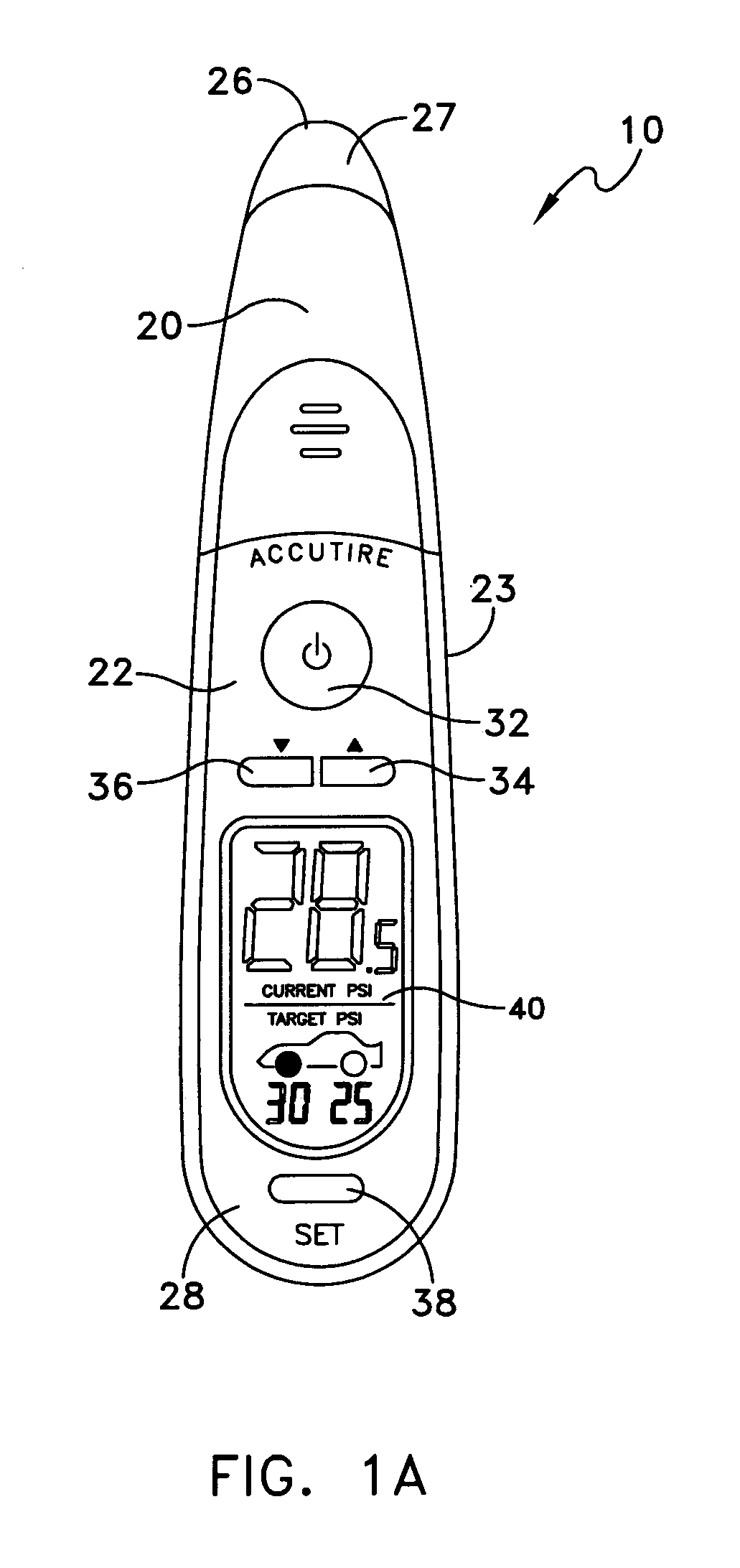

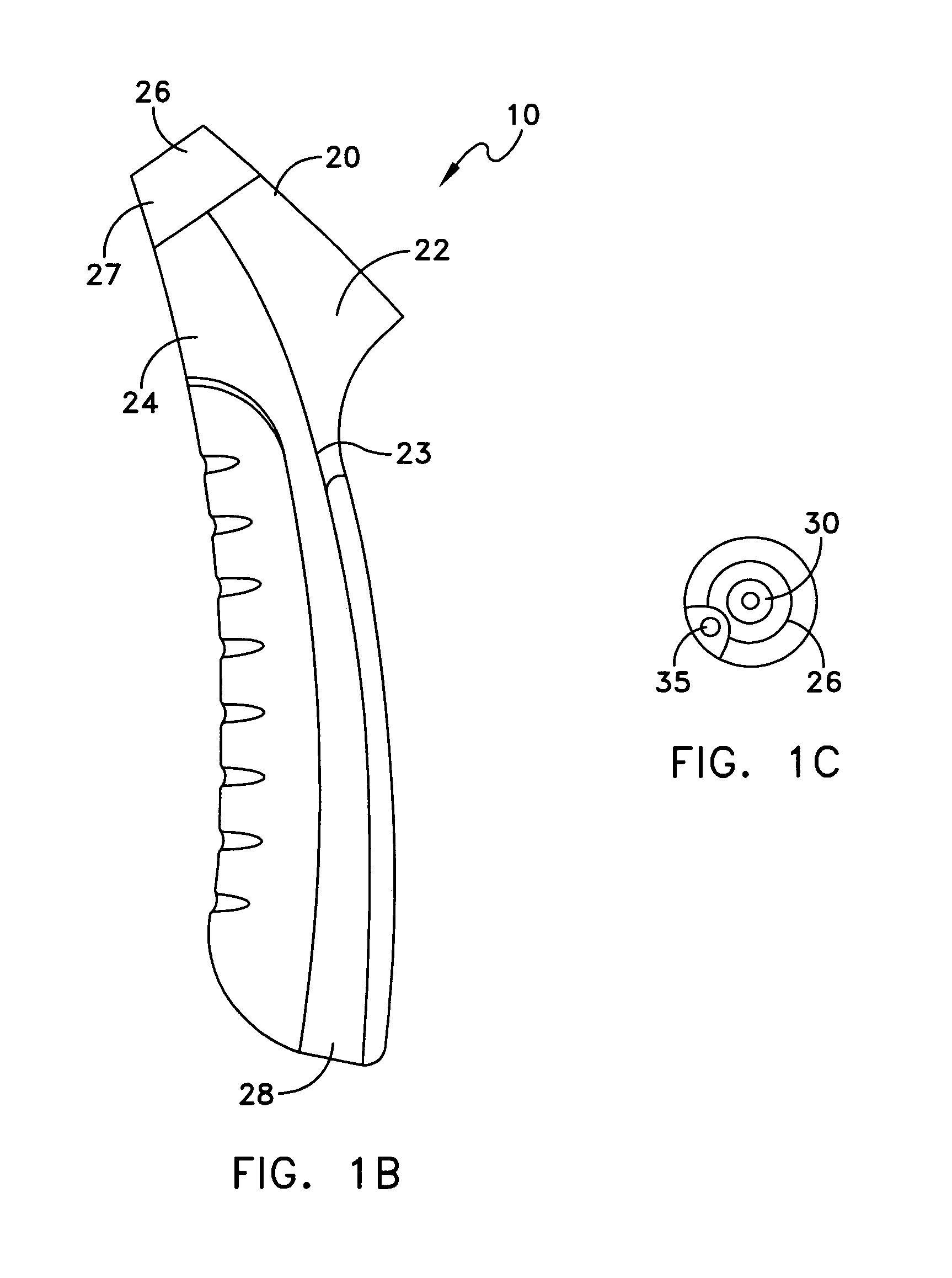

Hand-held tire pressure gauge and method for assisting a user to determine whether a tire pressure is within a target range using a hand-held tire pressure gauge

Status: Inactive | Publication: US7251992B2 | Tags: Inflated body pressure measurement; Tyre measurements; Tire-pressure gauge; Pressure sense

A handheld tire pressure gauge including: a display; a pressure sensor; a memory; a processor being operatively coupled to the display, pressure sensor and memory; and, code being stored in the memory and operable by the processor to: retrieve at least one value indicative of a target tire pressure from the memory, determine a pressure sensed by the pressure sensor, and, cause the display to present information indicative of the target tire pressure and sensed pressure.

Owner:MEASUREMENT
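
The title describes helping the user decide whether the pressure lies within a target range, while the abstract only says the stored target and the sensed pressure are both displayed. A minimal Python sketch of that comparison, assuming the stored target is a low/high pair in psi; `TargetRange` and `gauge_message` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TargetRange:
    """Target tire pressure range retrieved from the gauge's memory (psi assumed)."""
    low_psi: float
    high_psi: float


def gauge_message(sensed_psi: float, target: TargetRange) -> str:
    """Build the text the display shows for one reading."""
    span = f"target {target.low_psi:.1f}-{target.high_psi:.1f} psi"
    if sensed_psi < target.low_psi:
        status = "LOW"
    elif sensed_psi > target.high_psi:
        status = "HIGH"
    else:
        status = "OK"
    return f"{status}: {sensed_psi:.1f} psi ({span})"


print(gauge_message(30.5, TargetRange(32.0, 35.0)))  # LOW: 30.5 psi (target 32.0-35.0 psi)
```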

Memory processing core architecture

Status: Active | Publication: US10289604B2 | Tags: Improve efficiency; Efficiently offload entire pieces; Architecture with single central processing unit; Single machine energy consumption reduction; Memory processing; Computer architecture

Aspects of the present invention provide a memory system comprising a plurality of stacked memory layers, each memory layer divided into memory sections, wherein each memory section connects to a neighboring memory section in an adjacent memory layer, and a logic layer stacked among the plurality of memory layers, the logic layer divided into logic sections, each logic section including a memory processing core, wherein each logic section connects to a neighboring memory section in an adjacent memory layer to form a memory vault of connected logic and memory sections, and wherein each logic section is configured to communicate directly or indirectly with a host processor. Accordingly, each memory processing core may be configured to respond to a procedure call from the host processor by processing data stored in its respective memory vault and providing a result to the host processor. As a result, increased performance may be provided.

Owner:WISCONSIN ALUMNI RES FOUND

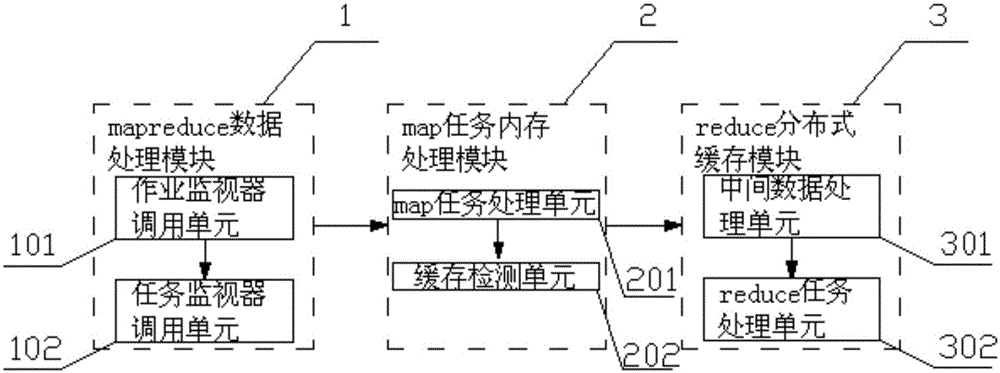

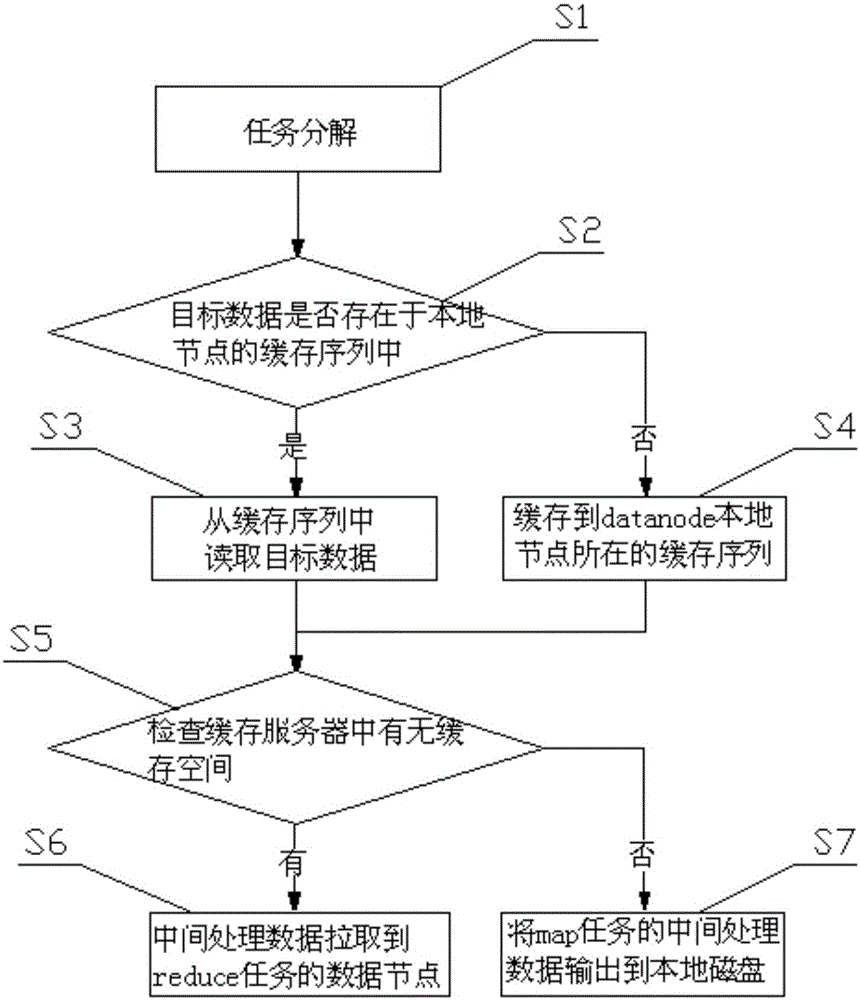

Data processing system and method based on distributed caching

Status: Active | Publication: CN105138679A | Tags: Implement localization; Improve hit rate; Special data processing applications; Memory processing; Data processing system

The invention relates to a data processing system based on distributed caching, comprising a MapReduce data processing module, a map task memory processing module, and a reduce distributed caching module. The MapReduce data processing module decomposes submitted user jobs into multiple map tasks and multiple reduce tasks; the map task memory processing module processes the map tasks; and the reduce distributed caching module processes the map tasks through the reduce tasks. The invention further relates to a data processing method based on distributed caching. The system and method mainly serve the map tasks: they optimize the data processed by map tasks, ensure that a map task can find its target data in the shortest time, and transmit intermediate results at the highest speed. This reduces the volume of data transmitted, enables localized data processing, raises the data hit rate, and thereby improves the execution efficiency of data processing.

Owner:GUILIN UNIV OF ELECTRONIC TECH

Fixed length memory to memory arithmetic and architecture for direct memory access using fixed length instructions

Status: Inactive | Publication: US7546442B1 | Tags: Fast processing; Improve performance; Instruction analysis; Digital computer details; Memory processing; Direct memory access

A method and system for fixed-length memory-to-memory processing using fixed-length instructions. The present invention further provides a method and system in which the memory operand width is independent of the ALU width: arithmetic and register data are 32 bits, but memory operands vary in size, with the size specified by the instruction. A single fixed-length instruction may specify multiple memory operands. Because the instruction set is small and simple, the implementation costs less than traditional processors. Additional addressing modes allow more efficient code. Semaphores are implemented using a single bit, and shift-and-merge instructions are used to access data across word boundaries.

Owner:THE UBICOM TRUST

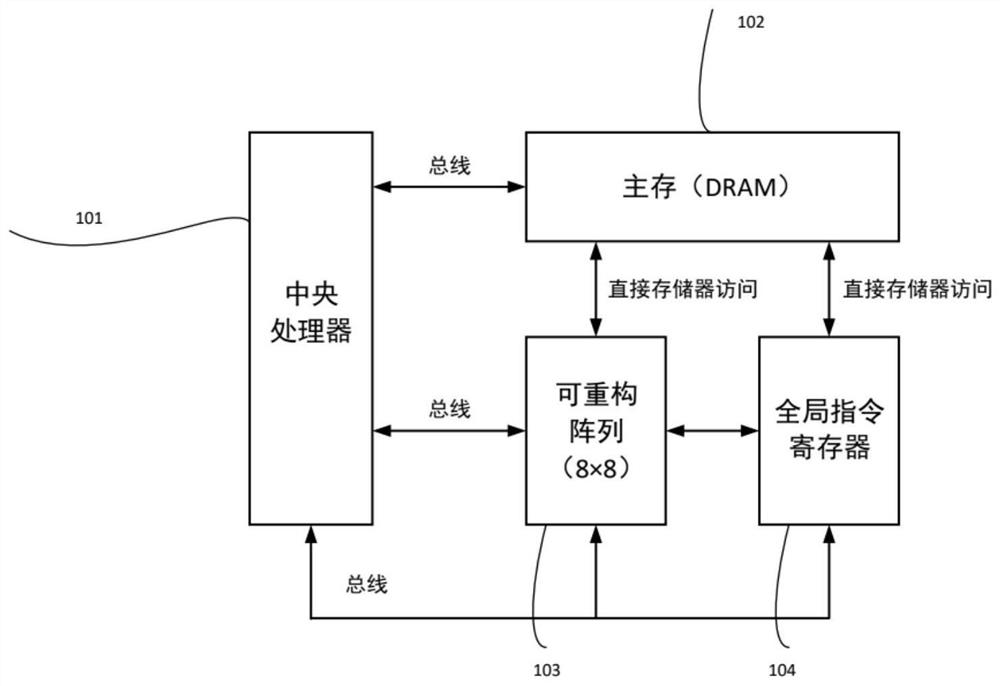

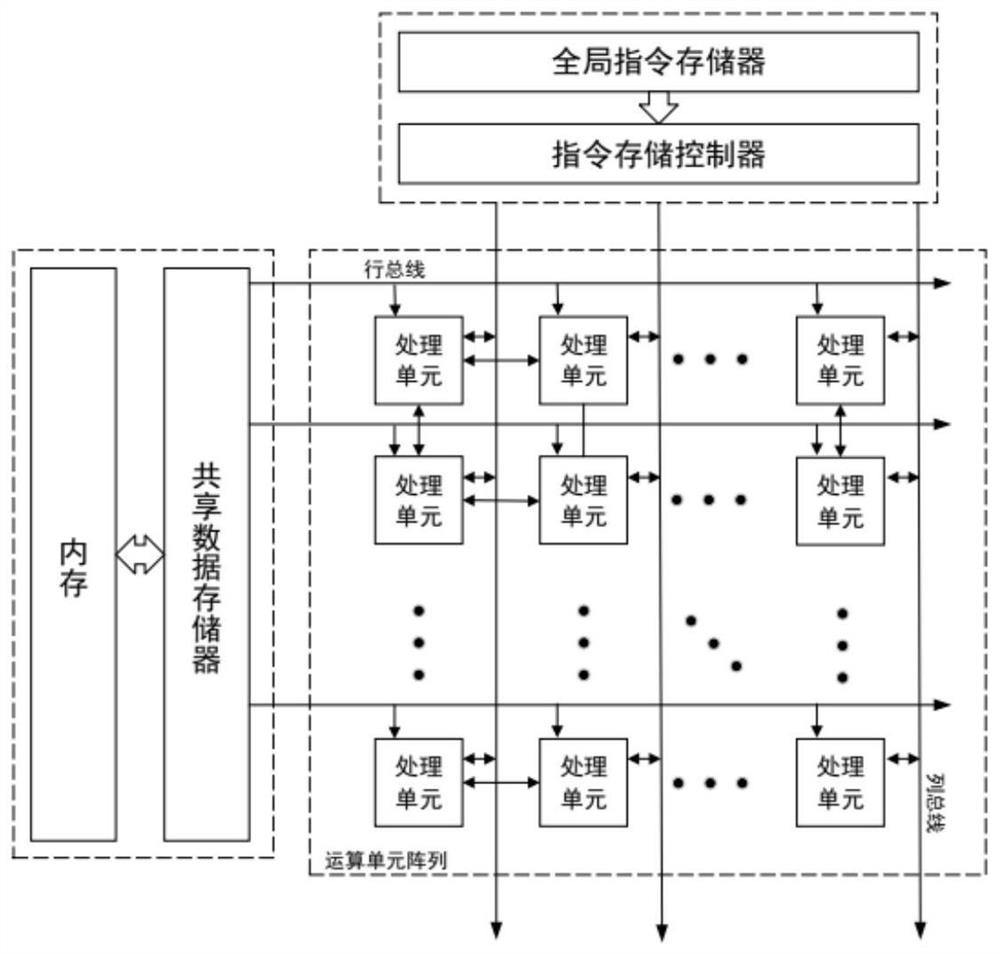

In-memory calculation method based on coarse-grained reconfigurable array

Status: Inactive | Publication: CN112463719A | Tags: Reduce physical distance; Shorten the time; Architecture with single central processing unit; Electric digital data processing; Instruction memory; Computer architecture

The invention relates to an in-memory processing system based on a coarse-grained reconfigurable array (CGRA). The system comprises a central processing unit, a main memory, a reconfigurable array, and a global instruction register. It adopts 3D stacking: each main memory block corresponds to a logic layer, and the logic layers are connected directly to the memory chip through through-silicon vias (TSVs). Each processing unit of the reconfigurable array can be configured as either a storage unit or an arithmetic logic unit; a storage unit exchanges data with the memory, and an arithmetic logic unit performs calculations based on register data, data from nearby storage units, and configuration information. The system offers clear performance advantages and broad applicability: its architecture can be functionally simulated on a simulation platform, it can be applied to specific data-intensive algorithms while adapting to other algorithms, and it is highly flexible. The global instruction memories of the reconfigurable array are all designed asymmetrically, which greatly improves the transmission efficiency of the array's internal configuration data.

Owner:SHANGHAI JIAO TONG UNIV

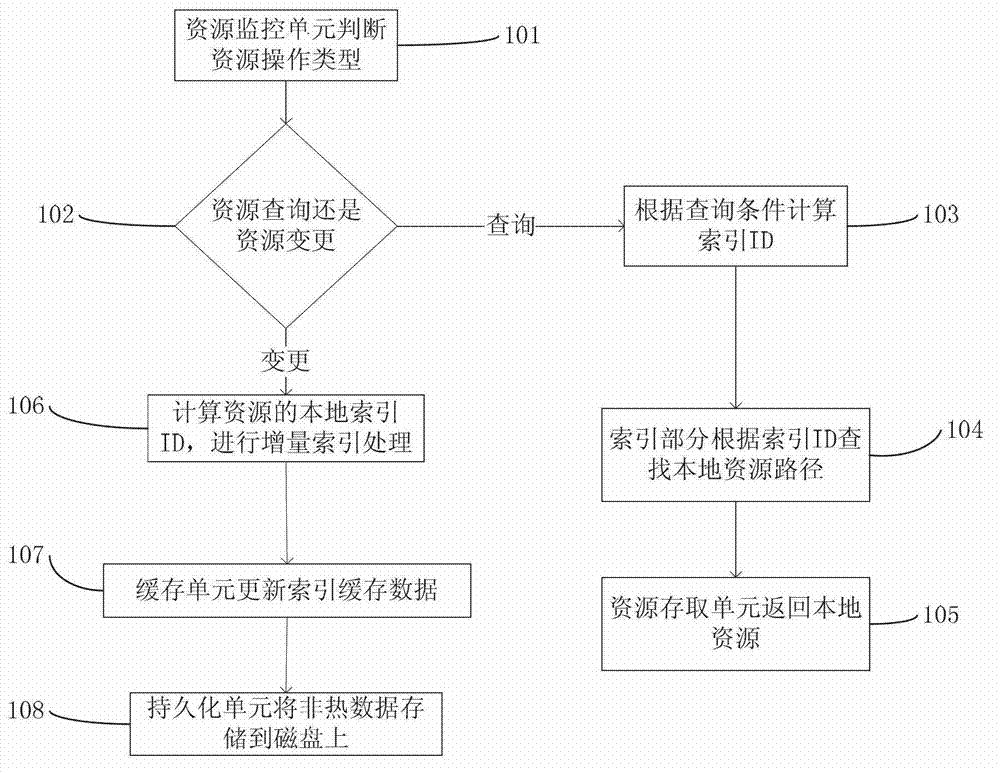

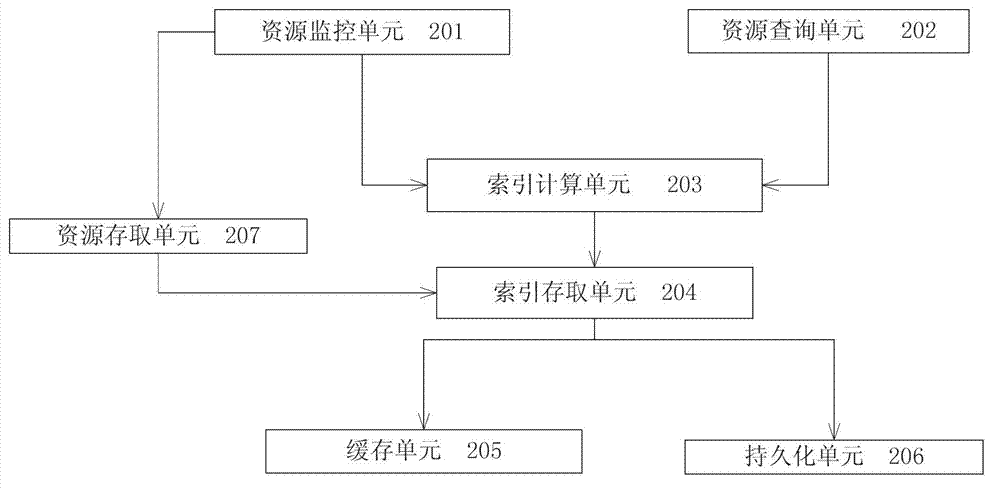

Index-based local resource quick retrieval system and retrieval method thereof

Status: Active | Publication: CN102968464A | Tags: Improve query efficiency; Improve user experience; Special data processing applications; Memory processing; Low speed

The invention relates to the technical field of data retrieval and data caching, in particular to an index-based local resource quick retrieval system and its retrieval method. The retrieval method comprises: a resource monitoring unit monitors specified resource data to determine the type of operation performed on a resource; for a resource change, an indexing part performs incremental indexing on the resource and updates the local index data hierarchically, while a resource access interface processes the changed resource in memory; for a query, the indexing part calculates the resource's local index ID according to the query conditions, and if a matching resource exists, a resource access unit returns it directly according to the index of the operation result. The system and method effectively solve the slow searching of large numbers of small files under conventional conditions, shorten response time, improve the user experience, and can be used for retrieving local resources.

Owner:GUANGDONG ELECTRONICS IND INST

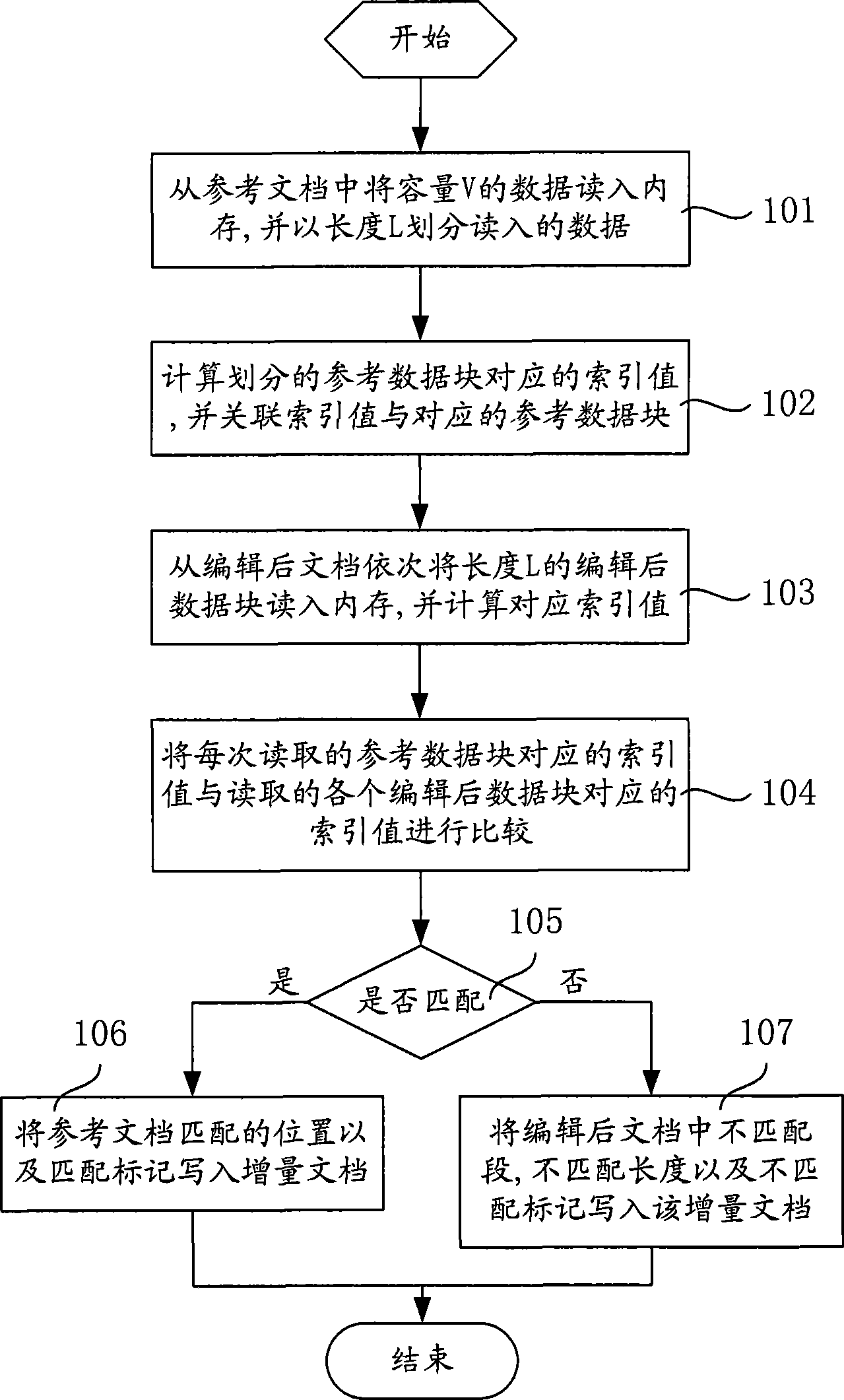

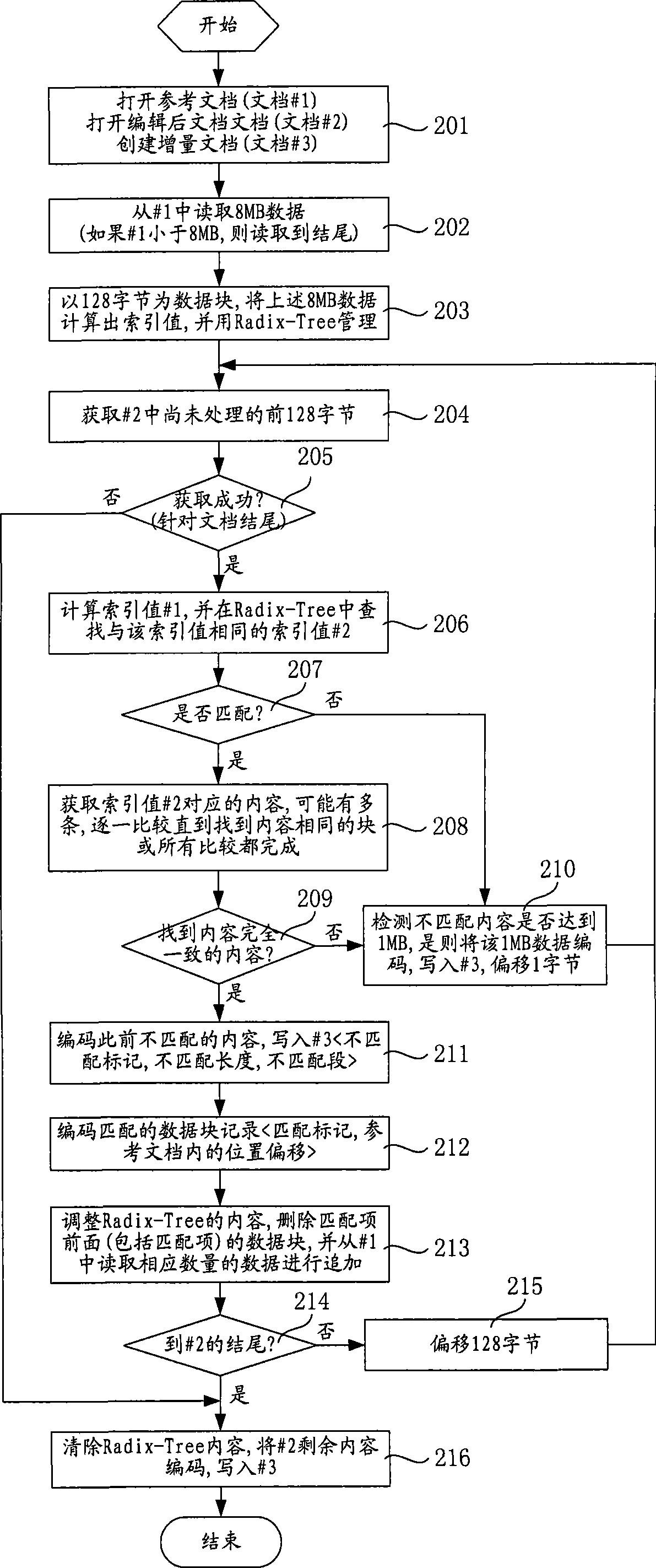

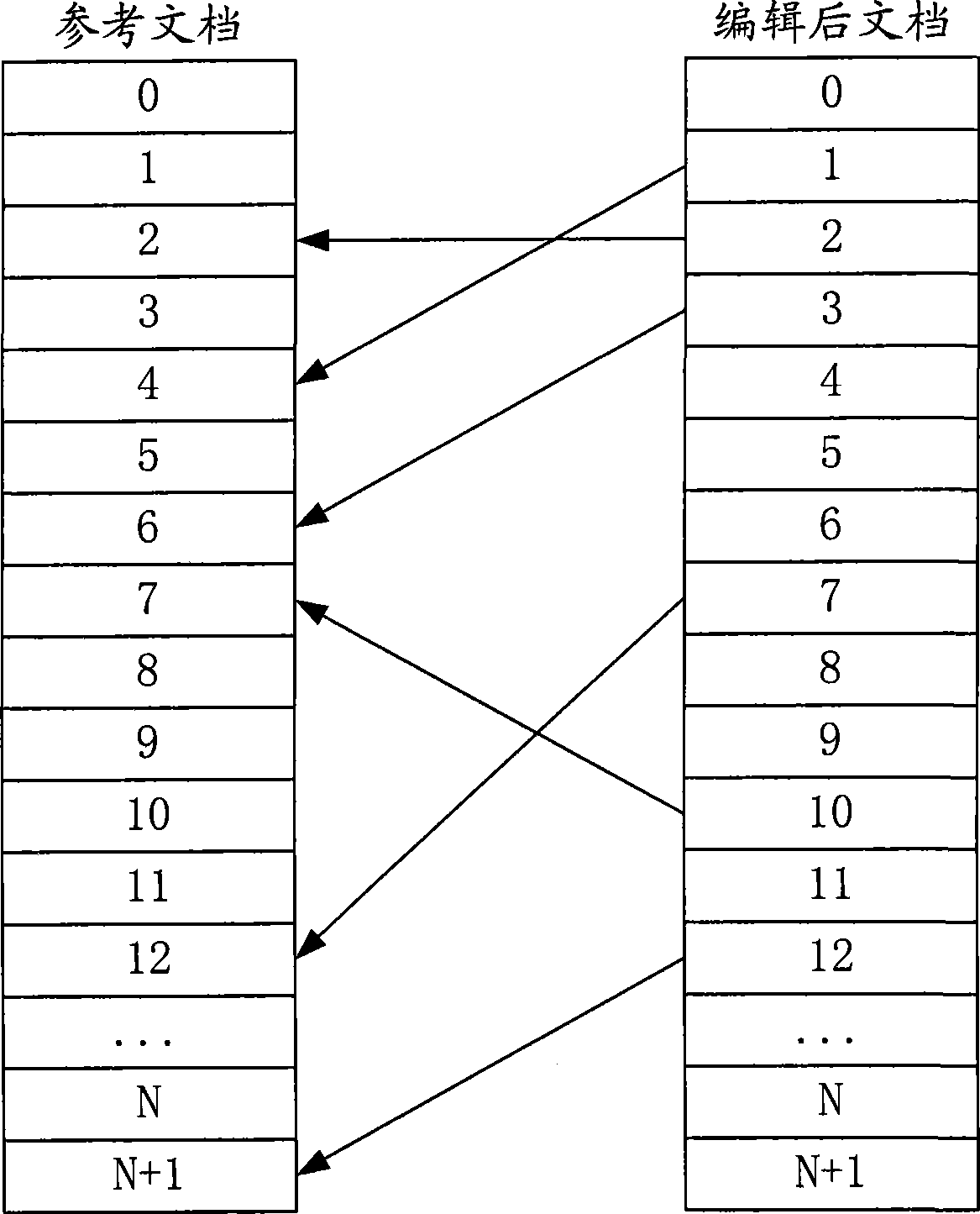

Electronic document incremental memory processing method

Status: Active | Publication: CN101482839A | Tags: Reduce the burden on; Redundant operation error correction; Special data processing applications; Internal memory; Memory processing

The present invention relates to a method for generating an incremental document after an electronic document has been edited. The method comprises: reading the data of a reference document into internal memory and dividing it into blocks; calculating an index value for each reference data block and associating the two; sequentially reading the edited data blocks into internal memory and calculating their index values; and comparing the index values of the reference data blocks with those of the edited data blocks. If two index values match, the matching position in the reference document and a match mark are written into the incremental document; otherwise, the unmatched segment of the edited document, its length, and a mismatch mark are written into the incremental document. The invention also relates to a method for recovering the edited document from the incremental document. By establishing and recording a mapping between the documents before and after editing, the invention generates an incremental document that can replace the edited document for data storage, archiving, backup, and similar purposes, further reducing storage or network transmission load.

Owner:BEIJING 21VIANET DATA CENT
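
The abstract fixes the overall flow (index the reference blocks, compare index values, emit matches or unmatched segments) but not the block size or the index function. The Python sketch below assumes fixed-size blocks and SHA-256 digests as index values; `Match`, `Literal`, `make_incremental`, and `restore_edited` are hypothetical names, and the mismatch length is carried implicitly by the literal's bytes rather than written as a separate field.

```python
import hashlib
from dataclasses import dataclass
from typing import Dict, List, Union


@dataclass(frozen=True)
class Match:
    """Reuse `length` bytes of the reference document starting at `offset`."""
    offset: int
    length: int


@dataclass(frozen=True)
class Literal:
    """Bytes of the edited document that have no match in the reference."""
    data: bytes


Entry = Union[Match, Literal]


def block_index(data: bytes) -> bytes:
    """Index value of one block (SHA-256 is an assumption)."""
    return hashlib.sha256(data).digest()


def make_incremental(reference: bytes, edited: bytes, block_size: int = 4096) -> List[Entry]:
    # Index every fixed-size block of the reference document.
    index: Dict[bytes, int] = {}
    for off in range(0, len(reference), block_size):
        index.setdefault(block_index(reference[off:off + block_size]), off)

    delta: List[Entry] = []
    for off in range(0, len(edited), block_size):
        chunk = edited[off:off + block_size]
        ref_off = index.get(block_index(chunk))
        if ref_off is not None and reference[ref_off:ref_off + len(chunk)] == chunk:
            delta.append(Match(ref_off, len(chunk)))
        elif delta and isinstance(delta[-1], Literal):
            delta[-1] = Literal(delta[-1].data + chunk)  # extend the current unmatched run
        else:
            delta.append(Literal(chunk))
    return delta


def restore_edited(reference: bytes, delta: List[Entry]) -> bytes:
    """Recover the edited document from the reference plus the incremental document."""
    parts = []
    for entry in delta:
        if isinstance(entry, Match):
            parts.append(reference[entry.offset:entry.offset + entry.length])
        else:
            parts.append(entry.data)
    return b"".join(parts)


ref = b"The quick brown fox jumps"
new = b"The quick red fox jumps"
delta = make_incremental(ref, new, block_size=4)
print(delta[:2])  # [Match(offset=0, length=4), Match(offset=4, length=4)]
print(restore_edited(ref, delta) == new)  # True
```

The example also shows the main weakness of fixed block boundaries: after the edit shortens the text, later blocks no longer line up, so " fox jumps" is stored as a literal even though it exists in the reference. Tools such as rsync avoid this with a rolling checksum computed at every byte offset.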

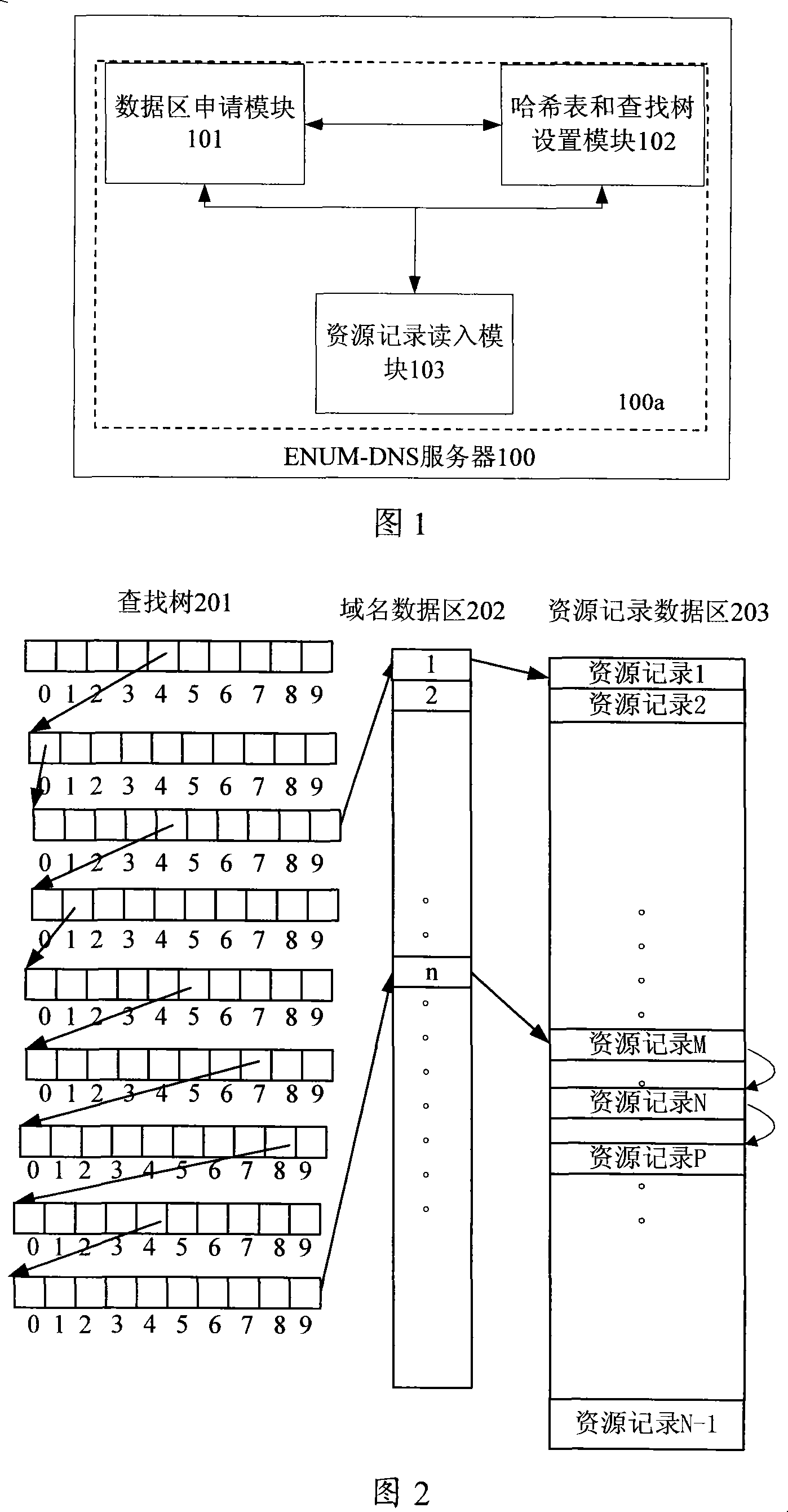

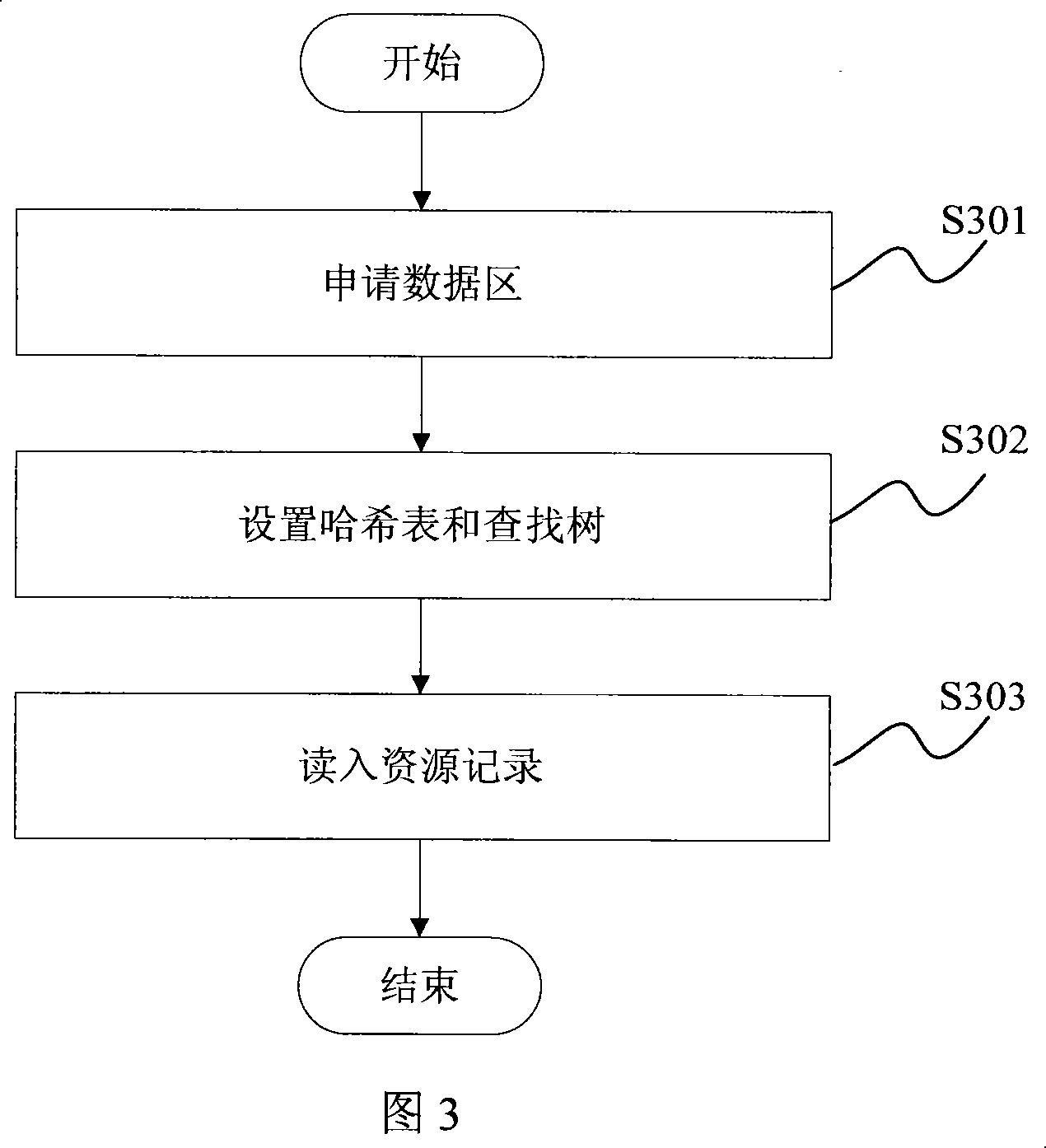

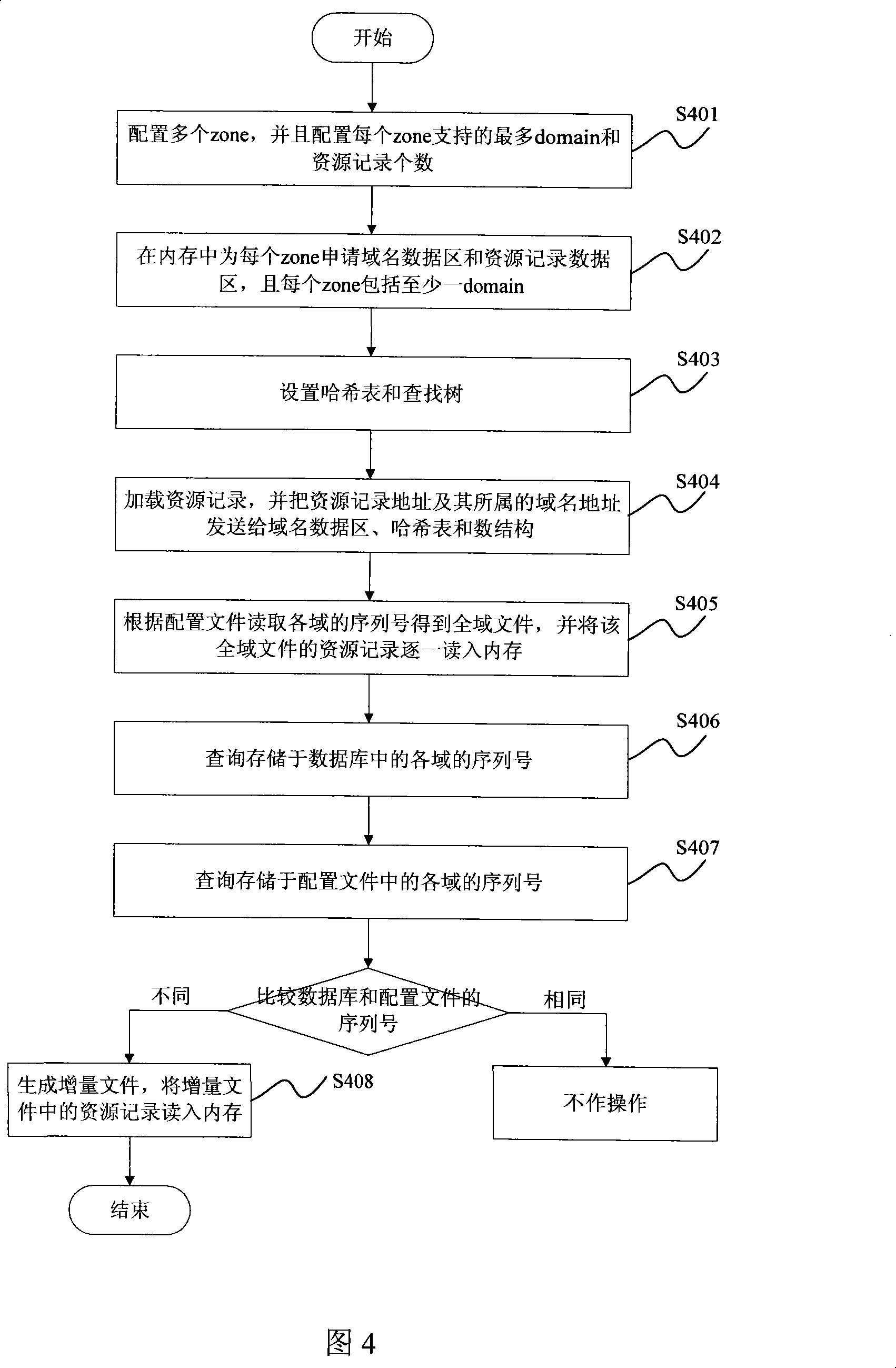

Memory processing method and device for a telephone number to domain name mapping server

Status: Inactive | Publication: CN101102336A | Tags: Meet the requirements for resource records; Rapid positioning; Transmission; Special data processing applications; Domain name; Memory processing

The method comprises: in memory, allocating a domain name data area and a resource record data area for each domain, each domain comprising at least one domain name; setting up at least one hash table and a search tree in memory, where each hash table node stores the non-digit portion of a domain name and the location of its corresponding search tree, and each search tree node stores the digit portion of a domain name and the location of its domain name data area; and allocating resource records dynamically, saving the initial resource records for each domain name in the domain name data area and the other resource records for each domain name in the resource record data area. The invention also provides a corresponding telephone number mapping domain name server.

Owner:ZTE CORP
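
The two-level lookup in the abstract (a hash table keyed by the non-digit part, then a search tree keyed by the digit part) can be sketched in Python as below. Several assumptions apply: the digit portion is taken to be the run of leading all-digit labels, reversed into dialing order as in ENUM domains such as `4.3.2.1.e164.arpa`; a sorted list searched with `bisect` stands in for the search tree; and a single list serves as the domain name data area. `split_domain` and `DomainIndex` are hypothetical names.

```python
import bisect
from typing import Dict, List, Optional, Tuple


def split_domain(name: str) -> Tuple[str, str]:
    """Split '4.3.2.1.e164.arpa' into digit part '1234' and suffix 'e164.arpa'."""
    labels = name.lower().rstrip(".").split(".")
    i = 0
    while i < len(labels) and labels[i].isdigit():
        i += 1
    return "".join(reversed(labels[:i])), ".".join(labels[i:])


class DomainIndex:
    def __init__(self) -> None:
        # Hash table: non-digit portion -> sorted keys (stand-in for the search tree) and data slots.
        self._trees: Dict[str, Tuple[List[str], List[int]]] = {}
        # Domain name data area: one list of resource records per domain name.
        self._data_area: List[List[str]] = []

    def add(self, name: str, record: str) -> None:
        digits, suffix = split_domain(name)
        keys, slots = self._trees.setdefault(suffix, ([], []))
        pos = bisect.bisect_left(keys, digits)
        if pos < len(keys) and keys[pos] == digits:
            self._data_area[slots[pos]].append(record)
            return
        self._data_area.append([record])
        keys.insert(pos, digits)
        slots.insert(pos, len(self._data_area) - 1)

    def lookup(self, name: str) -> Optional[List[str]]:
        digits, suffix = split_domain(name)
        tree = self._trees.get(suffix)
        if tree is None:
            return None
        keys, slots = tree
        pos = bisect.bisect_left(keys, digits)
        if pos < len(keys) and keys[pos] == digits:
            return self._data_area[slots[pos]]
        return None


idx = DomainIndex()
idx.add("4.3.2.1.e164.arpa", "NAPTR sip:alice@example.com")
print(idx.lookup("4.3.2.1.e164.arpa"))  # ['NAPTR sip:alice@example.com']
print(idx.lookup("5.3.2.1.e164.arpa"))  # None
```

Hashing the shared suffix once and searching only the digits keeps lookups fast when millions of numbers share a few zones; a production server would use a balanced tree or trie instead of list insertion.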

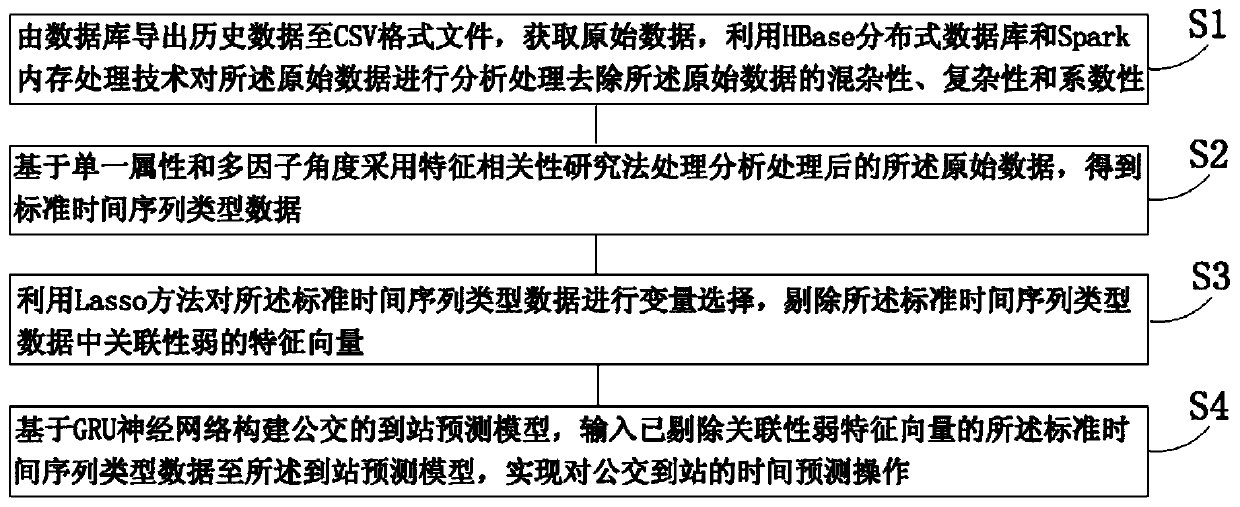

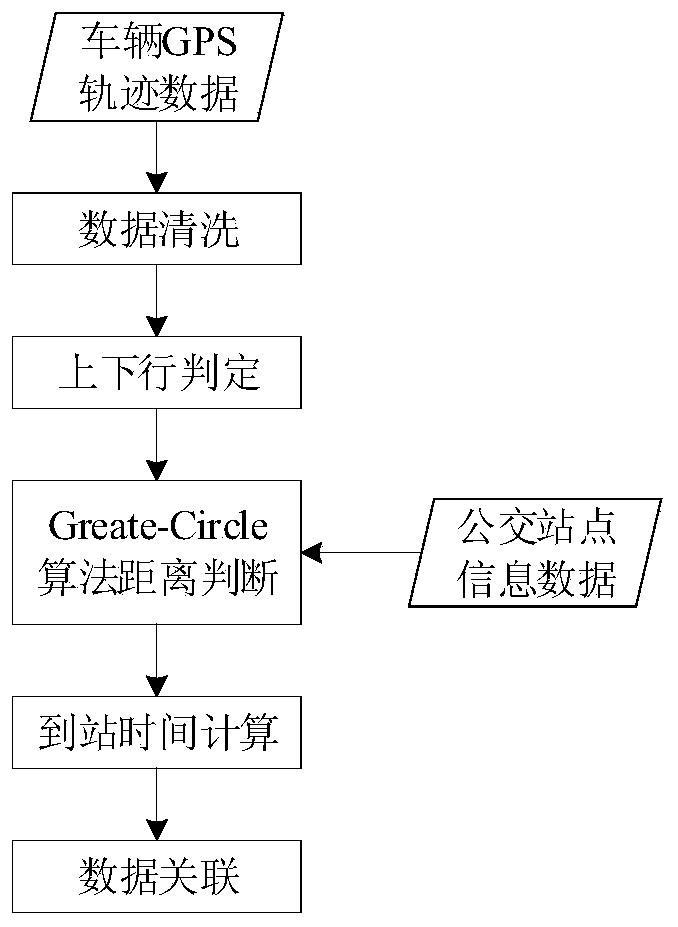

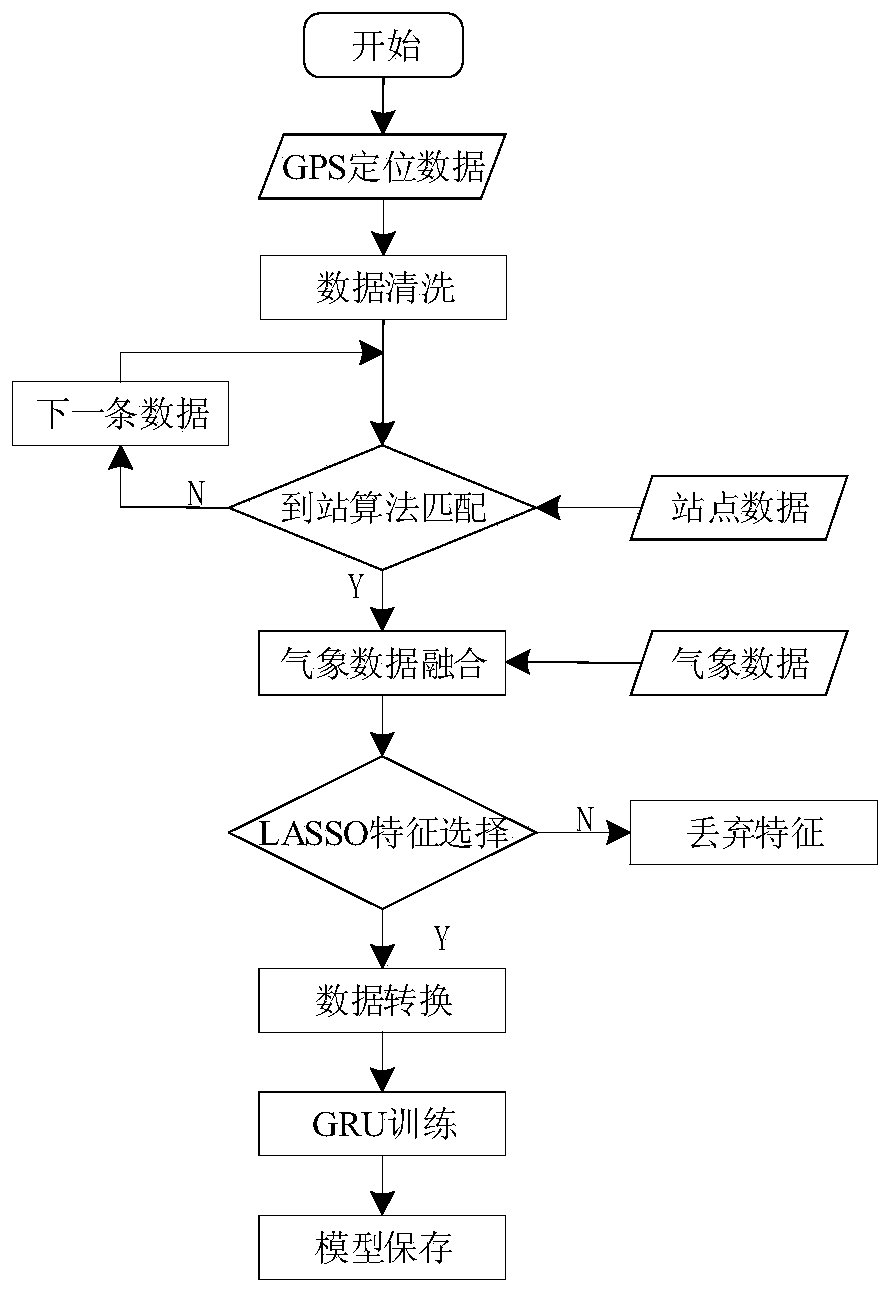

Bus arrival time prediction method based on GRU neural network

Status: Active | Publication: CN109920248A | Tags: Implement extraction; Improve accuracy; Detection of traffic movement; Neural architectures; Original data; Algorithm

The invention discloses a bus arrival time prediction method based on a GRU neural network. The method comprises: exporting historical data from a database to a CSV file to obtain the original data, and analyzing it with an HBase distributed database and Spark in-memory processing to remove its heterogeneity and complexity; analyzing and processing the cleaned data from single-attribute and multi-factor perspectives with a feature correlation method to obtain standard time-series data; applying the Lasso method for variable selection on the standard time-series data, rejecting weakly correlated feature vectors; and building a bus arrival prediction model based on a GRU neural network and feeding it the standard time-series data with the weakly correlated features removed, thereby predicting bus arrival times. This effectively improves the accuracy of bus arrival time prediction.

Owner:NANTONG UNIVERSITY

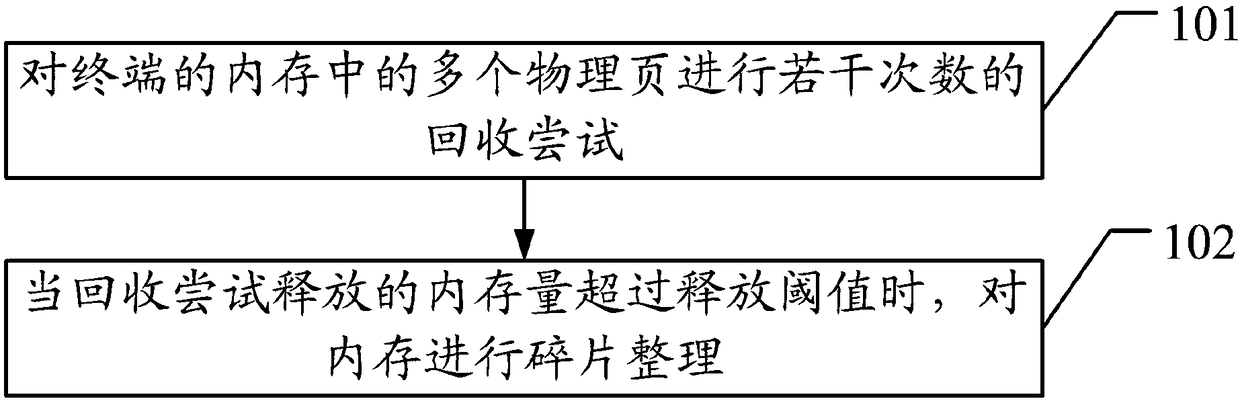

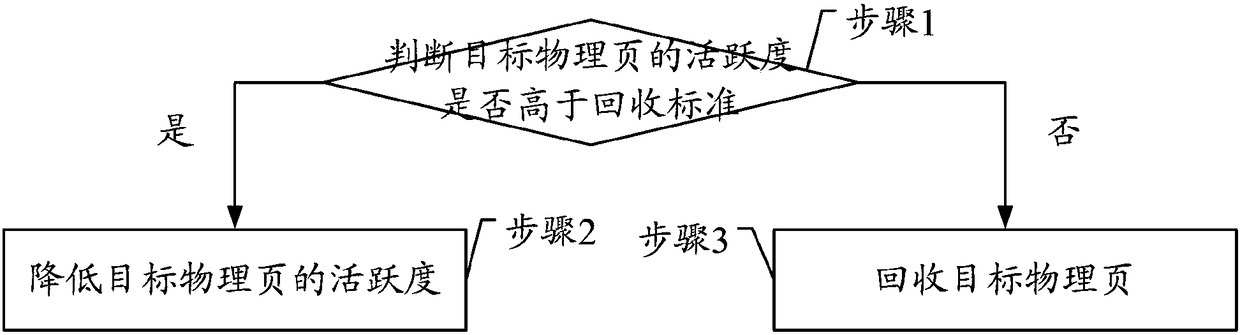

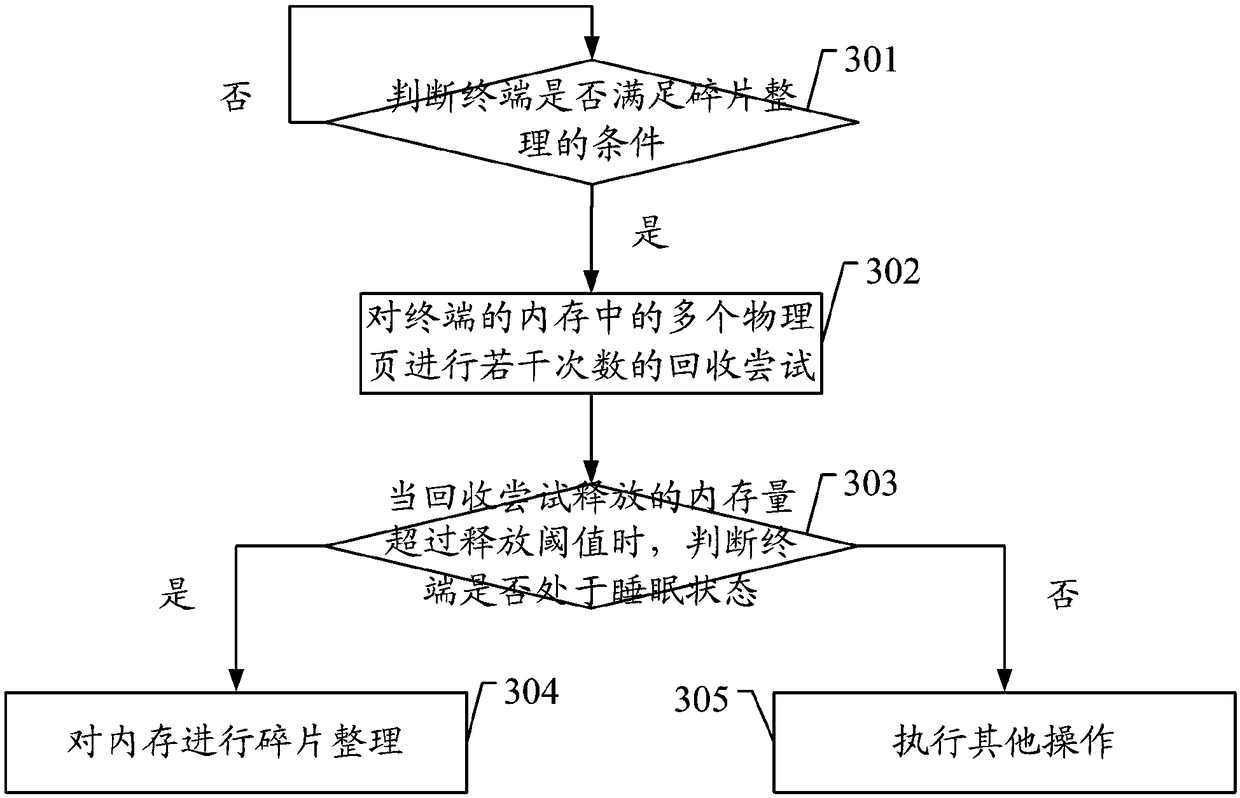

Memory processing method and apparatus, computer apparatus and computer readable storage medium

Status: Active | Publication: CN108205473A | Tags: Improve finishing efficiency; Increase available memory space; Resource allocation; Memory addressing/allocation/relocation; Memory processing; Computational science

Embodiments of the invention disclose a memory processing method and apparatus, a computer apparatus, and a computer-readable storage medium. They relate to the field of computer technology and address the difficulty, in the prior art, of making full use of defragmentation to expand available memory space. The method comprises: performing reclaim attempts on multiple physical pages in a terminal's memory several times; and, when the memory capacity released by the reclaim attempts exceeds a release threshold, defragmenting the memory. Each reclaim attempt on a physical page comprises judging whether the page's activity degree is higher than a reclaim standard, where the activity degree marks the page's activity level and is positively correlated with it; if so, the page's activity degree is reduced; otherwise, the page is reclaimed.

Owner:MEIZU TECH CO LTD
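
The reclaim ("recycling") attempt described above is an aging scheme: active pages are demoted a step at a time, and only pages at or below the standard are reclaimed. The abstract does not say how much the activity degree drops per attempt or whether the release threshold is checked per attempt or cumulatively. The Python sketch below assumes a decrement of one and a cumulative check; `PAGE_SIZE`, `PhysicalPage`, `reclaim_attempt`, and `reclaim_then_defragment` are hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple

PAGE_SIZE = 4096  # bytes per physical page (assumed)


@dataclass
class PhysicalPage:
    pfn: int
    activity: int          # higher value = more active page
    reclaimed: bool = False


def reclaim_attempt(pages: List[PhysicalPage], reclaim_standard: int) -> int:
    """One reclaim attempt over all pages; returns the bytes released."""
    released = 0
    for page in pages:
        if page.reclaimed:
            continue
        if page.activity > reclaim_standard:
            page.activity -= 1      # still active: age it instead of reclaiming
        else:
            page.reclaimed = True   # inactive enough: reclaim it
            released += PAGE_SIZE
    return released


def reclaim_then_defragment(
    pages: List[PhysicalPage],
    reclaim_standard: int,
    attempts: int,
    release_threshold: int,
    defragment: Callable[[], None],
) -> Tuple[int, bool]:
    """Run several reclaim attempts; defragment only if enough memory was released."""
    released = sum(reclaim_attempt(pages, reclaim_standard) for _ in range(attempts))
    if released > release_threshold:
        defragment()
        return released, True
    return released, False


pages = [PhysicalPage(0, activity=0), PhysicalPage(1, activity=1), PhysicalPage(2, activity=3)]
print(reclaim_then_defragment(pages, 1, 2, 4096, lambda: None))  # (8192, True)
```

Page 2 starts well above the standard, so two attempts only age it from 3 to 1; gating defragmentation on the released amount avoids paying for compaction when reclaiming freed too little to matter.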

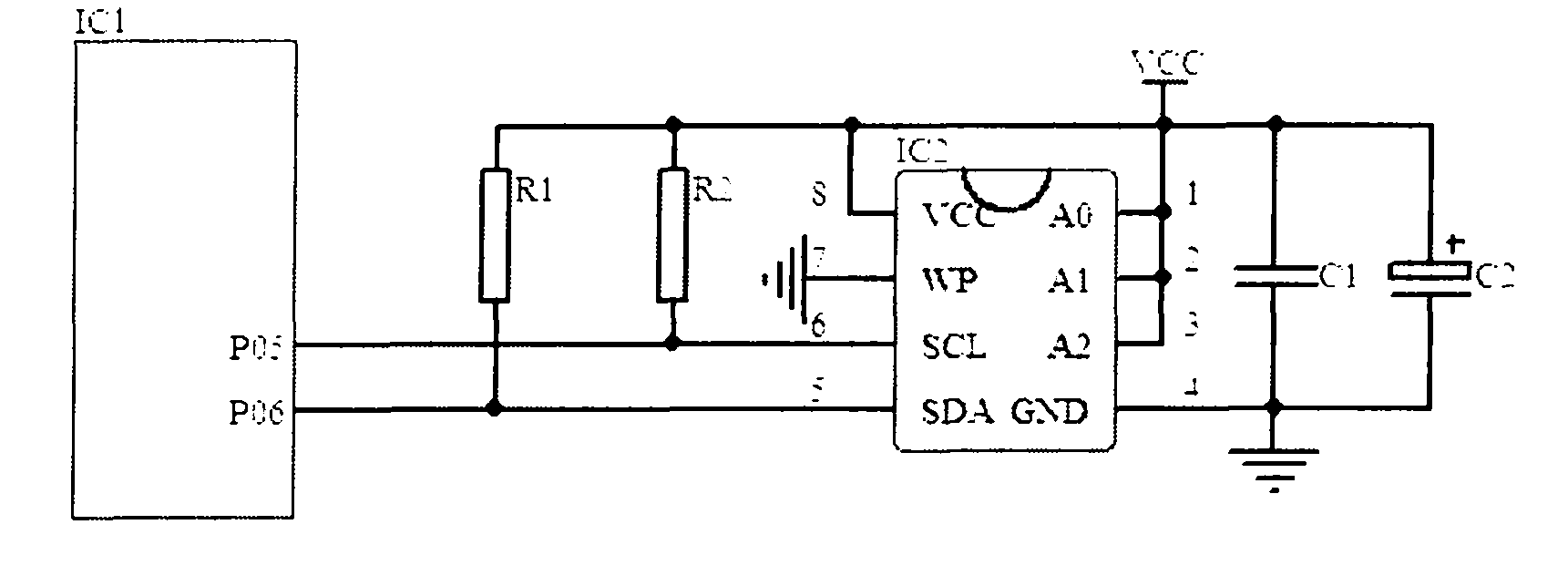

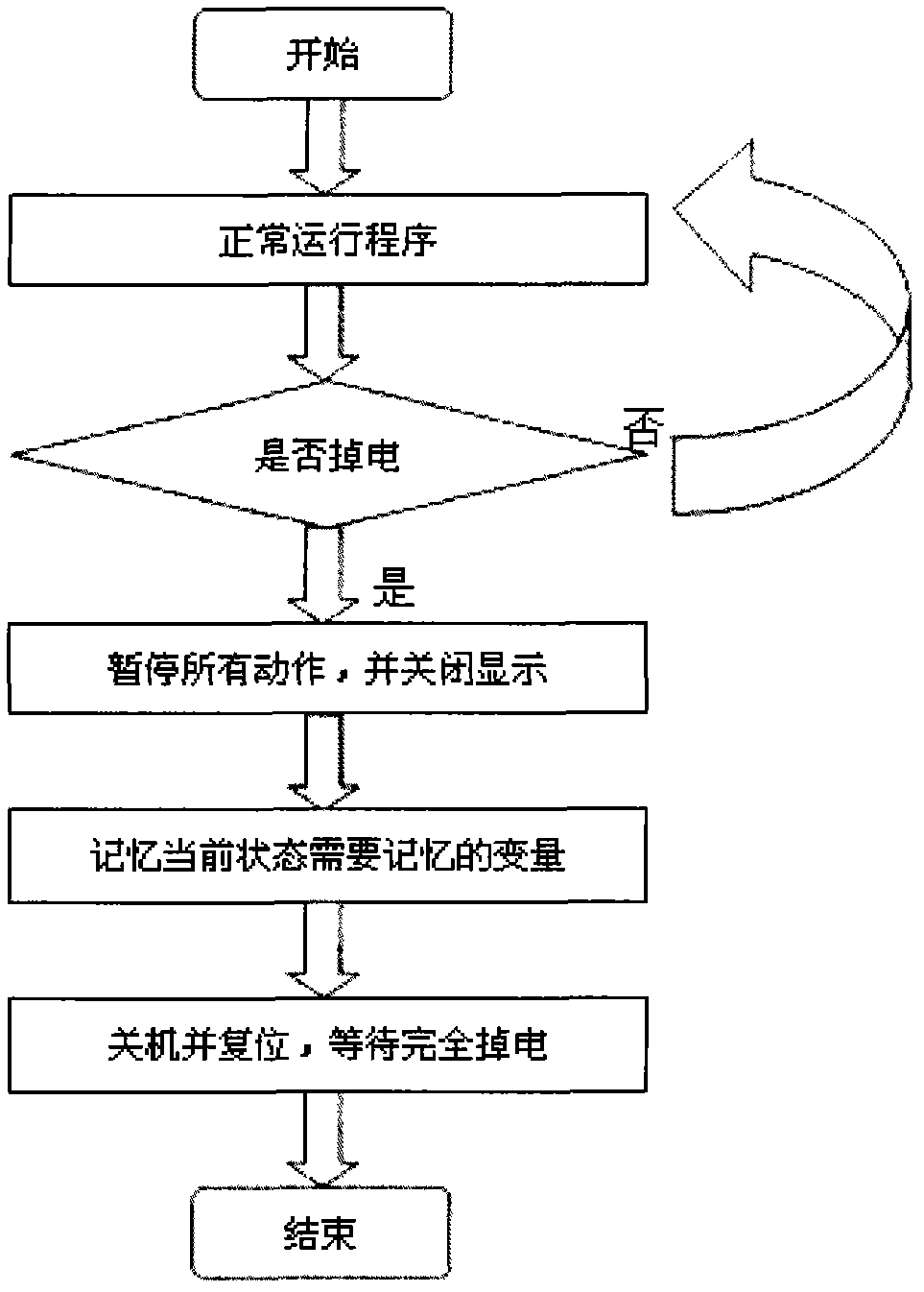

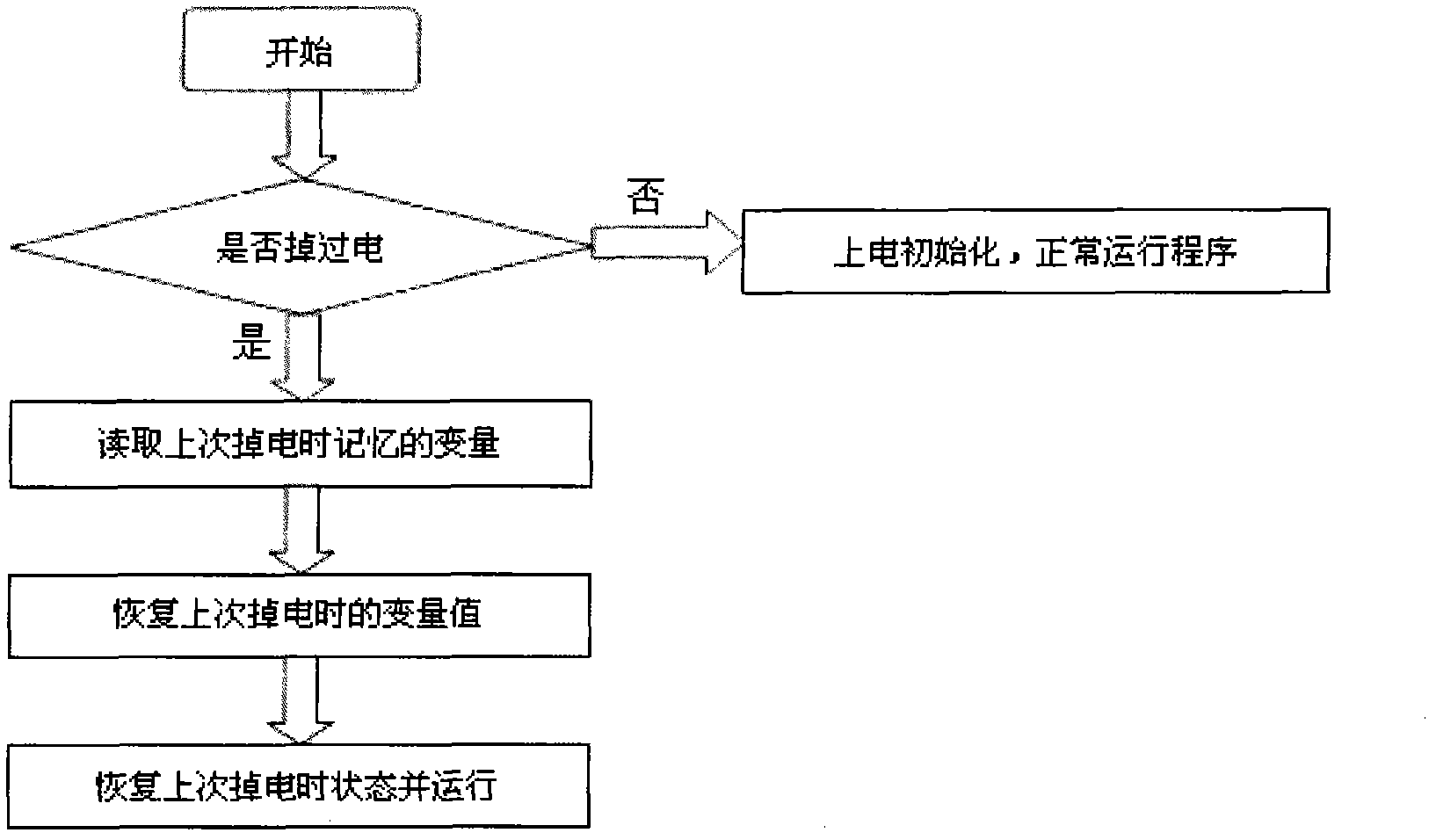

Fully automatic washing machine instantaneous power off memory circuit and processing method thereof

Status: Inactive | Publication: CN102061592A | Tags: Realize the memory function of instantaneous power failure; Meet needs; Control devices for washing apparatus; Textiles and paper; Microcontroller; Microcomputer

The invention discloses a fully automatic washing machine instantaneous power-off memory circuit and its processing method. The circuit consists of a single-chip microcomputer IC1, a memory chip IC2, and a peripheral circuit. When the washing machine suddenly loses power, IC1 records the machine's current operating mode and sends the record to IC2 for storage; when power returns, IC1 restores the original operating mode. The memory circuit replaces the washing machine's original periodic power-off memory processing method, reduces the extra washing time caused by sudden power loss, saves electricity and water, provides an instantaneous power-off memory function, and meets customer requirements.

Owner:HEFEI MIDEA WASHING MACHINE

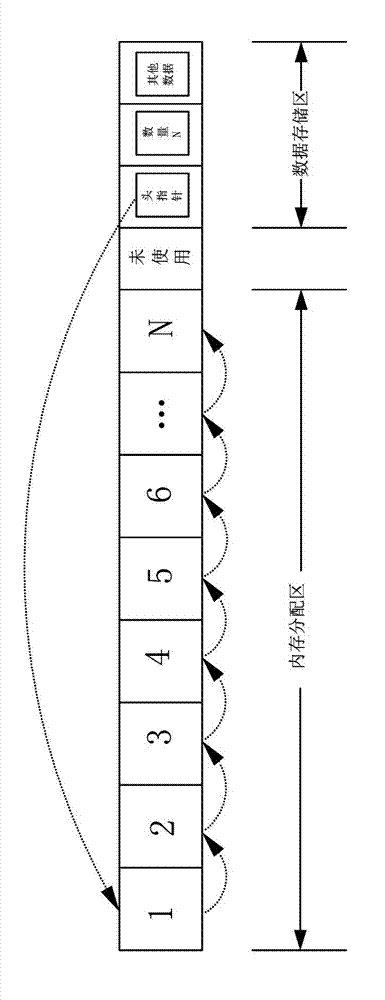

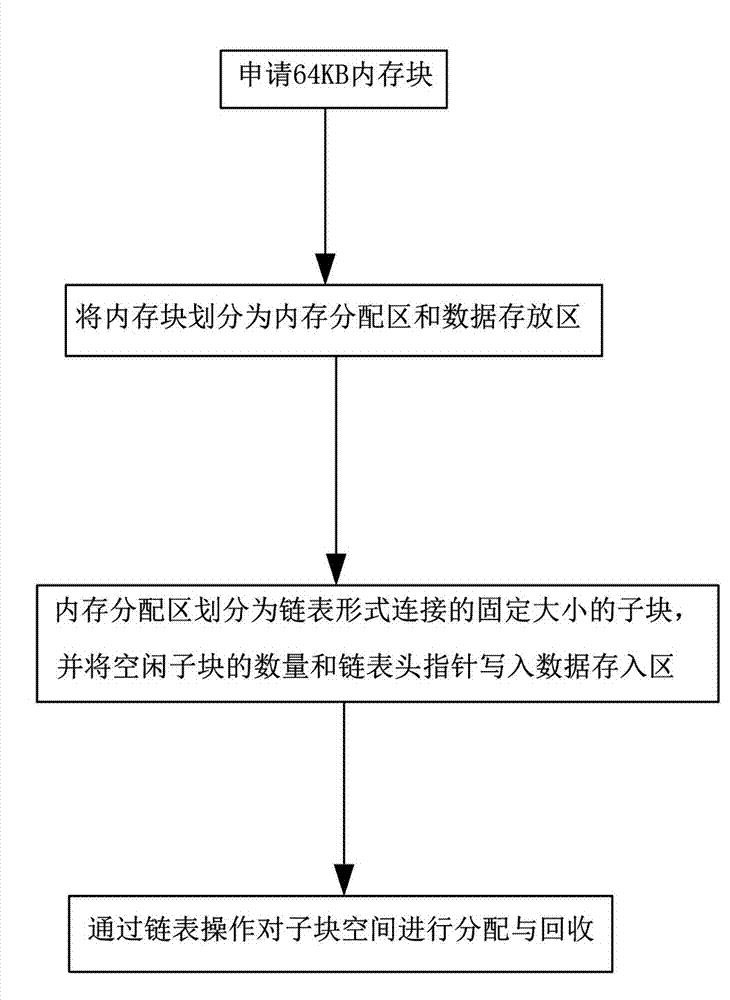

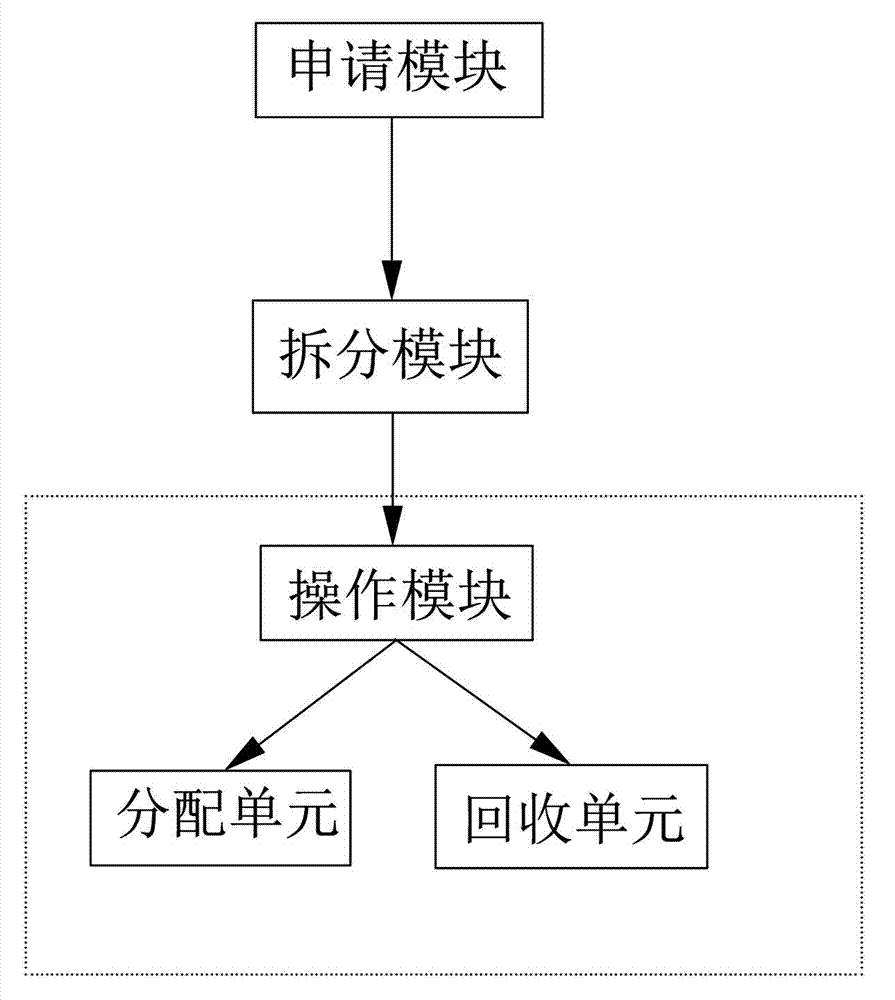

Method and system for memory management by memory block

Status: Inactive | Publication: CN103617123A | Tags: Processing speed; Improve management efficiency; Memory addressing/allocation/relocation; Memory processing; Parallel computing

The invention discloses a method and system for memory management by memory block. The method includes allocating a 64 KB memory block; dividing it into a memory allocation region and a data storage region; dividing the memory allocation region into fixed-size sub-blocks connected as a linked list; writing the linked-list head pointer and the number of idle sub-blocks into the data storage region; and performing memory allocation and recovery through linked-list operations. Because the 64 KB block is allocated once and its memory units are allocated and recovered through the linked list, fragmentation that may arise during memory allocation is reduced or even eliminated. The linked-list structure is simple and avoids the complex traversal and lookup operations that prior-art allocation and recovery require, noticeably increasing memory processing speed and effectively improving memory management efficiency.

Owner:ZHUHAI KINGSOFT ONLINE GAME TECH CO LTD +1
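
The linked-list allocation above can be illustrated with an intrusive free list inside one 64 KB buffer. This is a sketch under stated assumptions: the patent does not give the region layout, so here an 8-byte header holds the head index and free count, the remaining space is split into 256-byte sub-blocks, and each free sub-block stores the index of the next free one. The `BlockPool` class and its method names are hypothetical.

```python
import struct

BLOCK_SIZE = 64 * 1024   # one 64 KB block, as in the abstract
HEADER_SIZE = 8          # assumed: 4-byte head index + 4-byte free count
SUB_SIZE = 256           # assumed fixed sub-block size
NIL = 0xFFFFFFFF         # end-of-list marker


class BlockPool:
    """Hypothetical fixed-size allocator threaded through a single 64 KB block."""

    def __init__(self) -> None:
        self.mem = bytearray(BLOCK_SIZE)
        self.count = (BLOCK_SIZE - HEADER_SIZE) // SUB_SIZE
        # Chain every sub-block onto the free list: i -> i + 1, last -> NIL.
        for i in range(self.count):
            nxt = i + 1 if i + 1 < self.count else NIL
            struct.pack_into("<I", self.mem, self._offset(i), nxt)
        self._set_header(0, self.count)

    def _offset(self, i: int) -> int:
        return HEADER_SIZE + i * SUB_SIZE

    def _header(self) -> tuple:
        return struct.unpack_from("<II", self.mem, 0)

    def _set_header(self, head: int, free: int) -> None:
        struct.pack_into("<II", self.mem, 0, head, free)

    def alloc(self):
        """Pop the head of the free list in O(1); return None when exhausted."""
        head, free = self._header()
        if head == NIL:
            return None
        (nxt,) = struct.unpack_from("<I", self.mem, self._offset(head))
        self._set_header(nxt, free - 1)
        return head

    def release(self, i: int) -> None:
        """Push sub-block i back onto the free list in O(1)."""
        head, free = self._header()
        struct.pack_into("<I", self.mem, self._offset(i), head)
        self._set_header(i, free + 1)

    def free_count(self) -> int:
        return self._header()[1]
```

Because allocation and release only touch the list head, neither operation traverses or searches the block, which is the property the abstract credits for the speedup.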

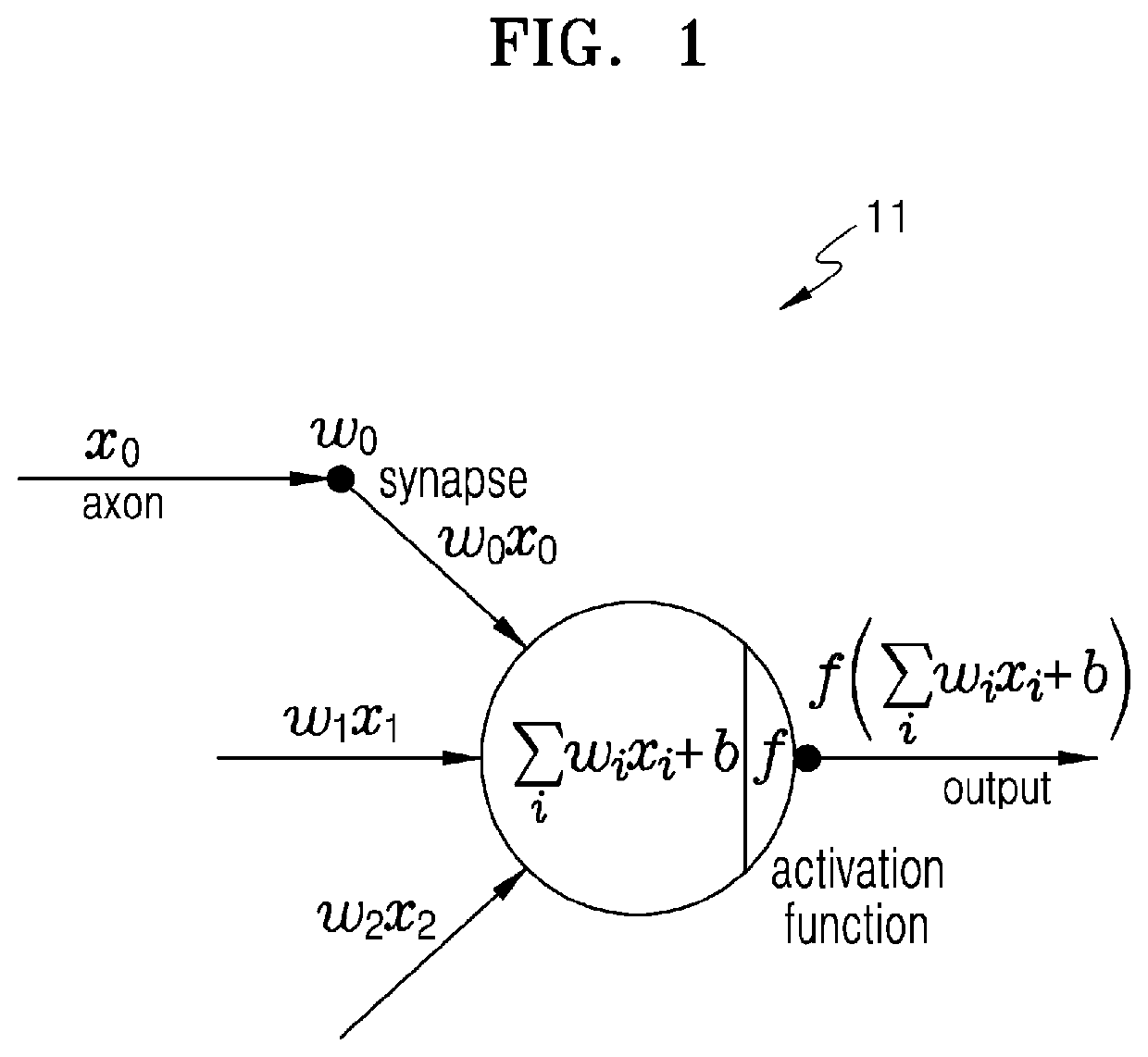

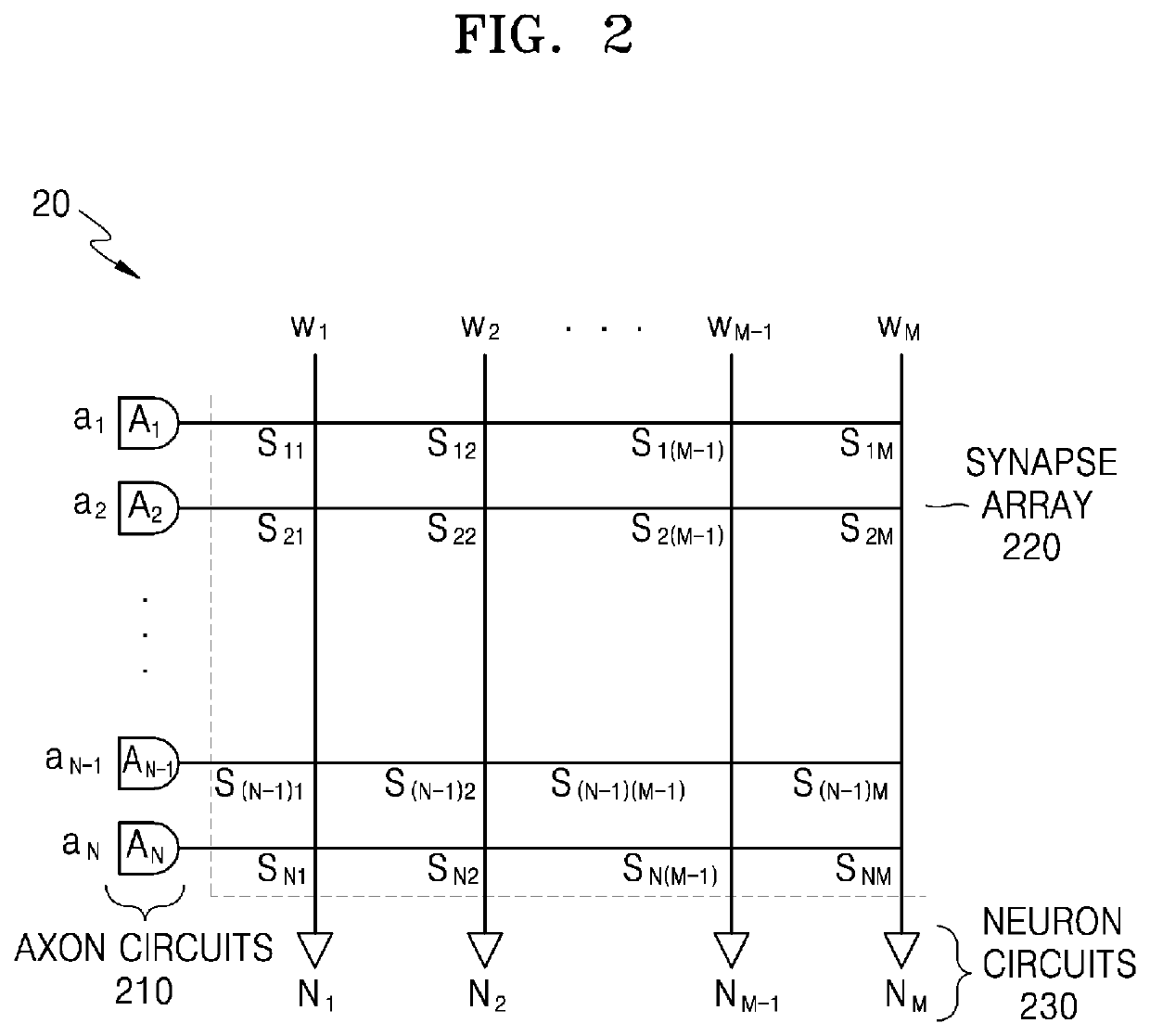

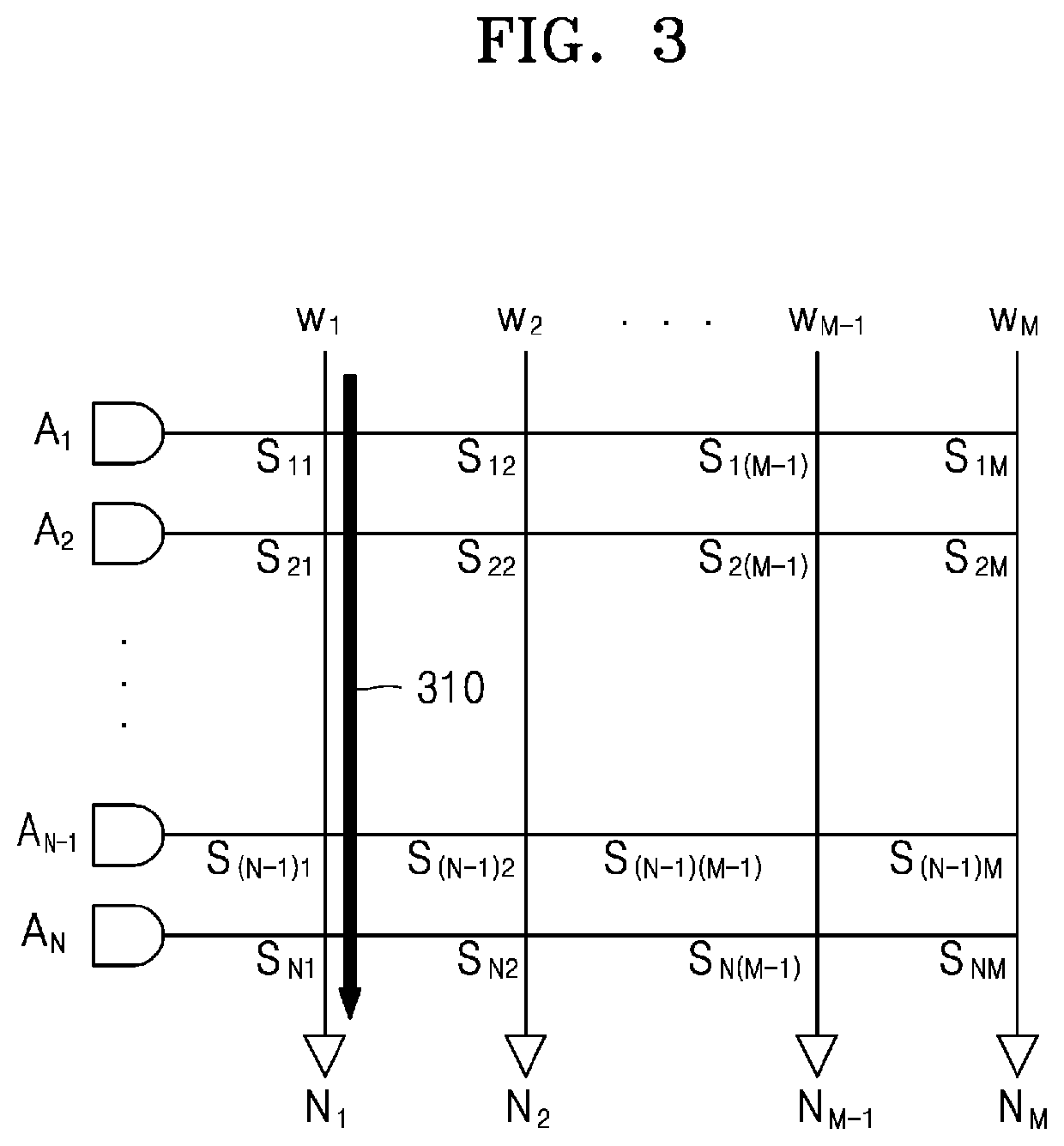

Apparatus and method with in-memory processing

An apparatus for performing in-memory processing includes a memory cell array, a sampling circuit and a processing circuit. The memory cell array outputs, for each column line, the sum of the column currents produced when an input signal is applied to the row lines of the memory cells. The sampling circuit has a capacitor connected to each column line, and each capacitor is charged by a sampling voltage corresponding to that column's current sum. The processing circuit compares a reference voltage with the capacitor's present voltage in response to a trigger pulse generated at a timing that corresponds to a quantization level, where the quantization levels are time-sectioned according to the capacitor's charge time. When the capacitor voltage reaches the reference voltage, the processing circuit determines the quantization level corresponding to the sampling voltage by time-to-digital conversion.

Owner:SAMSUNG ELECTRONICS CO LTD
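
A behavioral model helps show why timing the capacitor yields a digital value. This is a sketch only: it assumes an ideal crossbar with conductance matrix `G`, an RC charging curve V(t) = Vs(1 - e^(-t/RC)) toward the sampling voltage, and a given list of trigger-pulse times; the patent specifies none of these values, and both function names are hypothetical.

```python
import math


def column_sums(G, v_in):
    """Crossbar multiply-accumulate: column j collects sum_i G[i][j] * v_in[i]."""
    return [sum(G[i][j] * v_in[i] for i in range(len(v_in))) for j in range(len(G[0]))]


def tdc_level(v_sample, v_ref, rc, trigger_times):
    """Index of the first trigger pulse at which the capacitor voltage has reached v_ref.

    Assumes the capacitor charges toward v_sample as v_sample * (1 - exp(-t / rc)).
    Returns len(trigger_times) if the reference is never reached.
    """
    for level, t in enumerate(trigger_times):
        v_cap = v_sample * (1.0 - math.exp(-t / rc))
        if v_cap >= v_ref:  # comparator fires on this trigger pulse
            return level
    return len(trigger_times)
```

A larger sampling voltage crosses the reference on an earlier pulse, so in this model a lower returned index means a larger column sum.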

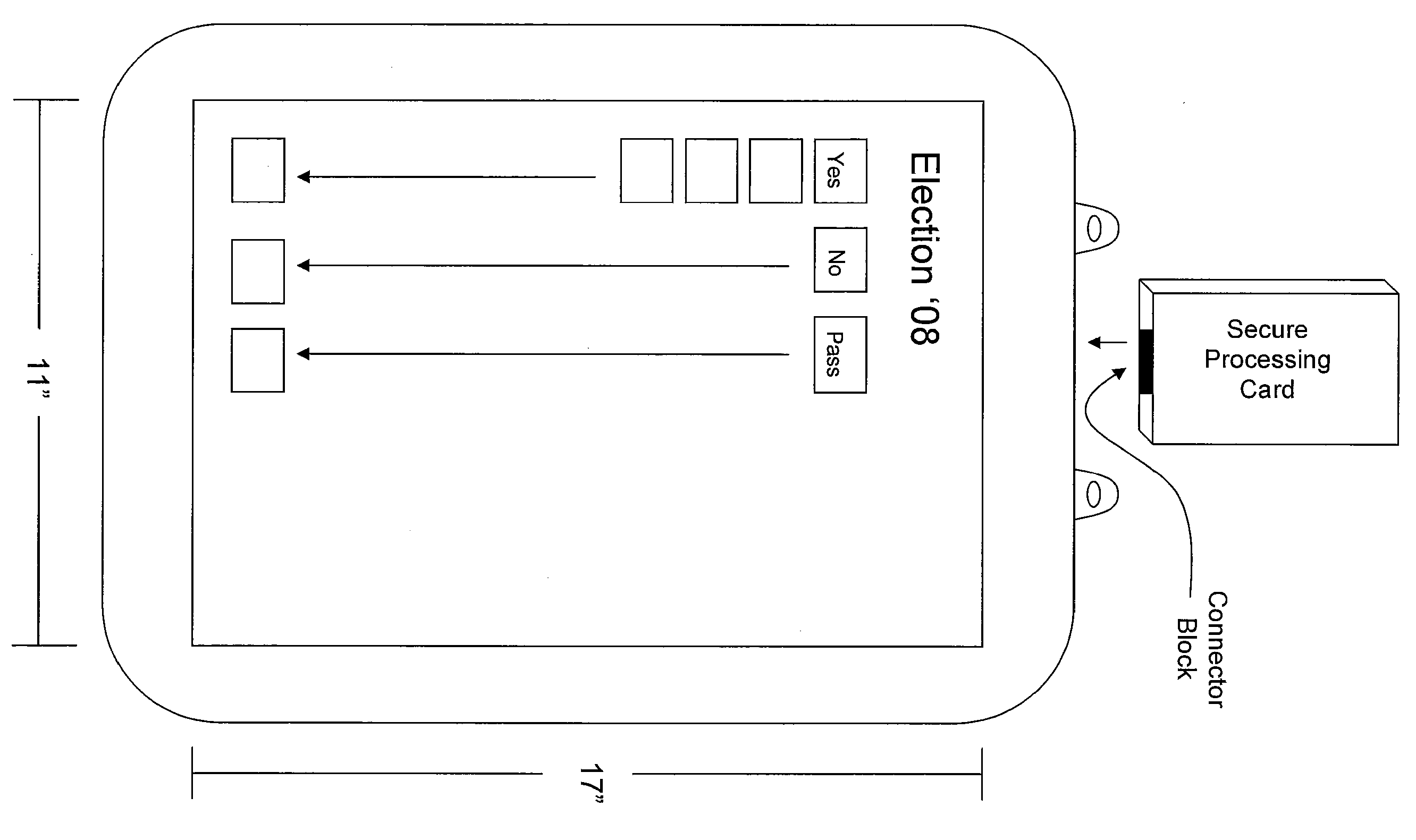

Voting Machine with Secure Memory Processing

Inactive | US20070170252A1 | Voting apparatus; Co-operative working arrangements; Computer hardware; Memory processing

An integrated voting system includes a memory element configured with multiple lock bits. The lock bits are arranged according to vote records, so each individual vote is recorded securely and the vote count has a tamper-resistant record. The system forms a complete, integrated, secure voting system that can be reused across different elections without requiring updates, changes, or other maintenance.

Owner:ORTON KEVIN R
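
The abstract does not say how lock bits map to vote records. A minimal sketch, assuming one write-once lock bit per record, shows the tamper-resistance idea: once a record's bit is set, that record can no longer be overwritten. The `LockedVoteMemory` class is hypothetical.

```python
class LockedVoteMemory:
    """Hypothetical vote store with one write-once lock bit per record."""

    def __init__(self, n_records: int) -> None:
        self.records = [None] * n_records
        self.lock_bits = 0  # bit i set => record i has been written and locked

    def is_locked(self, index: int) -> bool:
        return (self.lock_bits >> index) & 1 == 1

    def cast(self, index: int, choice: str) -> None:
        if self.is_locked(index):
            raise PermissionError(f"record {index} is locked")
        self.records[index] = choice
        self.lock_bits |= 1 << index  # lock bits can be set but never cleared

    def tally(self) -> dict:
        counts = {}
        for choice in self.records:
            if choice is not None:
                counts[choice] = counts.get(choice, 0) + 1
        return counts
```

A second `cast` to an already-written record raises `PermissionError`, so the tally can only grow by appending new records.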

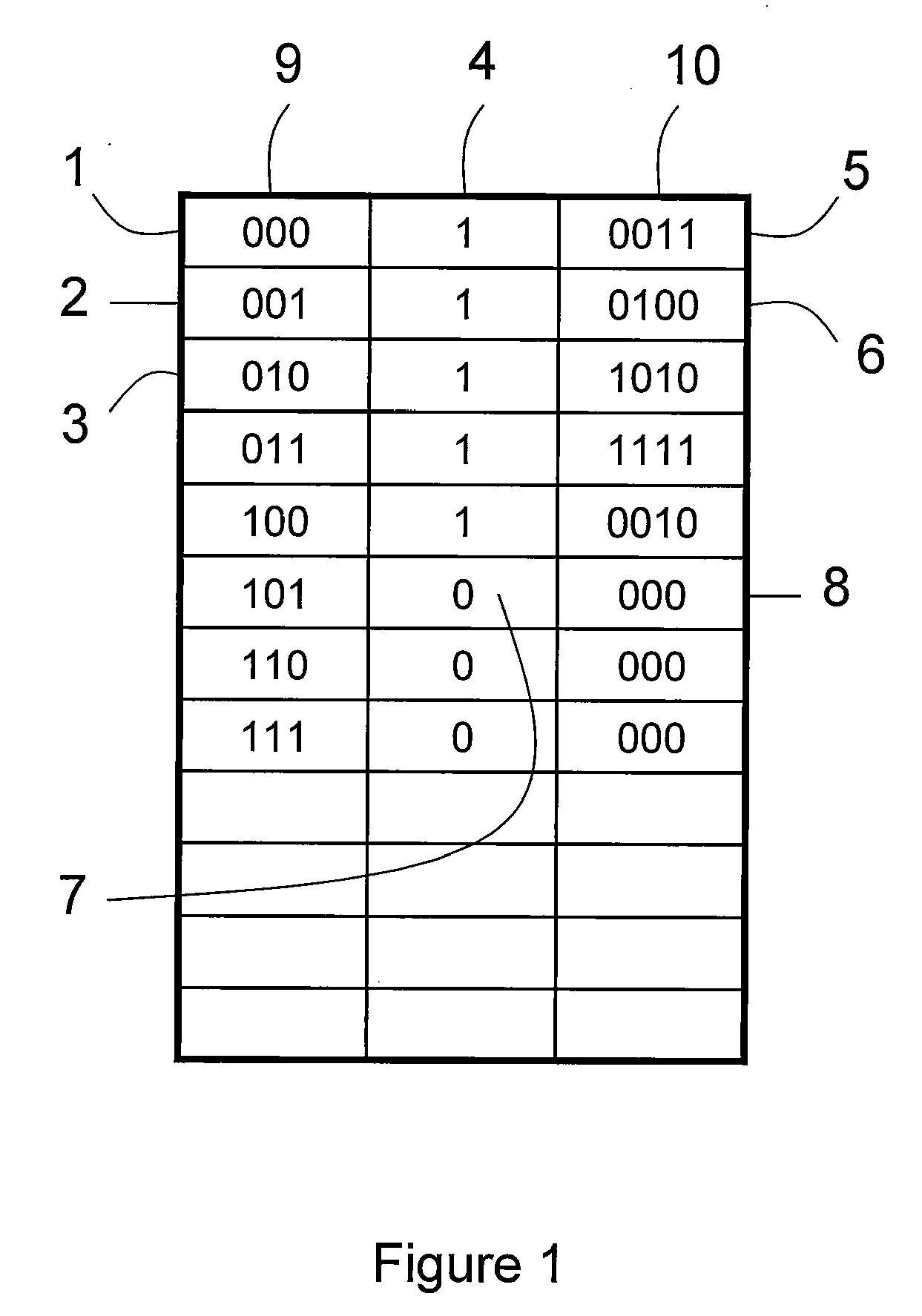

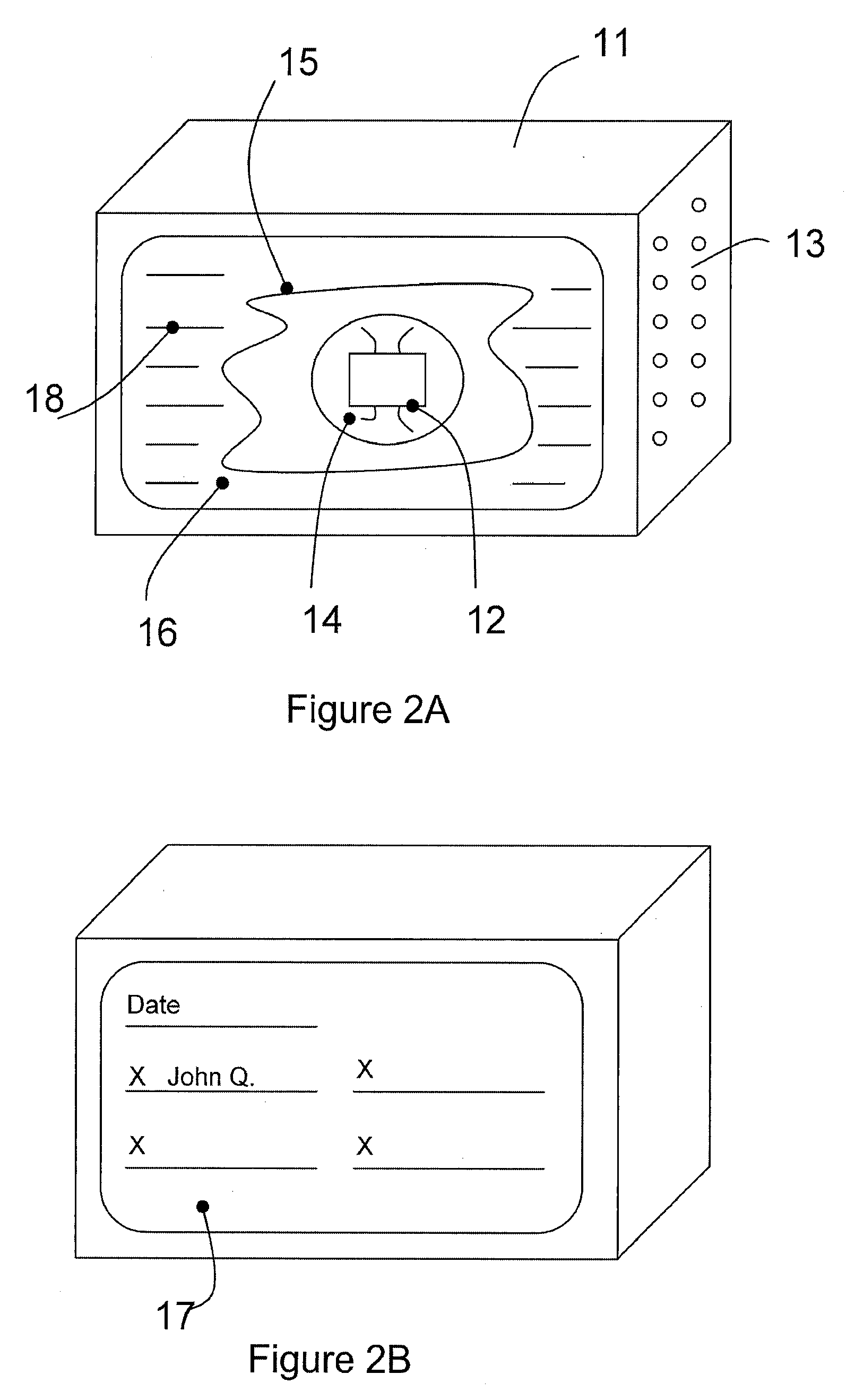

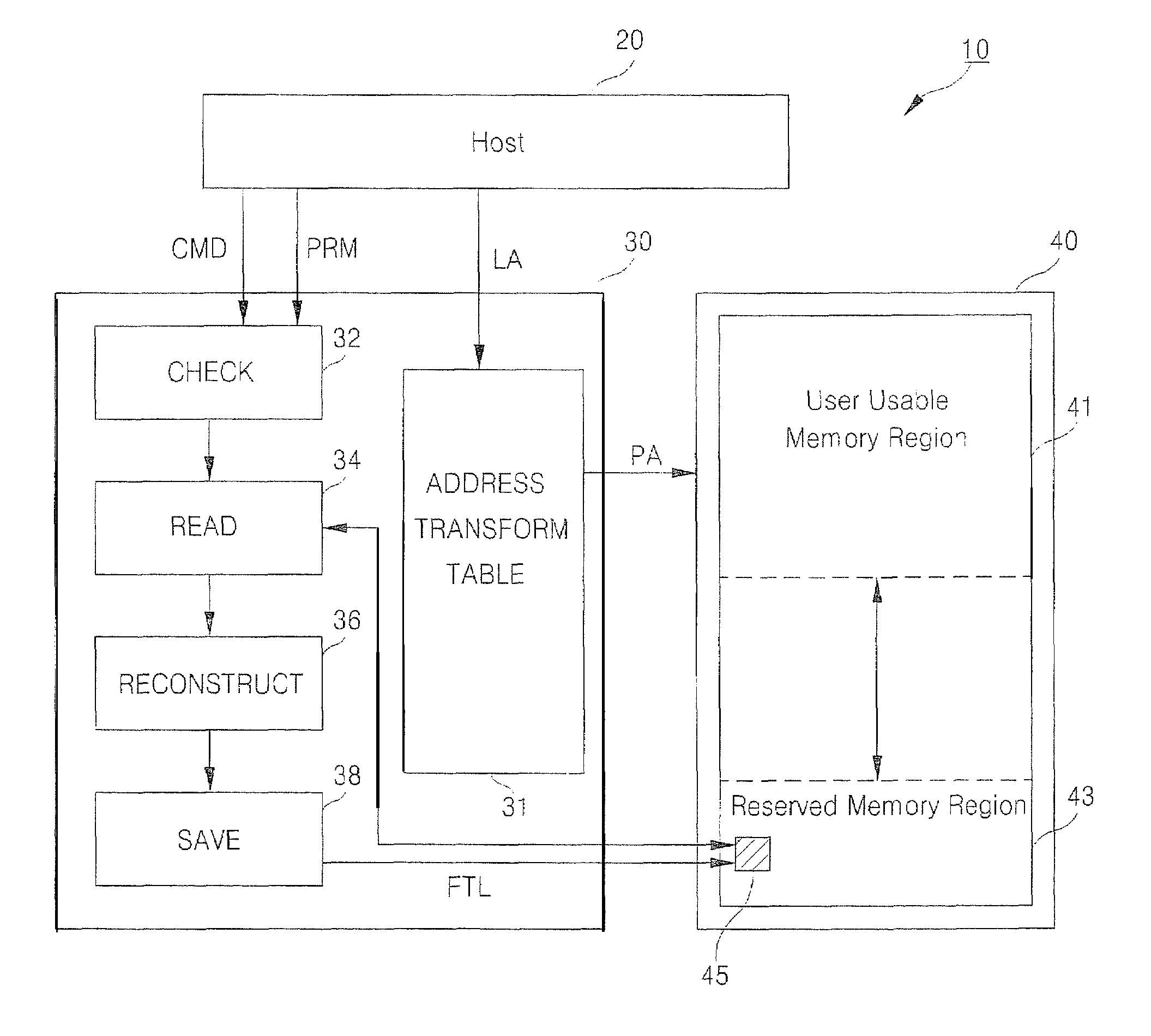

Methods and apparatus for reallocating addressable spaces within memory devices

Active | US8001356B2 | Memory architecture accessing/allocation; Program control using stored programs; Memory address; Memory processing

Integrated circuit systems include a non-volatile memory device (e.g., a flash EEPROM device) and a memory processing circuit electrically coupled to it. The memory processing circuit is configured to reallocate addressable space within the non-volatile memory device. In response to a capacity adjust command received by the memory processing circuit, it performs this reallocation by increasing the number of physical addresses within the device that are reserved as redundant memory addresses.

Owner:SAMSUNG ELECTRONICS CO LTD
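
The reallocation can be pictured as moving a boundary between host-visible and reserved physical addresses. A minimal sketch, assuming the redundant pool occupies the top of the physical address range and is counted in blocks; the `NvmAddressMap` class and its `capacity_adjust` method are hypothetical stand-ins for the memory processing circuit and the capacity adjust command.

```python
class NvmAddressMap:
    """Hypothetical model: the top `reserved` physical blocks form the redundant pool."""

    def __init__(self, physical_blocks: int, reserved: int) -> None:
        if not 0 <= reserved <= physical_blocks:
            raise ValueError("reserved must be within the physical range")
        self.physical_blocks = physical_blocks
        self.reserved = reserved

    def user_capacity(self) -> int:
        # Addressable space still visible to the host.
        return self.physical_blocks - self.reserved

    def redundant_addresses(self) -> range:
        # Assumed placement: redundant blocks sit above the user-visible range.
        return range(self.user_capacity(), self.physical_blocks)

    def capacity_adjust(self, extra_reserved: int) -> int:
        """Handle a capacity adjust command by reserving more blocks as redundant."""
        if extra_reserved < 0 or self.reserved + extra_reserved > self.physical_blocks:
            raise ValueError("cannot reserve that many blocks")
        self.reserved += extra_reserved
        return self.user_capacity()
```

Reserving more redundant blocks shrinks the host-visible capacity but leaves more spares for replacing worn or defective blocks, which is the trade-off the command exposes.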

Memory processing in a microprocessor

Inactive | US20020166037A1 | Efficient processing; Solve the real problem; Memory systems; Micro-instruction address formation; Memory address; Memory processing

The invention relates to memory processing in a microprocessor. The microprocessor comprises a memory for storing data, organized by alignment boundaries; at least one register for storing data used during calculation; memory addressing means for addressing the memory by its alignment boundaries and transferring data between the memory and the register; and a hardware shift register that can be shifted with one-bit precision and comprises a data loading zone and a guard zone. When a memory access does not fit within an alignment boundary, the memory addressing means transfer the data between the memory and the register through the data loading zone of the hardware shift register, which processes the data using shifts and the guard zone.

Owner:NOKIA CORP
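
The unaligned transfer described above can be sketched as two aligned loads followed by a bit-granular shift. The widths and zone layout here are assumptions, not taken from the patent: 32-bit words, little-endian bit numbering, and a 64-bit shift register whose lower half plays the data loading zone and whose upper half plays the guard zone. The `unaligned_read` function is hypothetical.

```python
WORD_BITS = 32
MASK = (1 << WORD_BITS) - 1


def unaligned_read(words, bit_addr):
    """Read a 32-bit value starting at an arbitrary bit address.

    words: list of 32-bit ints; bit 0 of words[0] is bit address 0 (assumed).
    """
    idx, shift = divmod(bit_addr, WORD_BITS)
    lo = words[idx]                                     # aligned load into the loading zone
    hi = words[idx + 1] if idx + 1 < len(words) else 0  # next aligned word fills the guard zone
    reg = (hi << WORD_BITS) | lo                        # 64-bit shift register contents
    return (reg >> shift) & MASK                        # bit-accurate shift, keep one word
```

For instance, with `words = [0x89ABCDEF, 0x01234567]`, reading at bit address 8 returns `0x6789ABCD`, a word that straddles the alignment boundary.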

© 2025 PatSnap. All rights reserved.