A Data Selective Caching Method Based on Cooperative Caching

A data-selective caching technology, applied in wireless communication, transmission systems, electrical components, etc. It addresses two problems: users do not consider the data already cached by others, and multiple users cache the same hotspot data. The result is efficient use of memory capacity and maximized cellular traffic offloading.

Embodiment Construction

[0039] Preferred embodiments of the present invention are given below and described in detail with reference to the drawings.

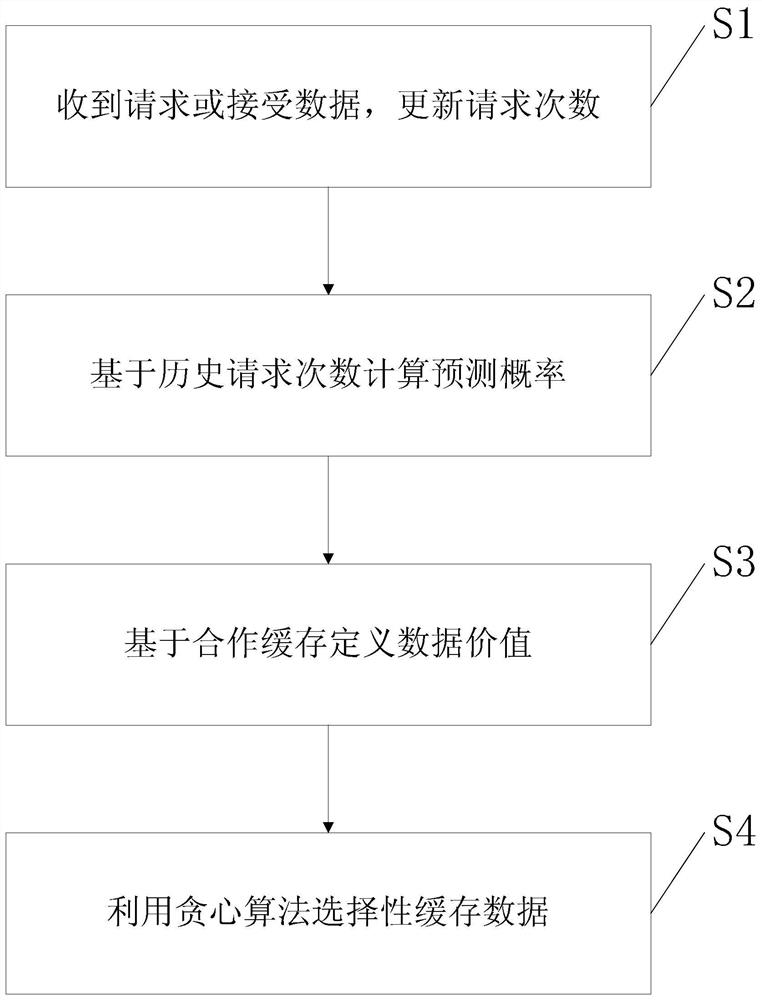

[0040] As shown in Figure 1, the present invention is a data selective caching method based on cooperative caching, comprising the following steps:

[0041] Step S1: whenever the current user receives a request for a data item from an adjacent user, or receives a data item from an adjacent user or a base station, it records and updates the number of requests for that data item;

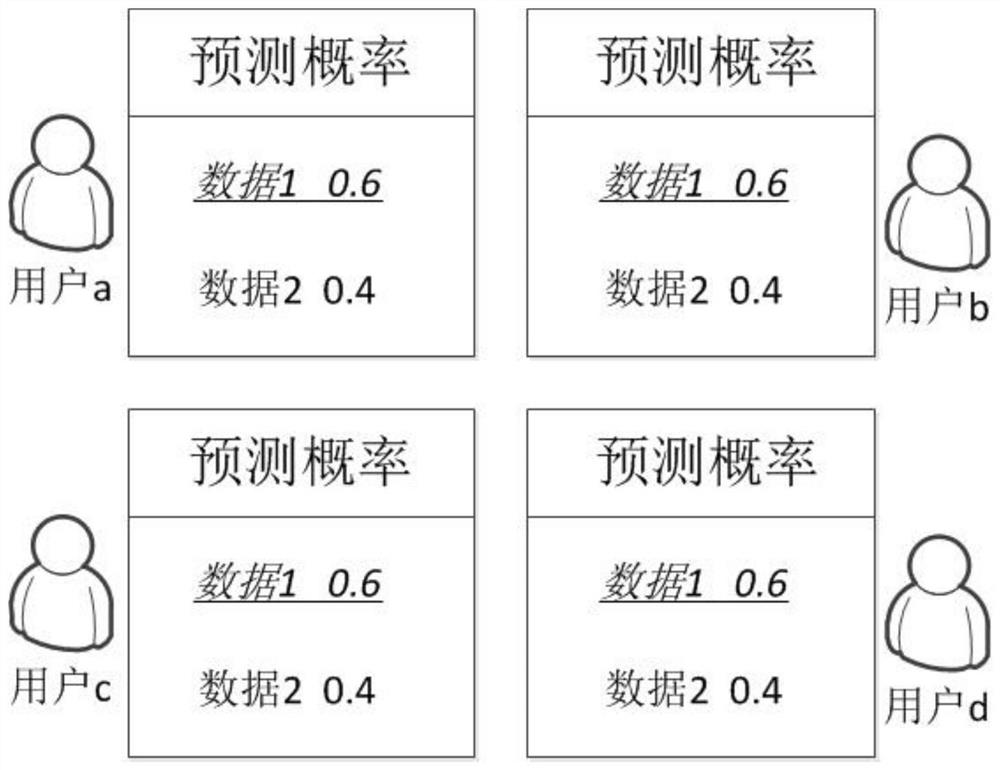

[0042] Step S2: from the request counts recorded in step S1, the current user predicts the probability that each data item will be requested in the future, obtaining a predicted probability for each data item;

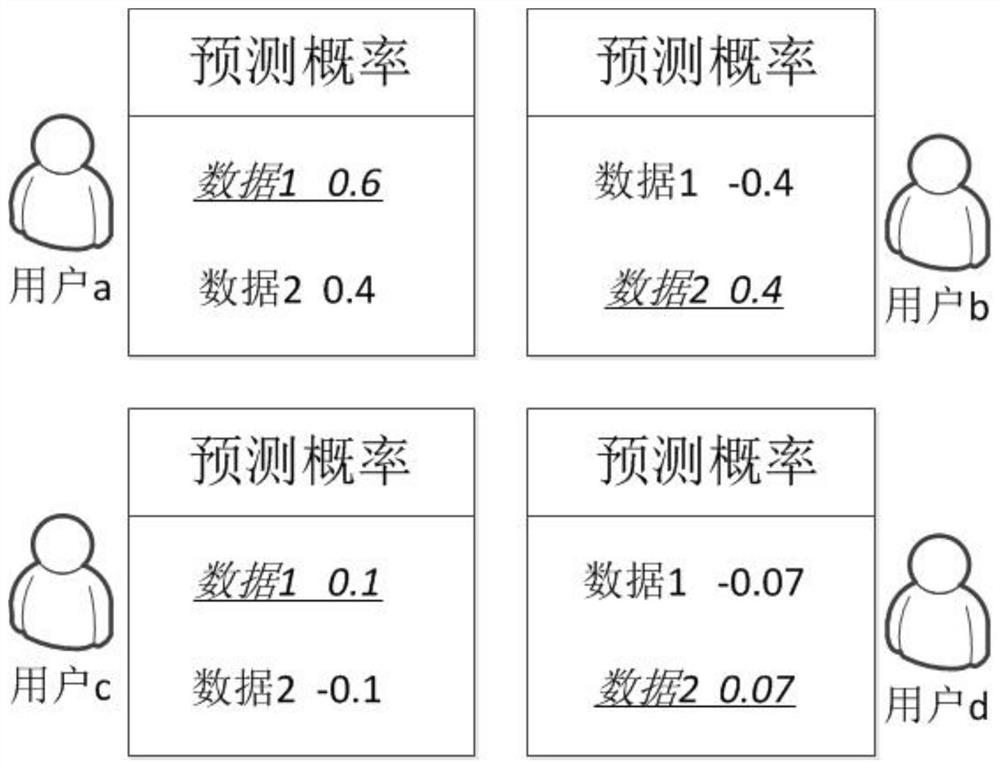

[0043] Step S3: before caching any data item, the current user queries and collects the cache contents of adjacent users, and defines the value of each data item by combining the size of that item with its predicted probability from step S2; a...
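
Steps S1 and S2 above can be illustrated with a minimal Python sketch. The text does not state how counts are incremented or which estimator produces the predicted probability, so two assumptions are made here: each qualifying event adds one to the count, and the predicted probability is the relative request frequency. The names `RequestTracker`, `on_request_from_neighbor`, `on_data_received`, and `predict_probabilities` are hypothetical and do not come from the patent.

```python
from collections import Counter


class RequestTracker:
    """Per-user request counter for step S1 (hypothetical name)."""

    def __init__(self):
        # Maps a data identifier to the number of requests seen so far.
        self.counts = Counter()

    def on_request_from_neighbor(self, data_id):
        # An adjacent user asked the current user for this data item.
        # Assumption: each request adds exactly one to the count.
        self.counts[data_id] += 1

    def on_data_received(self, data_id):
        # The current user received this data item from an adjacent
        # user or a base station. Assumption: this also adds one.
        self.counts[data_id] += 1


def predict_probabilities(counts):
    """Step S2 sketch: predicted probability per data item.

    Assumption: relative frequency is used as the estimator;
    the source does not specify the prediction formula.
    """
    total = sum(counts.values())
    if total == 0:
        return {}
    return {data_id: n / total for data_id, n in counts.items()}


tracker = RequestTracker()
tracker.on_request_from_neighbor("video_a")
tracker.on_request_from_neighbor("video_a")
tracker.on_data_received("video_a")
tracker.on_data_received("map_b")

probabilities = predict_probabilities(tracker.counts)
print(probabilities)
```

Under these assumptions the predicted probabilities sum to one over all observed data items, which makes them directly comparable when step S3 weighs each item's predicted probability against its size.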
