Real-time object three-dimensional reconstruction method based on depth camera

A depth-camera 3D reconstruction technology, applied in the field of 3D imaging, that addresses the problems of high segmentation complexity, the huge data volume produced by depth cameras, and the reduced accuracy of the reconstructed 3D object model.


Embodiment Construction

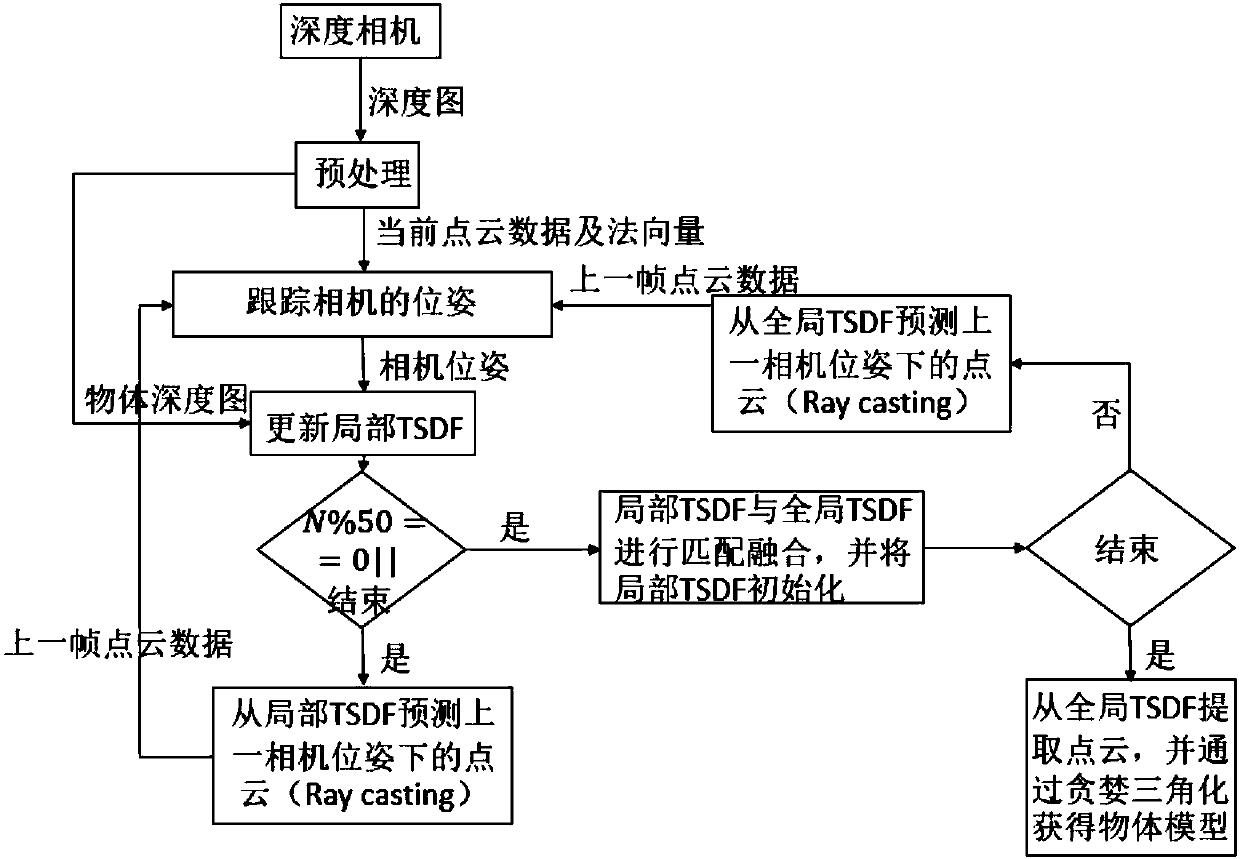

[0058] The overall flow of the object 3D reconstruction algorithm is as follows:

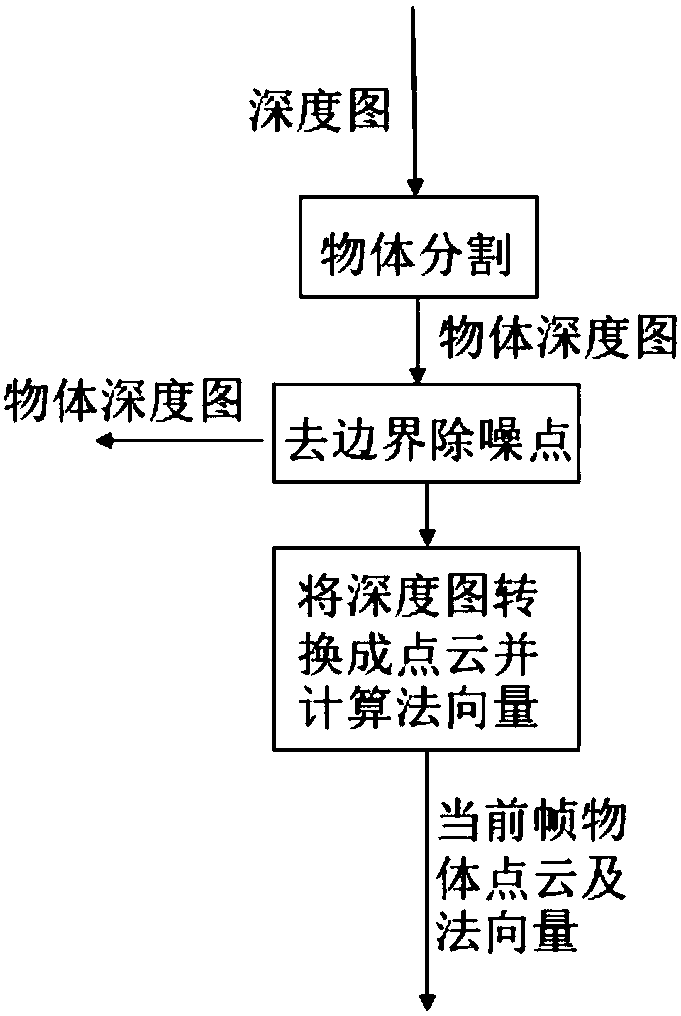

[0059] Step 1. Obtain a depth image from the depth camera. In preprocessing, segment the object, remove boundary and noise points, generate the point cloud, and compute the point-cloud normal vectors with PCA. The result is the object depth image and the object point cloud, with boundary and noise points removed, together with their normal vectors.
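
A minimal Python/NumPy sketch of part of Step 1, assuming a pinhole camera with hypothetical intrinsics `fx`, `fy`, `cx`, `cy` and depth in metres. The depth-range test and the 4-neighbour depth-jump test are illustrative stand-ins for the document's segmentation, boundary-removal, and denoising steps, whose exact rules are not given here. The normals follow the stated PCA approach (the eigenvector of the smallest eigenvalue of each local covariance), using brute-force k-nearest neighbours that only suit small clouds.

```python
import numpy as np

def depth_to_points(depth, fx, fy, cx, cy, depth_min=0.1, depth_max=3.0, edge_jump=0.05):
    """Back-project a depth image (metres) to camera-frame 3D points.

    Hypothetical filtering rules: pixels with depth outside (depth_min, depth_max)
    are treated as background or noise, and pixels whose depth differs from any
    4-neighbour by edge_jump or more are treated as object boundary and dropped.
    """
    valid = (depth > depth_min) & (depth < depth_max)
    # Depth-discontinuity test against the 4-neighbourhood (edges padded with themselves).
    pad = np.pad(depth, 1, mode="edge")
    neighbours = [pad[:-2, 1:-1], pad[2:, 1:-1], pad[1:-1, :-2], pad[1:-1, 2:]]
    jump = np.max([np.abs(depth - n) for n in neighbours], axis=0)
    valid &= jump < edge_jump
    v, u = np.nonzero(valid)
    z = depth[v, u]
    # Pinhole back-projection: x = (u - cx) z / fx, y = (v - cy) z / fy.
    x = (u - cx) * z / fx
    y = (v - cy) * z / fy
    return np.stack([x, y, z], axis=1)

def pca_normals(points, k=8):
    """Estimate per-point normals with PCA over the k nearest neighbours."""
    normals = np.empty_like(points)
    # Brute-force pairwise squared distances: O(N^2) memory, small clouds only.
    d2 = np.sum((points[:, None, :] - points[None, :, :]) ** 2, axis=2)
    nn = np.argsort(d2, axis=1)[:, :k]
    for i, idx in enumerate(nn):
        cov = np.cov(points[idx].T)           # 3x3 covariance of the neighbourhood
        _, eigvecs = np.linalg.eigh(cov)      # eigenvalues in ascending order
        n = eigvecs[:, 0]                     # direction of least variance
        if np.dot(n, points[i]) > 0:          # orient towards the camera at the origin
            n = -n
        normals[i] = n
    return normals
```

On a flat, fronto-parallel depth image every normal comes out as `(0, 0, -1)`, pointing back at the camera.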

[0060] Step 2. Use the centroids of the point clouds of the previous and current frames to obtain an initial value for the camera translation, then use the ICP algorithm to estimate the precise camera pose.
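
The sketch below illustrates Step 2 under stated assumptions. The translation is initialised from the difference between the two point-cloud centroids, with the rotation set to identity. A basic point-to-point ICP with SVD (Kabsch) updates and brute-force nearest neighbours then refines the pose. The ICP variant the method actually uses (for example, point-to-plane with the Step 1 normals) is not specified here, so the point-to-point form and the names `best_rigid_transform` and `icp_with_centroid_init` are hypothetical.

```python
import numpy as np

def best_rigid_transform(src, dst):
    """Least-squares R, t such that R @ src_i + t ≈ dst_i (Kabsch / SVD)."""
    mu_s, mu_d = src.mean(axis=0), dst.mean(axis=0)
    H = (src - mu_s).T @ (dst - mu_d)
    U, _, Vt = np.linalg.svd(H)
    # Guard against a reflection (det = -1) in the SVD solution.
    D = np.diag([1.0, 1.0, np.sign(np.linalg.det(Vt.T @ U.T))])
    R = Vt.T @ D @ U.T
    t = mu_d - R @ mu_s
    return R, t

def icp_with_centroid_init(src, dst, iters=30, tol=1e-10):
    """Align src (current frame) to dst (previous frame).

    Initial guess: identity rotation, translation = centroid(dst) - centroid(src).
    Each iteration matches every transformed src point to its nearest dst point,
    then re-solves the full rigid transform from src to the matched points.
    """
    R = np.eye(3)
    t = dst.mean(axis=0) - src.mean(axis=0)
    prev_err = np.inf
    for _ in range(iters):
        moved = src @ R.T + t
        d2 = np.sum((moved[:, None, :] - dst[None, :, :]) ** 2, axis=2)
        nn = np.argmin(d2, axis=1)
        err = np.mean(d2[np.arange(len(src)), nn])   # mean squared match distance
        R, t = best_rigid_transform(src, dst[nn])
        if abs(prev_err - err) < tol:                 # stop once the error stalls
            break
        prev_err = err
    return R, t
```

The centroid initialisation removes most of the inter-frame translation before matching, which makes the nearest-neighbour correspondences in the first ICP iteration far more likely to be correct when the camera moves mostly by translation between frames.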

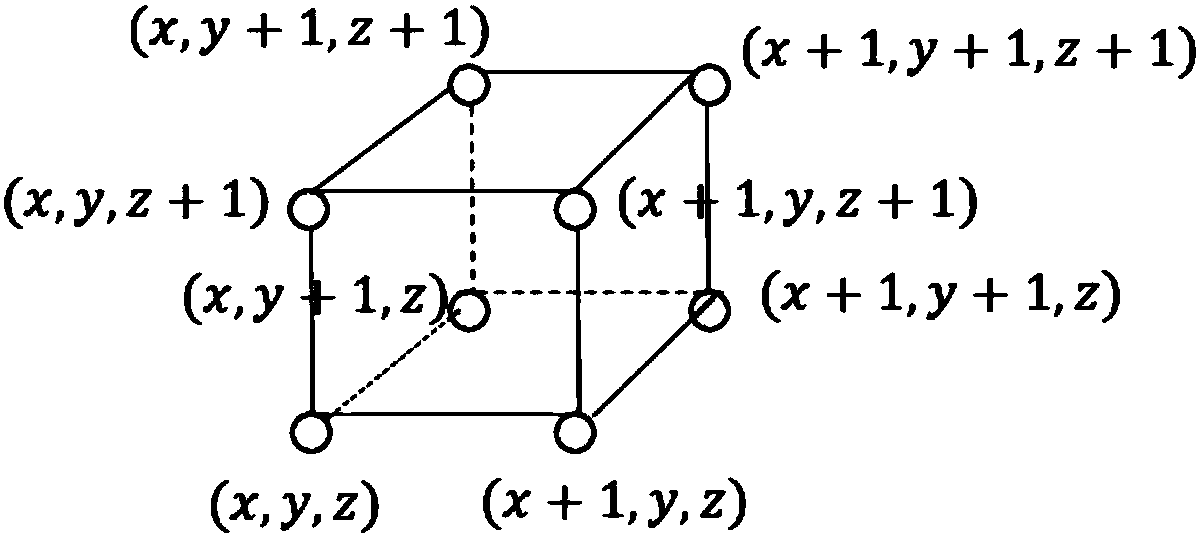

[0061] Step 3. Fuse the current frame's data into a local TSDF using the estimated precise camera pose.
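
A minimal sketch of Step 3, assuming a dense voxel grid, the standard projective TSDF update (a running weighted average with unit weight per frame), nearest-pixel lookup, and a pose `(R, t)` that maps camera coordinates into the local volume's coordinates. The class name `LocalTSDF`, the grid layout, and the truncation distance `trunc` are hypothetical; the document does not state these details.

```python
import numpy as np

class LocalTSDF:
    """Dense TSDF volume stored as flat arrays (hypothetical layout)."""

    def __init__(self, dims, voxel_size, origin, trunc):
        self.dims = tuple(dims)
        self.trunc = float(trunc)
        n = int(np.prod(self.dims))
        self.tsdf = np.ones(n)       # truncated signed distance, initialised to +1
        self.weight = np.zeros(n)    # accumulated fusion weight per voxel
        # Coordinates of every voxel centre, shape (n, 3), in C order.
        idx = np.indices(self.dims).reshape(3, -1).T
        self.centres = np.asarray(origin, dtype=float) + (idx + 0.5) * voxel_size

    def integrate(self, depth, fx, fy, cx, cy, R, t):
        """Fuse one depth frame; (R, t) maps camera to volume coordinates."""
        h, w = depth.shape
        pc = (self.centres - t) @ R          # volume -> camera: R^T (p - t)
        z = pc[:, 2]
        safe_z = np.where(z > 0, z, 1.0)     # avoid dividing by non-positive depth
        u = np.round(pc[:, 0] * fx / safe_z + cx).astype(int)
        v = np.round(pc[:, 1] * fy / safe_z + cy).astype(int)
        ok = (z > 0) & (u >= 0) & (u < w) & (v >= 0) & (v < h)
        d = np.zeros_like(z)
        d[ok] = depth[v[ok], u[ok]]
        sdf = d - z                          # positive in front of the surface
        ok &= (d > 0) & (sdf > -self.trunc)  # skip voxels far behind the surface
        f = np.clip(sdf / self.trunc, -1.0, 1.0)
        wt = self.weight[ok]
        self.tsdf[ok] = (self.tsdf[ok] * wt + f[ok]) / (wt + 1.0)
        self.weight[ok] = wt + 1.0
```

The zero crossing of `tsdf` between neighbouring voxels marks the fused surface; the running average lets repeated frames smooth out per-frame depth noise.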

[0062] Step 4. Determine whether there is an end instruction. The end instruction comprises the program end instruction and the instruction issued when the number of frames required for local TSDF fusion reaches the predetermined...
