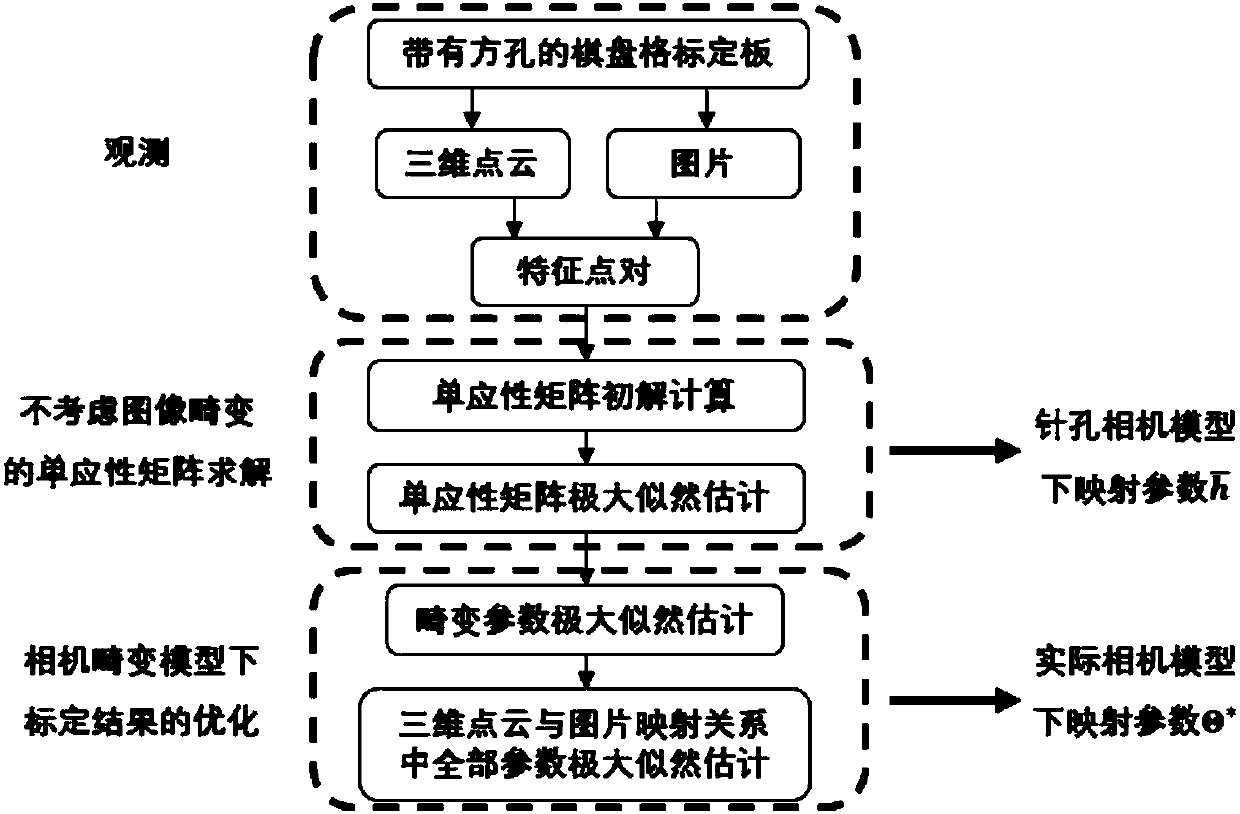

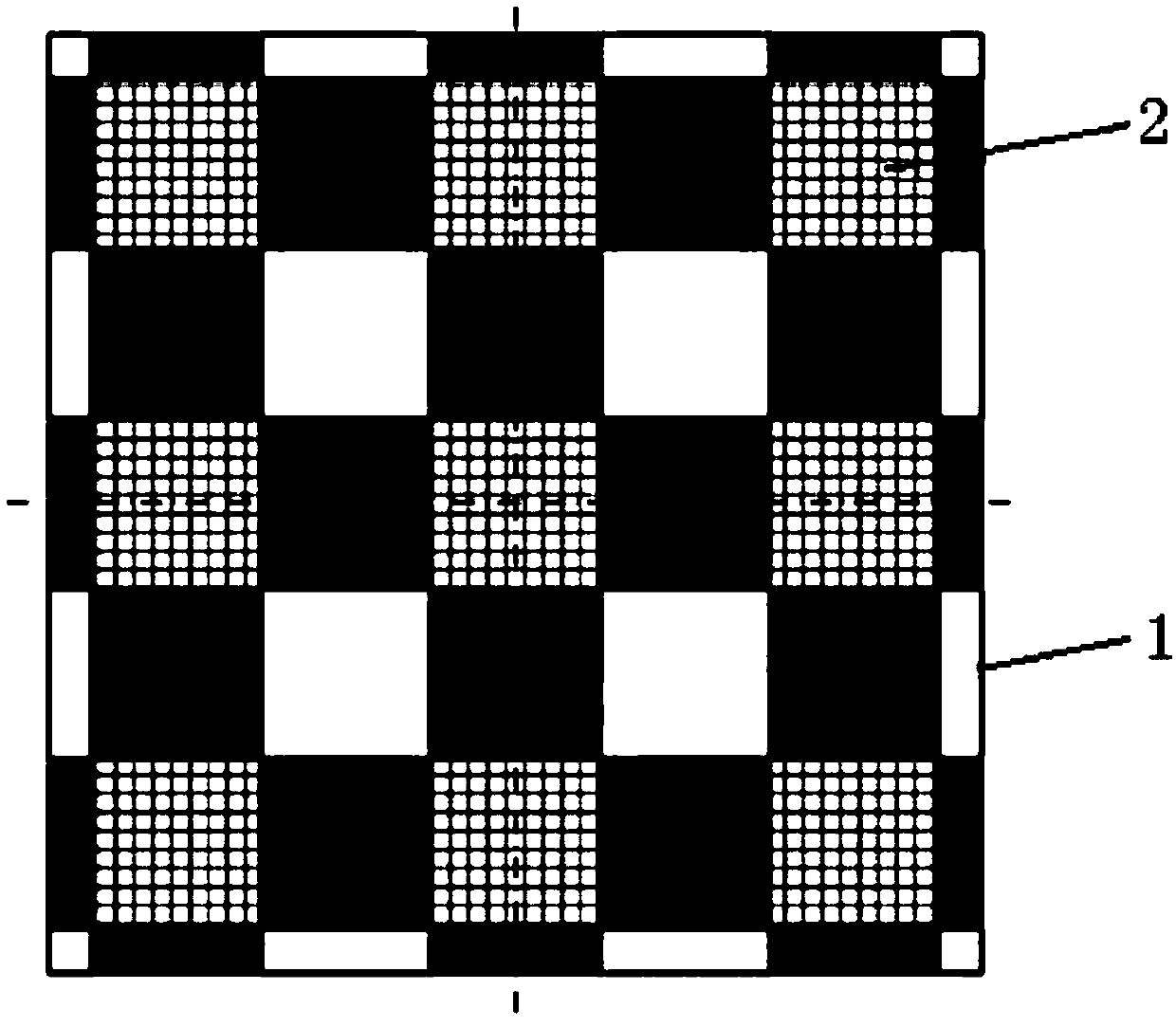

Fast and accurate method for calibrating the mapping relationship between a laser point cloud and a visual image

The invention concerns visual-image and mapping-relationship technology, applied in the field of environment perception for intelligent connected vehicles. It addresses three problems: a cumbersome calibration process, the difficulty of globally optimizing that process, and the accumulation of error between 3D point cloud points and image pixels. Its effects are a simplified calibration process, fewer calibration steps, and high mapping accuracy.

Embodiment Construction

[0047] The present invention will be described in detail below in conjunction with the accompanying drawings and embodiments. However, it should be understood that the accompanying drawings are provided only for better understanding of the present invention, and they should not be construed as limiting the present invention.

[0048] Suppose there is a spatial point $x_{world}$ in the world coordinate system. In the lidar coordinate system it is the three-dimensional point $x_{lidar} = (x_l, y_l, z_l)^T$; its coordinates in the camera coordinate system are $x_{camera} = (x_c, y_c, z_c)^T$, and when projected by the camera it becomes the two-dimensional point $u_{camera} = (u, v)^T$ in the pixel coordinate system. Calibration means establishing the correspondence between $x_{lidar}$ and $u_{camera}$; that is, given the representation $x_{lidar}$ of a spatial point in the lidar coordinate system, the corresponding $u$ of the point can be...
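The chain of coordinate systems in [0048] can be illustrated with the conventional pinhole camera model. This is a minimal sketch of that textbook formulation, not the calibration method of the invention, whose details are not shown in this excerpt. The function name `lidar_to_pixel`, the rotation `rotation`, the translation `translation`, and the intrinsic matrix `intrinsics` are all assumptions introduced for illustration.

```python
from typing import List, Sequence, Tuple

Matrix = Sequence[Sequence[float]]


def mat_vec(m: Matrix, v: Sequence[float]) -> List[float]:
    """Multiply a matrix (given as a sequence of rows) by a vector."""
    return [sum(a * b for a, b in zip(row, v)) for row in m]


def lidar_to_pixel(
    x_lidar: Sequence[float],
    rotation: Matrix,              # hypothetical 3x3 lidar-to-camera rotation
    translation: Sequence[float],  # hypothetical lidar-to-camera translation
    intrinsics: Matrix,            # hypothetical 3x3 camera intrinsic matrix
) -> Tuple[float, float]:
    """Map x_lidar = (x_l, y_l, z_l) to u_camera = (u, v) with a pinhole model."""
    # Step 1: lidar frame -> camera frame, x_camera = R * x_lidar + t.
    rotated = mat_vec(rotation, x_lidar)
    x_c, y_c, z_c = (r + t for r, t in zip(rotated, translation))
    if z_c <= 0:
        raise ValueError("point lies behind the camera and cannot be projected")
    # Step 2: camera frame -> pixel frame via perspective division.
    h = mat_vec(intrinsics, [x_c, y_c, z_c])
    return (h[0] / h[2], h[1] / h[2])


# Illustrative values only: identity rotation, zero translation, and a
# 500-pixel focal length with the principal point at (320, 240).
R = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
t = [0.0, 0.0, 0.0]
K = [[500.0, 0.0, 320.0], [0.0, 500.0, 240.0], [0.0, 0.0, 1.0]]
u, v = lidar_to_pixel([1.0, 0.5, 10.0], R, t, K)
```

In a real sensor setup the lidar and camera axes generally differ, so the rotation would not be the identity; the values above are chosen only to keep the arithmetic easy to follow.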
