Video image sequence segmentation system and method

A video image sequence technology, applied in character and pattern recognition, instruments, biological neural network models, etc. It addresses problems such as the difficulty of operating and setting up a 2D camera, difficult equipment construction and setup, and a lengthy calculation process, and achieves easy operation and setup as well as easy self-testing.

Embodiment Construction

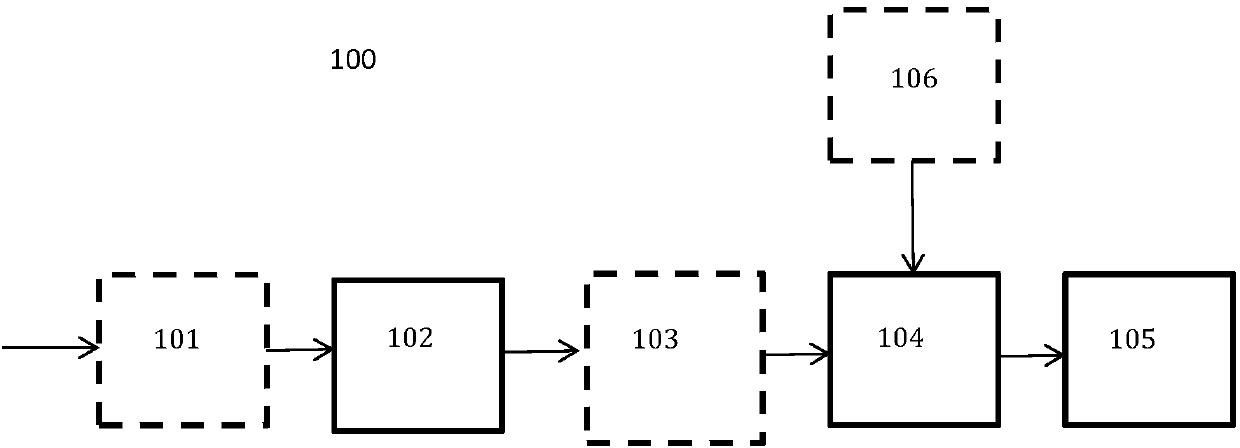

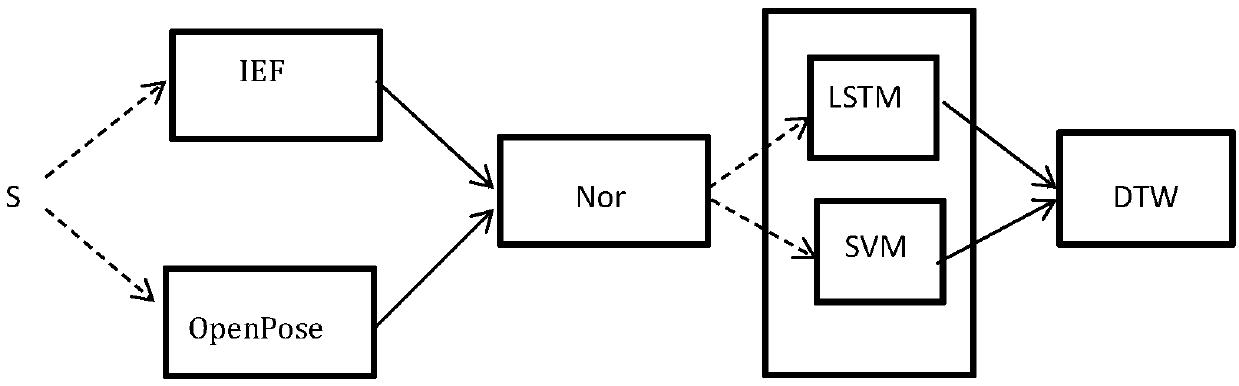

[0031] A specific implementation of the present invention is described below with reference to the TUG (Timed Up and Go) test. Those skilled in the art should understand that this is not limiting: the video image sequence segmentation system and method of the present invention can be used to segment video images according to the different motion states of an object in any scene, especially during the various tests performed to monitor a subject's mobility.
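A minimal sketch of the segmentation idea, assuming every video frame has already been assigned a motion-state label (how those labels are produced, for example by a neural network, is not described here and is not shown). Consecutive frames sharing a label are merged into one segment. The function name `segment_by_state` and the label strings are hypothetical illustrations, not taken from the patent.

```python
from itertools import groupby
from typing import List, Tuple

# (state label, first frame index, last frame index inclusive)
Segment = Tuple[str, int, int]


def segment_by_state(frame_labels: List[str]) -> List[Segment]:
    """Group consecutive frames with the same motion-state label into segments.

    Hypothetical helper: assumes per-frame labels are already available.
    """
    segments: List[Segment] = []
    start = 0
    for label, run in groupby(frame_labels):
        length = sum(1 for _ in run)  # number of consecutive frames with this label
        segments.append((label, start, start + length - 1))
        start += length
    return segments


if __name__ == "__main__":
    labels = ["sit", "sit", "stand_up", "walk", "walk"]
    print(segment_by_state(labels))
```

Per-frame labels from a real classifier may flicker between states for a frame or two, which would produce spurious short segments; a smoothing step (such as a sliding majority filter) before grouping would likely be needed, but is not shown.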

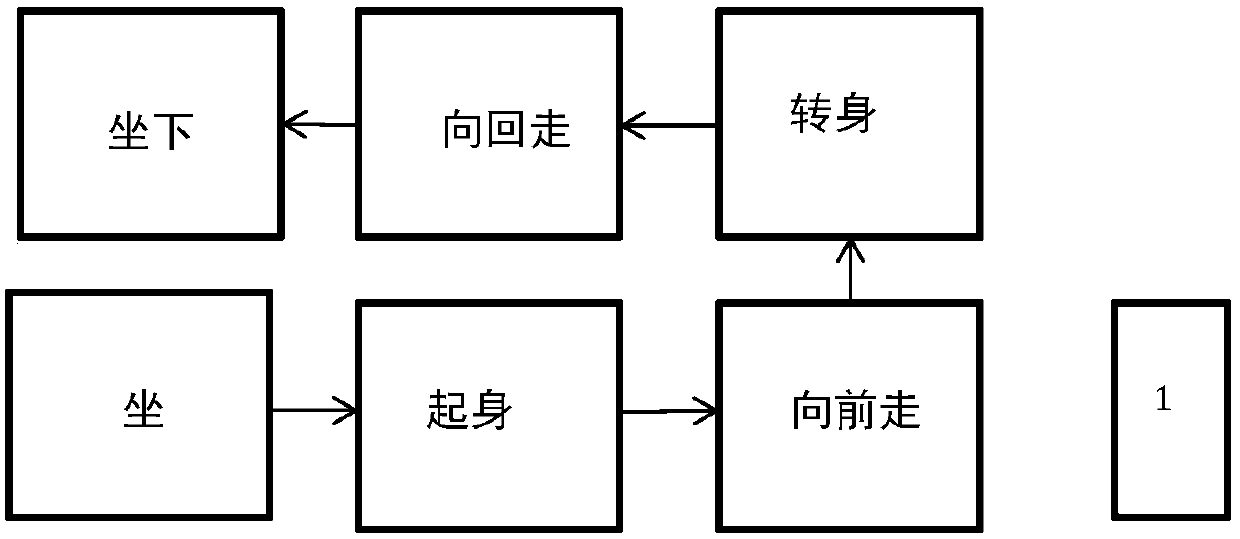

[0032] Figure 1 shows the motion state transitions of a subject during a typical TUG test. Throughout the test, a camera 1 is placed directly in front of the subject to capture video images of the subject for the entire test. At the start of the test, the subject sits on a chair and then, as instructed, performs the actions of sitting, standing up, walking forward, turning around, walking back and sitting down in sequence. Each action corresponds to a different motion state...
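Because the TUG actions occur in a fixed order, a segmented video can be checked against that order and each phase timed. The sketch below assumes the segments are given as `(state, first_frame, last_frame)` tuples and that the camera frame rate is known; the state names in `EXPECTED_ORDER` and the function `phase_durations` are hypothetical labels for the actions listed above, not the patent's own method.

```python
from typing import Dict, List, Tuple

# Hypothetical labels for the TUG actions, in the order the subject performs them.
EXPECTED_ORDER = ["sit", "stand_up", "walk_forward", "turn", "walk_back", "sit_down"]

# (state label, first frame index, last frame index inclusive)
Segment = Tuple[str, int, int]


def phase_durations(segments: List[Segment], fps: float) -> Dict[str, float]:
    """Return the duration in seconds of each TUG phase.

    Raises ValueError if the segment states do not follow EXPECTED_ORDER.
    """
    states = [state for state, _, _ in segments]
    if states != EXPECTED_ORDER:
        raise ValueError(f"unexpected state sequence: {states}")
    # Frame ranges are inclusive, so a segment spans (last - first + 1) frames.
    return {state: (last - first + 1) / fps for state, first, last in segments}


if __name__ == "__main__":
    segs = [("sit", 0, 9), ("stand_up", 10, 19), ("walk_forward", 20, 49),
            ("turn", 50, 59), ("walk_back", 60, 89), ("sit_down", 90, 99)]
    print(phase_durations(segs, fps=10.0))
```

Requiring an exact match with the expected order is deliberately strict; a practical system would probably need to tolerate missed or merged phases.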
