Surveillance video object detection method based on spatio-temporal information and deep networks

The method applies deep networks and surveillance video technology to image data processing, instruments, and character and pattern recognition. It addresses the problem that existing detection speed and performance cannot meet growing demand.


Embodiment Construction

[0067] Specific embodiments of the present invention are described in further detail below, with reference to the accompanying drawings and examples. The following embodiments illustrate the present invention but do not limit its scope.

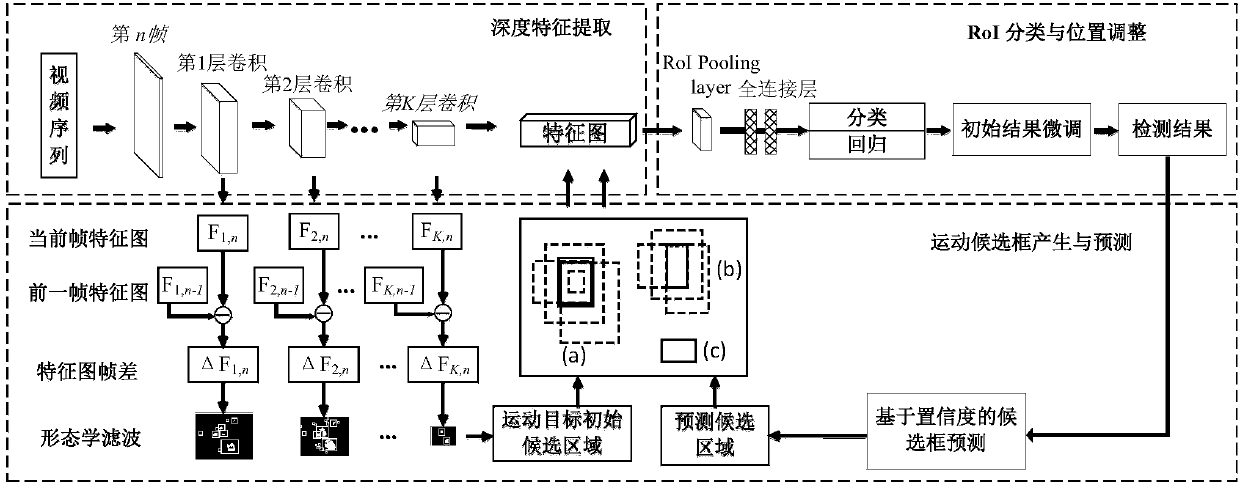

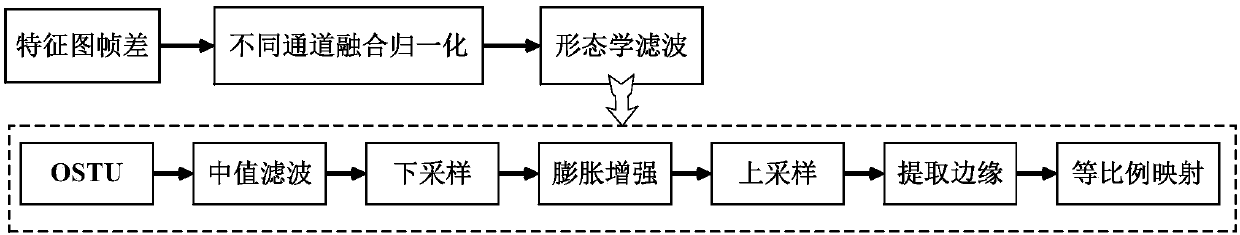

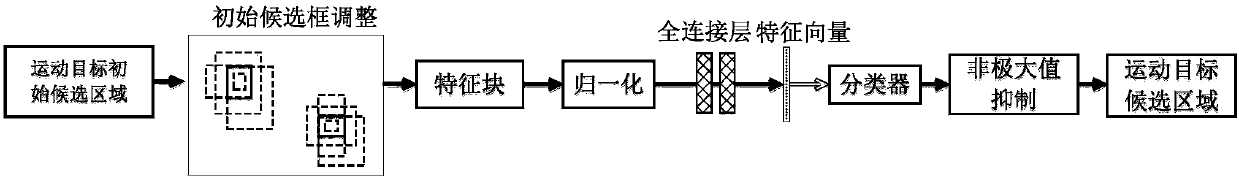

[0068] As shown in Figure 1, the surveillance video object detection method of this embodiment is based on spatio-temporal information and deep networks. It comprises three parts: deep feature extraction; generation of moving-object candidate boxes and predicted candidate boxes; and RoI classification and position adjustment. The present invention can use different deep neural networks to extract multi-scale deep features. In this example, VGG16 and PVANET are used. VGG16 has 13 convolutional layers and 5 max-pooling layers, and the outputs of all 13 convolutional layers serve as the input to the moving-object candidate region generation part. Simila...
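The paragraph above relies on two standard building blocks, sketched here for illustration only. This is not the patent's own code.

The first helper, `conv_feature_shapes`, lists the spatial size and stride of each of VGG16's 13 convolutional outputs. This shows why using all 13 outputs yields features at several scales. It assumes the standard VGG16 layout: 3×3 convolutions with padding 1, and 2×2 max pooling with stride 2.

The second helper, `apply_box_deltas`, is a hypothetical illustration of "position adjustment". The excerpt does not say how RoIs are refined, so it assumes the common Faster R-CNN-style (dx, dy, dw, dh) box-regression parameterization.

```python
import math
from typing import List, Tuple, Union

# Standard VGG16 layout: integers are 3x3 conv layers (output channels),
# "M" marks a 2x2 max-pooling layer with stride 2. 13 convs, 5 pools.
VGG16_CFG: List[Union[int, str]] = [
    64, 64, "M",
    128, 128, "M",
    256, 256, 256, "M",
    512, 512, 512, "M",
    512, 512, 512, "M",
]


def conv_feature_shapes(height: int, width: int) -> List[Tuple[str, int, int, int, int]]:
    """Return (name, channels, height, width, stride) for each of the 13 conv outputs."""
    shapes = []
    stride = 1
    block, idx = 1, 0
    for layer in VGG16_CFG:
        if layer == "M":
            # 2x2 max pooling with stride 2 halves the spatial size (floor division).
            height, width = height // 2, width // 2
            stride *= 2
            block, idx = block + 1, 0
        else:
            # A 3x3 conv with padding 1 and stride 1 keeps the spatial size unchanged.
            idx += 1
            shapes.append((f"conv{block}_{idx}", layer, height, width, stride))
    return shapes


def apply_box_deltas(box: Tuple[float, float, float, float],
                     deltas: Tuple[float, float, float, float]) -> Tuple[float, float, float, float]:
    """Refine an (x1, y1, x2, y2) box with (dx, dy, dw, dh) offsets (assumed parameterization)."""
    x1, y1, x2, y2 = box
    dx, dy, dw, dh = deltas
    w, h = x2 - x1, y2 - y1
    cx, cy = x1 + 0.5 * w, y1 + 0.5 * h
    # Shift the centre in proportion to box size; rescale width and height in log space.
    new_cx, new_cy = cx + dx * w, cy + dy * h
    new_w, new_h = w * math.exp(dw), h * math.exp(dh)
    return (new_cx - 0.5 * new_w, new_cy - 0.5 * new_h,
            new_cx + 0.5 * new_w, new_cy + 0.5 * new_h)


if __name__ == "__main__":
    for name, c, h, w, s in conv_feature_shapes(224, 224):
        print(f"{name}: {c} x {h} x {w} (stride {s})")
    print(apply_box_deltas((0, 0, 10, 10), (0.1, 0.0, 0.0, 0.0)))
```

For a 224×224 input, `conv1_1` keeps the full resolution at stride 1, while `conv5_3` comes out at 14×14 with stride 16. A candidate-generation stage that sees all 13 outputs therefore has both fine and coarse features available.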
