Self-encoding document representation method using random walk

A random walk and self-encoding (autoencoder) technology, applied in special data processing applications, natural language data processing, instruments, etc., that can solve the severe problem of high-dimensional sparsity.


Embodiment Construction

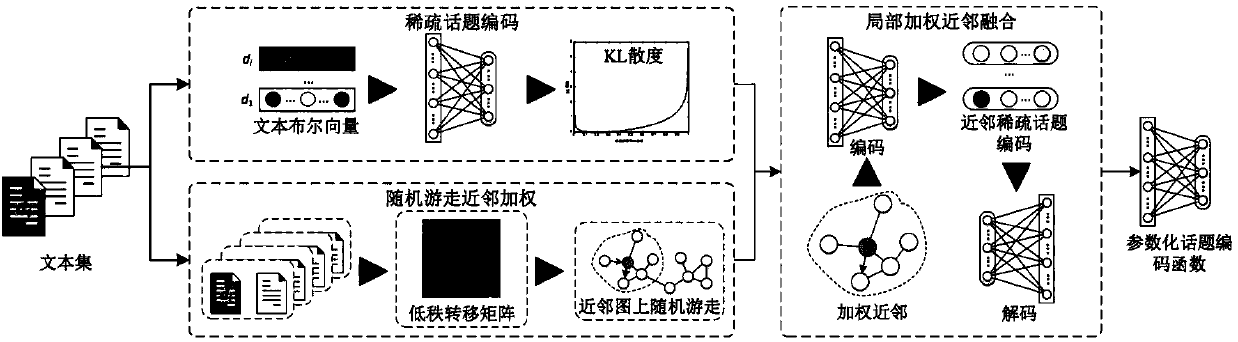

[0030] To better illustrate the purpose and advantages of the present invention, the implementation of the method is described in further detail below with reference to an example.

[0031] The specific process is as follows:

[0032] Step 1, perform sparse topic encoding on the text set.

[0033] Step 1.1, given the Boolean vector $X^{(i)}$ of text $i$, the posterior probability $p(t_i \mid X)$ can be generated by an encoding network composed of a nonlinear sigmoid function, in the form of formula (1).

[0034] $$p(t_i \mid X) \leftarrow f_{\theta}(X) = \sigma(WX + b) \tag{1}$$
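Formula (1) is a single sigmoid layer, so a minimal NumPy sketch of the encoder is short. The function name `encode`, the shapes (W as K×V for K topics and a V-word vocabulary, b of length K) and the toy sizes are assumptions for illustration, not taken from the patent.

```python
import numpy as np


def sigmoid(z: np.ndarray) -> np.ndarray:
    """Element-wise logistic function sigma(z) = 1 / (1 + exp(-z))."""
    return 1.0 / (1.0 + np.exp(-z))


def encode(x: np.ndarray, W: np.ndarray, b: np.ndarray) -> np.ndarray:
    """Hypothetical encoder for formula (1): f_theta(X) = sigma(W X + b).

    x: Boolean word-occurrence vector of one text, shape (V,)
    W: encoder weights, shape (K, V)  (assumed: K topics, V vocabulary words)
    b: encoder bias, shape (K,)
    Returns K values in (0, 1), read as the posteriors p(t_i | X).
    """
    return sigmoid(W @ x.astype(float) + b)


# Hypothetical toy sizes: a 6-word vocabulary and 3 topics.
rng = np.random.default_rng(0)
V, K = 6, 3
W = rng.normal(scale=0.1, size=(K, V))
b = np.zeros(K)
x = np.array([1, 0, 1, 1, 0, 0], dtype=bool)

y = encode(x, W, b)
print(y.shape)  # (3,)
```

Each output entry is squashed into (0, 1) by the sigmoid, which is what lets it be read as a probability.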

[0035] Step 1.2, given the topic code $Y^{(i)}$ of a text, the posterior probability $p(w_j \mid Y)$ that word $w_j$ occurs in the word distribution $Z^{(i)}$ can be generated by a decoding network composed of a nonlinear sigmoid function, in the form of formula (2).

[0036] $$p(w_j \mid Y) \leftarrow g_{\theta'}(Y) = \sigma(W^{T} Y + c) \tag{2}$$
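Formula (2) reuses the encoder's weight matrix transposed ($W^{T}$), i.e. tied weights. A minimal NumPy sketch under assumed shapes (W as K×V, c of length V); the name `decode` and the hand-picked toy weights are hypothetical.

```python
import numpy as np


def sigmoid(z: np.ndarray) -> np.ndarray:
    """Element-wise logistic function."""
    return 1.0 / (1.0 + np.exp(-z))


def decode(y: np.ndarray, W: np.ndarray, c: np.ndarray) -> np.ndarray:
    """Hypothetical decoder for formula (2): g_theta'(Y) = sigma(W^T Y + c).

    y: topic code, shape (K,)
    W: the same (K, V) matrix as the encoder, used transposed (tied weights)
    c: decoder bias, shape (V,)
    Returns V values in (0, 1), read as the posteriors p(w_j | Y).
    """
    return sigmoid(W.T @ y + c)


# Hypothetical toy weights: 2 topics over a 4-word vocabulary.
W = np.array([[2.0, -2.0, 0.0, 0.0],
              [0.0, 0.0, 3.0, -3.0]])
c = np.zeros(4)
y = np.array([1.0, 0.0])  # topic code that activates only topic 0

z_hat = decode(y, W, c)
```

Because only topic 0 is active, word 0 gets a probability above 0.5, word 1 below it, and words 2 and 3 (weighted only by topic 1) stay at exactly 0.5.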

[0037] Step 1.3, use the Bernoulli cross entropy shown in formula (3) to measure the difference between the real wo...
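Formula (3) itself is cut off in this excerpt. Assuming it takes the standard Bernoulli cross-entropy form, $-\sum_j [z_j \log \hat z_j + (1 - z_j)\log(1 - \hat z_j)]$, between a Boolean word vector $z$ and decoder outputs $\hat z$, a minimal sketch follows; the name `bernoulli_cross_entropy` and the clipping constant `eps` are hypothetical additions.

```python
import numpy as np


def bernoulli_cross_entropy(z, z_hat, eps=1e-12):
    """Standard Bernoulli cross-entropy (assumed form of formula (3)).

    z:     observed Boolean word vector, shape (V,)
    z_hat: predicted word probabilities from the decoder, shape (V,)
    eps:   hypothetical clipping constant that keeps log() finite
    """
    z = np.asarray(z, dtype=float)
    z_hat = np.clip(np.asarray(z_hat, dtype=float), eps, 1.0 - eps)
    return float(-np.sum(z * np.log(z_hat) + (1.0 - z) * np.log(1.0 - z_hat)))


z = np.array([1, 0, 1, 0])
good = np.array([0.9, 0.1, 0.8, 0.2])  # close to z
bad = np.array([0.1, 0.9, 0.2, 0.8])   # far from z
print(bernoulli_cross_entropy(z, good) < bernoulli_cross_entropy(z, bad))  # True
```

The loss is small when the predicted probabilities agree with the observed words and grows quickly as they diverge.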

© 2025 PatSnap. All rights reserved.