Light and shadow effect display method and device for augmented reality (AR), and electronic equipment

A technology relating to augmented reality and display methods, applied in 3D image processing, details related to processing steps, image data processing, and similar fields. It addresses the problems that a single lighting effect increases the lighting design workload and cannot provide users with a sufficient virtual experience, and it achieves the effect of enhancing the virtual experience and improving the display effect.

Examples

Embodiment 1

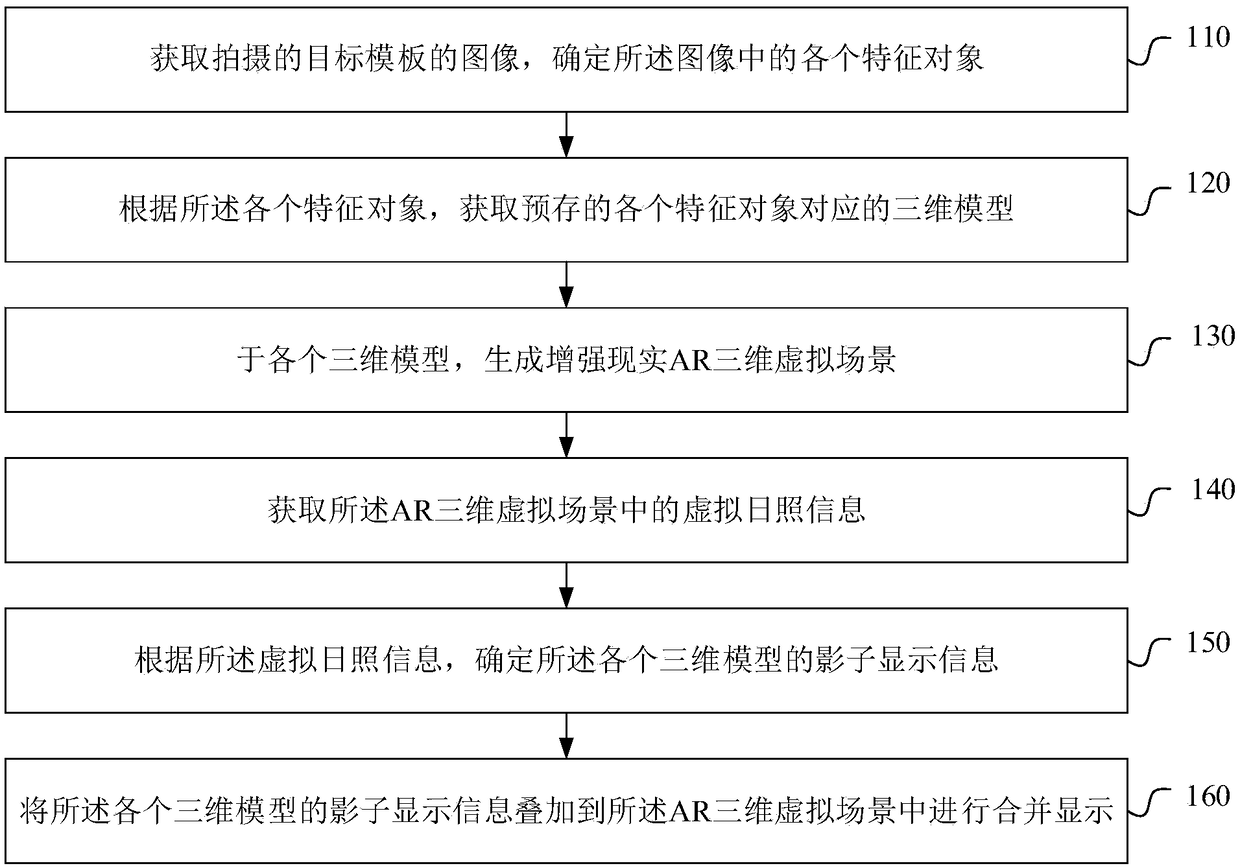

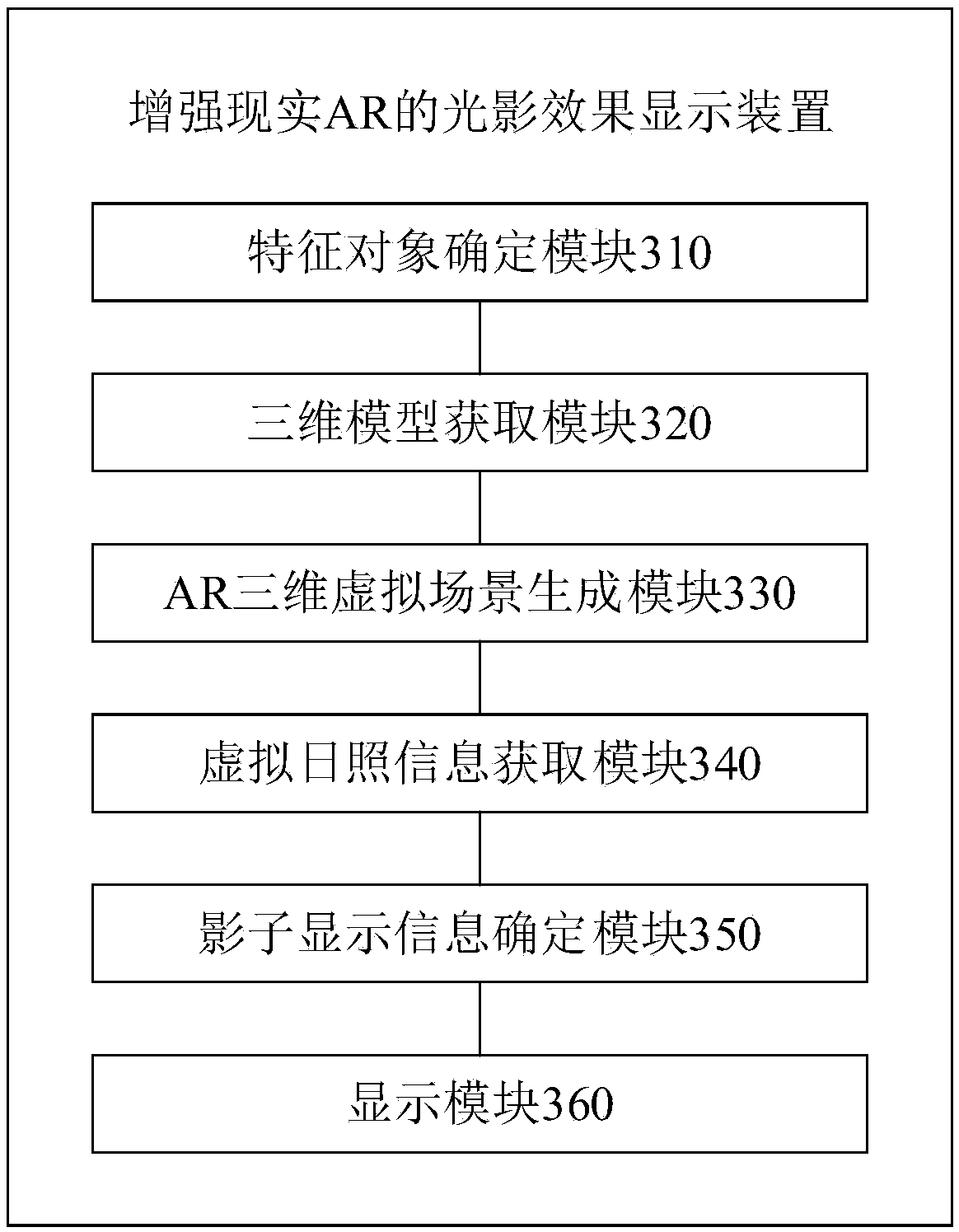

[0078] Embodiment 1 of the present invention provides a light and shadow effect display method for augmented reality (AR). As shown in Figure 1, the method includes:

[0079] Step 110: Acquire the captured image of the target template, and determine each characteristic object in the image.

[0080] Here, the target template is any real-world scene object that needs to be fused with AR content. For example, if Embodiment 1 of the present invention provides users with virtual scenes for commercial tours, tourism, exhibitions, municipal construction planning, audio-visual presentations, and the like, the target template simulates a comparable environment, such as building models, real estate models, military terrain sand-table models, and industrial models. In practical applications, the target template can be a planar sand-table plan, which saves the cost of making the target template and makes it easier to extend to more virtual scenes.

[0081] Further, determinin...

Embodiment 2

[0127] In another possible implementation of the present invention, Embodiment 2 adds the following operations on the basis of Embodiment 1:

[0128] Before step 130, the method further includes: acquiring a preset 3D dynamic model in the AR 3D virtual scene.

[0129] Embodiment 2 of the present invention applies to the case in the above example where an independent database is established for all the three-dimensional models required by a virtual scene. After the corresponding database is determined according to each characteristic object, the 3D dynamic model of the corresponding virtual scene can be determined.

[0130] Similarly, the relevant 3D dynamic model can be queried from the database in real time according to what the user is shooting and displayed in real time, or all the 3D dynamic models can be obtained, loaded into memory at once, and then displayed according to what the user is shooting.
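The two loading strategies in paragraph [0130] can be illustrated with a minimal Python sketch. Everything named here (`MODEL_DB`, `OnDemandModels`, `PreloadedModels`, `models_for_frame`) is a hypothetical stand-in chosen for illustration and does not come from the patent; a plain dictionary plays the role of the per-scene database.

```python
from typing import Dict, Iterable, List

# Hypothetical stand-in for the per-scene model database: keys are
# characteristic-object names, values are placeholder model payloads.
MODEL_DB: Dict[str, str] = {
    "tower": "tower_model",
    "bridge": "bridge_model",
    "park": "park_model",
}


class OnDemandModels:
    """Query the database only when a characteristic object is first seen."""

    def __init__(self, db: Dict[str, str]) -> None:
        self._db = db
        self._cache: Dict[str, str] = {}
        self.queries = 0  # number of database lookups actually performed

    def get(self, name: str) -> str:
        if name not in self._cache:
            self.queries += 1
            self._cache[name] = self._db[name]
        return self._cache[name]


class PreloadedModels:
    """Copy every model into memory once, then serve all requests from it."""

    def __init__(self, db: Dict[str, str]) -> None:
        self._models = dict(db)

    def get(self, name: str) -> str:
        return self._models[name]


def models_for_frame(store, detected: Iterable[str]) -> List[str]:
    """Return the models to display for the objects detected in one frame."""
    return [store.get(name) for name in detected]
```

Under these assumptions, the on-demand store trades a lookup delay on first sight of an object for lower memory use, while the preloaded store pays the full loading cost up front and answers every later frame from memory.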

[0131] Wh...

Embodiment 3

[0141] In another possible implementation of the present invention, Embodiment 3 adds the following operations on the basis of Embodiment 1 and Embodiment 2:

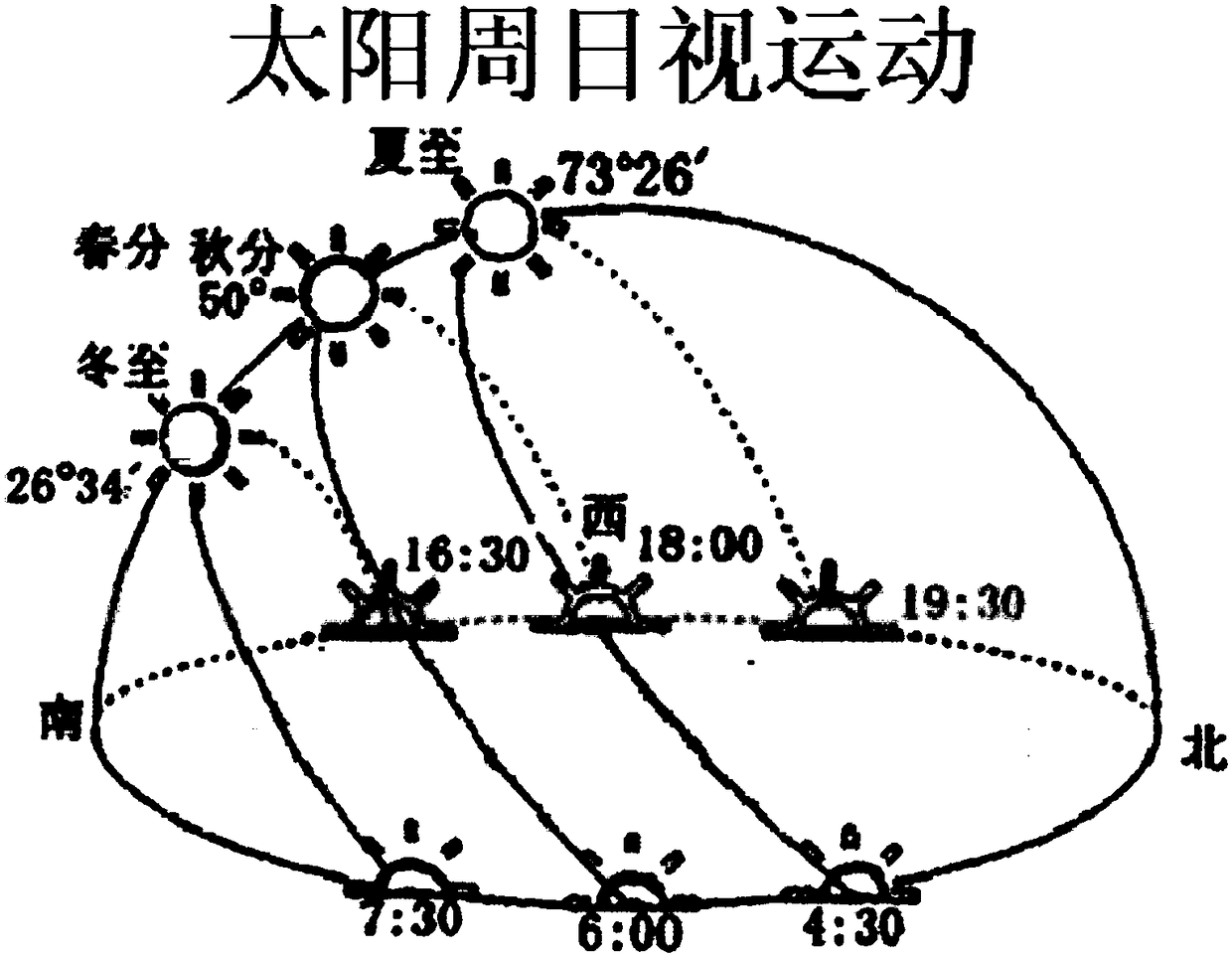

[0142] After step 140, the method further includes: determining the light intensity information and/or hue information in the AR three-dimensional virtual scene according to the virtual sunshine information; and superimposing the light intensity information and/or hue information on the AR three-dimensional virtual scene for combined display.
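One simple way to picture the superimposition step is as a per-color operation: multiply a base color by a hue tint and scale the result by a light intensity. The sketch below is only an assumption about how such blending could look; the function name `apply_light`, the 0 to 1 intensity range, and the multiplicative tint are illustrative choices, not the method the patent specifies.

```python
from typing import Tuple

RGB = Tuple[int, int, int]


def apply_light(color: RGB, intensity: float, tint: RGB) -> RGB:
    """Tint a base color and scale it by a light intensity.

    Assumptions (not from the patent): intensity is clamped to [0, 1],
    and the tint is applied multiplicatively per channel.
    """
    intensity = max(0.0, min(1.0, intensity))
    r, g, b = (
        min(255, round(c * (t / 255.0) * intensity))
        for c, t in zip(color, tint)
    )
    return (r, g, b)
```

With a white tint and full intensity the color is unchanged; halving the intensity halves every channel, and a warm tint suppresses the blue channel.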

[0143] Specifically, based on how the spectrum of the virtual light changes through atmospheric refraction at different times, the corresponding hue information is matched in the database, and the hue of the AR three-dimensional virtual scene is adjusted accordingly.
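Matching hue information to time information can be sketched as a lookup in a time-indexed table. The table contents, the names `HUE_TABLE` and `hue_for_hour`, and the rule of using the latest entry at or before the requested hour are all assumptions made for illustration; the patent only states that the hue is matched in a database.

```python
import bisect
from typing import List, Tuple

RGB = Tuple[int, int, int]

# Hypothetical table of (hour of day, RGB tint), sorted by hour.
# The values are illustrative, not taken from the patent.
HUE_TABLE: List[Tuple[int, RGB]] = [
    (0, (40, 50, 90)),      # night: cool blue
    (6, (255, 180, 120)),   # sunrise: warm orange
    (12, (255, 255, 245)),  # midday: near white
    (18, (255, 140, 80)),   # sunset: deep orange
]
HOURS = [hour for hour, _ in HUE_TABLE]


def hue_for_hour(hour: float) -> RGB:
    """Return the tint of the latest table entry at or before `hour`."""
    idx = bisect.bisect_right(HOURS, hour % 24) - 1
    return HUE_TABLE[idx][1]
```

A real system might interpolate between neighboring entries to avoid visible jumps; the step lookup is used here only to keep the sketch short.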

[0144] According to the change of light intensity corresponding to different time information, the corresponding light intensity information is matched in the database, and the light...
