Method for estimating severity of dysarthria based on deep audio features

A method for estimating the severity of dysarthria from deep audio features. It addresses three problems: diagnostic methods that are invasive, instruments that are expensive, and patients with dysarthria not receiving timely treatment.

Embodiment

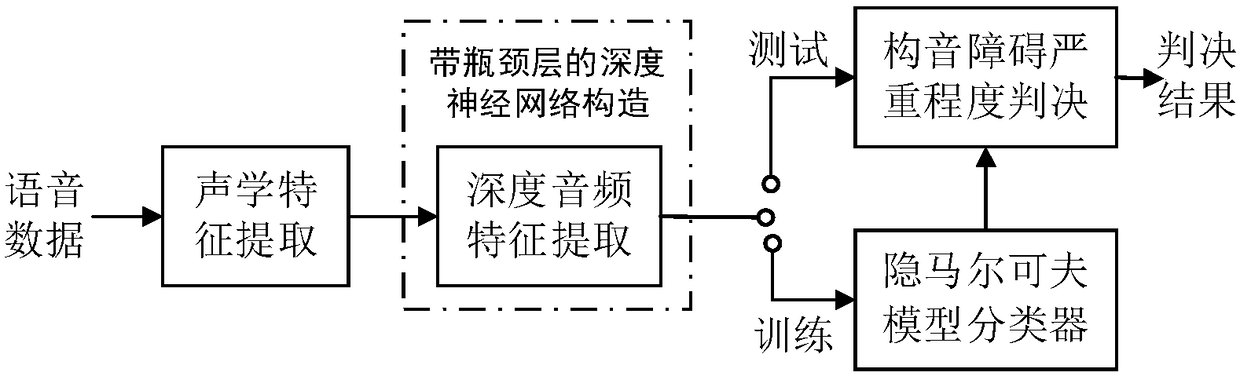

[0075] As shown in Figure 1, a method for estimating the severity of dysarthria based on deep audio features comprises the following steps:

[0076] S1. Preprocess the speech data and extract acoustic features. The acoustic features comprise linear prediction coefficients, fundamental frequency, fundamental frequency perturbation (jitter), amplitude, amplitude perturbation (shimmer), zero-crossing rate, and formants. This yields the speech data feature matrix F = [linear prediction coefficients, fundamental frequency, fundamental frequency perturbation, amplitude, amplitude perturbation, zero-crossing rate, formants].

[0077] Preferably, the acoustic feature extraction in step S1 specifically includes the following steps:

[0078] S1.1. Pre-emphasis: the input speech is filtered with a digital filter whose transfer function is $H(z) = 1 - \alpha z^{-1}$, where $\alpha$ is a constant coefficient with a value range of [0.9, 1];

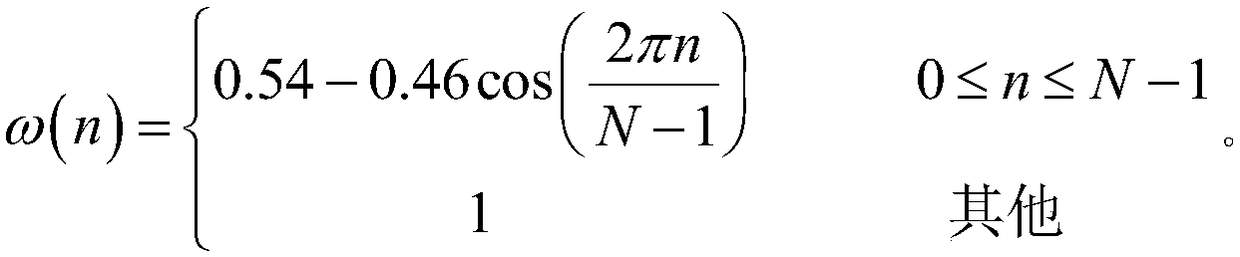

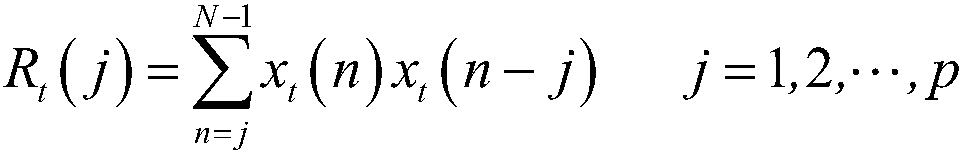
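The pre-emphasis filter of step S1.1 corresponds in the time domain to y[n] = x[n] - α·x[n-1]. The following is a minimal sketch of that step, not the patent's own implementation. The function name `pre_emphasis`, the default `alpha=0.97` (a value chosen here from the stated range [0.9, 1]), and the handling of the first sample are all assumptions made for illustration.

```python
import numpy as np


def pre_emphasis(signal, alpha=0.97):
    """Apply the first-order filter H(z) = 1 - alpha * z^-1.

    Hypothetical helper: alpha=0.97 is an assumed default taken from
    the range [0.9, 1] given in the text, not a value from the patent.
    """
    x = np.asarray(signal, dtype=float)
    if x.size == 0:
        return x
    # y[n] = x[n] - alpha * x[n-1]; the first sample has no predecessor,
    # so it is passed through unchanged (assumes x[-1] = 0).
    return np.concatenate(([x[0]], x[1:] - alpha * x[:-1]))


if __name__ == "__main__":
    # A constant signal is strongly attenuated after the first sample,
    # illustrating how the filter suppresses low-frequency content.
    print(pre_emphasis([1.0, 1.0, 1.0, 1.0], alpha=0.9))
```

A value of α closer to 1 suppresses low-frequency content more strongly, so in practice the coefficient would be tuned on the speech data at hand.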

[0079] S1.2. Framing: Divide the pre-empha...
