Compression method and device of deep neural network model, storage medium and terminal

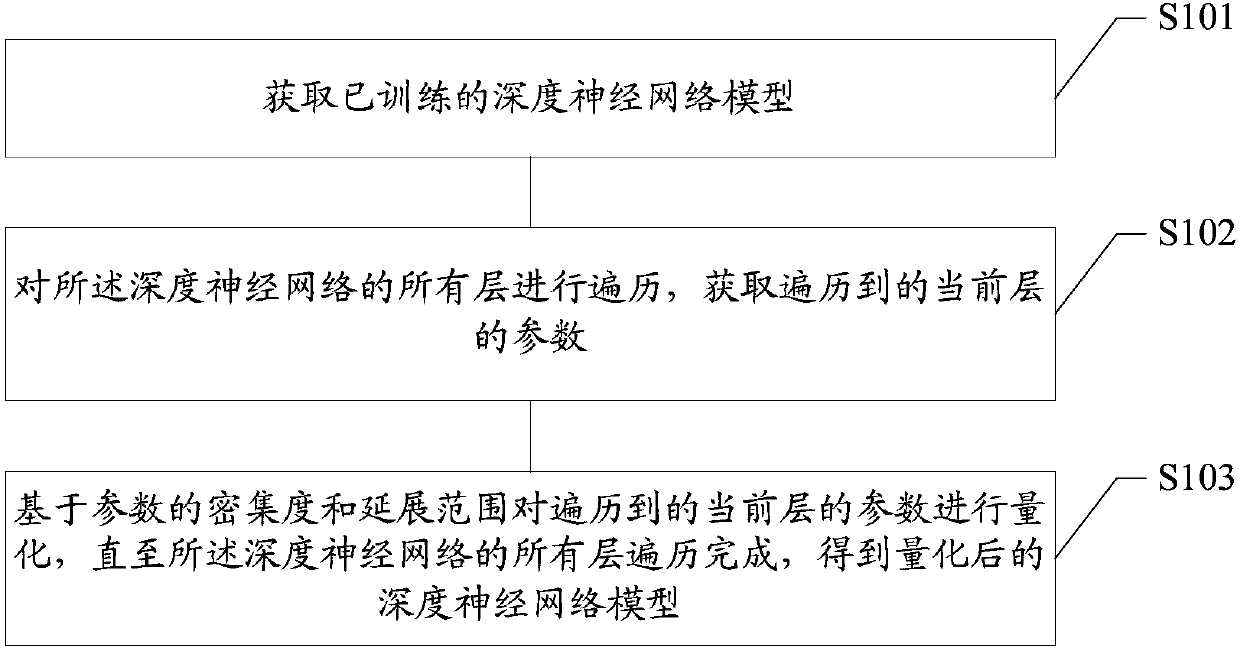

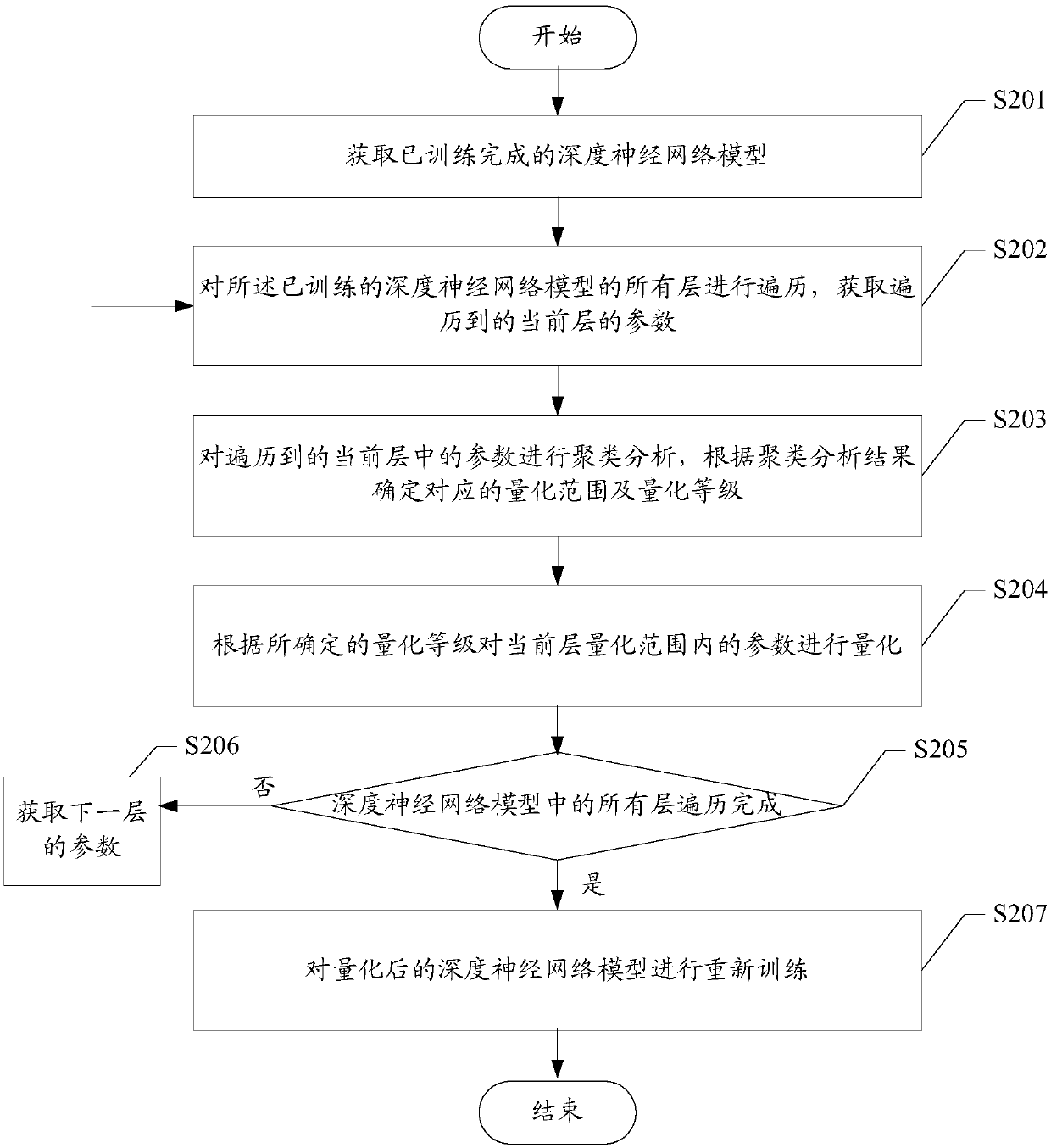

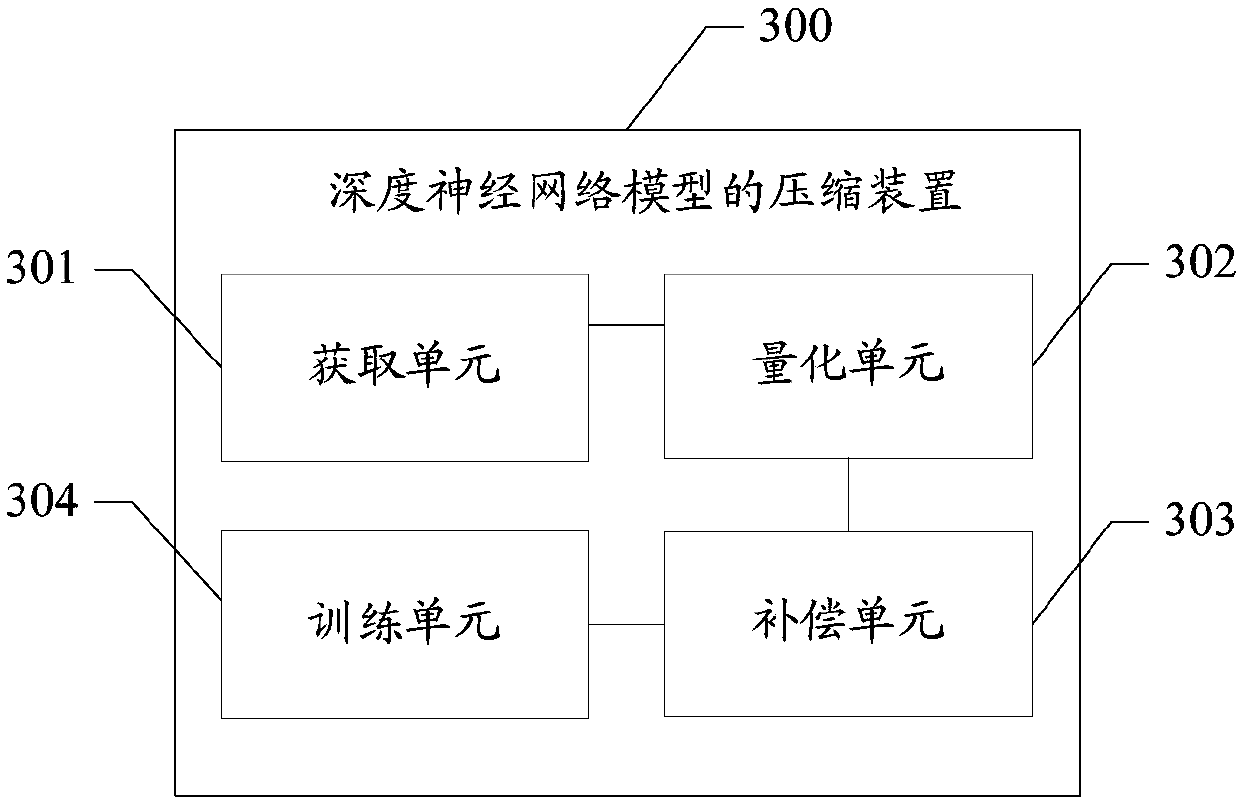

A compression method for deep neural network models, applied in the fields of terminals, storage media, and compression methods and devices for deep neural network models. It addresses the low precision and effectiveness of compressed deep neural network models, reducing precision loss and improving performance.

Embodiment Construction

[0025] As described in the background art, current simplification and compression methods for deep neural network models are mainly divided into two categories: methods for changing the density of the deep neural network model and methods for changing the diversity of parameters of the deep neural network model.

[0026] Methods that change the density of the deep neural network model achieve compression by changing the sparseness of the neural network. Some algorithms use a relatively small, given threshold to delete small-valued parameters from the deep neural network model. This choice is highly subjective, and neural networks with different structures require extensive parameter tuning to obtain the ideal simplification effect. Other algorithms screen input nodes based on the contribution relationship between input nodes and output responses. Such algorithms only target single-hidden layer neural networks and do not sufficiently proce...
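
The threshold-based deletion described in [0026] can be illustrated with a minimal sketch. This is not the method of the present application; it only shows the prior-art idea of zeroing small-valued parameters, and why the result depends heavily on the chosen threshold. The function names `prune_by_threshold` and `sparsity`, the example weight matrix, and the threshold values are all assumptions made for illustration.

```python
def prune_by_threshold(weights, threshold):
    """Return a copy of `weights` (a list of rows) in which every parameter
    whose absolute value is below `threshold` is replaced by 0.0.
    Hypothetical helper, for illustration only."""
    return [[w if abs(w) >= threshold else 0.0 for w in row] for row in weights]


def sparsity(weights):
    """Fraction of parameters that are exactly zero."""
    flat = [w for row in weights for w in row]
    return sum(1 for w in flat if w == 0.0) / len(flat)


# Made-up weights of a single small layer (assumed values).
layer = [
    [0.80, -0.02, 0.30],
    [0.01, -0.50, 0.04],
]

# The achieved sparsity changes with the threshold, which is why choosing it
# by hand is subjective and must be re-tuned for each network structure.
for threshold in (0.03, 0.05, 0.40):
    pruned = prune_by_threshold(layer, threshold)
    print(threshold, sparsity(pruned))
```

In this sketch a larger threshold removes more parameters, but nothing in the procedure indicates which threshold preserves the model's precision; that has to be found by trial for each network.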
