An Optimization Method for Depth Image Restoration and Viewpoint Synthesis Based on Color Image Guidance

A viewpoint synthesis optimization method in the field of image processing. It addresses the limited application scope, poor noise robustness, and inability to repair inconsistent region values of existing approaches, and it avoids boundary blur while offering strong noise robustness and a stable model.



Embodiment Construction

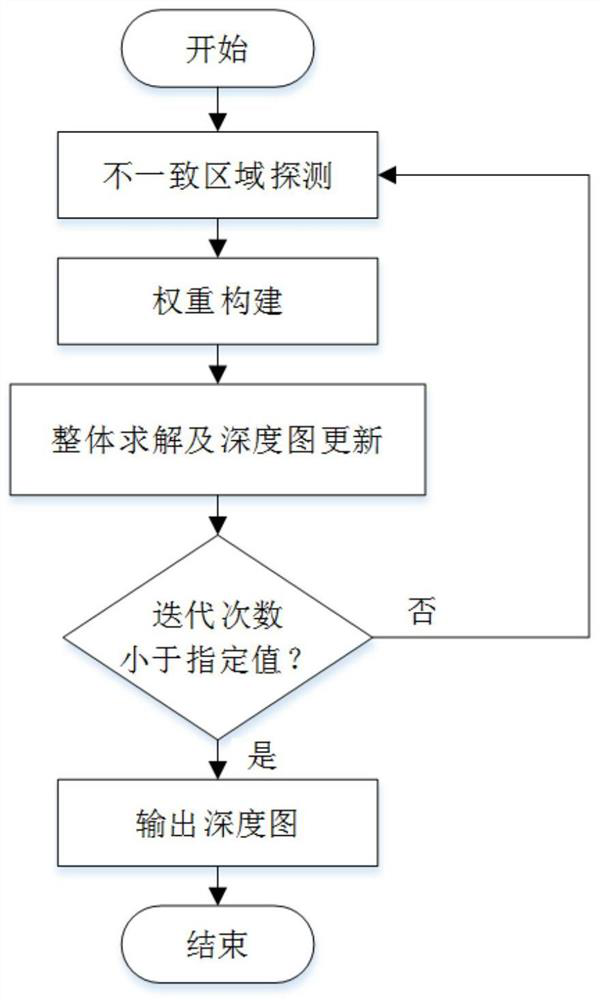

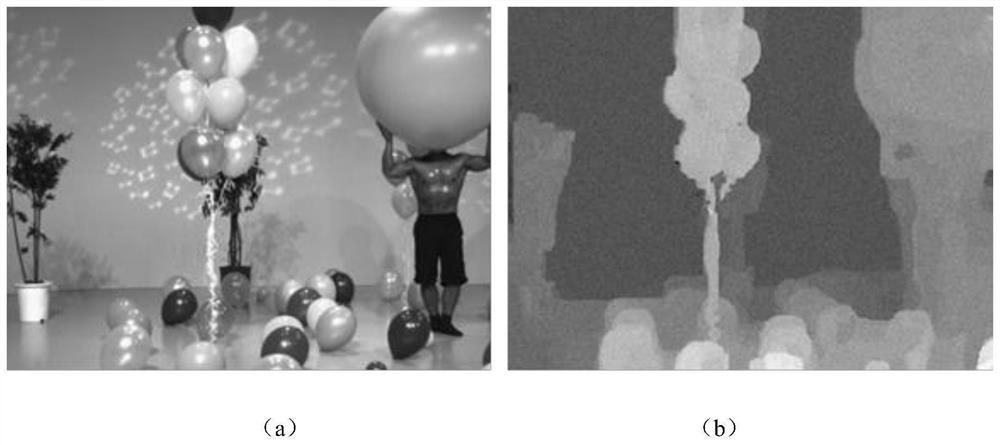

[0044] The present invention provides a color-image-guided optimization method for depth map restoration and viewpoint synthesis. The method detects the edges of the input depth map, dilates them, and marks the dilated edges as potentially inconsistent regions. It then constructs weights using the iteratively reweighted least squares (IRLS) algorithm, solves the resulting global problem, and updates the depth map. If the set number of iterations has been reached, the depth map is output and the computation ends; otherwise, the inconsistent regions are detected again. The method removes a large amount of noise while reducing blurring at image edges, and it repairs inconsistencies between the depth map and the color image. The improved consistency between the two in turn improves the quality of view synthesis.
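The loop described in [0044] can be sketched roughly as follows. This is a minimal illustration under stated assumptions, not the patented formulation: the excerpt does not give the energy function, so the sketch assumes a gradient-threshold edge detector, a reduced data confidence inside the dilated edge band, a Charbonnier data penalty for the IRLS weights, Gaussian color-affinity smoothness weights, and a Jacobi solver in place of a direct global solve. All function names and parameters (`restore_depth`, `suspect_conf`, `sigma_c`, and so on) are hypothetical.

```python
import numpy as np

def neighbors(a):
    """Four edge-replicated neighbour views (up, down, left, right) of a 2-D array."""
    p = np.pad(a, 1, mode="edge")
    return [p[:-2, 1:-1], p[2:, 1:-1], p[1:-1, :-2], p[1:-1, 2:]]

def suspect_region(depth, edge_thresh, radius):
    """Detect depth edges by gradient magnitude, then dilate them `radius` times."""
    gy, gx = np.gradient(depth)
    mask = np.hypot(gx, gy) > edge_thresh
    for _ in range(radius):
        for n in neighbors(mask):  # 4-connected dilation step
            mask = mask | n
    return mask

def restore_depth(depth, gray, n_outer=3, n_inner=200, lam=1.0, sigma_c=0.1,
                  edge_thresh=0.1, radius=2, suspect_conf=1e-3, eps=0.1):
    """Assumed energy: sum_p c_p (u_p - d_p)^2 + lam * sum_pq w_pq (u_p - u_q)^2."""
    d0 = np.asarray(depth, dtype=float)
    gray = np.asarray(gray, dtype=float)
    u = d0.copy()
    # Colour-guided smoothness weights (assumed Gaussian): near zero across colour edges.
    w = [np.exp(-(gray - g) ** 2 / (2 * sigma_c ** 2)) for g in neighbors(gray)]
    w_sum = sum(w)
    for _ in range(n_outer):
        # Re-detect the potentially inconsistent band on the current estimate.
        suspect = suspect_region(u, edge_thresh, radius)
        conf = np.where(suspect, suspect_conf, 1.0)
        # IRLS data weight for a Charbonnier penalty, proportional to 1/sqrt(r^2 + eps^2).
        c = conf / np.sqrt((u - d0) ** 2 + eps ** 2)
        # Jacobi sweeps stand in for the global solve (assumption).
        for _ in range(n_inner):
            smooth = sum(wk * nk for wk, nk in zip(w, neighbors(u)))
            u = (c * d0 + lam * smooth) / (c + lam * w_sum)
    return u

# Toy input: the colour edge sits at column 8, the depth edge is misplaced at column 10.
gray = np.zeros((8, 16))
gray[:, 8:] = 1.0
depth = np.ones((8, 16))
depth[:, 10:] = 2.0
restored = restore_depth(depth, gray)
```

In the toy input, columns 8 and 9 carry the wrong depth value. Because they fall inside the dilated edge band, their data term is down-weighted and the color-guided smoothness term pulls them toward the depth on their own side of the color edge. A production implementation would more likely assemble a sparse linear system and solve it directly.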

[0045] See Figure 1. The concrete steps of the ...
