Pedestrian local feature big data hybrid extraction method

A hybrid extraction technique for local pedestrian features, applied in the field of traffic monitoring, which addresses the local limitations of detection, low accuracy, and the difficulty of pedestrian detection.

Embodiment Construction

[0064] The present invention will be further described below in conjunction with the accompanying drawings and embodiments.

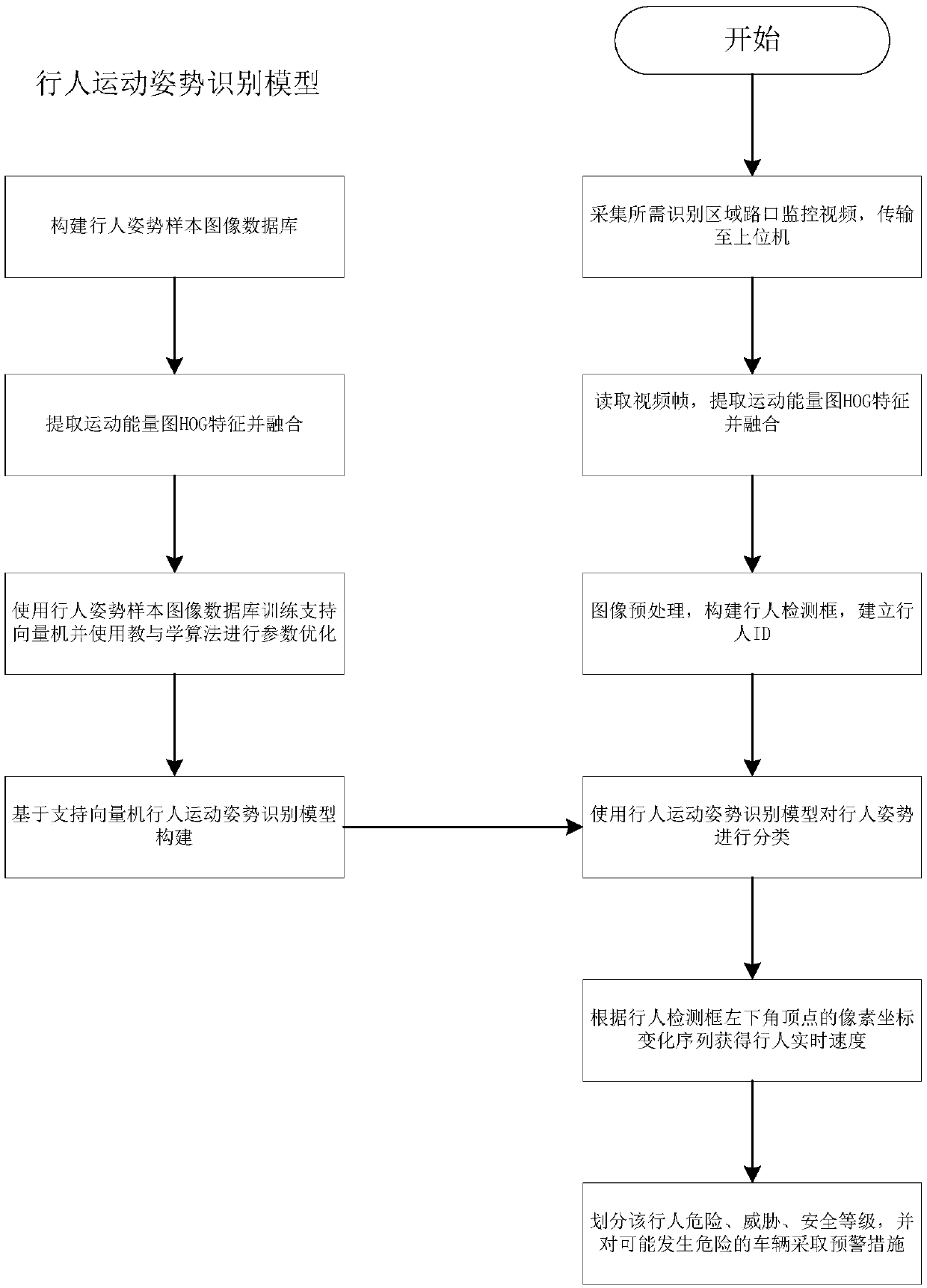

[0065] As shown in Figure 1, a pedestrian local feature big data hybrid extraction method includes the following steps:

[0066] Step 1: Build a pedestrian motion database;

[0067] Collect videos of pedestrians in various motion postures and road positions for each shooting direction of the depth camera [...] three directions; the postures include walking, running and standing;

[0068] Step 2: Extract image frames from the videos in the pedestrian motion database, preprocess them to obtain the pedestrian detection frame (bounding box) in each image, and then extract the detection frame images of the same pedestrian across consecutive frames;
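
One possible way to collect the detection frames of the same pedestrian across consecutive images is greedy matching by bounding-box overlap. The sketch below is only an illustration: the text does not say how detections are associated, so the intersection-over-union (IoU) criterion, the 0.3 threshold, and the names `iou` and `link_tracks` are all assumptions.

```python
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2) with x1 < x2, y1 < y2
Track = List[Tuple[int, Box]]            # list of (frame_index, box)


def iou(a: Box, b: Box) -> float:
    """Intersection-over-union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def link_tracks(frames: List[List[Box]], min_iou: float = 0.3) -> List[Track]:
    """Greedily chain boxes across consecutive frames (assumed criterion: IoU).

    A track is extended only by a box from the immediately following frame;
    any box left unmatched starts a new track.
    """
    tracks: List[Track] = []
    for t, boxes in enumerate(frames):
        unused = list(boxes)
        for track in tracks:
            last_t, last_box = track[-1]
            if last_t != t - 1 or not unused:
                continue  # track already ended, or nothing left to match
            best = max(unused, key=lambda b: iou(last_box, b))
            if iou(last_box, best) >= min_iou:
                track.append((t, best))
                unused.remove(best)
        tracks.extend([[(t, b)] for b in unused])
    return tracks
```

Each returned track lists the frame index and box of one pedestrian, which can then be used to crop that pedestrian's detection frame images from the consecutive frames.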

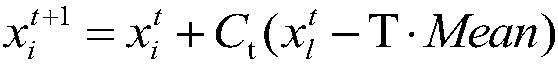

[0069] Step 3: Perform grayscale processing on each pedestrian detection frame image, synthesize the motion energy map of the grayscale image corresponding to the pedestrian detection frame imag...
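
As a rough illustration of the grayscale and motion energy step, the sketch below converts a sequence of same-size RGB detection frame crops to grayscale and averages them pixel by pixel into a single map. The excerpt is cut off before the synthesis formula, so the pixel-wise temporal mean used here, the ITU-R BT.601 luma weights, and the names `to_gray` and `motion_energy_map` are all assumptions rather than the patented procedure.

```python
from typing import List, Tuple

Pixel = Tuple[int, int, int]     # (R, G, B), each in 0..255
RgbImage = List[List[Pixel]]     # rows of pixels
GrayImage = List[List[float]]    # rows of luminance values


def to_gray(image: RgbImage) -> GrayImage:
    """Convert an RGB image to grayscale using BT.601 luma weights."""
    return [[0.299 * r + 0.587 * g + 0.114 * b for (r, g, b) in row]
            for row in image]


def motion_energy_map(crops: List[RgbImage]) -> GrayImage:
    """Average the grayscale versions of same-size crops pixel by pixel.

    Assumption: the energy map is the temporal mean of the grayscale
    frames; the available text does not confirm this formula.
    """
    if not crops:
        raise ValueError("need at least one crop")
    grays = [to_gray(c) for c in crops]
    height, width = len(grays[0]), len(grays[0][0])
    for g in grays:
        if len(g) != height or any(len(row) != width for row in g):
            raise ValueError("all crops must have the same size")
    n = len(grays)
    return [[sum(g[y][x] for g in grays) / n for x in range(width)]
            for y in range(height)]
```

The crops of one pedestrian would need to be resized to a common size before being passed to `motion_energy_map`; how the source normalizes them is not shown in this excerpt.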
