Multi-specification text collaborative positioning and extracting method

A collaborative positioning and extraction technology applied in character and pattern recognition, instruments, computer components, and related fields. It addresses the difficulty of classifying and collecting recognition results, which otherwise cannot directly meet the application requirements of text recognition and digital collection. It avoids interference from text and noise information, overcomes missed detections and false detections, and improves accuracy and precision.

Examples

Embodiment 1

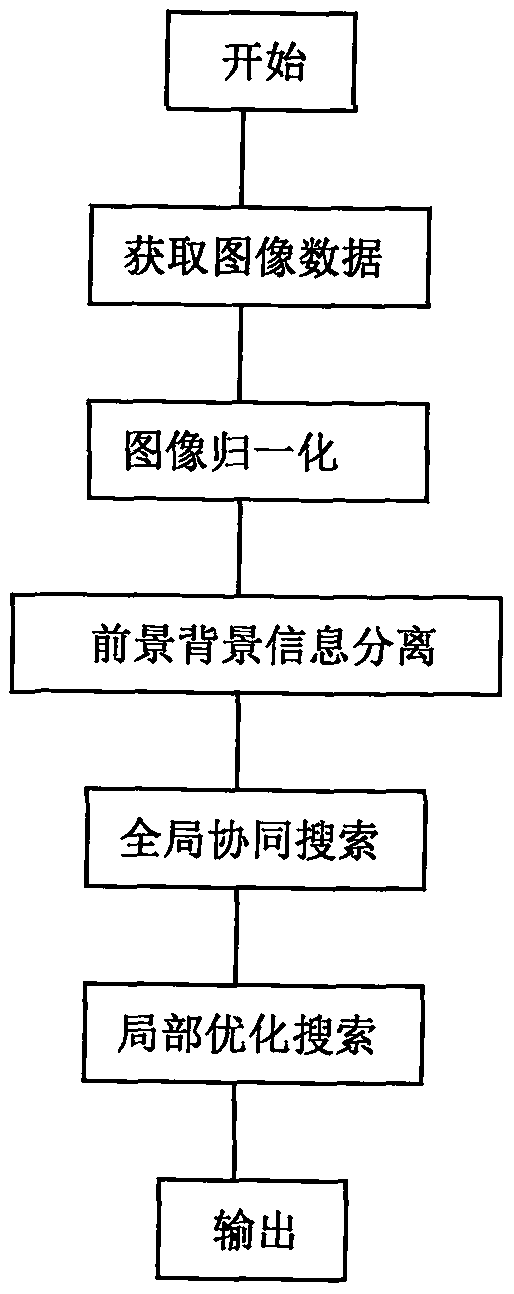

[0060] For example, a scanned image of a hospital outpatient and emergency bill is processed on a general-purpose computer using the method of the present invention. Image normalization in step 120 corrects the skew angle of the bill and makes its brightness and orientation consistent. Foreground/background separation in step 130 then yields the foreground information on the bill, such as the text and images for the hospital name, outpatient number, consultation fee, and seal. Next, the global collaborative search of step 140 and the local optimization of step 150 locate the information to be extracted at its corresponding position on the bill, and step 160 outputs the final multi-specification text positioning and extraction results.
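The patent text does not say how steps 120 and 130 are implemented. As a minimal sketch, assuming a grayscale image stored as nested lists of 0..255 values and dark ink on light paper, brightness normalization can be approximated by a linear contrast stretch and foreground/background separation by Otsu's global threshold. Both techniques are swapped in for illustration, and the function names are hypothetical; skew correction is omitted.

```python
from typing import List

Image = List[List[int]]  # grayscale pixels, values 0..255 (assumed representation)


def normalize_brightness(img: Image) -> Image:
    """Linearly stretch intensities to 0..255 (assumed stand-in for step 120's brightness step)."""
    flat = [p for row in img for p in row]
    lo, hi = min(flat), max(flat)
    if hi == lo:  # flat image: nothing to stretch
        return [row[:] for row in img]
    scale = 255 / (hi - lo)
    return [[round((p - lo) * scale) for p in row] for row in img]


def otsu_threshold(img: Image) -> int:
    """Return the threshold t that maximizes between-class variance (Otsu's method)."""
    hist = [0] * 256
    for row in img:
        for p in row:
            hist[p] += 1
    total = sum(hist)
    sum_all = sum(i * h for i, h in enumerate(hist))
    w_b = 0      # pixel count of the class <= t
    sum_b = 0    # intensity sum of the class <= t
    best_t, best_var = 0, -1.0
    for t in range(256):
        w_b += hist[t]
        if w_b == 0:
            continue
        w_f = total - w_b
        if w_f == 0:
            break
        sum_b += t * hist[t]
        m_b = sum_b / w_b
        m_f = (sum_all - sum_b) / w_f
        var = w_b * w_f * (m_b - m_f) ** 2
        if var > best_var:
            best_var, best_t = var, t
    return best_t


def separate_foreground(img: Image) -> List[List[bool]]:
    """Mark pixels at or below the Otsu threshold (dark ink: text, seals) as foreground."""
    t = otsu_threshold(img)
    return [[p <= t for p in row] for row in img]
```

For example, `separate_foreground(normalize_brightness([[10, 12, 200], [198, 11, 205]]))` marks the three dark pixels as foreground. A real bill image would more likely need adaptive (local) thresholding to cope with uneven lighting and colored seals.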

Embodiment 2

[0062] A scanned image of a hospital inpatient (admission) bill is processed on a general-purpose computer using the method of the present invention. Image normalization in step 120 yields an image with consistent size, brightness, and orientation. Foreground/background separation in step 130 then recovers the key information on the inpatient bill, such as the hospital name, gender, expense details, and date. The global collaborative search of step 140 and the local optimization of step 150 then determine the position of this key information on the bill, and step 160 outputs the final multi-specification text positioning and extraction results, such as the title, name, gender, and diagnosis fee details.
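The source does not define the search criterion of step 140 or the optimization of step 150. The following is a minimal sketch only. It assumes a hypothetical bill template of expected field centers (for example title, name, and gender) and a list of detected text-box centers from step 130. The "global" stage is a grid search for the translation that aligns the most template fields with detected boxes. The "local" stage snaps each shifted field to its nearest box within a radius. All names and parameters here are illustrative assumptions, not the patented method.

```python
from math import hypot
from typing import Dict, List, Optional, Tuple

Point = Tuple[float, float]


def score(offset: Point, template: Dict[str, Point], boxes: List[Point], tol: float) -> int:
    """Count template fields that land within `tol` of some detected box after shifting by `offset`."""
    dx, dy = offset
    return sum(
        1
        for x, y in template.values()
        if any(hypot(bx - (x + dx), by - (y + dy)) <= tol for bx, by in boxes)
    )


def global_search(template: Dict[str, Point], boxes: List[Point],
                  search_range: int, step: int, tol: float) -> Point:
    """Exhaustive grid search over translations; the first offset matching the most fields wins."""
    best, best_score = (0.0, 0.0), -1
    for dx in range(-search_range, search_range + 1, step):
        for dy in range(-search_range, search_range + 1, step):
            s = score((dx, dy), template, boxes, tol)
            if s > best_score:
                best, best_score = (float(dx), float(dy)), s
    return best


def local_refine(template: Dict[str, Point], boxes: List[Point],
                 offset: Point, radius: float) -> Dict[str, Optional[Point]]:
    """Snap each shifted field to its nearest detected box within `radius` (None if there is none)."""
    dx, dy = offset
    result: Dict[str, Optional[Point]] = {}
    for name, (x, y) in template.items():
        px, py = x + dx, y + dy
        near = [b for b in boxes if hypot(b[0] - px, b[1] - py) <= radius]
        result[name] = min(near, key=lambda b: hypot(b[0] - px, b[1] - py)) if near else None
    return result
```

Splitting the work this way keeps the coarse alignment robust to a few missing or spurious boxes, while the local stage absorbs small per-field deviations between bills of the same specification. A field with no nearby box is reported as `None` rather than guessed.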
