Method and system for collision and occlusion detection between virtual and real objects

A technology for collision and occlusion detection between virtual and real objects, applied in image data processing, instrumentation, and related fields. It addresses problems such as loosely fitting bounding boxes, high hardware requirements, and the heavy workload of point cloud data processing, and achieves short calculation times.

Examples

Embodiment 1

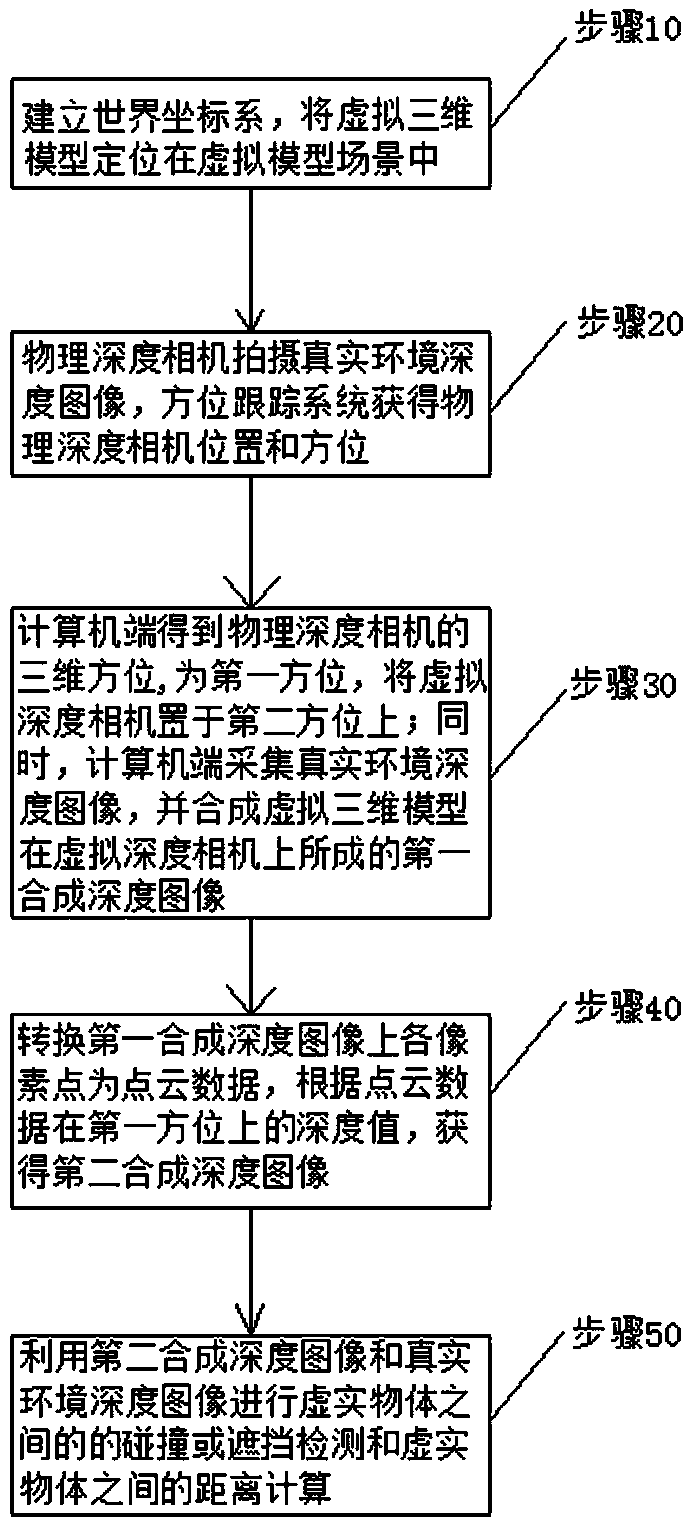

[0049] Referring to Figure 1 and Figure 2, a collision and occlusion detection method between virtual and real objects comprises the following steps:

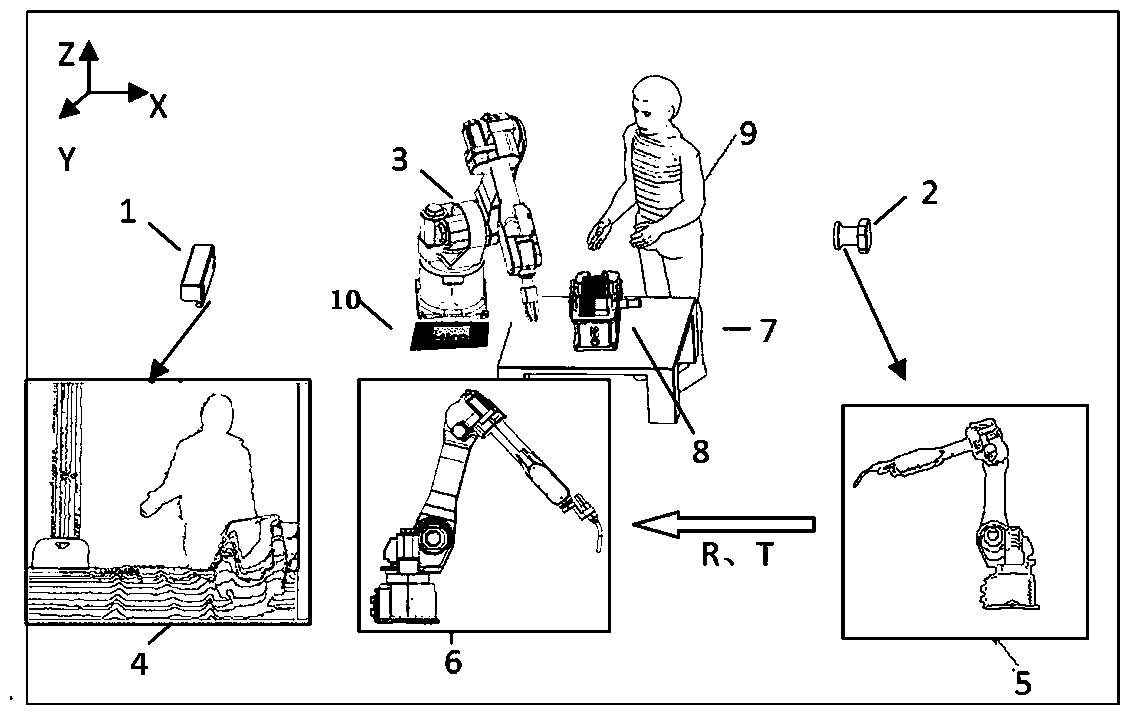

[0050] Step 10. Unify the virtual model scene coordinate system on the computer side with the real environment coordinate system so that virtual and real objects lie in the same world coordinate system, and then position the virtual 3D model 3 of the object to be detected (a virtual robot in the figure) in the virtual model scene on the computer side. This positioning can use methods such as augmented reality registration; for example, augmented reality registration card 10 can serve as the world coordinate system to achieve accurate positioning;
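Step 10 amounts to chaining rigid transforms through one shared world frame. The sketch below assumes, as in the example, that registration card 10 defines the world frame, that the camera's view of the card yields the world-to-camera transform `T_cam_world`, and that placing the virtual model is a model-to-world transform `T_world_model`. All function names and numeric poses are hypothetical, and rotations are limited to the z-axis only to keep the sketch short.

```python
import math

# 4x4 homogeneous rigid transforms stored as row-major nested lists.

def make_transform(yaw_deg, tx, ty, tz):
    """Rotation about the z-axis by yaw_deg, plus translation (tx, ty, tz)."""
    a = math.radians(yaw_deg)
    c, s = math.cos(a), math.sin(a)
    return [[c, -s, 0.0, tx],
            [s, c, 0.0, ty],
            [0.0, 0.0, 1.0, tz],
            [0.0, 0.0, 0.0, 1.0]]

def matmul(A, B):
    """Compose two 4x4 transforms (A applied after B)."""
    return [[sum(A[i][k] * B[k][j] for k in range(4)) for j in range(4)]
            for i in range(4)]

def invert_rigid(T):
    """Inverse of a rigid transform [R | t] is [R^T | -R^T t]."""
    Rt = [[T[j][i] for j in range(3)] for i in range(3)]
    t = [T[i][3] for i in range(3)]
    nt = [-sum(Rt[i][k] * t[k] for k in range(3)) for i in range(3)]
    return [Rt[0] + [nt[0]], Rt[1] + [nt[1]], Rt[2] + [nt[2]],
            [0.0, 0.0, 0.0, 1.0]]

def apply(T, p):
    """Transform a 3D point p by T."""
    x, y, z = p
    return tuple(T[i][0] * x + T[i][1] * y + T[i][2] * z + T[i][3]
                 for i in range(3))

# Hypothetical pose of the registration card (= world frame) seen by the camera.
T_cam_world = make_transform(30.0, 0.1, -0.2, 1.5)
T_world_cam = invert_rigid(T_cam_world)          # camera pose in the world frame
# Hypothetical placement of the virtual robot model in the world frame.
T_world_model = make_transform(90.0, 0.5, 0.0, 0.0)

vertex_model = (0.1, 0.0, 0.2)                   # a model vertex in model coordinates
p_world = apply(T_world_model, vertex_model)     # same vertex in world coordinates
p_cam = apply(T_cam_world, p_world)              # same vertex in camera coordinates
p_back = apply(T_world_cam, p_cam)               # round trip back to world
```

Once both the virtual model and the camera are expressed relative to the card, any virtual vertex and any real measurement can be compared in the same coordinates, which is the precondition the later detection steps rely on.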

[0051] Step 20. In the real environment, the physical depth camera 1 captures the real environment depth image 4; at the same time, the orientation tracking system is used to obtain the position and...
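With a depth image and the tracked camera pose in the shared world frame, each valid depth pixel can be lifted to a 3D world point. This minimal sketch assumes a pinhole camera with hypothetical intrinsics `fx, fy, cx, cy`, depth in metres with 0 marking missing readings, a camera frame with x right, y down, z forward, and a tracked pose given as a position plus a unit quaternion `(w, x, y, z)` for the camera-to-world rotation. It illustrates only what the captured data make possible, not the remainder of Step 20.

```python
import math

def quat_to_matrix(qw, qx, qy, qz):
    """Quaternion (w, x, y, z) -> 3x3 rotation matrix (normalised first)."""
    n = math.sqrt(qw * qw + qx * qx + qy * qy + qz * qz)
    w, x, y, z = qw / n, qx / n, qy / n, qz / n
    return [[1 - 2 * (y * y + z * z), 2 * (x * y - w * z), 2 * (x * z + w * y)],
            [2 * (x * y + w * z), 1 - 2 * (x * x + z * z), 2 * (y * z - w * x)],
            [2 * (x * z - w * y), 2 * (y * z + w * x), 1 - 2 * (x * x + y * y)]]

def depth_to_world(depth, fx, fy, cx, cy, position, orientation):
    """Back-project every valid pixel of a depth image (list of rows, metres)
    into world coordinates using the pinhole model and the tracked pose."""
    R = quat_to_matrix(*orientation)
    px, py, pz = position
    points = []
    for v, row in enumerate(depth):
        for u, d in enumerate(row):
            if d <= 0.0:                 # no depth measurement at this pixel
                continue
            # Point in the camera frame (x right, y down, z forward).
            xc = (u - cx) * d / fx
            yc = (v - cy) * d / fy
            zc = d
            # Rotate into the world frame, then add the camera position.
            xw = R[0][0] * xc + R[0][1] * yc + R[0][2] * zc + px
            yw = R[1][0] * xc + R[1][1] * yc + R[1][2] * zc + py
            zw = R[2][0] * xc + R[2][1] * yc + R[2][2] * zc + pz
            points.append((xw, yw, zw))
    return points

# Tiny 2x2 depth image; the 0.0 entry is a missing reading and is skipped.
depth = [[1.0, 0.0],
         [2.0, 1.0]]
pts = depth_to_world(depth, fx=100.0, fy=100.0, cx=0.0, cy=0.0,
                     position=(0.0, 0.0, 0.0), orientation=(1.0, 0.0, 0.0, 0.0))
```

With an identity pose the result is simply the camera-frame point cloud; a non-identity pose rotates and shifts every point by the same rigid transform.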

Embodiment 2

[0064] Referring to Figure 2, which shows a real environment 7 including a reducer 8 to be assembled and staff 9, a collision and occlusion detection system between virtual and real objects includes a physical depth camera 1, an orientation tracking system, and a computer system. The physical depth camera 1 captures depth images of the real environment, and the orientation tracking system acquires the position and orientation of the physical depth camera 1 in the real environment coordinate system. Both the physical depth camera 1 and the orientation tracking system are connected to the computer system and transmit the captured depth images and the tracked position and orientation to it. Referring to Figure 7, when running, the computer system implements the following steps:
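Because the depth camera and the orientation tracking system deliver their data to the computer system separately, each depth frame has to be matched with the pose sampled at (nearly) the same moment. A minimal sketch, assuming both streams carry timestamps on a common clock and the pose list is sorted by time; the class names, `nearest_pose`, `pair_frames`, and the 20 ms tolerance are hypothetical choices, not taken from the source.

```python
import bisect
from dataclasses import dataclass
from typing import List, Optional, Tuple

@dataclass
class PoseSample:
    t: float                                        # timestamp in seconds
    position: Tuple[float, float, float]
    orientation: Tuple[float, float, float, float]  # quaternion (w, x, y, z)

@dataclass
class DepthFrame:
    t: float                                        # timestamp in seconds
    depth: List[List[float]]                        # depth values in metres

def nearest_pose(poses: List[PoseSample], t: float,
                 max_gap: float = 0.02) -> Optional[PoseSample]:
    """Return the pose closest in time to t (poses sorted by t), or None
    if even the closest one is more than max_gap seconds away."""
    if not poses:
        return None
    times = [p.t for p in poses]
    i = bisect.bisect_left(times, t)
    # Only the neighbours on either side of the insertion point can be nearest.
    candidates = [poses[j] for j in (i - 1, i) if 0 <= j < len(poses)]
    best = min(candidates, key=lambda p: abs(p.t - t))
    return best if abs(best.t - t) <= max_gap else None

def pair_frames(frames: List[DepthFrame], poses: List[PoseSample],
                max_gap: float = 0.02) -> List[Tuple[DepthFrame, PoseSample]]:
    """Attach a tracked pose to each depth frame; frames without a
    sufficiently close pose are dropped."""
    paired = []
    for f in frames:
        p = nearest_pose(poses, f.t, max_gap)
        if p is not None:
            paired.append((f, p))
    return paired

poses = [PoseSample(t, (0.0, 0.0, 0.0), (1.0, 0.0, 0.0, 0.0))
         for t in (0.00, 0.01, 0.02, 0.03, 0.04)]
frames = [DepthFrame(0.033, [[1.0]]), DepthFrame(0.5, [[1.0]])]
paired = pair_frames(frames, poses)   # the 0.5 s frame has no pose within 20 ms
```

Dropping unmatched frames, rather than extrapolating a pose, keeps stale orientation data from being applied to a depth image; interpolating between the two neighbouring samples would be a reasonable alternative when the tracker rate is low.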

[0065] Step 1. Unify the computer-side virtual model scene coordinate system and the real environment coordinate system, so that both virtual and real objects are in t...
