Emotion recognition method, device and apparatus, and storage medium

An emotion recognition and emotion feature technology, applied in character and pattern recognition, special data processing applications, speech analysis, etc. It can solve the problems of poor controllability of results, high cost and poor versatility, thereby simplifying the sample training process and improving accuracy.

Examples

Embodiment 1

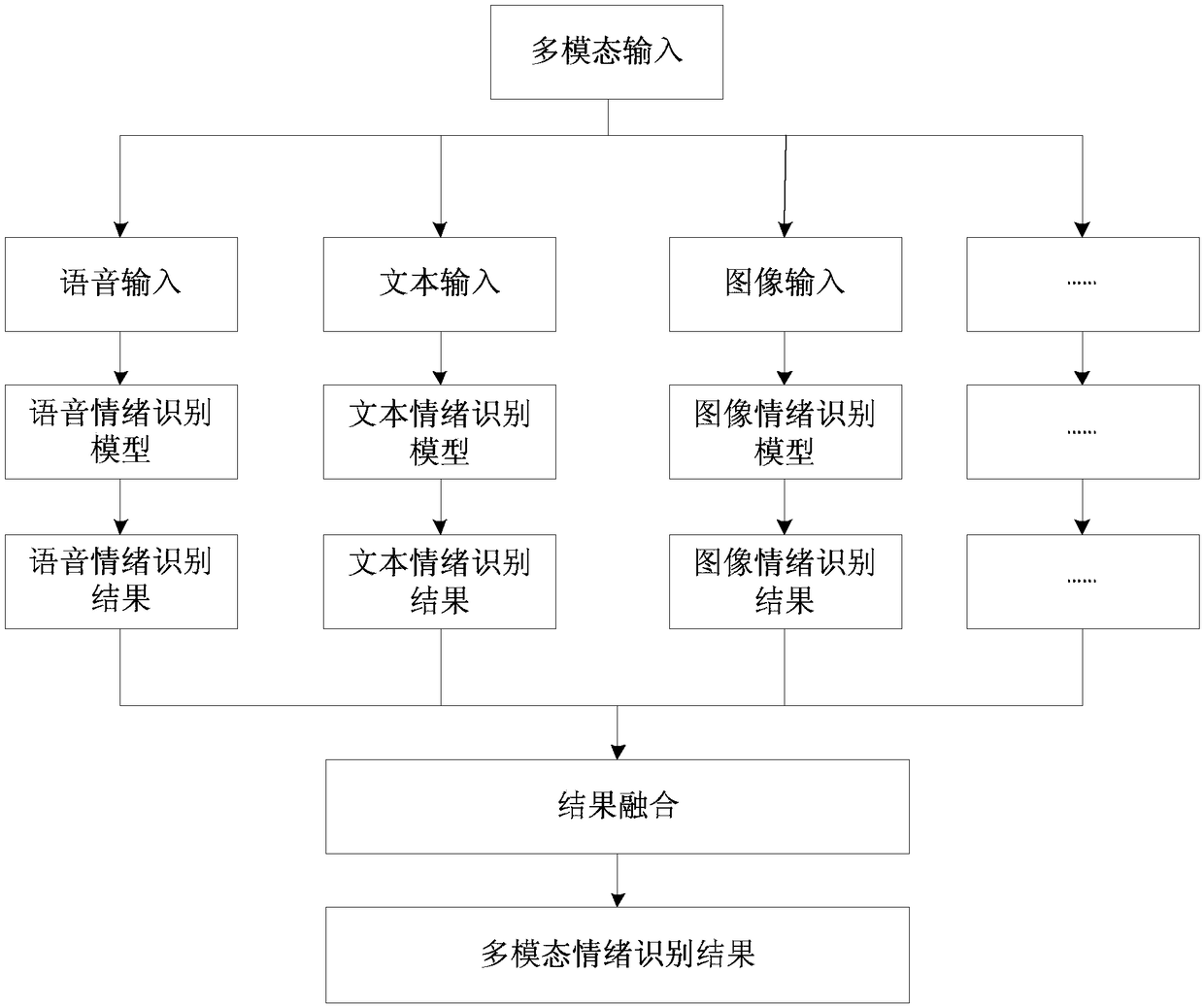

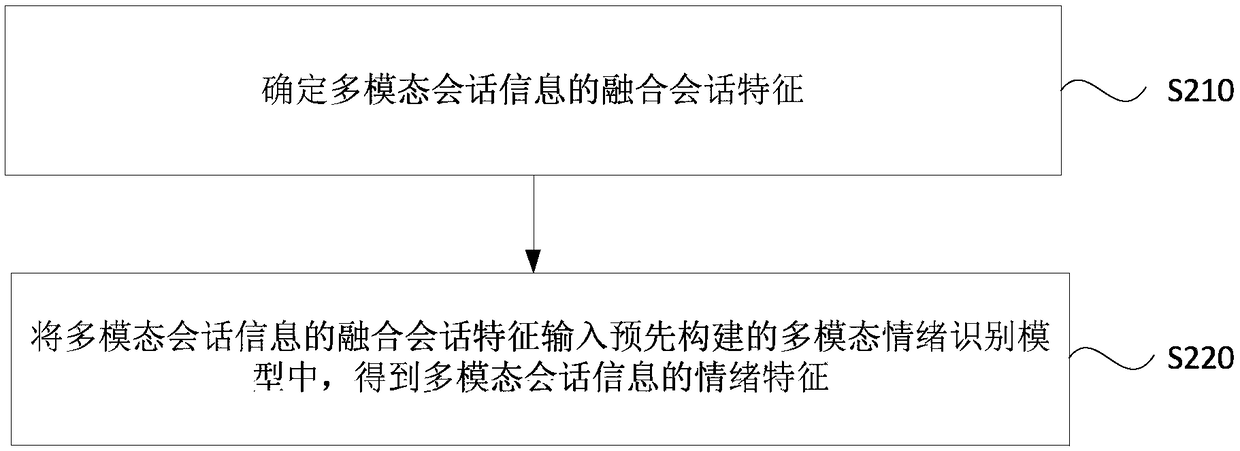

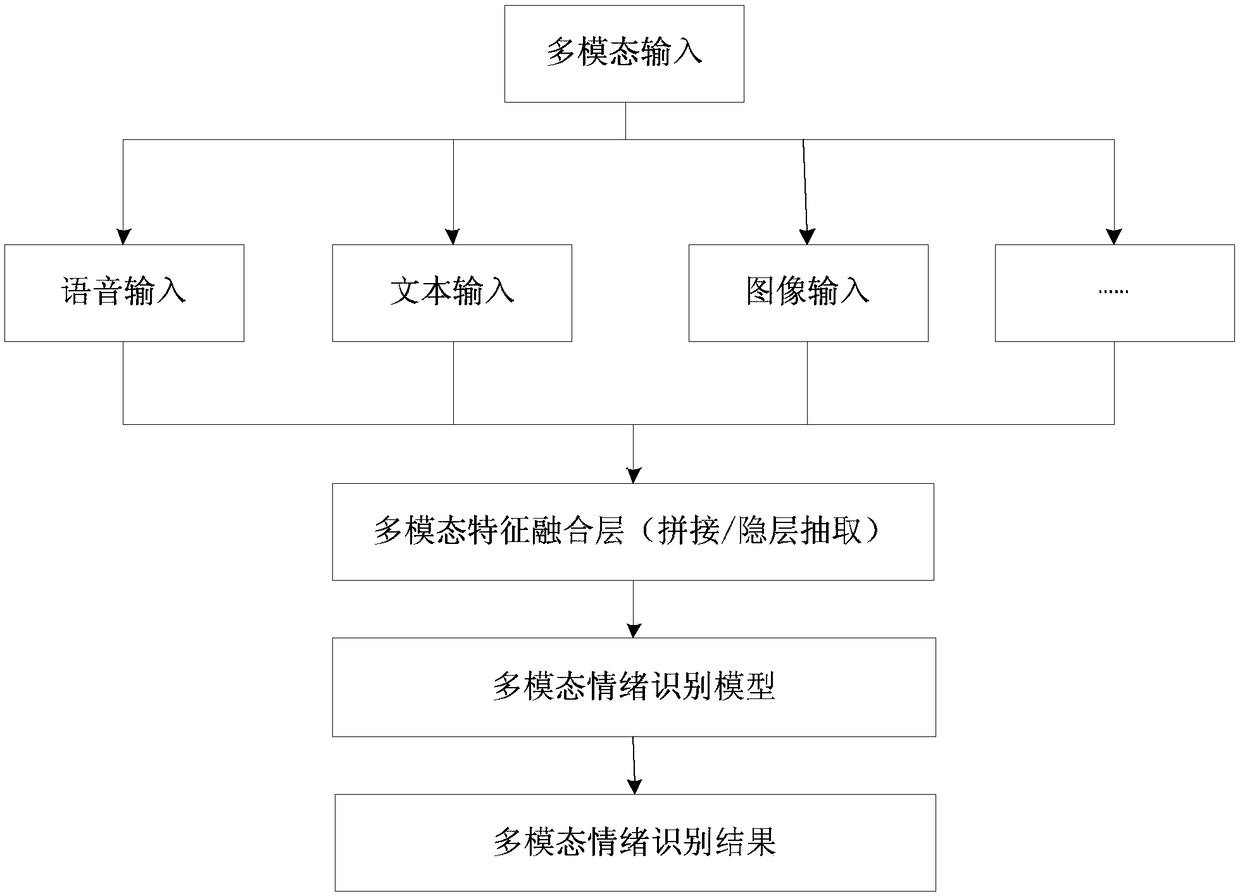

[0025] Figure 2A is a flow chart of an emotion recognition method provided in Embodiment 1 of the present invention, and Figure 2B is a schematic diagram of a learning model based on multimodal feature fusion applicable to the embodiments of the present invention. This embodiment is applicable to accurately identifying the user's emotion during multimodal interaction. The method can be executed by the emotion recognition device provided by the embodiments of the present invention; the device can be implemented in software and/or hardware and can be integrated into a computing device. Referring to Figures 2A and 2B, the method specifically includes:

[0026] S210. Determine the fusion session feature of the multi-modal session information.

[0027] Here, modality is a term used in interaction, and multimodality refers to the phenomenon of interacting by comprehensively using text, images, video, voice, gestures and other means and symbolic carriers. Corre...
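The text describing how S210 fuses the modalities is truncated, so the actual fusion rule is not given. As a minimal sketch, assuming each modality has already been encoded as a numeric vector and that "fusion" means L2-normalizing each vector and concatenating the results (a common baseline, not necessarily the method of this patent), S210 could look like the following. The names `l2_normalize` and `fuse_session_features` are hypothetical.

```python
import math
from typing import Dict, List


def l2_normalize(vec: List[float]) -> List[float]:
    """Scale a vector to unit length so no modality dominates by magnitude."""
    norm = math.sqrt(sum(x * x for x in vec))
    if norm == 0.0:
        return list(vec)  # leave an all-zero vector unchanged
    return [x / norm for x in vec]


def fuse_session_features(modal_features: Dict[str, List[float]]) -> List[float]:
    """Hypothetical fusion: normalize each modality's vector, then concatenate
    them in a fixed (sorted) modality order to form one fused session feature."""
    if len(modal_features) < 2:
        raise ValueError("multimodal fusion needs features from at least two modalities")
    fused: List[float] = []
    for modality in sorted(modal_features):
        fused.extend(l2_normalize(modal_features[modality]))
    return fused


features = {
    "voice": [3.0, 4.0],
    "text": [0.0, 2.0, 0.0],
}
print(fuse_session_features(features))  # [0.0, 1.0, 0.0, 0.6, 0.8]
```

Sorting the modality names keeps the position of each modality inside the fused vector stable across sessions, which any downstream model would rely on.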

Embodiment 2

[0038] Figure 3 is a flow chart of an emotion recognition method provided by Embodiment 2 of the present invention. On the basis of Embodiment 1 above, this embodiment further refines the step of determining the fused conversation features of the multimodal conversation information. Referring to Figure 3, the method specifically includes:

[0039] S310. Determine the respective vector representations of at least two kinds of modal conversation information among voice conversation information, text conversation information and image conversation information.

[0040] Exemplarily, the multimodal session information may include voice session information, text session information, and image session information. The vector representation of session information refers to the representation of a piece of session information in a vector space, which can be obtained through modeling.
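To make the idea of a vector representation concrete, the sketch below encodes text conversation information as a bag-of-words count vector over a fixed vocabulary. This is an illustrative assumption only: the patent says the representation "can be obtained through modeling" without naming a model, and both `text_to_vector` and the vocabulary are hypothetical.

```python
from collections import Counter
from typing import List


def text_to_vector(utterance: str, vocabulary: List[str]) -> List[float]:
    """Map a text utterance to a bag-of-words count vector over a fixed vocabulary.

    A stand-in for the 'modeling' step; a real system would more likely use a
    learned embedding model.
    """
    counts = Counter(utterance.lower().split())  # naive whitespace tokenization
    return [float(counts[word]) for word in vocabulary]


vocab = ["happy", "sad", "very", "angry"]
print(text_to_vector("I am very very happy", vocab))  # [1.0, 0.0, 2.0, 0.0]
```

Each position in the output corresponds to one vocabulary word, so every utterance lands in the same fixed-dimensional vector space regardless of its length.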

[0041] Specifically, by extracting the characteristic parameters that can represent emotional changes in the voice conversation inform...
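The paragraph above is truncated before it names the characteristic parameters. Purely as an assumption, the sketch below computes two frame-level parameters often used in speech analysis, short-time energy and zero-crossing rate, from raw mono samples. The function names and frame settings are hypothetical, and a practical system would usually add further cues such as pitch.

```python
from typing import List, Tuple


def frame_signal(samples: List[float], frame_len: int, hop: int) -> List[List[float]]:
    """Split a mono signal into fixed-length frames; a trailing partial frame is dropped."""
    if frame_len < 2 or hop <= 0:
        raise ValueError("frame_len must be >= 2 and hop must be positive")
    return [samples[i:i + frame_len]
            for i in range(0, len(samples) - frame_len + 1, hop)]


def short_time_energy(frame: List[float]) -> float:
    """Mean squared amplitude of one frame, a rough loudness cue."""
    return sum(x * x for x in frame) / len(frame)


def zero_crossing_rate(frame: List[float]) -> float:
    """Fraction of adjacent sample pairs whose sign changes (zero counts as positive)."""
    crossings = sum(1 for a, b in zip(frame, frame[1:]) if (a >= 0) != (b >= 0))
    return crossings / (len(frame) - 1)


def voice_parameters(samples: List[float], frame_len: int = 4,
                     hop: int = 4) -> List[Tuple[float, float]]:
    """Return (energy, zero-crossing rate) for every frame of the signal."""
    return [(short_time_energy(f), zero_crossing_rate(f))
            for f in frame_signal(samples, frame_len, hop)]


signal = [1.0, -1.0, 1.0, -1.0, 0.5, 0.5, 0.5, 0.5]
print(voice_parameters(signal))  # [(1.0, 1.0), (0.25, 0.0)]
```

The per-frame pairs could then be pooled (for example, averaged) into a fixed-length voice vector before fusion with the other modalities.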

Embodiment 3

[0054] Figure 4 is a structural block diagram of an emotion recognition device provided in Embodiment 3 of the present invention. The device can execute the emotion recognition method provided in any embodiment of the present invention and has the functional modules and beneficial effects corresponding to the method. As shown in Figure 4, the device may include:

[0055] A fusion feature determination module 410, configured to determine the fusion session feature of the multimodal session information;

[0056] The emotional feature determination module 420 is configured to input the fusion session features of the multi-modal conversation information into the pre-built multi-modal emotion recognition model to obtain the emotional features of the multi-modal conversation information.
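The two-module structure of the device can be sketched as follows, assuming fusion by plain concatenation and a "pre-built" model represented by a hypothetical linear layer with softmax over emotion labels. The class names, weights and labels are illustrative inventions, not the patent's implementation.

```python
import math
from typing import Dict, List, Sequence


class FusionFeatureModule:
    """Plays the role of module 410: builds one fused session feature.

    Assumption: fusion is concatenation in sorted modality order.
    """

    def determine(self, modal_features: Dict[str, List[float]]) -> List[float]:
        fused: List[float] = []
        for modality in sorted(modal_features):
            fused.extend(modal_features[modality])
        return fused


class EmotionFeatureModule:
    """Plays the role of module 420: feeds the fused feature into a model.

    Assumption: the pre-built model is a linear layer followed by softmax.
    """

    def __init__(self, weights: Dict[str, Sequence[float]]):
        self.weights = weights  # one weight vector per (hypothetical) emotion label

    def determine(self, fused: List[float]) -> Dict[str, float]:
        scores = {}
        for label, ws in self.weights.items():
            if len(ws) != len(fused):
                raise ValueError(f"weight length for {label!r} does not match feature length")
            scores[label] = sum(w * x for w, x in zip(ws, fused))
        top = max(scores.values())  # subtract the max for numerical stability
        exps = {label: math.exp(s - top) for label, s in scores.items()}
        total = sum(exps.values())
        return {label: e / total for label, e in exps.items()}


class EmotionRecognitionDevice:
    """Chains module 410 and module 420, mirroring the device of Figure 4."""

    def __init__(self, fusion: FusionFeatureModule, emotion: EmotionFeatureModule):
        self.fusion = fusion
        self.emotion = emotion

    def recognize(self, modal_features: Dict[str, List[float]]) -> Dict[str, float]:
        return self.emotion.determine(self.fusion.determine(modal_features))


device = EmotionRecognitionDevice(
    FusionFeatureModule(),
    EmotionFeatureModule({"positive": [1.0, 0.0, 1.0], "negative": [0.0, 1.0, 0.0]}),
)
print(device.recognize({"text": [1.0], "voice": [0.0, 1.0]}))
```

Keeping the two modules as separate objects means the fusion strategy or the recognition model can be swapped independently, which matches the modular split shown in Figure 4.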

[0057] The technical solution provided by the embodiment of the present invention obtains the fused conversation features by fusing the conversation features of each modality i...
